Great work by Connor Wells & Shubham Sharma @QueensUHealth asking two important #GlobalHealth questions

1. Is there a #publicationbias against papers from #LMICs?

2. Do oncology RCTs match the global disease burden?

Confirms something we always knew

What we did was this...

We identified 3 problems and 2 facts

We looked at all phase 3 studies in oncology from 2014 to 2017; classified origin of these RCTs based on #WorldBank economic classification of countries. We compared RCT designs and results from HICs and LMICs. The findings were striking…

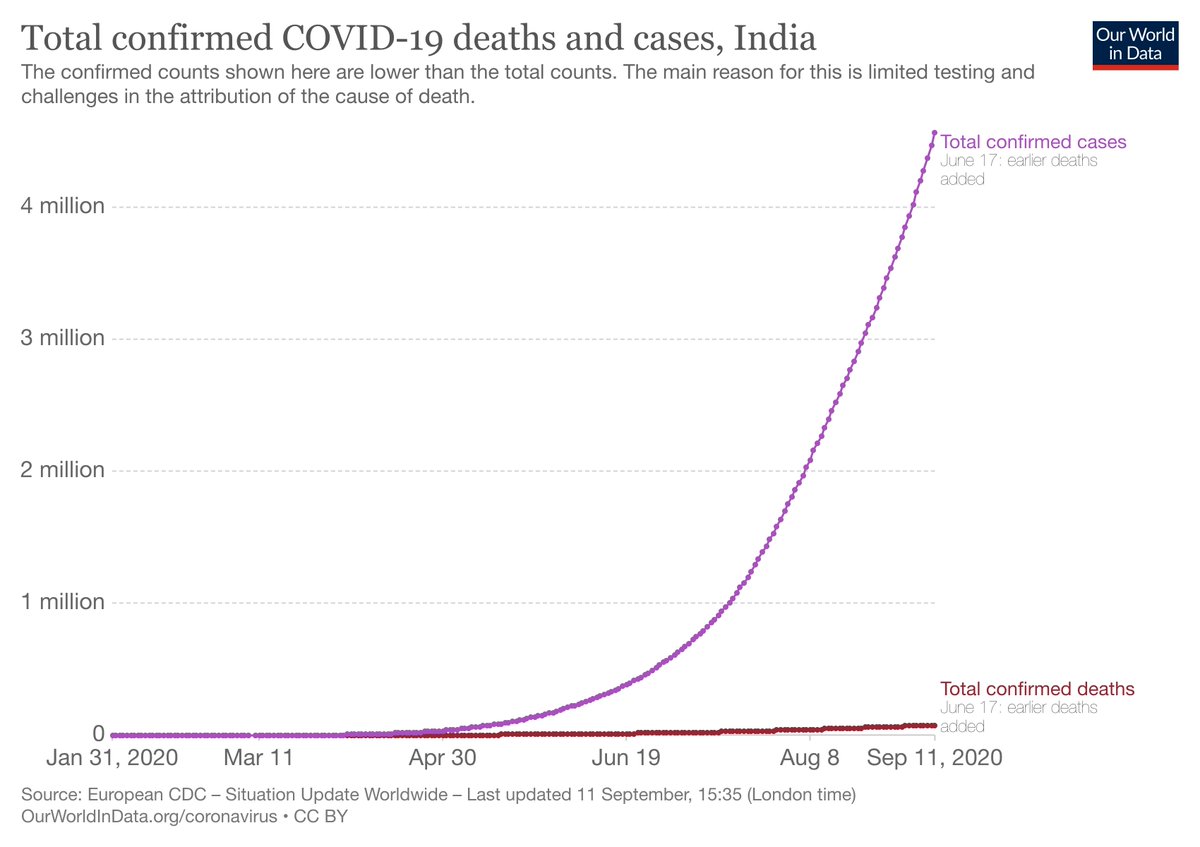

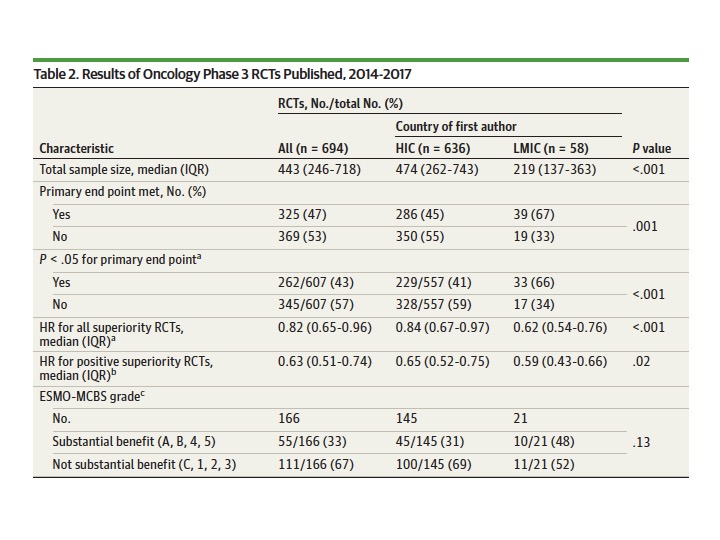

Of 694 RCTs, 636 (92%) were led by HICs; 58 (8%) by LMICs. This is the first problem – huge imbalance in where research is done. Cancer incidence is strikingly different in HICs & LMICs, with considerable burden in LMICs. How can we accept such a skewed distribution of research?

601 (87%) evaluated systemic therapies & just 88 (13%) evaluated surgery & radiotherapy. This is the second problem – disproportionate emphasis on research in systemic therapies; not nearly enough on curative options like surgery and radiotherapy.

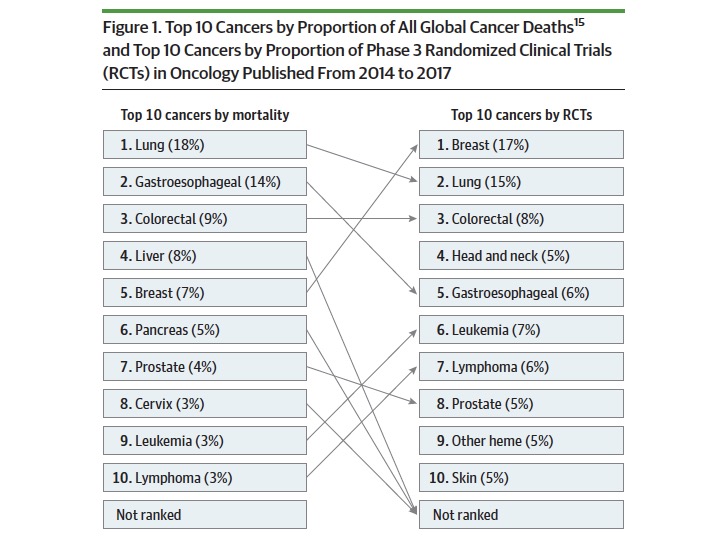

Distribution of RCTs is disproportionate to cancer burden – this is the third problem. With 7% of cancer deaths, breast cancer made up 17% of cancer research; with 14% of cancer deaths, gastroesophageal cancer made up just 6%. Similar for liver, pancreas & cervical cancers.
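One way to read the mismatch is as a ratio of research share to death share. A minimal sketch using only the two cancer sites quoted above; the variable names are illustrative, and a ratio of 1 would mean research in proportion to deaths:

```python
# (share of cancer deaths %, share of RCTs %) as quoted in the thread
shares = {
    "breast": (7, 17),
    "gastroesophageal": (14, 6),
}

# Ratio > 1: over-represented in RCTs relative to deaths; < 1: under-represented
ratios = {
    site: research / deaths
    for site, (deaths, research) in shares.items()
}

for site, ratio in ratios.items():
    print(f"{site}: {ratio:.2f}x research share per unit of death share")
```

On these figures breast cancer is over-represented by more than 2x, and gastroesophageal cancer gets less than half its proportional share.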

LMIC RCTs had smaller sample sizes than HIC RCTs (median 219 vs 474; p<.001) and were more likely to meet their primary end point (39/58, 67% vs 286/636, 45%; p=.001).
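The primary end point comparison can be rechecked from the counts alone. A rough sketch, assuming a Pearson chi-square test without continuity correction; the thread does not say which test was actually used, so this is a sanity check, not a reproduction of the authors' analysis:

```python
import math

# Trials meeting their primary end point, as quoted in the thread
lmic_pos, lmic_n = 39, 58
hic_pos, hic_n = 286, 636

lmic_rate = lmic_pos / lmic_n
hic_rate = hic_pos / hic_n

# 2x2 table: rows = LMIC / HIC, columns = met / did not meet end point
a, b = lmic_pos, lmic_n - lmic_pos
c, d = hic_pos, hic_n - hic_pos
n = a + b + c + d

# Pearson chi-square statistic for a 2x2 table (assumed test, 1 degree of freedom)
chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))

# Upper-tail probability of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2))
p_value = math.erfc(math.sqrt(chi2 / 2))

print(f"LMIC: {lmic_rate:.0%}, HIC: {hic_rate:.0%}")
print(f"chi2 = {chi2:.2f}, p = {p_value:.4f}")
```

Under that assumption the p-value lands close to the reported p=.001.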

Median effect size was larger in LMICs than HICs; HR 0.62 vs 0.84; p<.001

Fact 1: Margins of benefit were higher in LMIC research

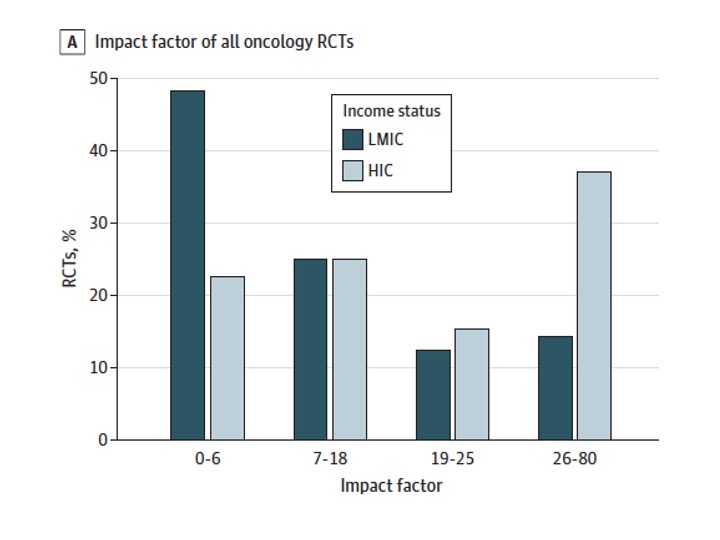

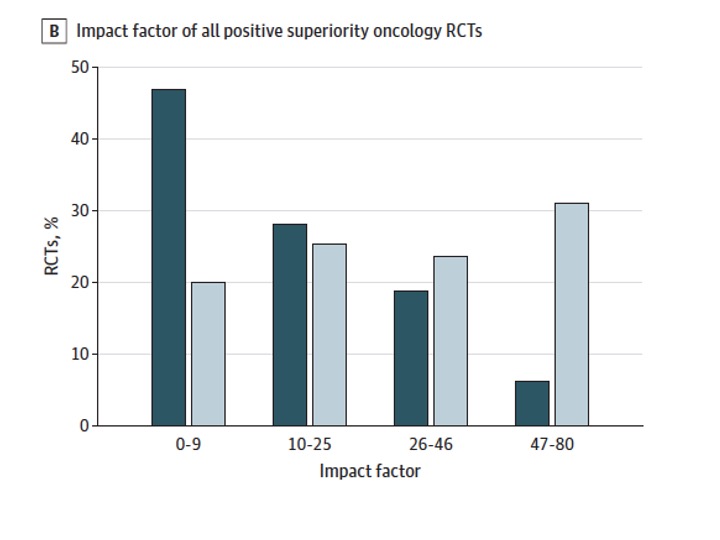

In spite of superior margins of benefit, LMIC RCTs get published in journals with lower median Impact Factor than trials from HICs (7 vs 21; p < .001)

Publication bias persisted regardless of whether a trial was positive or negative (median IF for negative trials: LMIC 5 vs HIC 18; median IF for positive trials: LMIC 9 vs HIC 25; p < .001)

Fact 2: Systematic publication bias exists

Conclusions

1. Research is done disproportionately in HICs and does not match the global burden of cancer

2. RCTs from LMICs are more likely to identify effective therapies and have a larger effect size

3. There is a funding and publication bias against RCTs led by LMICs

We need to change this. The end.
