1/ Brief THREAD to unpack @MetaAI's new Project CAIRaoke AI self-supervised learning model announced this morning.

Launch video: facebook.com/zuck/videos/67…

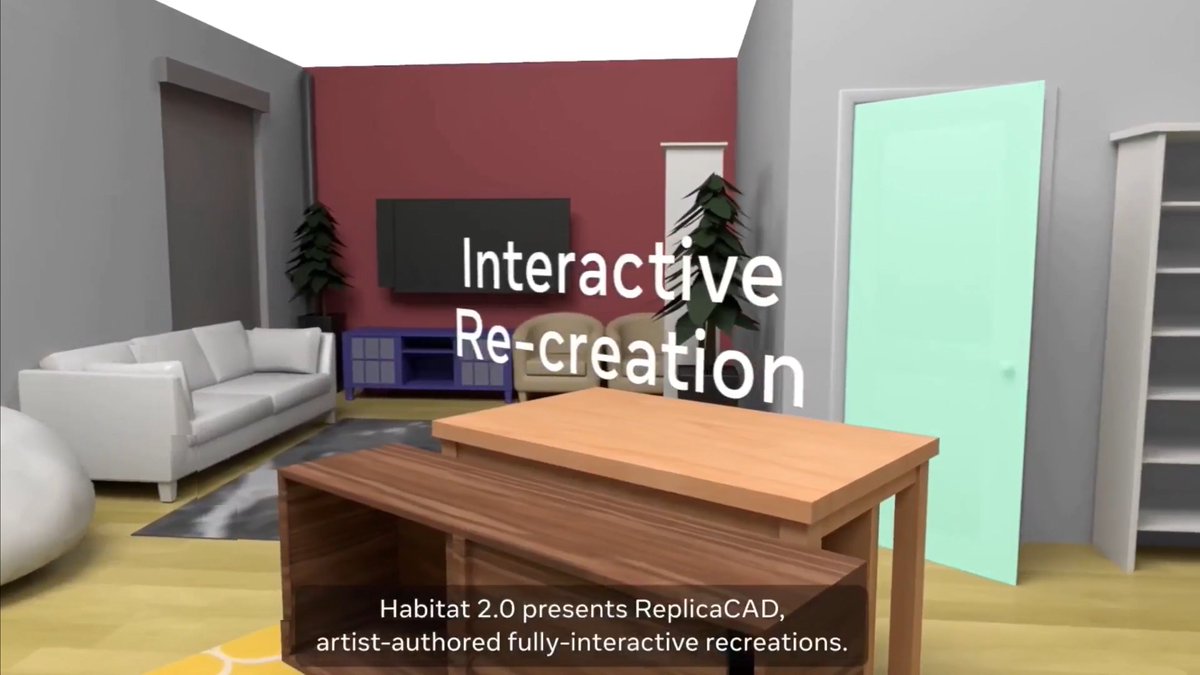

Zuckerberg showed a demo of a Builder Bot conversational VR worldbuilding tool within Horizon Worlds that's featured below.

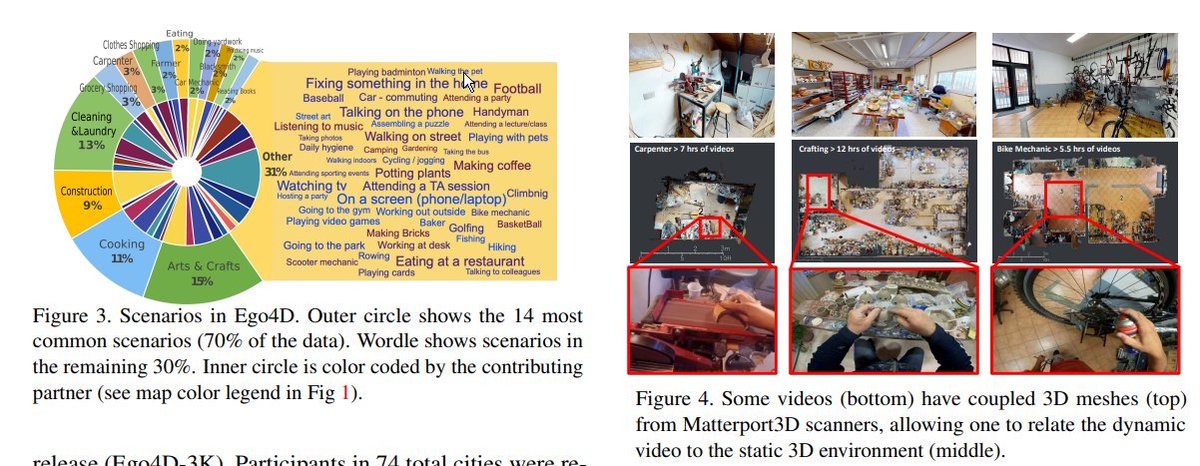

https://twitter.com/Meta/status/1496537931334533129

2/ Horizon Worlds has terrible graphics, but Self-Supervised Learning is foundational AI tech that'll allow multi-modal training beyond labeled data, making speech-to-3D object creation in immersive worlds a reality. Will this yield good world design? TBD.

https://twitter.com/MetaAI/status/1494432233267945472

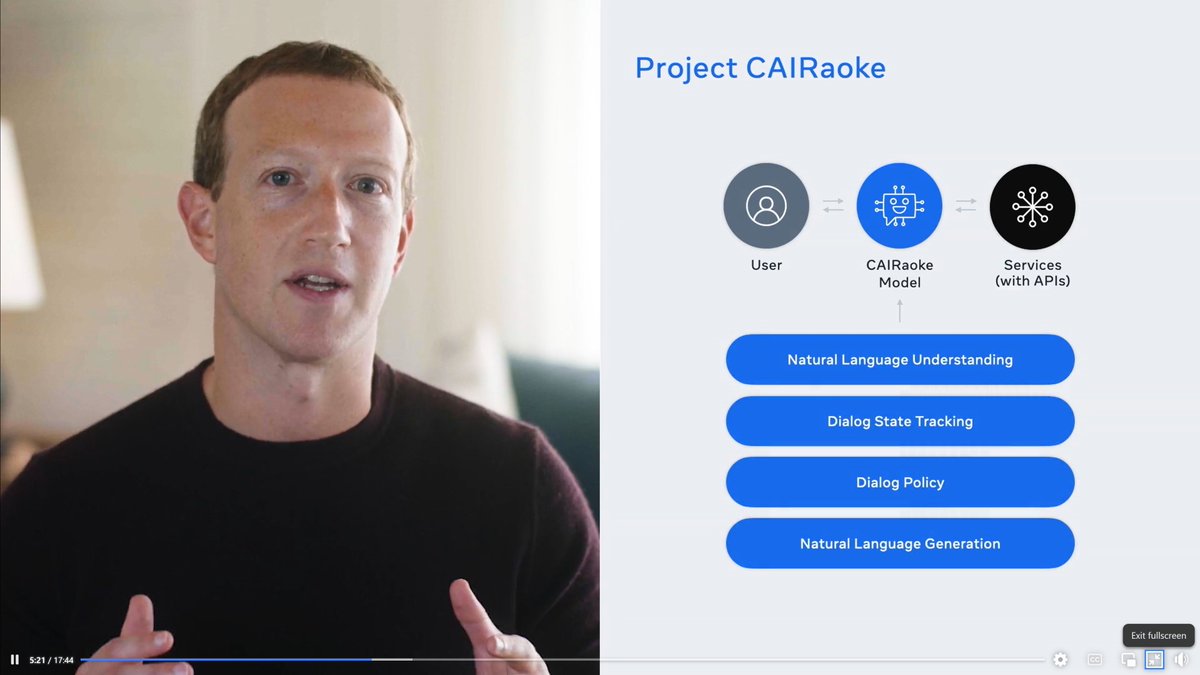

3/ Project CAIRaoke is a new conversational assistant synthesizing Natural Language Understanding, Dialog State Tracking, Dialog Policy Management, & Natural Language Generation.

They say it's "deeply contextual & personal."

Video:

https://twitter.com/MetaAI/status/1496549354483908608

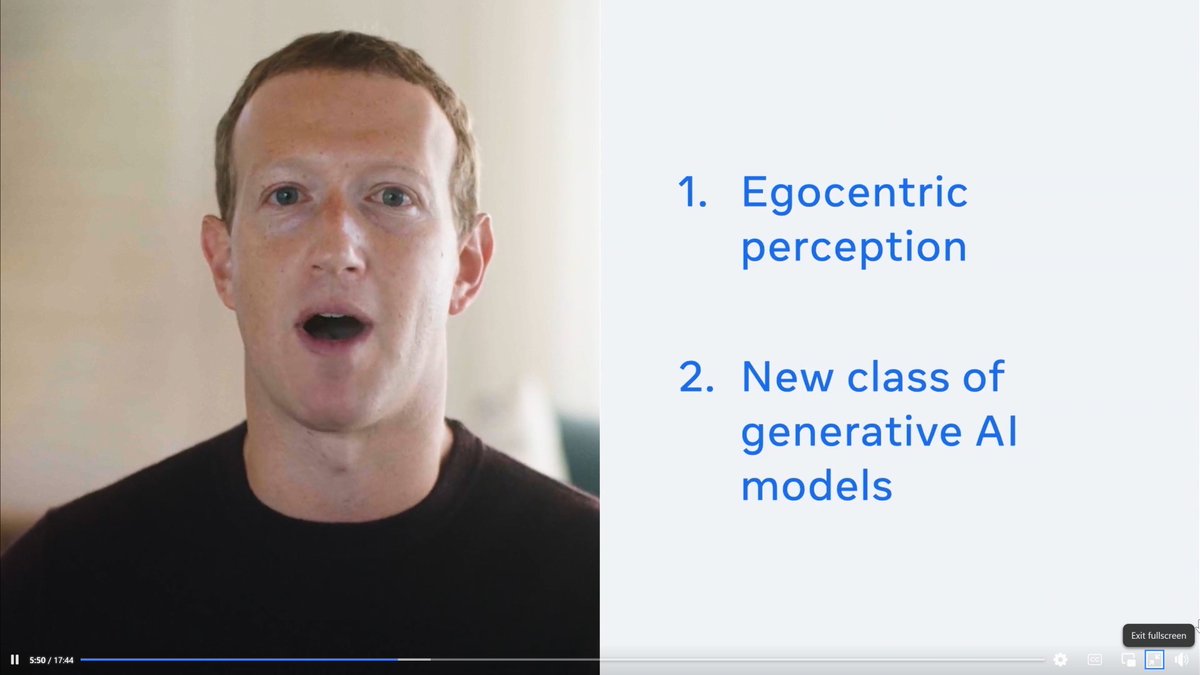

4/ This announcement is another incremental step towards @Meta's vision of "contextually-aware AI" that can only be achieved via "egocentric data capture" & "egocentric perception."

Essentially omniscient AI that can respond to your situation & context.

https://twitter.com/kentbye/status/1453897720767270912

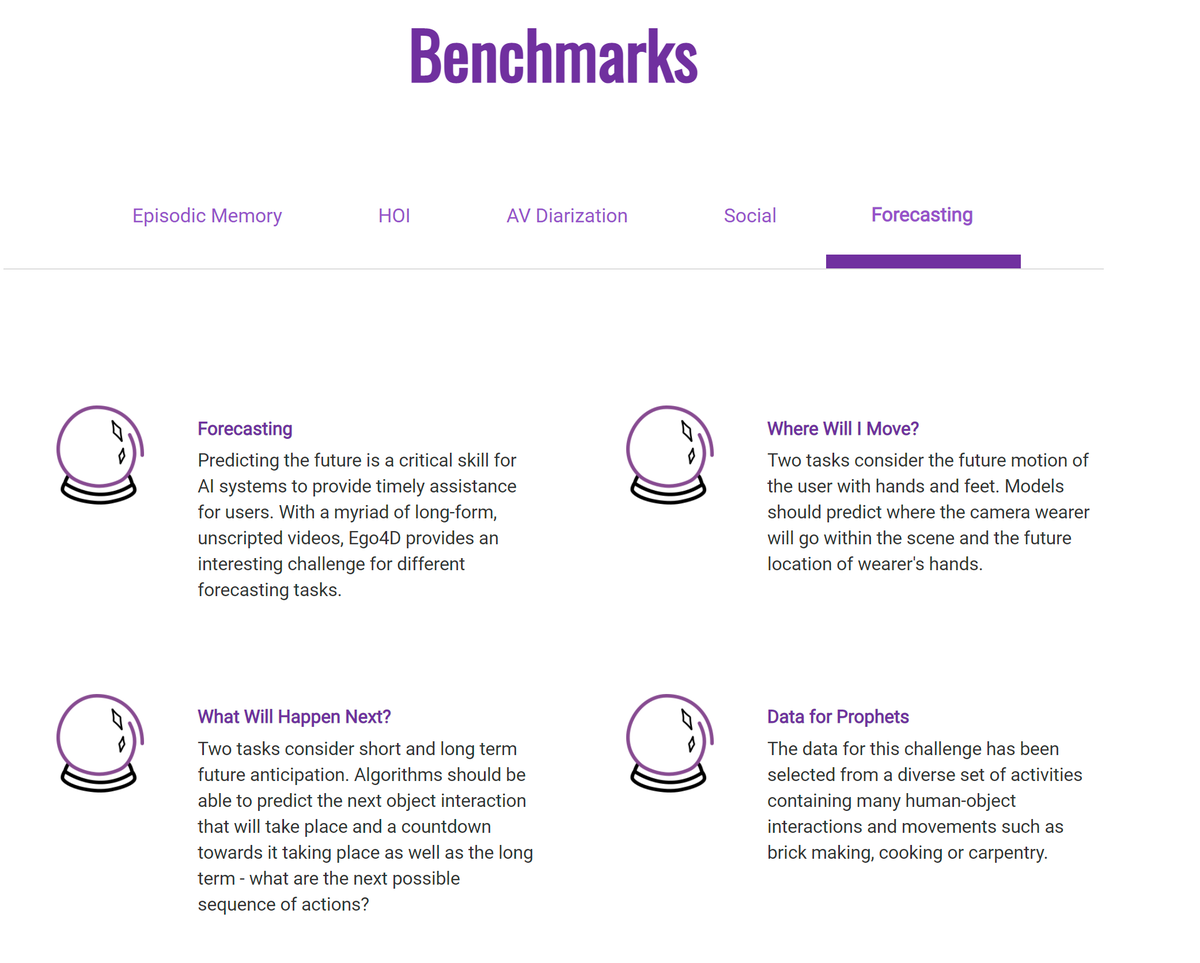

5/ Zuckerberg mentioned #Ego4D, which is 3.7k hrs of egocentric video data in collab w/ a dozen universities to make AI training benchmarks around Episodic Memory, Hand-Object Interactions, AV Diarization, Social, & Forecasting.

More: ego4d-data.org

https://twitter.com/kentbye/status/1453908848591265803

6/ I understand the utility of #Ego4D for AR & how it's the next frontier of Computer Vision + AI research, but it's still somewhat creepy as it's also in the context of @meta's omniscient Contextually-Aware AI in the service of surveillance capitalism.

https://twitter.com/kentbye/status/1453905011419668489

7/ Contextually-Aware AI #Ego4D Benchmarks = Surveillance

Episodic Memory

Querying Memory

Query Construction

Recalling Lives

Hand-Object Interactions

State Changes

AV Diarization

Hearing Words

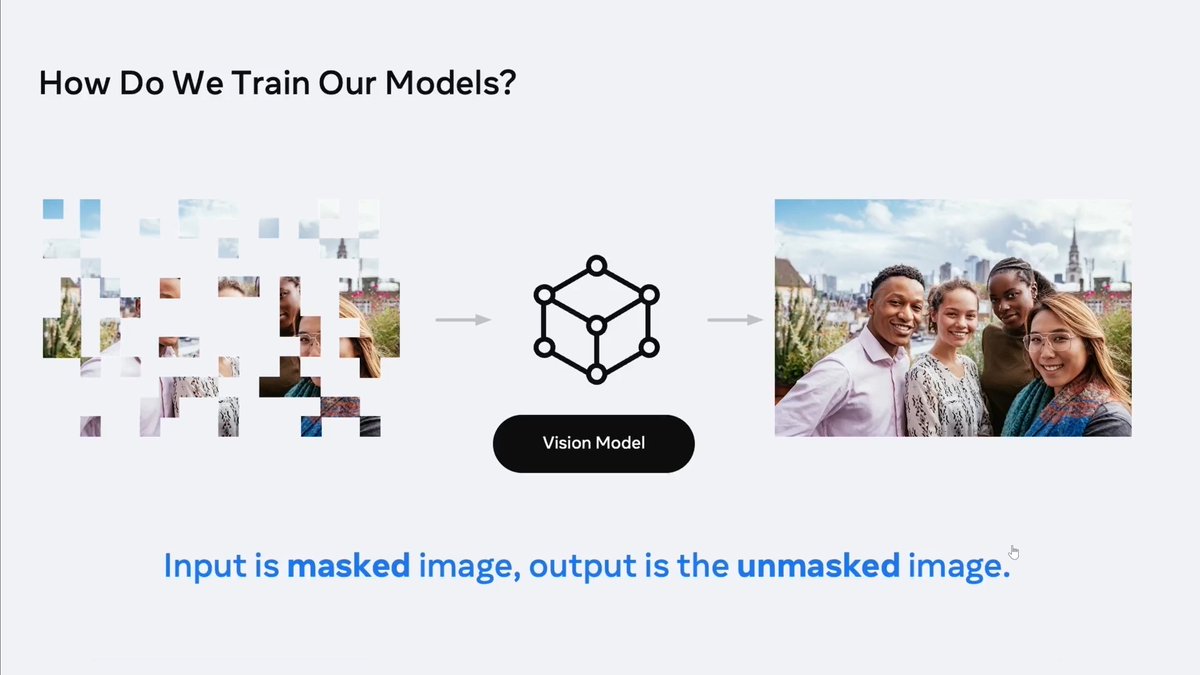

Tracking Conversations

Social Gaze

Social Dynamics

Forecasting Movements & Interactions

8/ The challenge with Contextually-Aware AI is keeping it aligned with Nissenbaum's Contextual Integrity Theory of Privacy to ensure proper flows of information.

How & why is this omniscient level of data being used & to what ends?

My podcast interview

voicesofvr.com/998-primer-on-…

9/ I attended a Meta press briefing on Contextually-Aware AI on 3/18/21, & I paraphrased their goals along with some of my initial reactions to it in this podcast.

SPOILER: I'm not yet convinced of contextually-aware, omnipresent & omniscient AI overlords

voicesofvr.com/985-facebook-h…

10/ There are two other projects @Meta announced today.

1. No Language Left Behind: translation system that can learn any language.

2. Universal Speech Translator: real-time speech-to-speech translation across all languages(!!!) which sounds amazing (but it'll never get to 100% accuracy)

11/ Zuckerberg emphasized that some of the research that Meta is doing with AI is fundamental as it is being used in other contexts like speeding up MRIs up to 4x:

https://twitter.com/techatfacebook/status/1295708258112425985

12/ Zuckerberg claims Meta is committed to building openly & responsibly as AI technologies "deliver the highest levels of privacy & help prevent harm" citing CrypTen.

But not sure how contextually-aware AI will deliver "the highest level of privacy."

It's the opposite of that.

13/ Those are the highlights from the 15-min intro video Zuckerberg streamed from his page.

There's another 2.5 hour video "Inside the Lab: Building for the Metaverse with AI" on @MetaAI's page digging into more topics.

Schedule: ai.facebook.com/events/inside-…

Video: facebook.com/MetaAI/videos/…

14/ I watched this 2.5 hour program from Meta on "Building for the Metaverse with AI," and the target audience is potential AI engineers as it was an extended job recruitment pitch, especially at the end (see slides below).

But I'll share some reflections on AI tech & ethics.

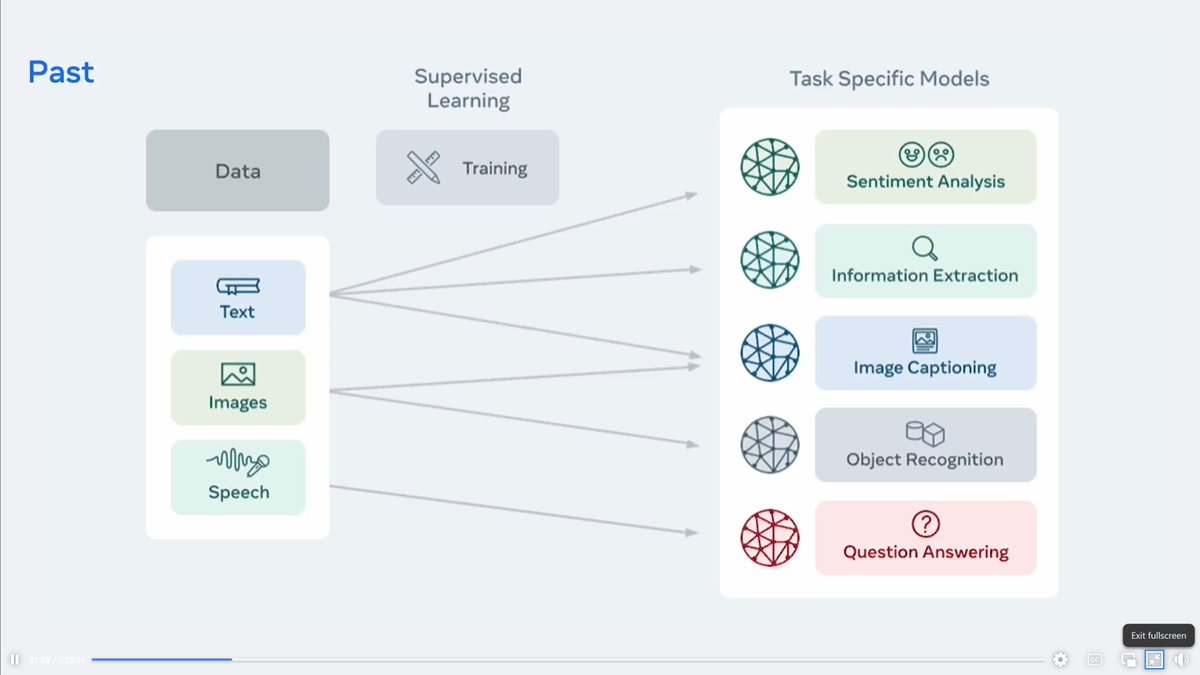

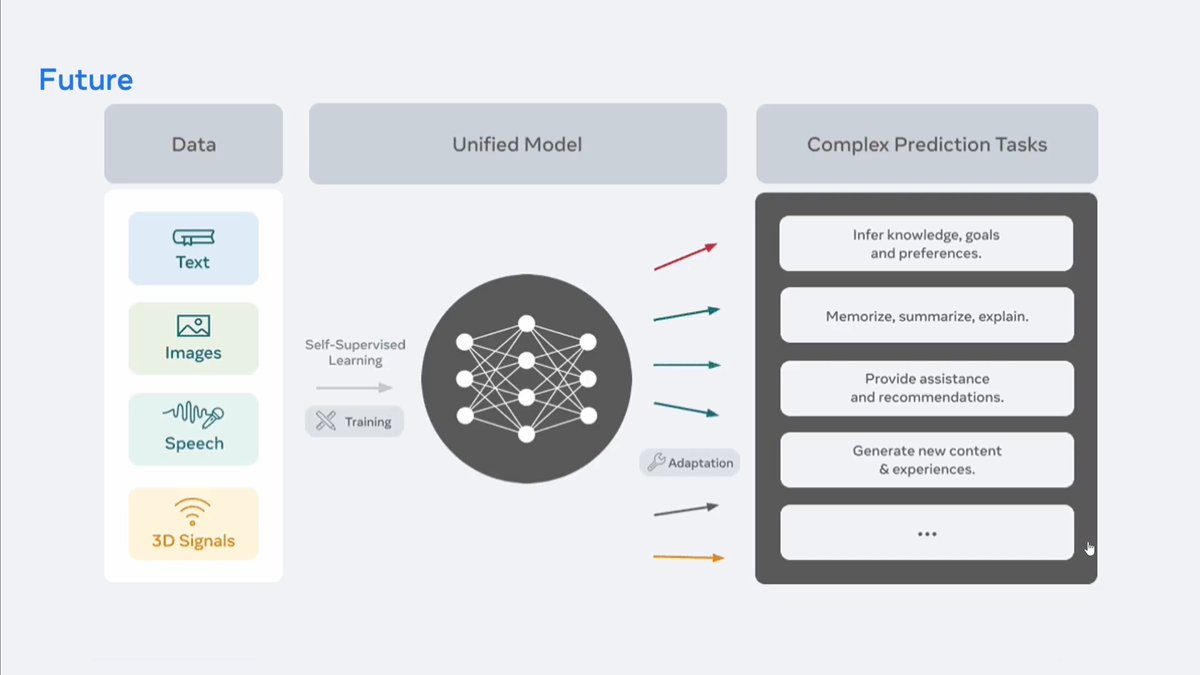

15/ Evolution of @Meta's ML architectures is interesting

Past: Supervised Learning & Task-Specific Models

Present: Self-Supervised Learning on Task-Independent Models to Tasks, Product & Tech

Future: SSL with Unified Model & Complex Prediction Tasks

NOTE: Sentiment Analysis & Ads

16/ In Building for the Metaverse, @Meta will combine Self-Supervised Learning, Continual Learning, Reinforcement Learning, & Planning & Reasoning & apply them to ALL XR data like biometric sensor data + egocentric data + environmental data to make Contextually-Relevant AI & a "World" Model.

17/ The reason this all-encompassing "World" model is so important is the types of multi-modal sensor fusion that @Meta will be doing.

For example, at #IEEEVR 2021 @RealityLabs showed how they could extrapolate Eye Gaze from Hand Pose + Head Pose:

https://twitter.com/kentbye/status/1376409069406220290

18/ Here's a taxonomy of how different XR data & biometric measurements can enable psychographic profiling that tracks XR users' actions, thoughts, unconscious physiological reactions & emotional states.

What are the mental privacy implications of fusing all of these together?

19/ Human Rights Lawyer @brittanheller gave a great @Gatherverse speech on 5 things XR privacy folks should understand about how XR data is completely different, including the lack of a legal definition of this data.

See my video on this:

https://twitter.com/kentbye/status/1496244534271148034

20/ @Meta first published their Five Pillars of Responsible AI on June 22, 2021, & they're different from their four RI Principles:

Privacy & Security

Fairness & Inclusion

Robustness & Safety

Transparency & Control

Governance & Accountability

ai.facebook.com/blog/facebooks…

https://twitter.com/DavidAdkins/status/1407489430781370372

21/ In April 2021, @Meta published a Privacy Progress Update with 8 Core Principles of how they define privacy, which is all about controlling access to identified data, but there's nothing on physiological XR data or profiling via biometric psychography.

https://twitter.com/rmsherman/status/1387175380096851970

22/ @Meta is still in an old paradigm of defining privacy as identity & control of data rather than contextually-relevant psychographic profiling via biometric data.

They still haven't commented on #NeuroRights like a Right to Mental Privacy.

See my talk

23/ Meta's Five Pillars of Responsible AI are an incremental step toward RI best practices, but there's still a long way to go.

Two talks with more

Towards a Framework for XR Ethics

Sensemaking Frameworks for the Metaverse & XR Ethics

24/ Meta consistently frames privacy issues back to individual identity & the ways they're protecting personally identifiable information from leaking out in their ML training analysis.

They also oddly often shifted from privacy into open source & transparency tools like CrypTen.

25/ Back to the overall thrust of the presentation, it covered major applications & intentions for @MetaAI's efforts, and how their research is being applied to the Metaverse, which I'll dig into a bit.

They focused primarily on Robotic Embodiment, Creative Apps, & Safety.

26/ Here are a number of graphics showing the faster-improving performance of their Self-Supervised Learning approaches vs Supervised Learning with different algorithms progressing over the years.

27/ Here are a number of different haptics & robotics applications of Self-Supervised Learning.

[NOTE: Searching for Meta vs Facebook has killed their SEO in trying to track down the original papers or references of some of this]

Blog on SSL from 3/4/21:

ai.facebook.com/blog/self-supe…

28/ Here's a series of graphics that show which modules within a Classical ConvAI Pipeline Meta's new CAIRaoke Model replaces.

[NOTE: yes, it is pronounced "karaoke"]

Additionally, the CAIRaoke output is recursively fed back in as an iterative input with sample assertions.
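For anyone who hasn't seen a classical ConvAI pipeline, here's a rough toy sketch of the four hand-offs named earlier in the thread (NLU, Dialog State Tracking, Dialog Policy, NLG). This is NOT Meta's code: every function name, the keyword matching, & the reminder example are my own hypothetical stand-ins, just to show how separate modules pass data along. An end-to-end model like CAIRaoke would stand in for some or all of these hand-offs with one learned model.

```python
from dataclasses import dataclass, field


@dataclass
class DialogState:
    """Slots accumulated across turns (what Dialog State Tracking maintains)."""
    slots: dict = field(default_factory=dict)


def nlu(utterance: str) -> dict:
    """Natural Language Understanding: toy keyword-based intent + slot parse."""
    words = utterance.lower().split()
    parsed = {"intent": "set_reminder" if "remind" in words else "unknown"}
    if "at" in words and words.index("at") + 1 < len(words):
        parsed["time"] = words[words.index("at") + 1]
    return parsed


def track_state(state: DialogState, parsed: dict) -> DialogState:
    """Dialog State Tracking: merge the new parse, keeping earlier known values."""
    for key, value in parsed.items():
        if value != "unknown":
            state.slots[key] = value
    return state


def policy(state: DialogState) -> str:
    """Dialog Policy: choose the next system action from the tracked state."""
    if state.slots.get("intent") != "set_reminder":
        return "ask_clarify"
    return "confirm" if "time" in state.slots else "ask_time"


def nlg(action: str, state: DialogState) -> str:
    """Natural Language Generation: render the chosen action as text."""
    templates = {
        "ask_clarify": "Sorry, what would you like to do?",
        "ask_time": "What time should I remind you?",
        "confirm": f"OK, reminder set for {state.slots.get('time')}.",
    }
    return templates[action]


def classical_turn(state: DialogState, utterance: str) -> str:
    """One conversational turn through the four separate modules, in order."""
    state = track_state(state, nlu(utterance))
    return nlg(policy(state), state)
```

Calling `classical_turn` twice with the same `DialogState` shows the state carrying over between turns. Each module boundary is also a spot where errors can get passed downstream, which is one commonly cited motivation for end-to-end approaches.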

29/ @Meta showed a speculative AR design demonstrating how creepy Contextually-Aware AI might be.

It knows you already added enough salt & automatically buys more salt for you.

Has a memo from your mom + shows how she sliced Habaneros thinly, unclear if AI is extrapolating these.

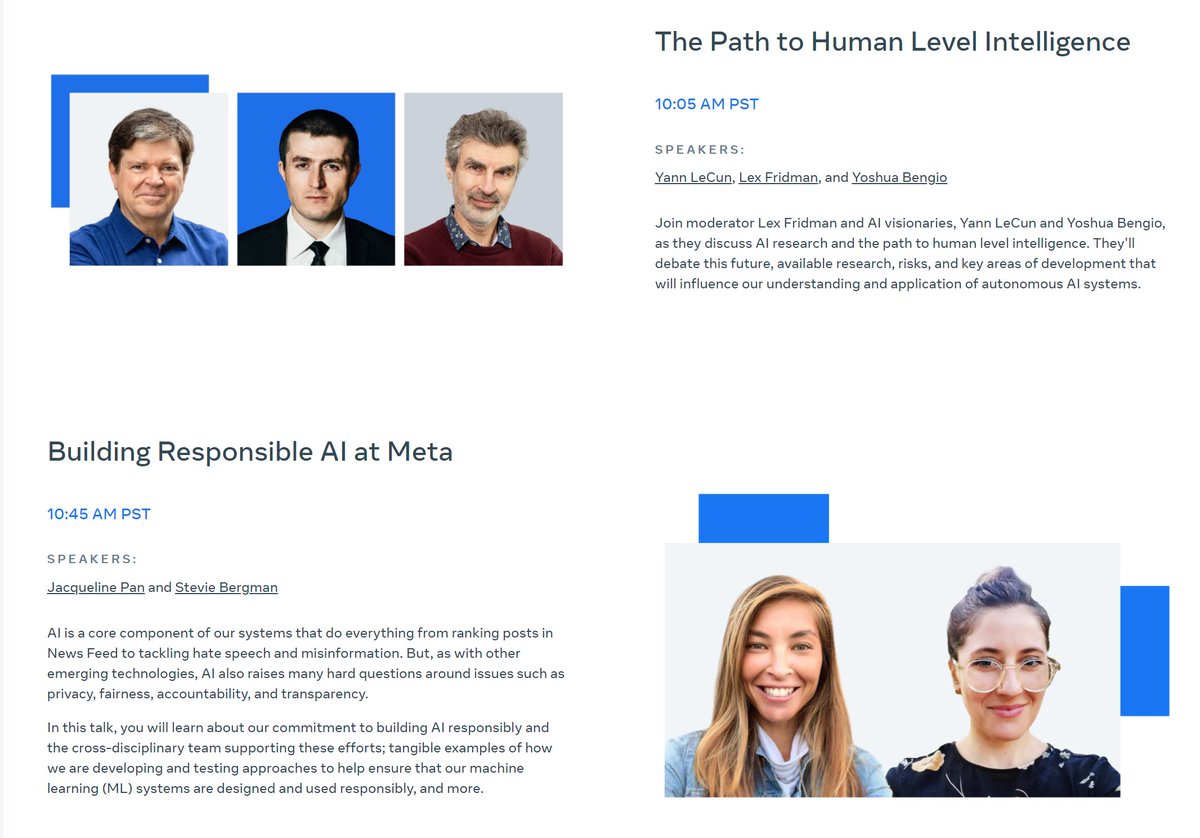

30/ @lexfridman facilitated a really engaging 40-min discussion with pioneering AI researchers Yoshua Bengio & @MetaAI's @ylecun on the pathway to human-level AI, self-supervised learning, & consciousness (Bengio likes Graziano's Attention Schema Theory).

facebook.com/MetaAI/videos/…

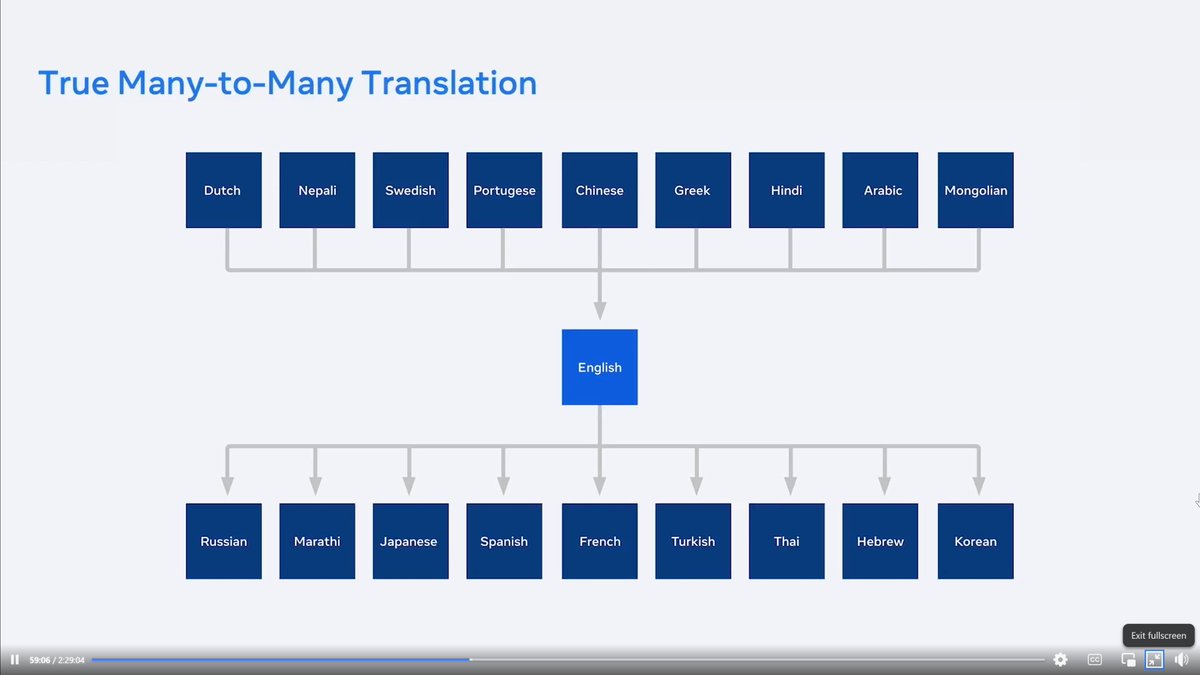

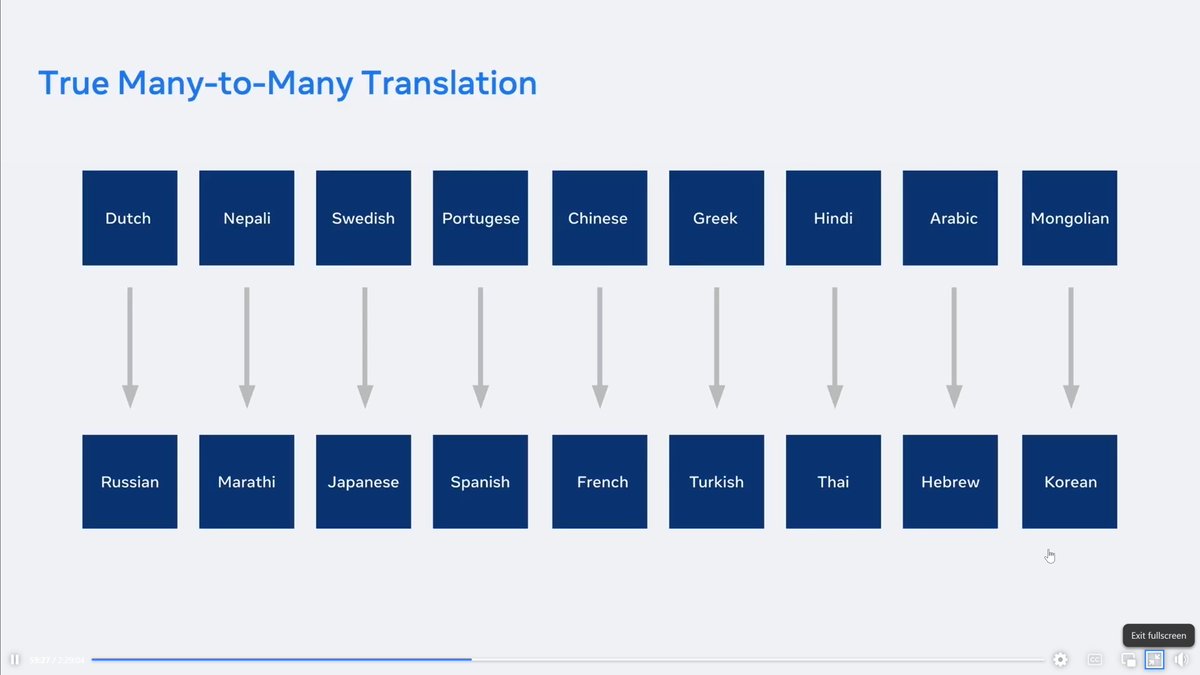

31/ There was a whole section on language translation that I found interesting. I know how speech-to-text has not reached & may never reach 100% accuracy, & so I really wonder how to quantify the accuracy & communication loss of the vision of these universal translators.

32/ They used to use English as an intermediate language to translate into, but they're moving to a system where they can directly translate any language into another language.

It makes me wonder about gaps in contextual knowledge & how anyone will ever detect mistranslations
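To make the English-as-intermediate vs direct translation difference concrete, here's some back-of-the-envelope arithmetic as a sketch. The function names, the `"en"` default pivot, & the 100-language figure used afterwards are my own illustrative assumptions, not numbers from the presentation.

```python
def direct_directions(num_languages: int) -> int:
    """Ordered (source, target) pairs a direct any-to-any system must cover."""
    return num_languages * (num_languages - 1)


def pivot_directions(num_languages: int) -> int:
    """Directions needed when every translation routes through one pivot."""
    return 2 * (num_languages - 1)


def pivot_hops(source: str, target: str, pivot: str = "en") -> list[tuple[str, str]]:
    """Translation steps taken when routing through a pivot language."""
    if source == target:
        return []
    if pivot in (source, target):
        return [(source, target)]
    return [(source, pivot), (pivot, target)]
```

With a hypothetical 100 languages, the pivot setup needs 198 directions while direct coverage spans 9,900. The flip side is that a pivot route like `pivot_hops("fr", "de")` takes two translation steps instead of one, so any loss in the first hop gets carried into the second.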

33/ Some slides describing how Self-Supervised Learning works by taking away information & then requiring the ML to learn abstract schemas to be used for sensemaking & filling in the gaps. This can be iterated on for SSL to learn how to discern patterns more efficiently.
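Here's a minimal sketch of the "take away information, then fill in the gaps" idea, assuming text tokens (the same idea is applied to other kinds of data too). The `mask_tokens` helper, the `[MASK]` placeholder, & the 30% masking rate are hypothetical choices of mine, not details from Meta's slides. The point is that the training labels come from the data itself rather than from human annotation.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_rate=0.3, seed=0):
    """Hide a random subset of tokens; return (masked_input, targets).

    targets maps each hidden position to its original token, which is the
    "label" a model would be trained to predict from the surrounding context.
    """
    rng = random.Random(seed)
    num_to_mask = min(len(tokens), max(1, round(len(tokens) * mask_rate)))
    hidden = sorted(rng.sample(range(len(tokens)), num_to_mask))
    masked = [MASK if i in hidden else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in hidden}
    return masked, targets
```

A model would then be trained to predict `targets` from `masked`, & repeating this with different random masks over lots of unlabeled data is what lets it pick up patterns without labels.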

34/ There was a whole section on Responsible AI approaches & projects that @Meta has been working on.

The process of Tracking Fairness Across Meta in AI is a bit more robust & fleshed-out as a responsible innovation framework than what Reality Labs has talked about so far.

35/ I don't fully understand how they can model & explain SSL models.

'AI Model Cards are a standardized way to document, track, & monitor individual models...'

'AI System Cards show how a group of AI Models & other non-AI techniques work together to accomplish a task.'

36/ Part of my hesitation in diving into running everything on self-learning AI models is it's very easy to create complex systems that you don't fully understand.

I clicked on links quickly tracking down a story & I was auto-blocked by Meta's AI overlords

https://twitter.com/kentbye/status/1496163707700891658

37/ There are a lot of ways in which both safety & security requirements for moderation for XR AND the challenges of immersive VR & AR content + egocentric data capture are all catalysts for new AI benchmarks & challenges and even sometimes new algorithmic approaches.

38/ Starting to wrap up this thread.

These types of talks by Meta are quite dense to sift through, but they also usually reveal quite a lot of interesting insights into their deeper strategies & philosophies.

Also VR, AR, & the Metaverse are great provocations for AI innovations.

39/ Ultimately, this was a recruitment pitch.

It's the most optimistic story that @Meta tells itself about who they are, what they do, & why.

Creating AI to benefit people & society.

Building the future responsibly.

Ensuring fairness & robustness.

Collaborating with stakeholders.

40/ I have so many disconnects when I hear Zuckerberg say he wants to "deliver the highest levels of privacy" while also aspiring to create contextually-aware AI with #Ego4D that can reconstruct memories, track every conversation, & predict your next move.

https://twitter.com/kentbye/status/1496649265329803266

41/ The XR industry is in a phase of self-regulating XR Ethics, & so it's worth focusing on what @Meta is saying & doing.

I have more critical analysis on Meta in my opening @Gatherverse Summit keynote here + my Gatherverse thread with more context 👇

https://twitter.com/kentbye/status/1496190445743144960

END/ If you find value in this type of coverage of the XR industry, then please consider supporting me on Patreon.

Thanks!

patreon.com/voicesofvr
