"#Imitation vs #Innovation: Large #Language and Image Models as Cultural #Technologies"

Today's Seminar by SFI External Prof @AlisonGopnik (@UCBerkeley)

Streaming now:

Follow our 🧵 for live coverage.

"Today you hear people talking about 'AN #AI' or 'THE AI.' Even 15 years ago we would not have heard this; we just heard 'AI.'"

@AlisonGopnik on the history of thought on the #intelligence (or lack thereof) of #simulacra, linked to the convincing foolery of "double-talk artists":

"We should think about these large #AI models as cultural technologies: tools that allow one generation of humans to learn from another & do this repeatedly over a long period of time. What are some examples?"

@AlisonGopnik suggests a continuity between #GPT3 & language itself:

@AlisonGopnik "It's a category error to think these systems are intelligent agents. But the very thing that makes human beings distinct is this capacity to take information from other people...collective intelligence over history. It makes more sense to think of [AI] this way."

@AlisonGopnik

@AlisonGopnik "Worrying that AIs are coming to kill us should be like 30 or 40 on the list of things to worry about."

"When each new cultural technology appeared, there have been arguments that they were bad instead of being good."

@AlisonGopnik

Socrates on writing might as well be talking about #GPT3

"From the very beginning, you get a series of norms, rules, & later laws about new cultural technologies. As each new cultural technology emerges you get new kinds of norms."

"Pamphlets turned into newspapers."

@AlisonGopnik on the past & future of adapting to #EpistemicCrisis:

"Newspapers fact-check. But it's curious to note that with libraries, we don't seem to care about how much in there is true or untrue."

@AlisonGopnik on the emergence of new norms and regulations with each new cultural technology:

@AlisonGopnik "You have to have this balance between #imitation & #innovation... Childhood is evolution's way of solving the #explore/#exploit tension."

- @AlisonGopnik

"So science is #neoteny?"

- Cris Moore

"Yes. That's not a joke. That's what I'm saying."

- Gopnik

Testing causal inference in children, @AlisonGopnik notes that "Four-year-olds are very good at overriding a likely assumption with new evidence. Adults are not."

(Related, Clarke's 1st Law:

en.wikipedia.org/wiki/Clarke%27…

& Max Planck's "one funeral at a time")

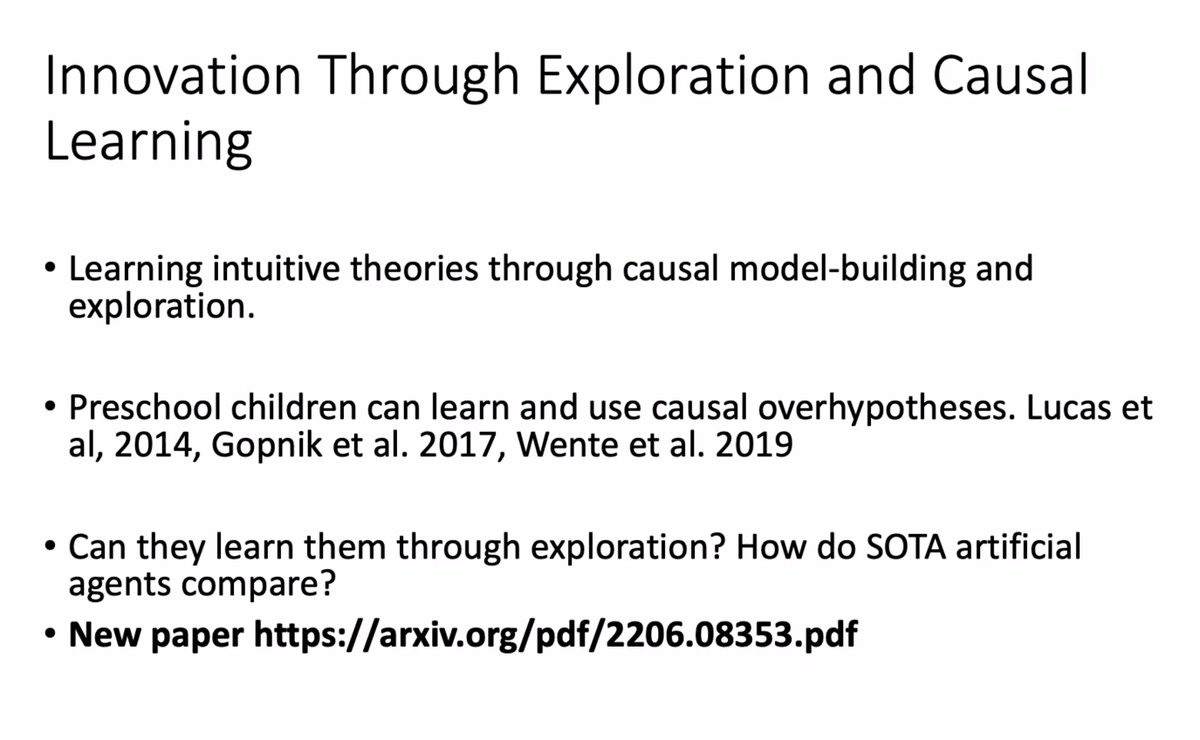

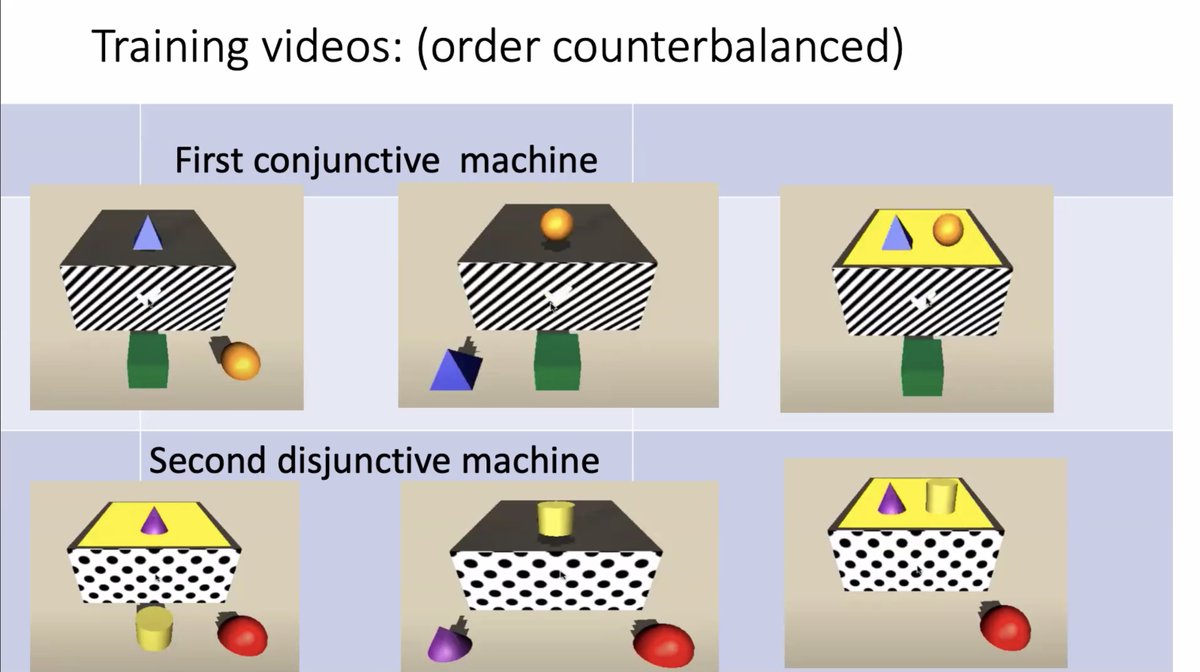

"If you wanted to have an ideal experimenter [for our #CausalInference machine]...what would be the set of experiments to determine this?"

@AlisonGopnik tests children's capacity to determine what is, and is not, a "#blicket":

#exploration #science

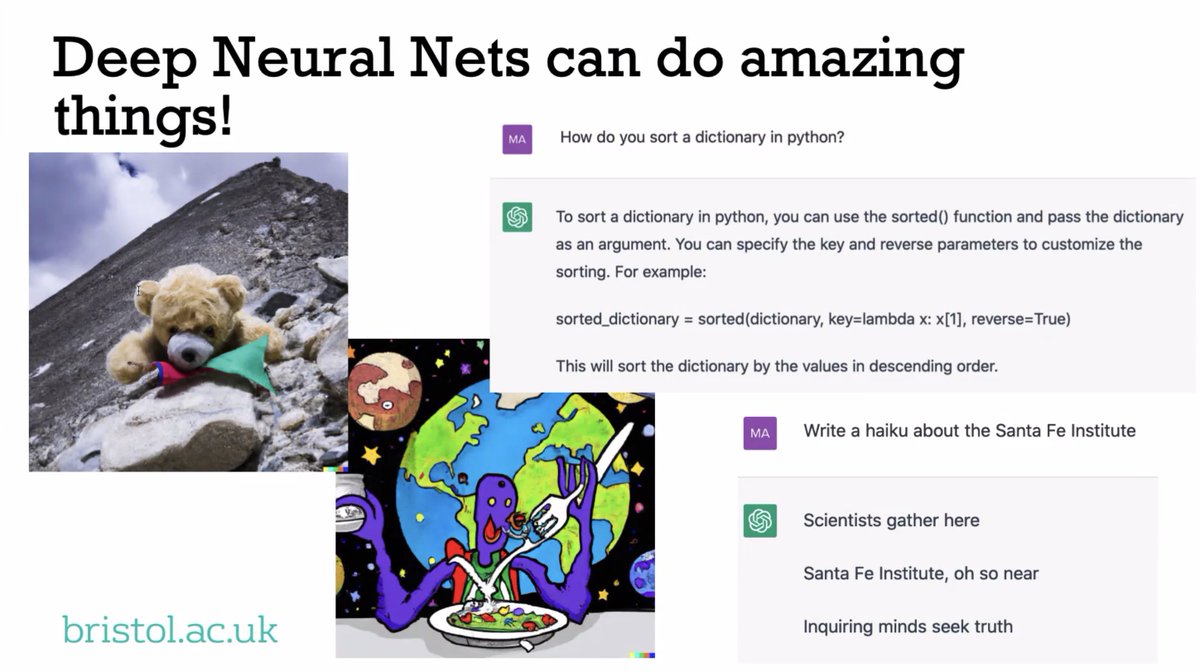

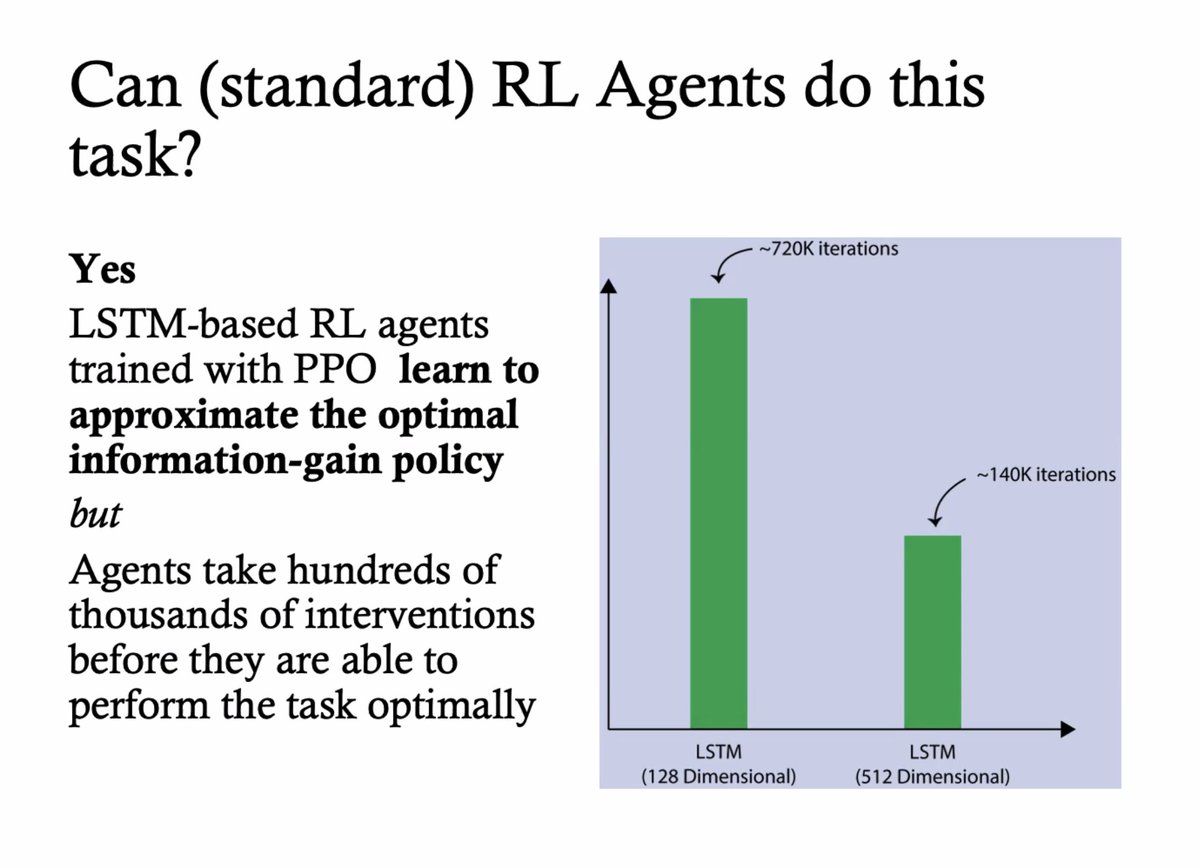

"What's the thing that #AI [researchers] think is the best system for #inference?" And how does it stack up against kids?

#ReinforcementLearning agents take hundreds of thousands of iterations to find optimal policies; they're searching a noisier possibility space than kids are:

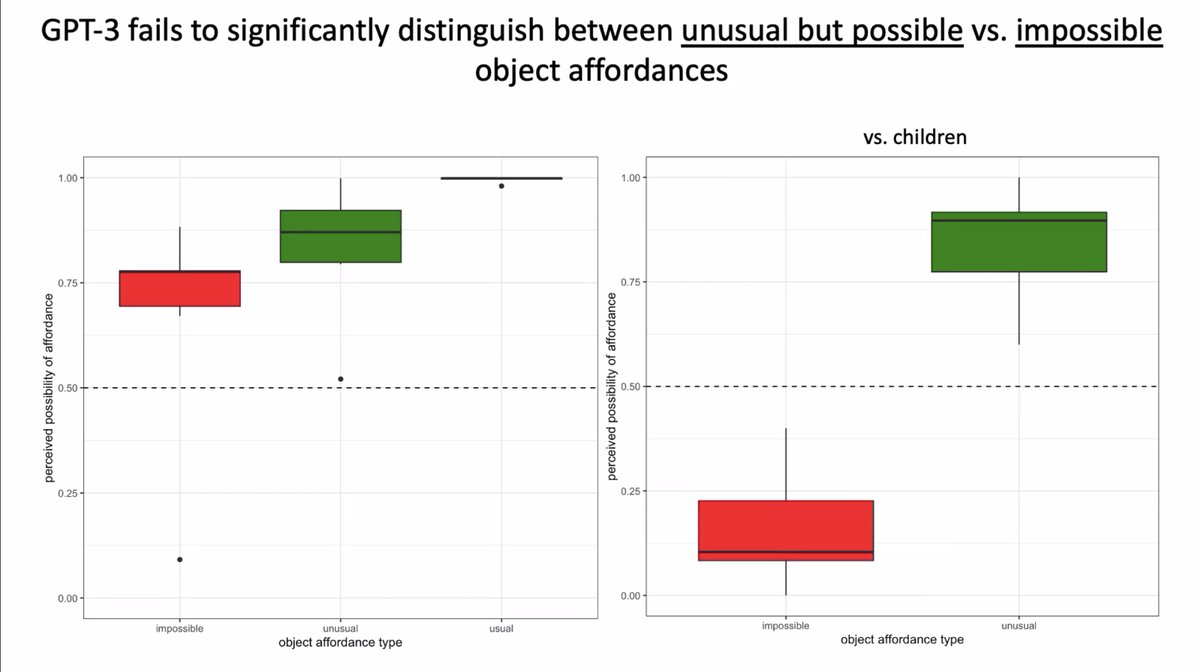

"Which one of these things should you choose if your hair is a mess? We asked children this question and we asked @OpenAI's #GPT3 [via] #DaVinci. Children were quite good at figuring out to use the fork. [#AI] 'failed significantly.'"

- @AlisonGopnik

"The puzzle of #innovation is the real tension between how much is [it] the result of generating new possibilities, and how much is the result of constraining the space of possibilities?"

- @AlisonGopnik (@UCBerkeley, SFI)

cc @turinginst @DeepMind

"Libraries aren't smart, but *using* a library makes you *infinitely* smarter."

- @AlisonGopnik on #AI as a cultural technology vs. *actual* intelligence as embodied in evolved organisms

"It's a category error to think about #GPT3 as an agent *at all.*"

santafe.edu/people/profile…