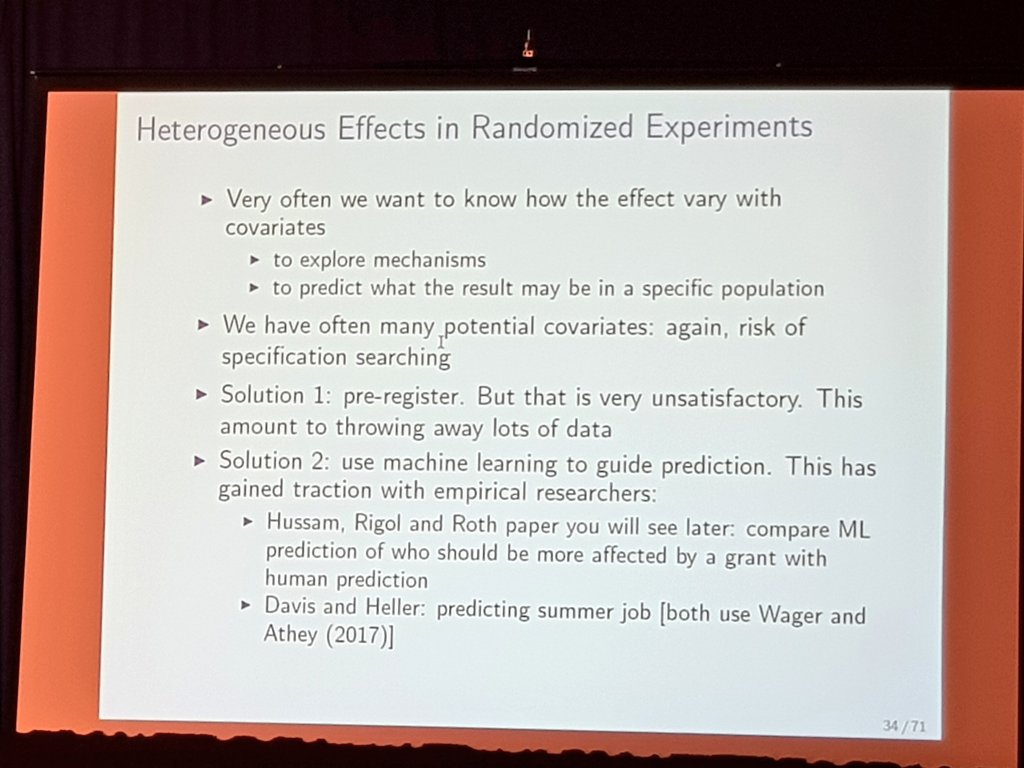

One solution is pre-registration: specifying the analysis, including any subgroups, before seeing the data.

This is important for clinical trials. There, the goal is simply to test whether the drug works. There is also a lot of money on the table for finding a "yes" answer.

However, pre-registering specific subgroups risks throwing out valuable information that is only available ex post.

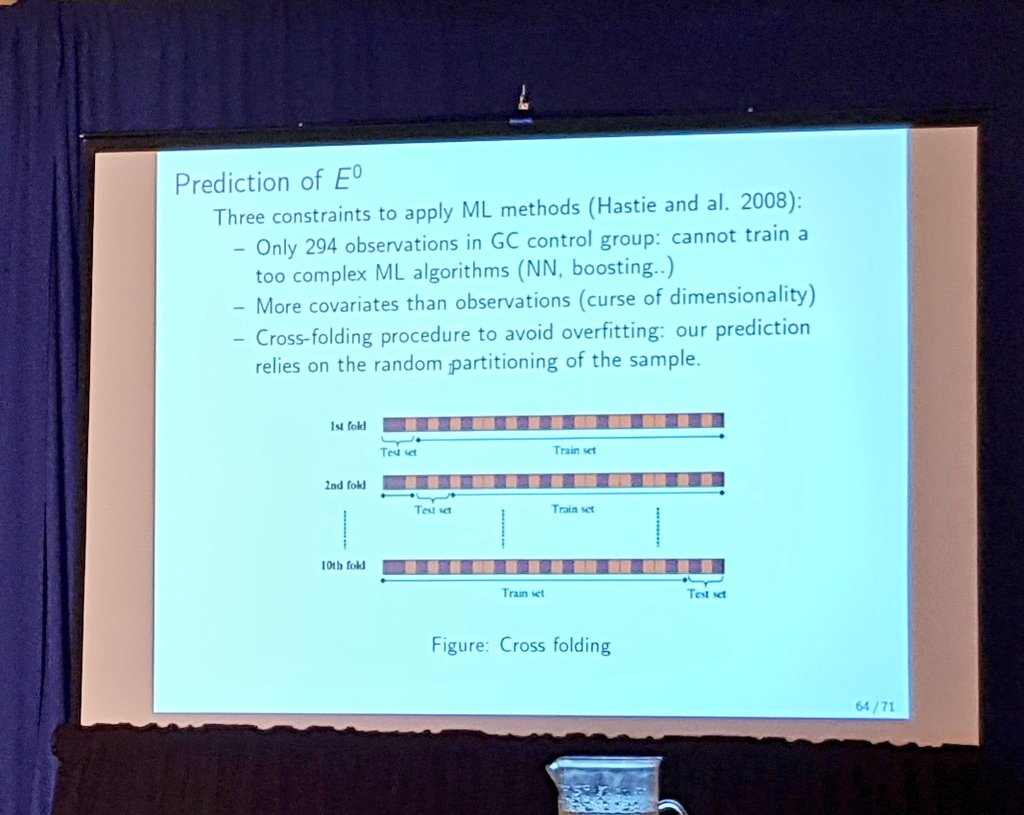

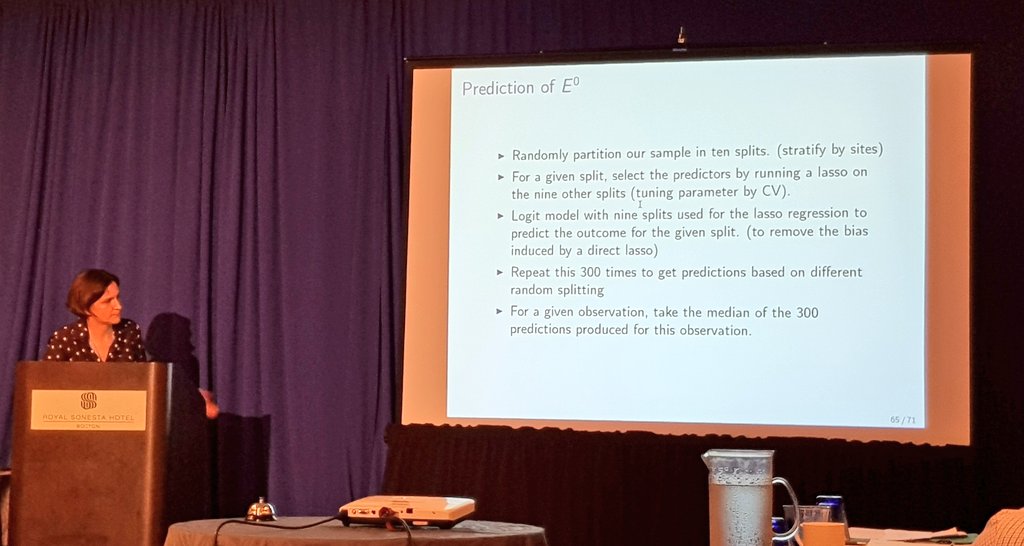

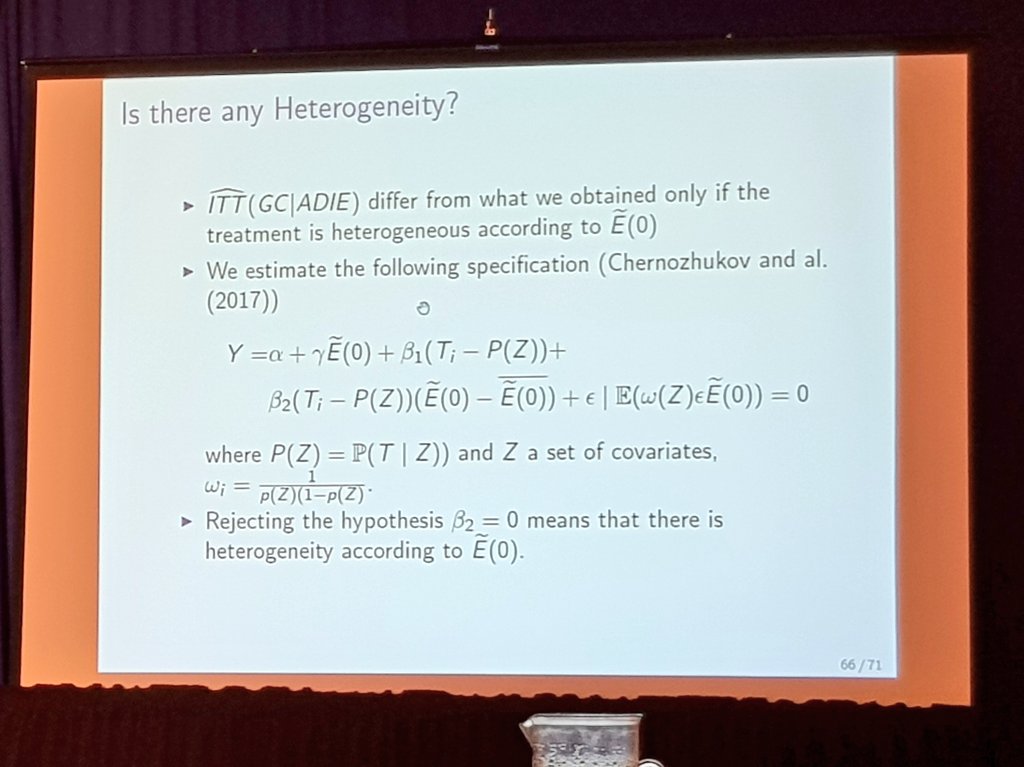

Alternative solution: use an ML procedure to select the groups.
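As a rough illustration of what "letting an ML procedure pick the groups" could look like, here is a minimal sketch under several assumptions that are not in these notes. It uses sample splitting: a proxy for the individual treatment effect is learned on one half of the data, and that proxy is used only to sort the other half into groups, where group-level effects are then estimated by a simple difference in means. A plain linear fit stands in for the ML learner, the function name `sorted_group_effects` is hypothetical, and the data are simulated.

```python
import numpy as np

def sorted_group_effects(X, D, Y, n_groups=4, seed=0):
    """Learn an effect proxy on one half of the sample, then estimate
    treatment effects by proxy-sorted group on the other half."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(Y))
    aux, main = idx[: len(Y) // 2], idx[len(Y) // 2 :]

    def fit_linear(Xs, Ys):
        # Stand-in for any ML learner (assumption): OLS with an intercept.
        A = np.column_stack([np.ones(len(Ys)), Xs])
        beta, *_ = np.linalg.lstsq(A, Ys, rcond=None)
        return beta

    def predict(beta, Xs):
        return np.column_stack([np.ones(len(Xs)), Xs]) @ beta

    # Auxiliary half: separate outcome models for treated and control units.
    b1 = fit_linear(X[aux][D[aux] == 1], Y[aux][D[aux] == 1])
    b0 = fit_linear(X[aux][D[aux] == 0], Y[aux][D[aux] == 0])

    # Main half: the proxy is used only to sort units into quantile groups.
    proxy = predict(b1, X[main]) - predict(b0, X[main])
    edges = np.quantile(proxy, np.linspace(0, 1, n_groups + 1))
    group = np.clip(np.searchsorted(edges, proxy, side="right") - 1,
                    0, n_groups - 1)

    # Difference in means within each group (valid under random assignment).
    effects = []
    for g in range(n_groups):
        y, d = Y[main][group == g], D[main][group == g]
        effects.append(y[d == 1].mean() - y[d == 0].mean())
    return np.array(effects)

# Simulated experiment: the true effect is 1 + 2 * x0, so it varies by unit.
rng = np.random.default_rng(1)
n = 4000
X = rng.normal(size=(n, 2))
D = rng.integers(0, 2, size=n)
Y = X[:, 1] + D * (1 + 2 * X[:, 0]) + rng.normal(size=n)

effects = sorted_group_effects(X, D, Y)
print(effects)  # one estimate per group, lowest-proxy group first
```

Because the groups are formed using a model fit on different observations than those used to estimate the group effects, the grouping is not tuned to noise in the estimation sample. This is the same concern that pre-registration addresses, but without having to name the subgroups in advance.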

The programs target young people in difficult employment situations who have a self-employment project.

The first program is selective: it tries to pick the most enthusiastic and best-prepared applicants. The second takes everyone.