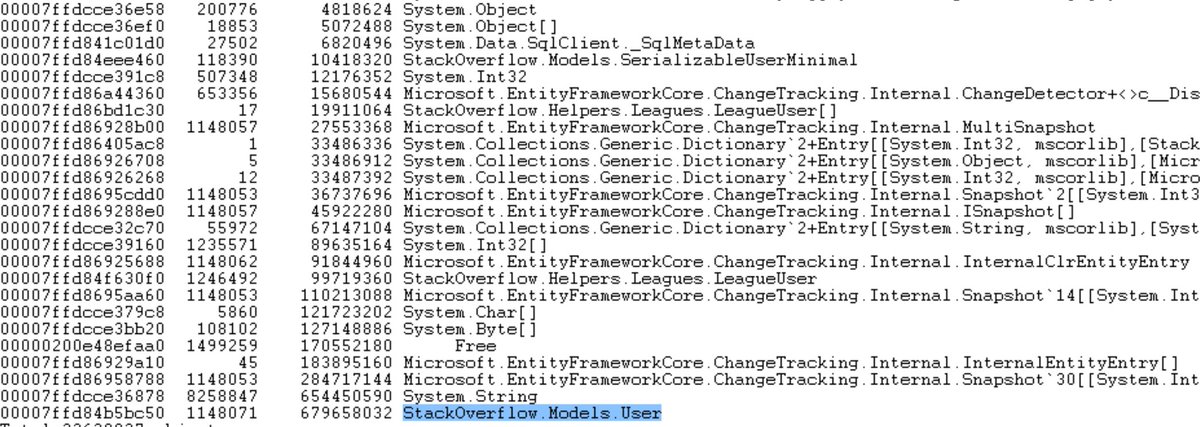

A) We have lots of user objects

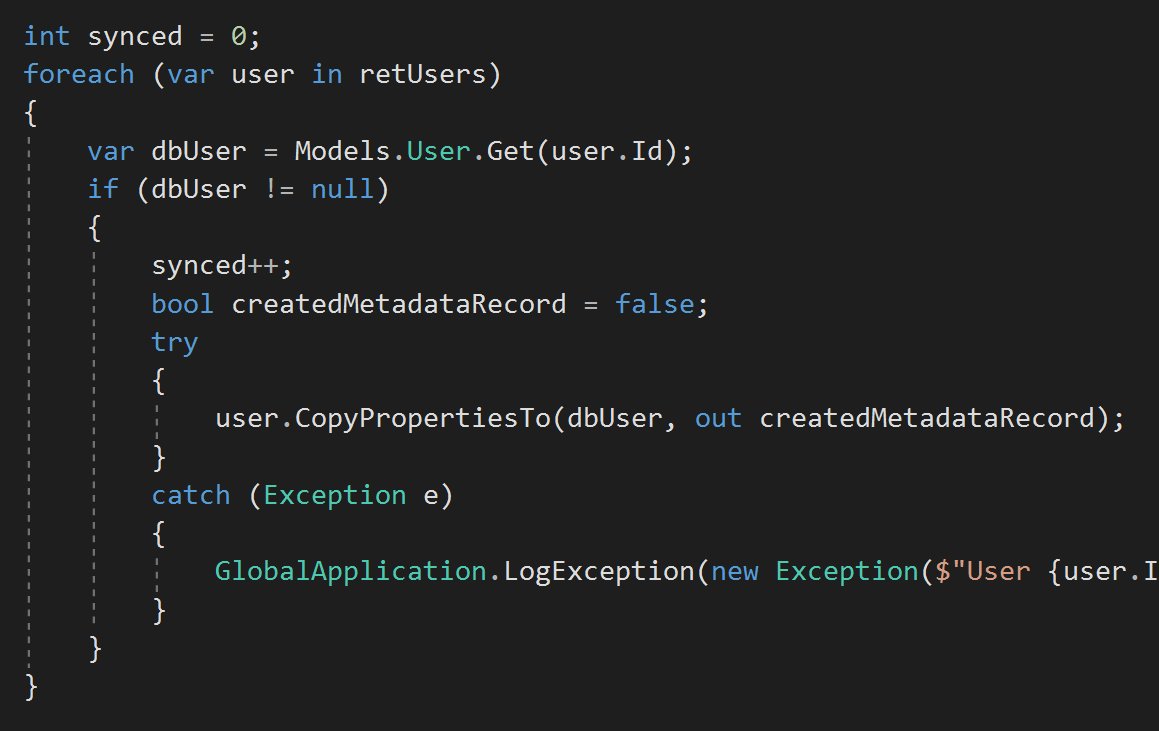

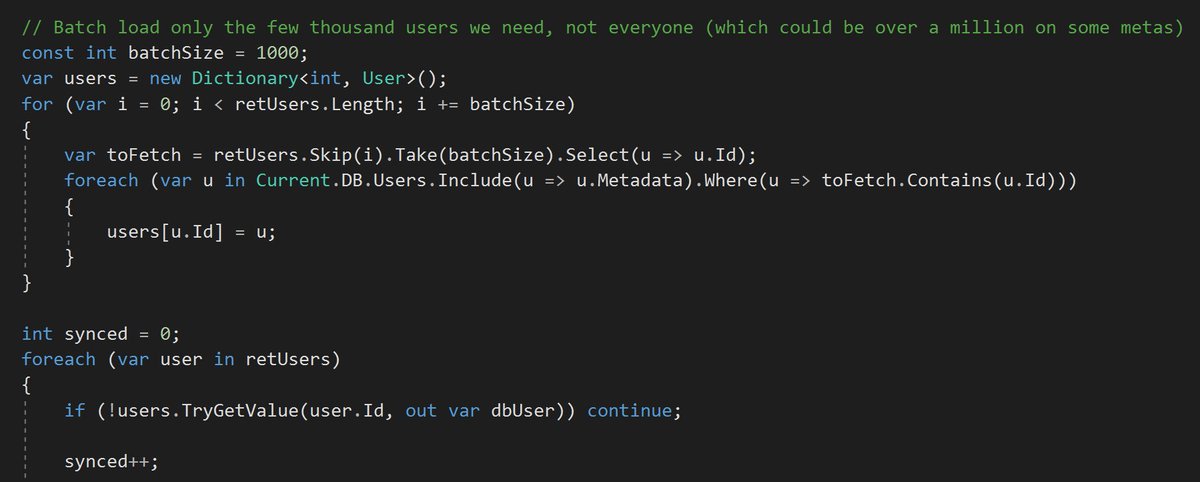

B) We have lots of change tracking

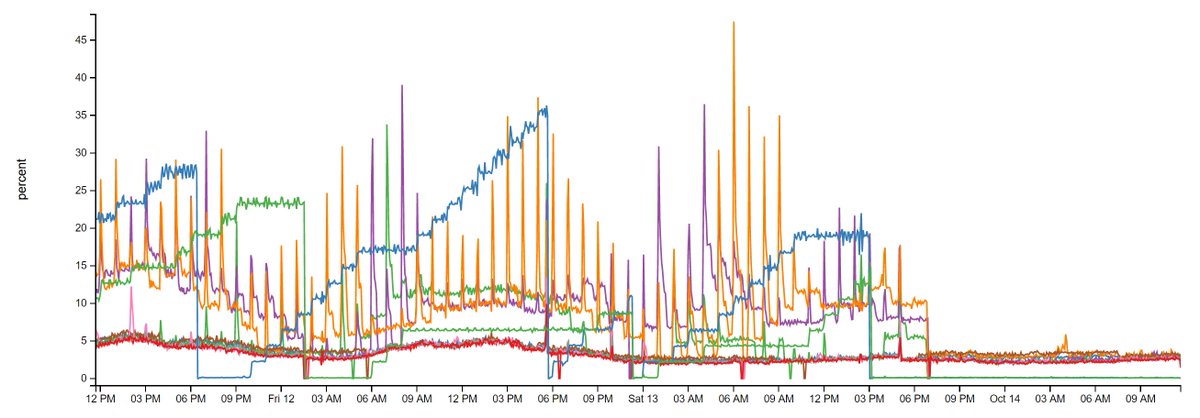

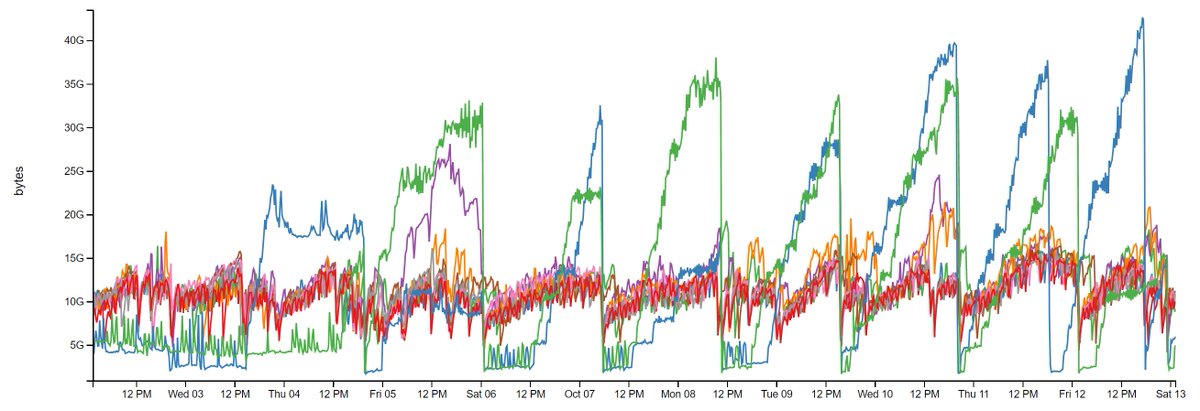

C) We have a memory leak specifically on the ny-web10 and ny-web11 servers (where meta.stackoverflow.com runs):

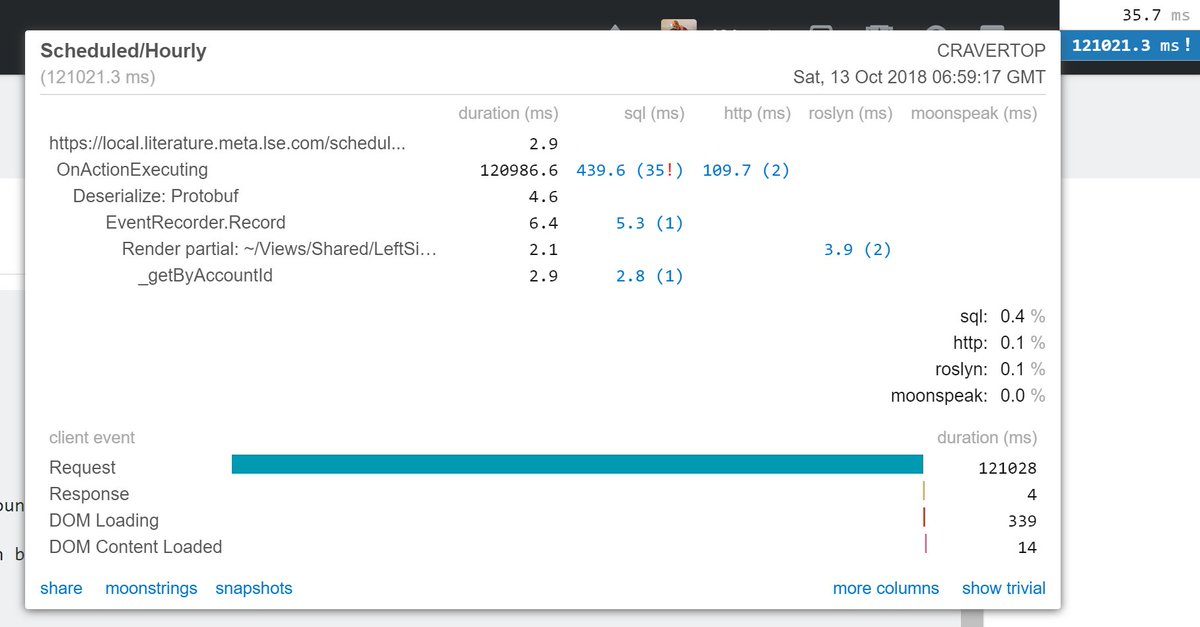

1) Always measure or check *before deploying the fix*; otherwise, what are you comparing against to see if it's fixed?

2) We've found the expensive stack where we're spending a lot of time. That's our starting point for the next round: EF Core change tracking optimizations.
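Point 1 applies regardless of tooling. As an illustration only (the findings above come from .NET memory dumps, not from this code), here is a minimal Python sketch of the measure-before-and-after idea: count live objects by type, make a change, count again, and diff the two snapshots. `heap_histogram`, `diff_histograms`, and the `User` class are hypothetical names for this example, and `gc.get_objects()` only sees objects tracked by CPython's garbage collector, so this is a rough approximation of a real heap dump, not a substitute for one.

```python
import gc
from collections import Counter
from typing import List, Optional, Tuple


def heap_histogram() -> Counter:
    """Count live, GC-tracked objects by type name (a rough heap histogram).

    Assumption: gc.get_objects() only returns objects tracked by CPython's
    collector, so atomic objects such as ints and strs are not counted.
    """
    gc.collect()  # drop unreachable garbage so only live objects are counted
    return Counter(type(obj).__name__ for obj in gc.get_objects())


def diff_histograms(
    before: Counter, after: Counter, top: Optional[int] = 5
) -> List[Tuple[str, int]]:
    """Return the types whose live-object count grew the most between snapshots."""
    growth = after - before  # Counter subtraction keeps only positive differences
    return growth.most_common(top)  # top=None returns every grown type


class User:
    """Hypothetical stand-in for the "user objects" seen in the dump."""

    def __init__(self, user_id: int):
        self.user_id = user_id


if __name__ == "__main__":
    baseline = heap_histogram()                # 1) measure before changing anything
    cache = [User(i) for i in range(10_000)]   # simulate objects that are never released
    after = heap_histogram()                   # 2) measure again
    for name, count in diff_histograms(baseline, after):
        print(f"{name}: +{count}")
```

Running it directly should list `User` with a growth of 10000 among the top entries. In a real investigation, the baseline snapshot is the one taken before deploying the fix, so a second snapshot afterwards shows whether the growth actually went away.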

That's all for this round. I'm very pleased with the result, and I hope you enjoyed it.