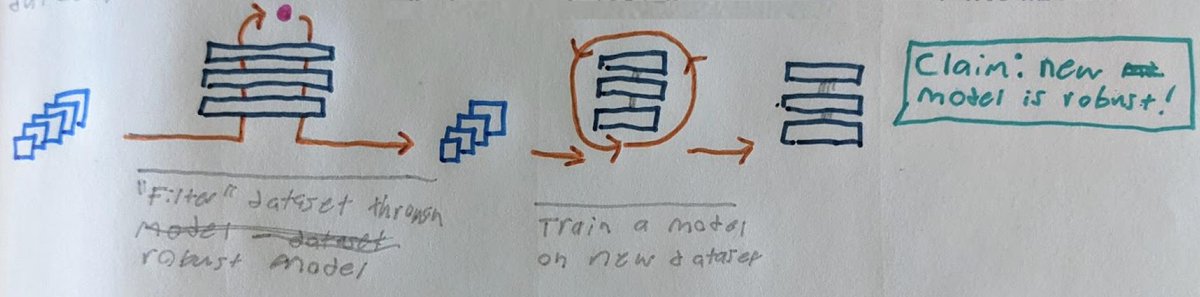

📝Paper: arxiv.org/pdf/1905.02175…

💻Blog: gradientscience.org/adv/

Some quick notes below.

(The method is vaguely similar in technique to Geirhos et al.’s “Stylized ImageNet”, but feels pretty different.)

Note that this isn’t a robustness free lunch -- you still need to create the original robust model through adversarial training.
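For anyone who hasn't seen adversarial training before, here's a toy sketch of the idea: at every step, perturb each input in the direction that hurts the model most (within a small L-infinity budget), then train on the perturbed input. This is a logistic-regression illustration in plain Python, not the paper's setup; the names `perturb` and `adversarial_train` and the values of `eps`, `lr`, and `epochs` are all made up for the example.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    # Returns -1, 0, or 1.
    return (v > 0) - (v < 0)


def perturb(w, b, x, y, eps):
    """Move x by eps per coordinate in the direction that increases the loss.

    For a linear model with logistic loss, d(loss)/dx = (p - y) * w, so one
    signed-gradient step is the worst case inside the L-infinity ball.
    """
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi + eps * sign((p - y) * wi) for wi, xi in zip(w, x)]


def adversarial_train(data, eps=0.1, lr=0.1, epochs=50):
    """SGD on the loss evaluated at adversarially perturbed inputs.

    `data` is a list of (features, label) pairs with labels 0 or 1.
    """
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for x, y in data:
            # Inner step: find the perturbed input for the current model.
            x_adv = perturb(w, b, x, y, eps)
            # Outer step: ordinary gradient update, but on the perturbed input.
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
            w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x_adv)]
            b -= lr * (p - y)
    return w, b
```

With deep networks the inner step has no closed form and is usually approximated with several gradient steps, which is a big part of why building the original robust model is expensive.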

Caveat to all of this: Adversarial examples are not my domain of expertise and I haven't read this super carefully!