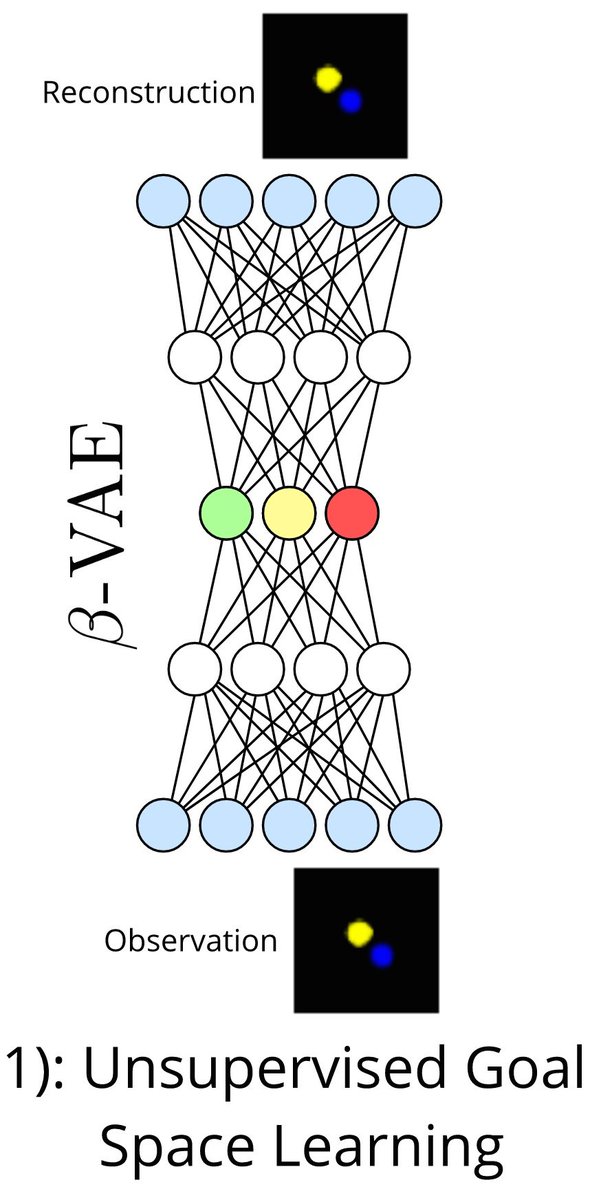

How curiosity-driven autonomous goal setting enables the discovery of independently controllable features of environments

PDF: arxiv.org/abs/1807.01521

Blog: openlab-flowers.inria.fr/t/discovery-of…

Colab: colab.research.google.com/drive/176q8pns…

#machinelearning #AI #NeuralNetworks

How could it discover and represent entities, and find out which ones are controllable (and learn to control them)?

It is an alternative to reinforcement learning, in the sense that it does not presuppose scalar rewards coming from the outside world, not even sparse ones.

Pursuing these goals makes learners use and improve their internal world model, leveraging forms of counterfactual learning.

(e.g. frontiersin.org/articles/10.33… )

One way to do it is sampling goals in parts of the goal space for which the learner expects high learning progress.

e.g.

vocalizations: frontiersin.org/articles/10.33…

to the discovery of tool use: sforestier.com/sites/default/…
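
Sampling goals where the learner expects high learning progress, as mentioned above, could look roughly like the toy sketch below. Everything in it is an assumption made for illustration and not the method from the paper: the class name `LearningProgressGoalSampler`, the 1-D goal space split into fixed regions, the sliding `window` of errors, and the `epsilon` share of random goals. Learning progress is approximated here as the absolute change in mean error between the older and the more recent half of the window.

```python
import random
from collections import deque


class LearningProgressGoalSampler:
    """Toy sampler: picks goals in a 1-D goal space [0, 1] by estimated learning progress."""

    def __init__(self, n_regions=4, window=10, epsilon=0.2, seed=0):
        self.n_regions = n_regions
        self.epsilon = epsilon  # share of goals sampled uniformly at random
        self.rng = random.Random(seed)
        # One sliding window of recent goal-reaching errors per region.
        self.errors = [deque(maxlen=window) for _ in range(n_regions)]

    def region_of(self, goal):
        # Map a goal to the index of the region that contains it.
        return min(int(goal * self.n_regions), self.n_regions - 1)

    def learning_progress(self, region):
        # Absolute change in mean error between older and recent outcomes.
        errs = list(self.errors[region])
        if len(errs) < 4:
            return 0.0
        half = len(errs) // 2
        older = sum(errs[:half]) / half
        recent = sum(errs[half:]) / (len(errs) - half)
        return abs(older - recent)

    def sample_goal(self):
        lps = [self.learning_progress(r) for r in range(self.n_regions)]
        if self.rng.random() < self.epsilon or sum(lps) == 0.0:
            # Keep exploring regions whose progress is still unknown.
            region = self.rng.randrange(self.n_regions)
        else:
            # Favour regions in proportion to their learning progress.
            region = self.rng.choices(range(self.n_regions), weights=lps)[0]
        low = region / self.n_regions
        return low + self.rng.random() / self.n_regions

    def update(self, goal, error):
        # Record how far the outcome landed from the goal that was pursued.
        self.errors[self.region_of(goal)].append(error)


# Toy usage: errors shrink for goals near 0.30 and stay flat near 0.80.
sampler = LearningProgressGoalSampler()
for step in range(10):
    sampler.update(0.30, 1.0 / (step + 1))
    sampler.update(0.80, 0.5)
goals = [sampler.sample_goal() for _ in range(1000)]
```

In this toy run most sampled goals fall in the region around 0.30, where the error is still changing, while the `epsilon` share of random goals keeps the other regions from being ignored entirely.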