📄Paper: openreview.net/pdf?id=HJlnC1r…

🍿Interactive website: epfml.github.io/attention-cnn/

🖥Code: github.com/epfml/attentio…

📝Blog: jbcordonnier.com/posts/attentio…

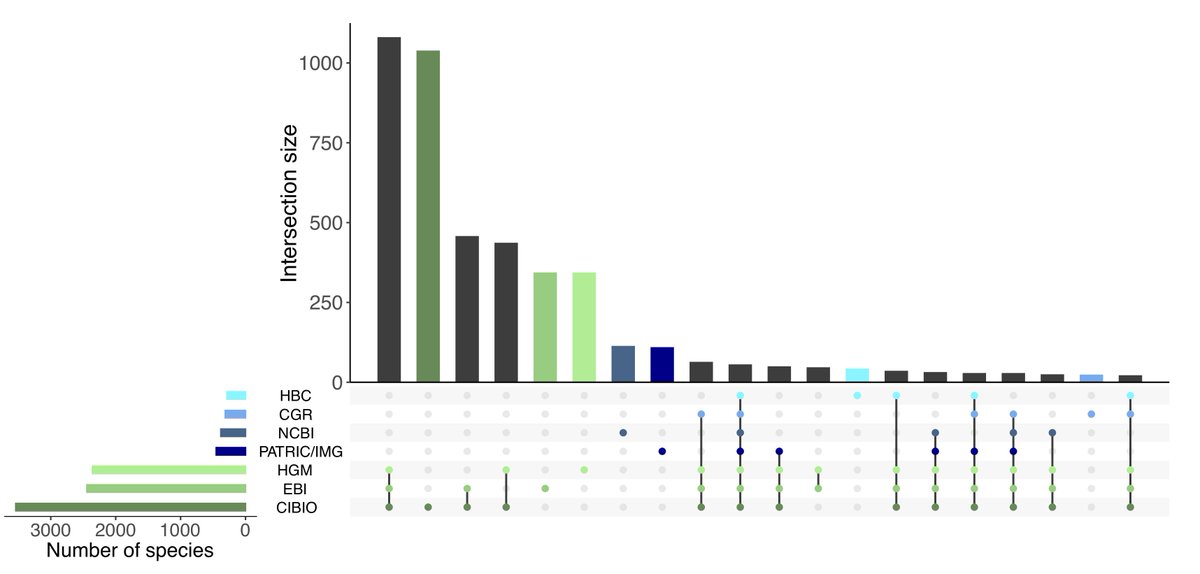

(a) Multiple heads, e.g. a 3x3 kernel requires 9 heads,

(b) Relative positional encoding to allow translation invariance.
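To illustrate point (b), here is a minimal NumPy sketch of attention that depends only on the relative shift between query and key pixels. The score form `-alpha * ||delta - center||^2` and the names `relative_attention`, `center` and `alpha` are assumptions made for illustration, not taken from the thread. With a large `alpha` the softmax becomes nearly one-hot at the chosen shift, and that pattern follows the query wherever it moves.

```python
import numpy as np

def relative_attention(H, W, query, center, alpha):
    """Softmax attention of one query pixel over all H*W key pixels.

    Scores depend only on the relative shift delta = key - query
    (assumed form: -alpha * ||delta - center||^2).
    """
    qi, qj = query
    ki, kj = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    delta_i, delta_j = ki - qi, kj - qj
    scores = -alpha * ((delta_i - center[0]) ** 2 + (delta_j - center[1]) ** 2)
    scores = scores - scores.max()  # numerical stability before exponentiating
    probs = np.exp(scores)
    return probs / probs.sum()

def peak(probs):
    """Return the (row, col) of the most attended key pixel."""
    return tuple(int(v) for v in np.unravel_index(probs.argmax(), probs.shape))

# Same head (shift of +1 row, -1 column) evaluated at two different query pixels.
p_a = relative_attention(7, 7, query=(3, 3), center=(1, -1), alpha=50.0)
p_b = relative_attention(7, 7, query=(2, 4), center=(1, -1), alpha=50.0)
print(peak(p_a), peak(p_b))
```

The attended pixel moves with the query while the shift stays the same, which is the behaviour a convolution kernel tap needs.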

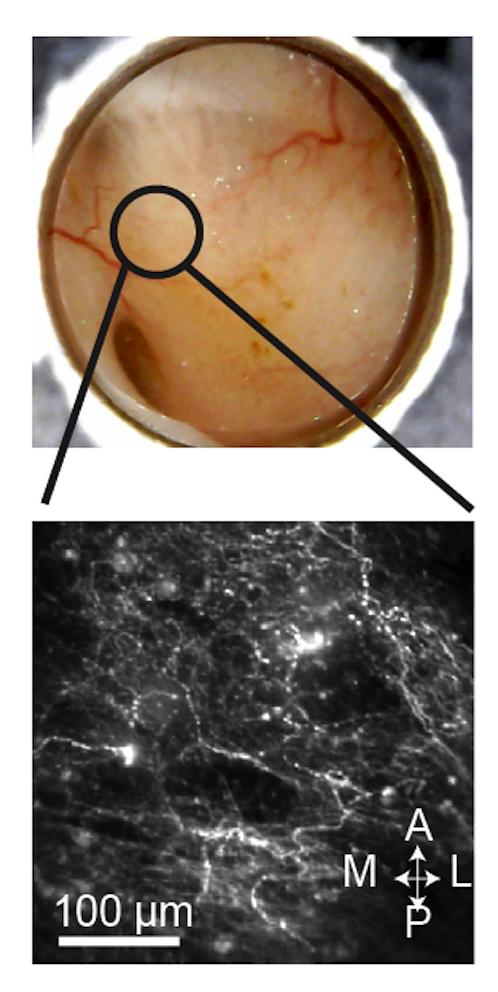

Each head can attend to pixels at a fixed shift from the query pixel; together, the heads form the pixel's receptive field. 2/5
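The idea above can be checked numerically with a small sketch: 9 heads, each with hard (one-hot) attention at one of the 9 shifts of a 3x3 neighbourhood, summed after a per-head linear map, give the same output as a 3x3 convolution. The function names are hypothetical, and the hard attention matrices and the all-zero rows at the image border (standing in for zero padding) are simplifying assumptions, not the construction from the paper.

```python
import numpy as np

def conv3x3_reference(x, kernel):
    """Direct 3x3 cross-correlation with zero padding.

    x: (H, W, D_in), kernel: (3, 3, D_in, D_out).
    """
    H, W, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((H, W, kernel.shape[-1]))
    for dy in range(3):
        for dx in range(3):
            out += padded[dy:dy + H, dx:dx + W] @ kernel[dy, dx]
    return out

def shift_attention(H, W, dy, dx):
    """Hard attention matrix (H*W, H*W): query (i, j) attends only to key (i+dy, j+dx).

    Assumption: rows whose target falls outside the image stay all-zero.
    """
    A = np.zeros((H * W, H * W))
    for i in range(H):
        for j in range(W):
            ki, kj = i + dy, j + dx
            if 0 <= ki < H and 0 <= kj < W:
                A[i * W + j, ki * W + kj] = 1.0
    return A

def attention_as_conv(x, kernel):
    """Sum of 9 heads, one per shift, each followed by its own linear map."""
    H, W, d_in = x.shape
    x_flat = x.reshape(H * W, d_in)
    out = np.zeros((H * W, kernel.shape[-1]))
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            head = shift_attention(H, W, dy, dx) @ x_flat  # gather the shifted pixel
            out += head @ kernel[dy + 1, dx + 1]           # per-head value/output map
    return out.reshape(H, W, -1)

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 6, 4))
kernel = rng.normal(size=(3, 3, 4, 2))
print(np.allclose(attention_as_conv(x, kernel), conv3x3_reference(x, kernel)))
```

Each head contributes exactly one kernel tap, which is why a 3x3 kernel needs 9 heads in this construction.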

Attention Augmented Convolutional Networks. @IrwanBello et al., 2019. arxiv.org/abs/1904.09925

Stand-Alone Self-Attention in Vision Models. Ramachandran et al., 2019. arxiv.org/abs/1906.05909

3/5

- some heads ignore content and attend to pixels at *fixed* shifts (confirming the theory), sliding a grid-like receptive field,

- some heads seem to use content-based attention -> expressive advantage over CNNs.

🍿 epfml.github.io/attention-cnn 4/5