𝗧𝗛𝗥𝗘𝗔𝗗: Can @BabylonHealth Be Trusted?

‘Bad Bot' (part 3): The final chapter in the @BadBotThreads...

1/37

https://twitter.com/BadBotThreads/status/1322484201011204098?s=20

Just to be clear, I don’t ‘hate’ @babylonhealth (hate is emotive & suggests a lack of objectivity). However I do not trust Babylon - & in my opinion - Babylon has not demonstrated the ethical or moral values expected of an #AI #HealthTech corporation.

2/37

https://twitter.com/DrMurphy11/status/1232404830657925120?s=20

It’s important to note that the ‘corporate’ failings of @babylonhealth are a consequence of decisions taken by a tiny minority of the senior leadership team - & are not in any way a reflection on Babylon’s employees or clinical services.

3/37

https://twitter.com/DrMurphy11/status/1446172127460212737?s=20

Safety concerns relating to Babylon’s AI Chatbot have been widely reported (see 👇). This thread will focus on other aspects of the Babylon story:

- The facade of trustworthiness

- False claims & vapourware

- Babylon's response to safety concerns

4/37

𝗧𝗵𝗲 𝗙𝗮𝗰𝗮𝗱𝗲 𝗼𝗳 𝗧𝗿𝘂𝘀𝘁𝘄𝗼𝗿𝘁𝗵𝗶𝗻𝗲𝘀𝘀

Trust* is essential to healthcare & also critical to the adoption of #AI technologies in our society.

*‘Appearing' trustworthy (a PR performance); is not the same as ‘being’ trustworthy (an organisational culture).

5/37

In my opinion, Babylon Health have focused on 'appearing' trustworthy, but have failed to demonstrate trustworthiness, which requires a culture of honesty, competence, & reliability.

How have Babylon Health appeared trustworthy? Let’s take a look…

6/37

hdsr.mitpress.mit.edu/pub/56lnenzj/r…

When Babylon launched their telehealth service in April 2014 – Ali Parsa gave a talk (sales pitch) entitled ‘Smart Healthcare’ at a #TEDMED event hosted by Dara Ó Briain at the Royal Albert Hall.

We trust #TEDMED & by association we trust Babylon.

7/37

In 2016, Babylon gained investment from DeepMind cofounders Demis Hassabis & Mustafa Suleyman. Their association increased the perceived 'trustworthiness' of Babylon's AI - even though they had nothing to do with its development. #TrustByAssociation

8/37

https://twitter.com/DrMurphy11/status/1410662314676830210?s=20

In 2017, Babylon partnered with the Bill & Melinda Gates Foundation to deliver affordable, accessible healthcare to the people of Rwanda, including “cutting edge AI symptom checking.”

It ‘appeared’ trustworthy, but in reality, Babylon’s AI could not be trusted…

9/37

In 2018, Babylon used the ‘facade’ of trustworthiness to pull off (IMO) the biggest #HealthTech scam since Theranos.

The #BabylonAITest event was held at @rcplondon & received extensive media coverage, with over 111 articles reaching a global audience of over 200 million.

10/37

Babylon claimed their “latest AI capabilities show that it is possible for anyone, irrespective of their geography, wealth or circumstances, to have free access to health advice that is on-par with top-rated practicing clinicians”

Others disagreed.

11/37

https://twitter.com/EricTopol/status/1012535126645968897?s=20

During the event, Babylon made a number of false & misleading claims regarding the capabilities of their AI technology. Please watch the short video summary in the embedded tweet.👇

12/37

https://twitter.com/DrMurphy11/status/1411572803887845376?s=20

False claims & vapourware demos may be acceptable in the ‘fake it till you make it’ Tech culture of Silicon Valley. But they have no place in ‘Health’ Tech – where misleading claims put patients at risk of harm. But that’s not the worst of it...

13/37

https://twitter.com/DrMurphy11/status/1069850077286592517?s=20

My real grievance with @Babylonhealth is that they were aware that the 'diagnostic' AI Chatbot was ‘dangerously’ flawed at the time of the #BabylonAITest event - and ‘𝗸𝗻𝗼𝘄𝗶𝗻𝗴𝗹𝘆’ put the public at risk of harm.

14/37

https://twitter.com/DrMurphy11/status/1012259904592203778?s=20

‘Move fast & break things’ may sound cool in Silicon Valley – but in the world of Healthcare – it’s just negligent.

15/37

https://twitter.com/DrMurphy11/status/1143221238720073731?s=20

If you have an interest (and a spare 20 minutes), then this video provides more detail on the issues described.👇

16/37

https://twitter.com/DrMurphy11/status/1411572807591469056?s=20

As an aside. You have to ask why doctors & other staff working at @babylonhealth didn’t feel able to speak-up? Or maybe they did speak-up, but were ignored by senior management. Sadly, as a consequence of Babylon’s #NDAs, we will never know…

17/37

https://twitter.com/DrMurphy11/status/1045366694284152832?s=20

Why did Babylon ‘move fast & fake it'?

Pressure from investors?

“The company says it plans to use the new capital to continue building out its AI capabilities, including offering diagnosis by AI (rather than a more simple triage functionality)...”

18/37

techcrunch.com/2017/04/25/bab…

Bizarrely, @Babylonhealth continue to promote AI vapourware.🙄

This is taken from their $Kuri SPAC pitch; “This feature is under active development, has not been commercialized & we cannot guarantee if/when the product will be delivered to members.”

19/37

https://twitter.com/DrMurphy11/status/1435333770987646983?s=20

So Babylon made mistakes – but no one’s perfect - & I believe that we shouldn’t be judged by our errors, but by our actions in response to any error.

Sadly, 𝗕𝗮𝗯𝘆𝗹𝗼𝗻'𝘀 𝗿𝗲𝘀𝗽𝗼𝗻𝘀𝗲 𝘁𝗼 𝘀𝗮𝗳𝗲𝘁𝘆 𝗰𝗼𝗻𝗰𝗲𝗿𝗻𝘀 has been shameful.

20/37

https://twitter.com/DrMurphy11/status/1041086418079100928?s=20

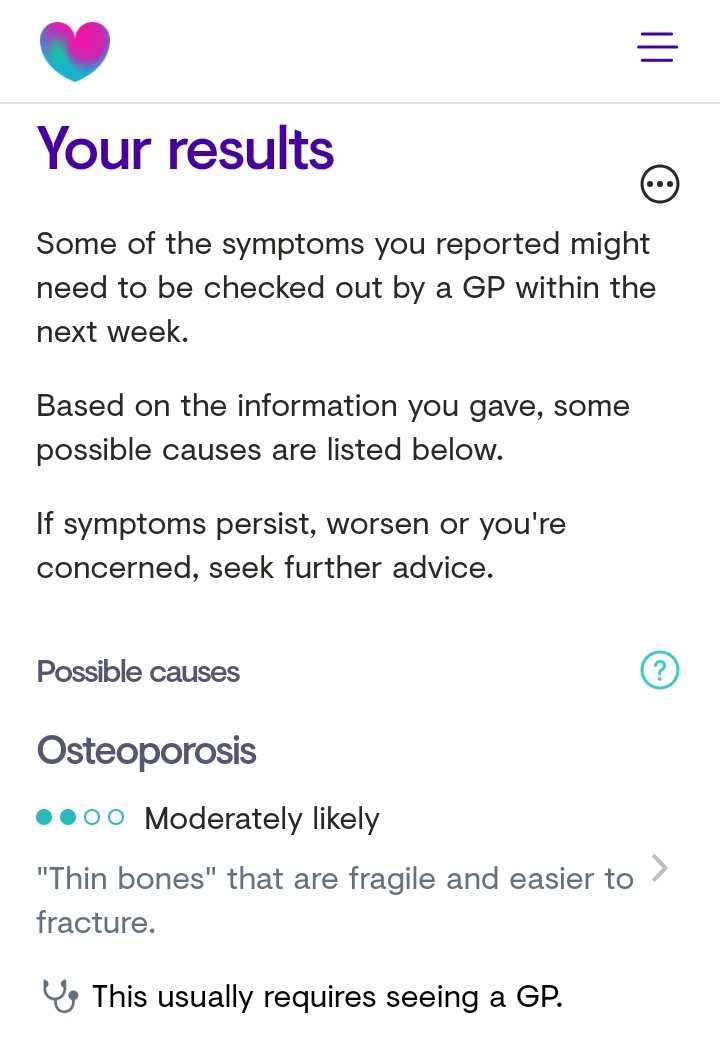

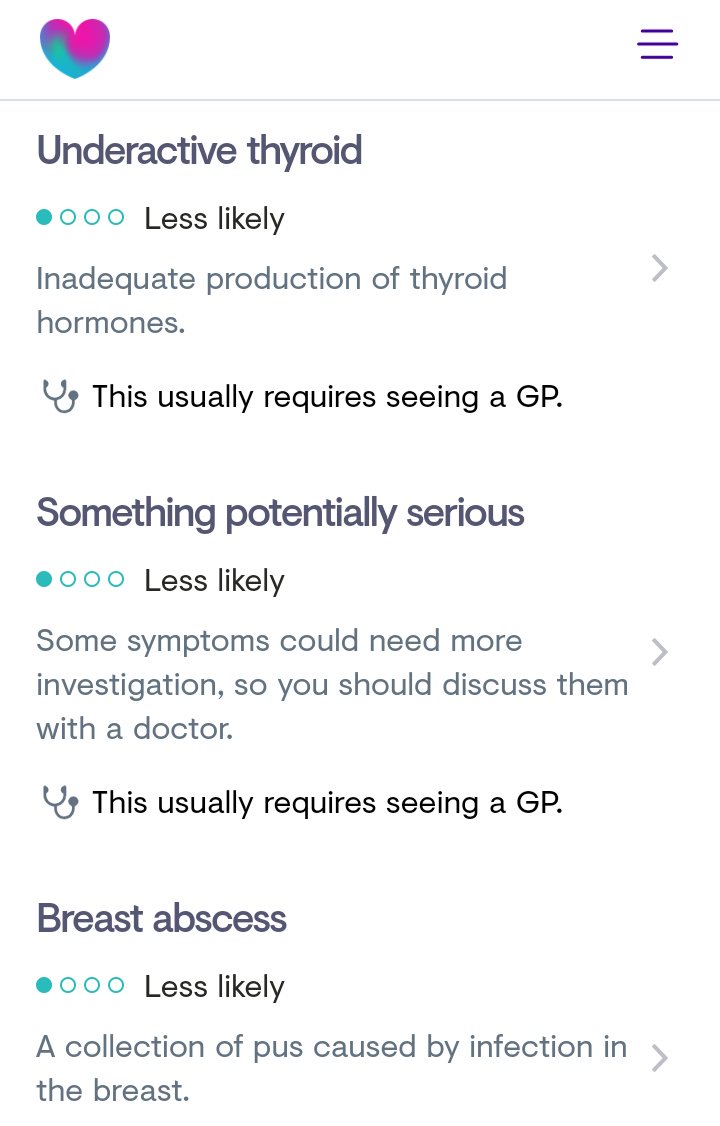

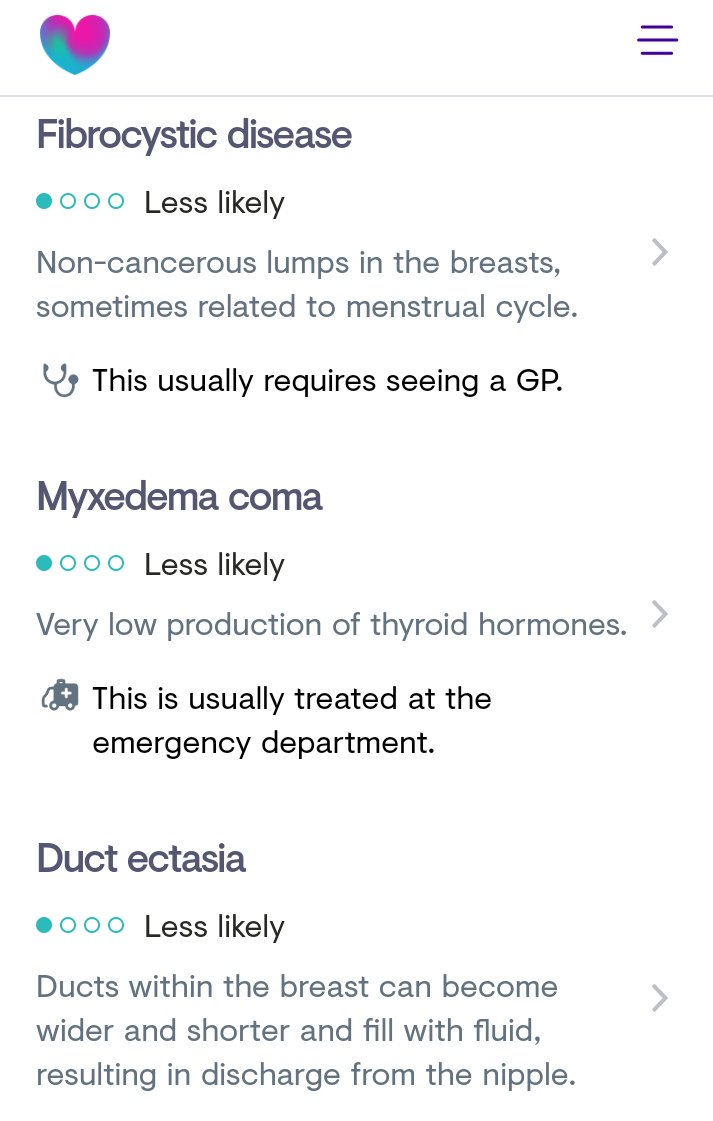

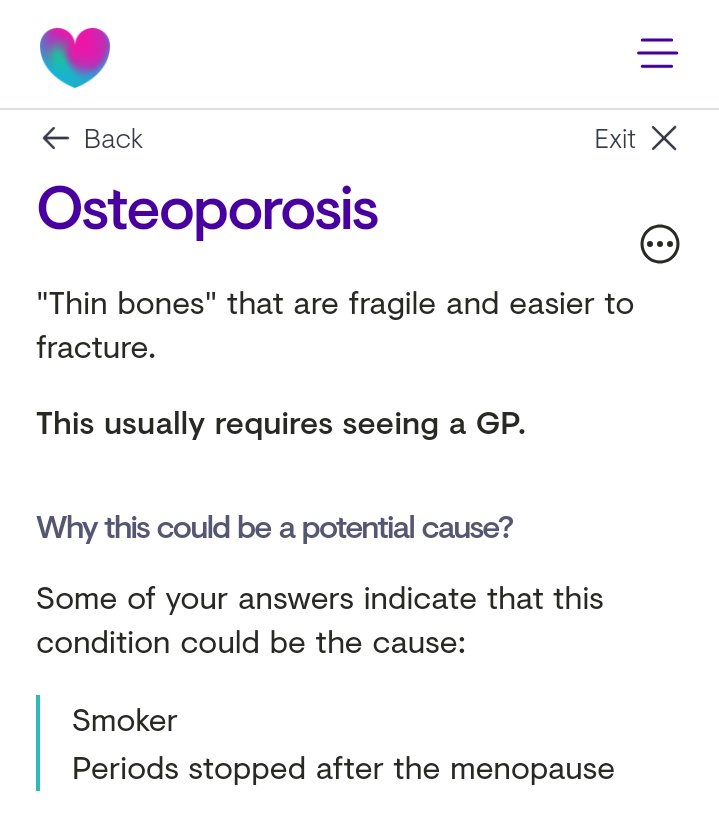

Instead of acknowledging & addressing safety issues, Babylon have been dismissive, responding with denial & attempting to silence &/or discredit any negative coverage.

Consequently, Babylon have been slow to rectify flaws in their AI chatbot.

21/37

https://twitter.com/HSJEditor/status/1006079295452794880?s=20

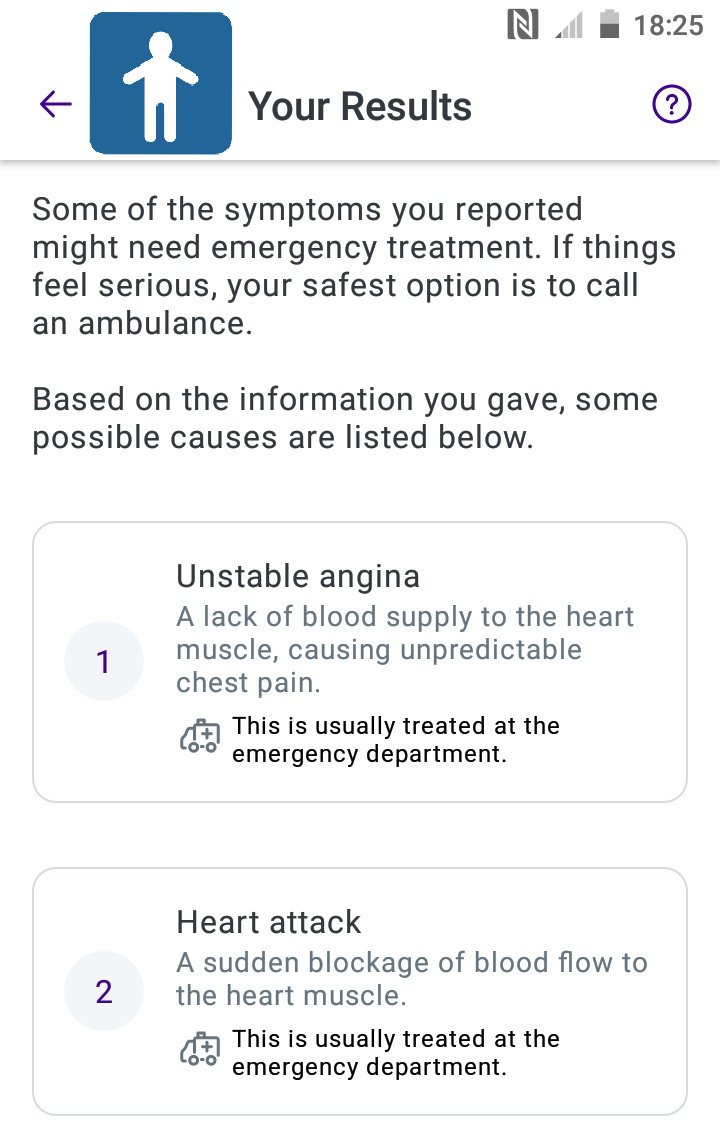

The dangerously flawed chatbot ‘cardiac’ triage algorithm (with the risk of a missed heart attack) was first noted in Jan 2017. Between Jan 2017 & Feb 2020, flaws in the AI chatbot ‘cardiac’ triage algorithm were identified on at least 28 occasions.

22/37

drmurphy11.tumblr.com/post/629764761…

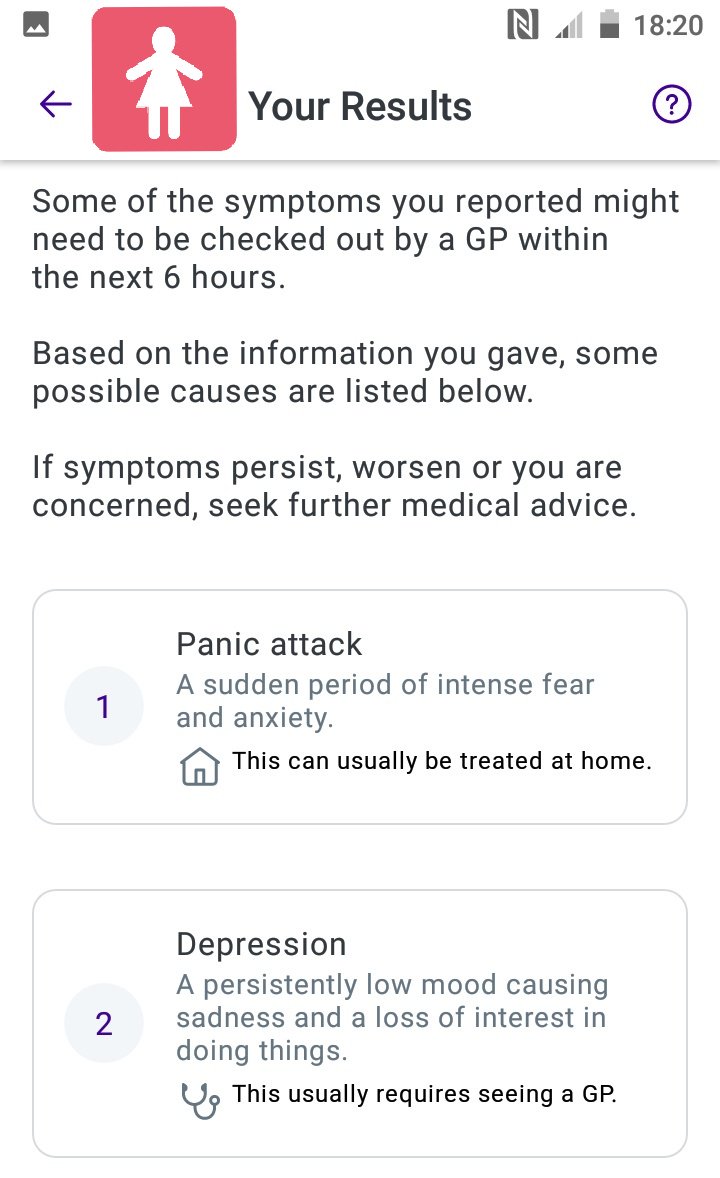

In Sep 2019, Babylon’s response to the identification of #genderbias within their AI cardiac triage algorithm was of particular concern…

23/37

https://twitter.com/DrMurphy11/status/1170774525987971076?s=20

Not only did Babylon deny there was a problem. 🤷♂️🤷

But they put a positive PR spin on it with…

24/37

https://twitter.com/DrMurphy11/status/1172831460077768705?s=20

…this @Babylonhealth sponsored article on #GenderBias appearing in the @standardnews ~2 weeks after Babylon was called out for #GenderBias in their #AI Chatbot algorithm.

Coincidence, or corporate damage limitation?

25/37

standard.co.uk/futurelondon/h…

Of further concern. Over 2 months later, they still hadn’t fixed the negligent #genderbias algorithm!!! #DeathByChatbot #GenderBias

26/37

https://twitter.com/DrMurphy11/status/1197589723100917760?s=20

In Feb 2020, Babylon & I publicly debated their AI safety record at the @RoySocMed & on @BBCNewsnight.

- I outlined the risk to patient safety.

- They called me a Troll.☹️

27/37

digitalhealth.net/2020/02/babylo…

Of note, my concerns regarding @babylonhealth have been acknowledged by @mhradevices...

“your concerns are all valid and ones that we share”

28/37

tcrn.ch/3sSJ0NX

In my opinion, the failings of Babylon’s AI technology are a consequence of weak clinical leadership & questionable corporate ethics…

An unethical #Corporation will produce unethical #AI.

29/37

https://twitter.com/DrMurphy11/status/1162973367999324161?s=20

Although Babylon hasn’t had success with their #AI technology, their #NHS @gpathand service has grown to become the largest (healthiest) GP practice in England.

Remarkably, 85% of #GPatHand patients are aged 20 - 39, compared to just 28% nationally!

30/37

https://twitter.com/DrMurphy11/status/1350851951467106305?s=20

Babylon @GPatHand was personally endorsed by @matthancock & there is little doubt that his unwavering Tiggerish enthusiasm helped facilitate Babylon’s UK growth, which in turn, increased their valuation...

31/37

https://twitter.com/DrMurphy11/status/1433499943050829828?s=20

Of note, I was reassured by @mhradevices & @carequalitycomm that their regulatory inaction was not in any way related to Matt Hancock’s association with Babylon.

The regulatory system is just not fit for purpose…

32/37

https://twitter.com/DrMurphy11/status/1124036784583790593?s=20

I wonder if Matt "there is no privatisation of the NHS" Hancock is aware that his NHS GP practice is due to float on the US stock market via a SPAC ($Kuri) merger next week…

33/37

https://twitter.com/DrMurphy11/status/1203688865413894148?s=20

It’s unfortunate that Hancock focused his efforts on Babylon’s disruptive care model; whilst ignoring Tech solutions that could both enhance & complement existing NHS services. If you @askmyGP, most patients don’t want video, they want choice.

34/37

https://twitter.com/DrMurphy11/status/1440229957578358786?s=20

The idea that healthcare is full of 'disruption' resistant luddites is just lazy rhetoric. Staff (& pts) welcome Tech that improves efficiency &/or safety.

We just don’t want Snake Oil & want the basics to work, before getting the shiny AI baubles.

35/37

https://twitter.com/DrMurphy11/status/1051373507031027714?s=20

Building ‘Trust’ in AI HealthTech shouldn’t be a PR exercise.

1. We need HealthTech corporations that demonstrate ‘Trustworthiness’ through Integrity & Evidence.

2. We need a regulatory system that demonstrates ‘Trustworthiness’ through its actions.

36/37

The 'potential' benefits of AI technology in healthcare are huge; but they won't be achieved if we 'move fast & break things'

A flawed/negligent algorithm can harm millions, without anyone noticing.

That's why validation is so critical.

37/37
