NO NO NO NO NO NO NO NO NO NO NO NO NO NO.

Don't do this - human rights defenders already have images and videos undermined - don't contribute to it by utilizing AI-generated imagery in this way. Yes, there will be ways to use AI-generated imagery for advocacy - this is not it.

https://twitter.com/Amnesty_Norge/status/1651879572944691201

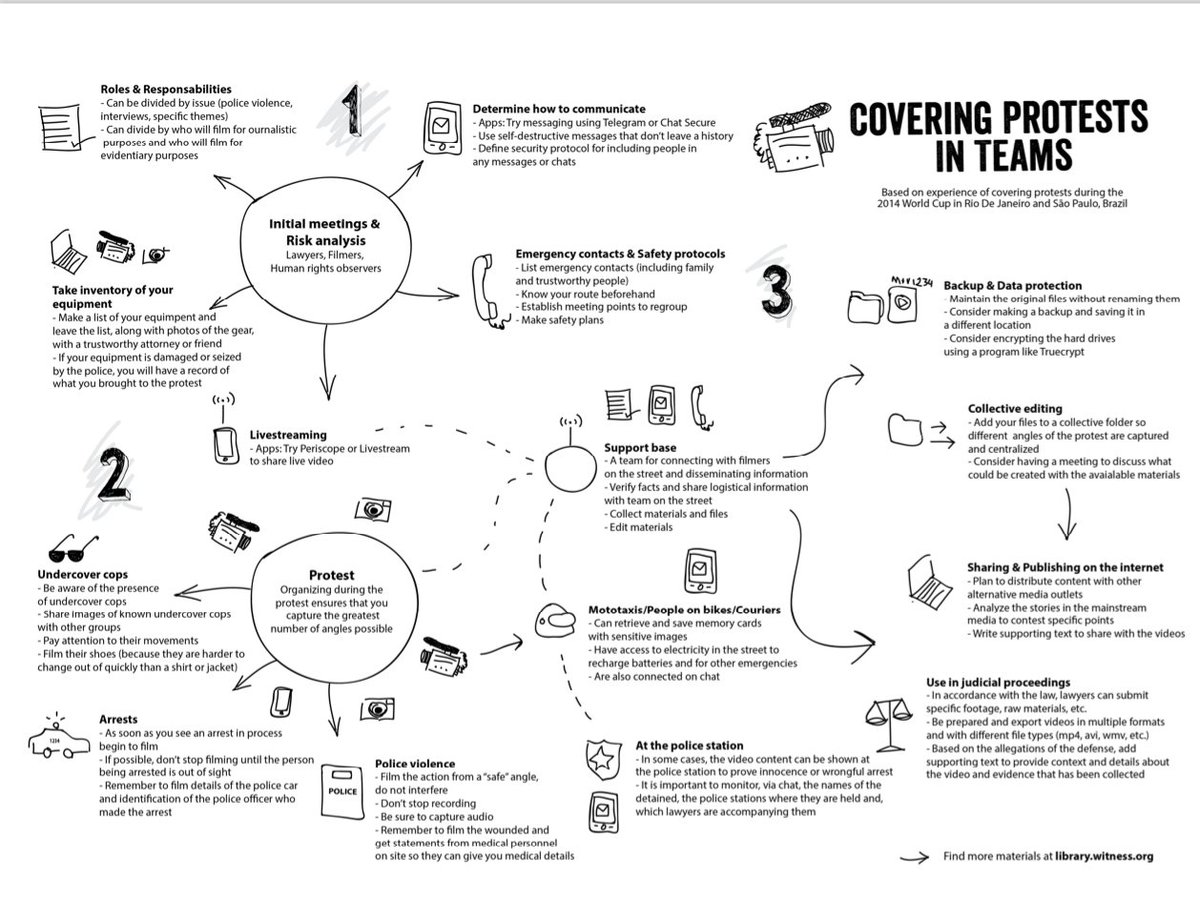

Activists we work with @witnessorg constantly defend the credibility of their pics/videos.

In our Prepare, Don't Panic work we talked to them (+ journalists) about AI-generated media.

A key fear? Undermining their evidence with claims that anything can be falsified.

What they wanted? - links pinned

It's not that there aren't ways to use AI-generated images/videos - we explored them in a recent workshop in Nairobi - but there's also the fundamental reality that #humanrights defenders can't be naive or compete with abusers in using AI imagery: we have more to lose.

A few things human rights defenders DID ask for:

1. Center people globally facing risk to decide how to handle harms/solutions in AI-generated media

2. Responsibility across models, tools, and distributors, not just hoping individuals spot fakes

3. Need access to detection wired.com/story/opinion-…

4. Provide good options for disclosure (that protect human rights) in a synthetic media world

niemanlab.org/2022/12/synthe…

5. Defend satire/grey areas cocreationstudio.mit.edu/just-joking-ac……

6. Build human rights into authenticity tools.

blog.witness.org/2020/05/authen…
