That means one thing: hack weekend 😃

Gonna try live-tweeting my progress in this thread.

Hopefully it increases my accountability and forces me to ship.

#HackWeekend

I know literally nothing about iOS development

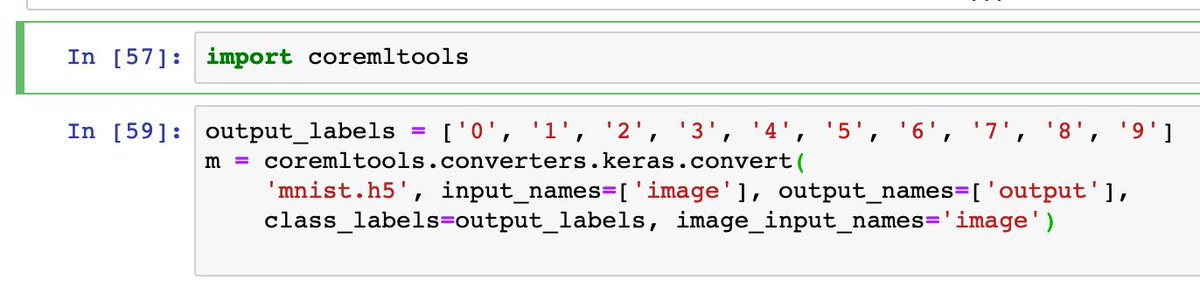

I have worked with GPT-2 before, but never with Core ML or the other model formats Swift supports.

openai.com/blog/better-la…

So far:

Ran into a weird issue where all my UI components were piled on top of each other when I ran the app in the simulator. Turned out I needed a stack view, Auto Layout, and constraints.

Can:

- grab the entered text and do stuff with it on button press
- send messages
- even show an alert if the text field is empty
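A minimal sketch of the empty-field check, pulled out as a pure function so it can be tested without UIKit. `validatedPrompt` is a hypothetical name, not from the original app. In a view controller you would pass it `textField.text` (a `String?`) inside the button's action and present a `UIAlertController` when it returns `nil`.

```swift
import Foundation

/// Hypothetical helper: returns the trimmed prompt, or nil when the field is
/// empty or whitespace-only (the case where the app should show an alert).
func validatedPrompt(_ text: String?) -> String? {
    // UITextField.text is optional, so treat nil the same as "empty".
    guard let trimmed = text?.trimmingCharacters(in: .whitespacesAndNewlines),
          !trimmed.isEmpty else {
        return nil
    }
    return trimmed
}
```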

1. Is dynamic text input length possible with how Core ML handles graph execution? If not, what's the best UX for forcing a fixed sequence length? Padding, probably.
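If the converted model only accepts a fixed input shape, padding (plus truncation for long prompts) is the usual workaround. A minimal sketch, assuming token IDs are `Int32` and a caller-chosen `padID`; GPT-2's tokenizer has no dedicated padding token, so reusing the end-of-text ID (50256) is a common choice. `padOrTruncate` is an illustrative name, not a Core ML API.

```swift
/// Hypothetical helper: force a tokenized prompt to the fixed sequence length
/// a static-shape model expects. Returns the padded IDs plus a mask where
/// 1 marks a real token and 0 marks padding.
func padOrTruncate(_ tokens: [Int32], to length: Int, padID: Int32) -> (ids: [Int32], mask: [Int32]) {
    precondition(length > 0, "sequence length must be positive")
    // Too long: keep only the most recent `length` tokens.
    let kept = Array(tokens.suffix(length))
    let padCount = length - kept.count
    // Too short: right-pad with padID.
    let ids = kept + Array(repeating: padID, count: padCount)
    let mask = Array(repeating: Int32(1), count: kept.count) + Array(repeating: Int32(0), count: padCount)
    return (ids: ids, mask: mask)
}

// Example: a 3-token prompt padded to length 5 with the end-of-text ID.
let example = padOrTruncate([11, 22, 33], to: 5, padID: 50256)
```

Keeping the tail of long prompts is a design choice: the most recent tokens matter most for next-token prediction. Because GPT-2's attention is causal, right padding doesn't change the outputs at real positions, but you then need to read the next-token logits at the last real token (index `realCount - 1`), not at the final padded slot.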

...

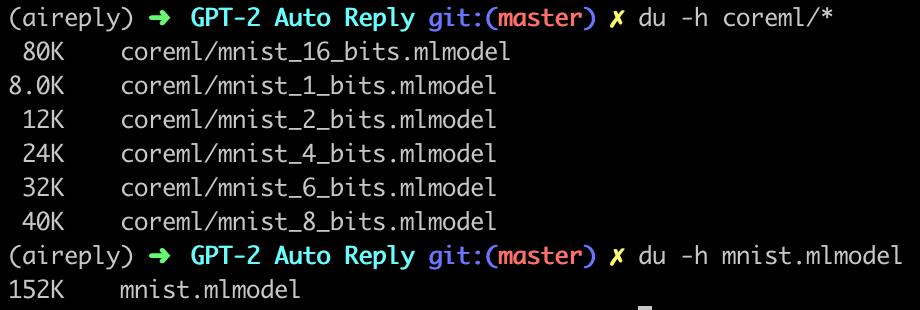

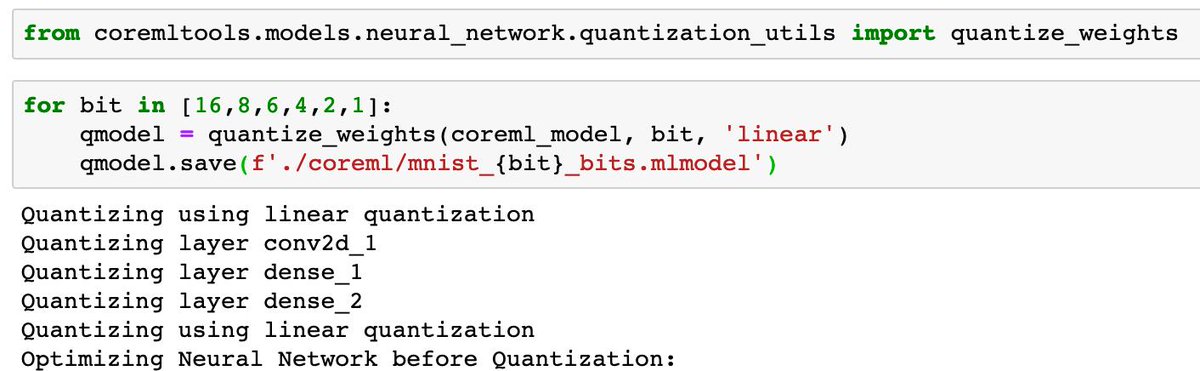

heartbeat.fritz.ai/reducing-corem…

If it doesn't work, I might look at TF Lite and see if that makes things any easier.

And then there's first-class ONNX support (an open standard for model serde, i.e. serialization/deserialization) in PyTorch: pytorch.org/docs/stable/on…

Back to the break

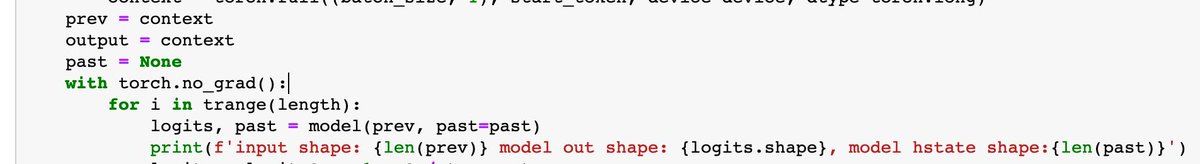

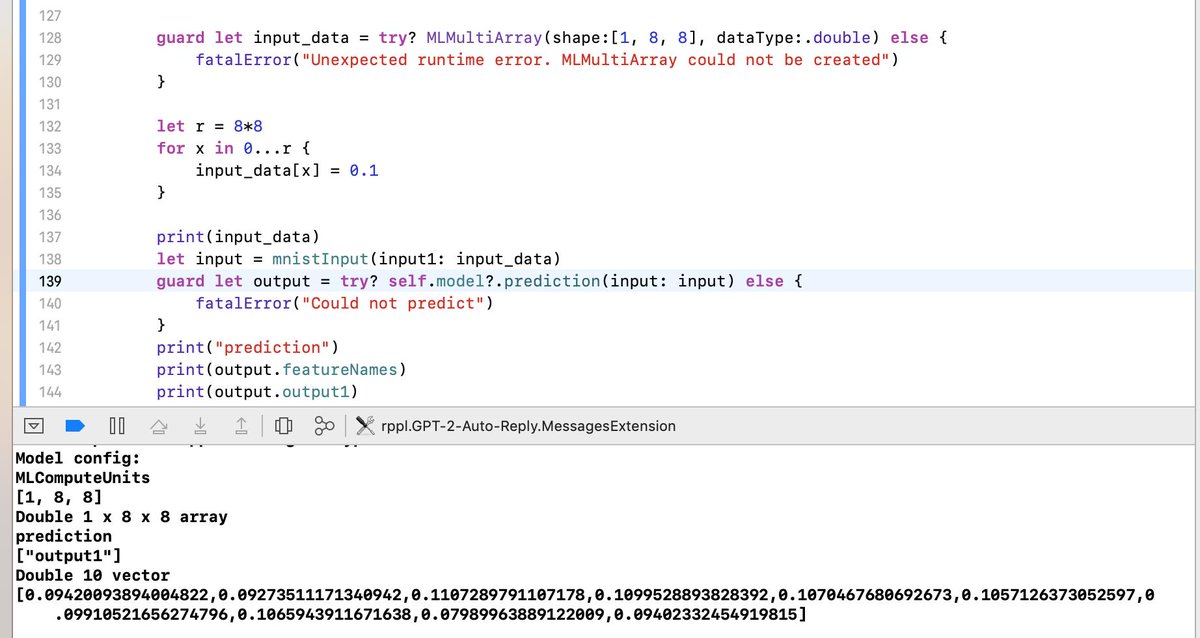

The fact that this seems to be the best way to create a vector in Core ML is worrying. It seems better if you're feeding images directly, but still, super limiting.

Hopefully Swift for TensorFlow can bridge the gap...

Core ML's arrays (MLMultiArray) do not look fun, but it should be possible; there might be better abstractions.

Definitely want to come back to on-device inference later, though. It seems possible, just challenging.

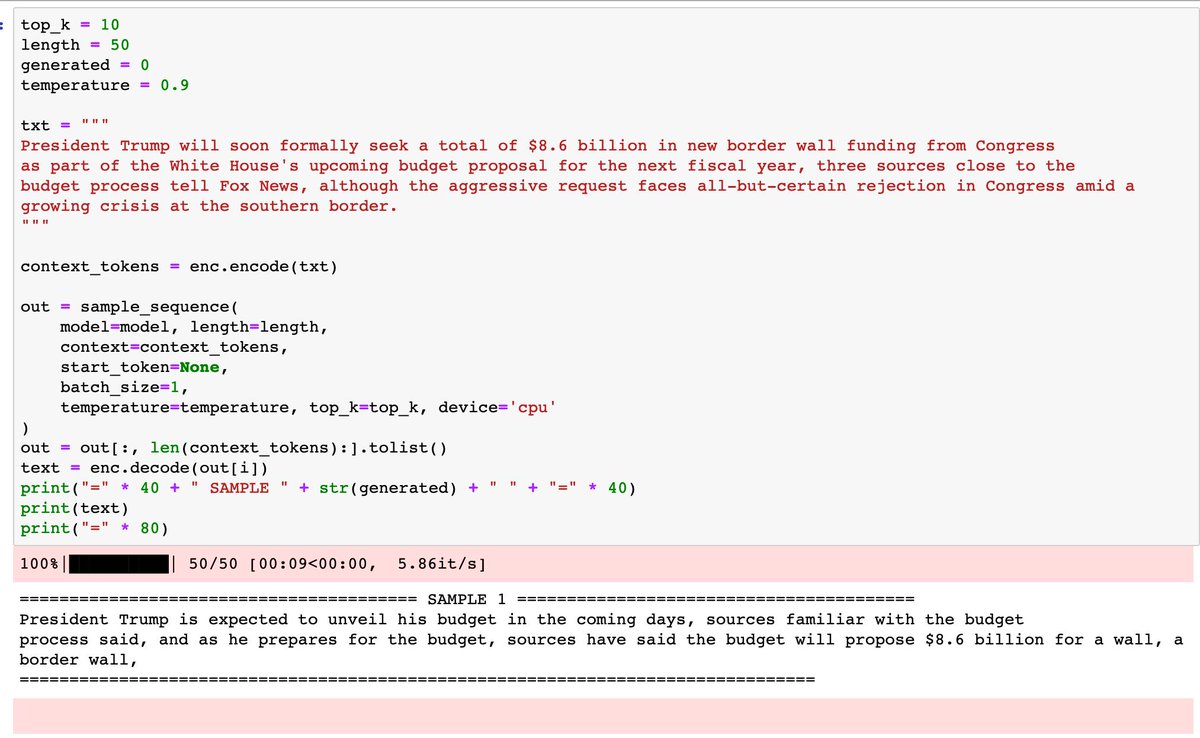

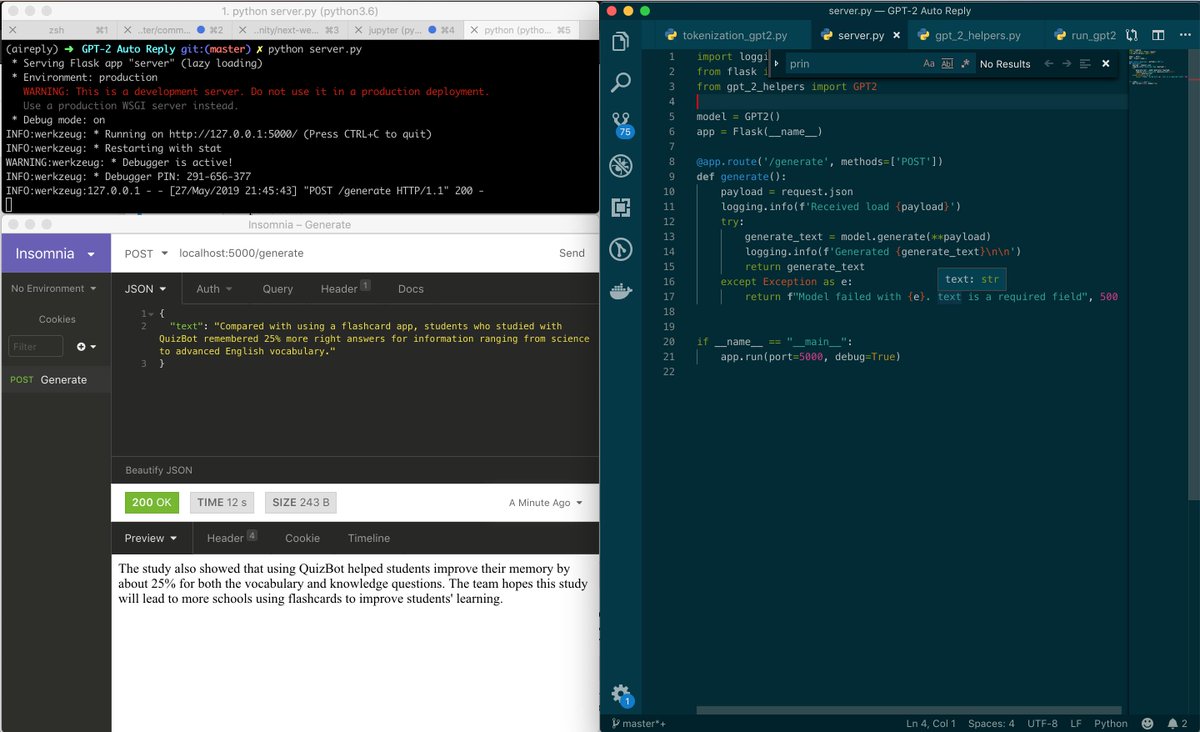

Still a lot to add (cleaning up generated output, smarter output length, user-defined temperature / top_k, on-device generation), but happy with the result. Will write a summary / reflection.
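Roughly how user-defined temperature / top_k could work once you have the logits for the next token: keep the k largest logits, divide them by the temperature, softmax, then sample. A minimal sketch in plain Swift; `TokenProb`, `topKDistribution`, and `sampleToken` are hypothetical names, and the logits would come from whatever runs the forward pass (server or on-device).

```swift
import Foundation

/// Hypothetical container for one candidate token and its probability.
struct TokenProb {
    let index: Int
    let prob: Double
}

/// Top-k filtering followed by a temperature-scaled softmax.
func topKDistribution(logits: [Double], k: Int, temperature: Double) -> [TokenProb] {
    precondition(!logits.isEmpty && k > 0 && temperature > 0)
    // Sort indices by logit (descending, ties broken by lower index) and keep k.
    let top = logits.indices
        .sorted { logits[$0] != logits[$1] ? logits[$0] > logits[$1] : $0 < $1 }
        .prefix(k)
    // Lower temperature sharpens the distribution, higher flattens it.
    let scaled = top.map { logits[$0] / temperature }
    // Subtract the max before exp() for numerical stability.
    let maxScaled = scaled.max()!
    let weights = scaled.map { exp($0 - maxScaled) }
    let total = weights.reduce(0, +)
    return zip(top, weights).map { TokenProb(index: $0.0, prob: $0.1 / total) }
}

/// Inverse-CDF sampling given a uniform draw u in [0, 1).
func sampleToken(from dist: [TokenProb], u: Double) -> Int {
    var cumulative = 0.0
    for candidate in dist {
        cumulative += candidate.prob
        if u < cumulative { return candidate.index }
    }
    // Floating-point round-off can leave cumulative slightly below 1.
    return dist[dist.count - 1].index
}

// Example: k = 2 keeps logits 2.0 and 1.0 (indices 0 and 1).
let exampleDist = topKDistribution(logits: [2.0, 1.0, 0.5, -1.0], k: 2, temperature: 1.0)
let nextToken = sampleToken(from: exampleDist, u: Double.random(in: 0..<1))
```

Passing the uniform draw in as `u` keeps the sampling step deterministic and easy to test; in the app you'd pass `Double.random(in: 0..<1)` as above.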

This was fun! Definitely will do #HackWeekend again 🔥

Quite like the way Swift feels; never felt "limited" by the language. You can definitely feel some Objective-C legacy in iOS dev: NSNumber (really NS* in general) seems a bit unwieldy, but overall...

A few things were non-obvious to me, e.g. how do you do programmatic UI updates? I'm guessing view components can be created / modified by ID? Didn't look too hard, but it's obvs a solved problem.

There are so many ways to do everything: manually dealing with graph sessions sucks, serde is fractured, and some things play nice with Keras while others don't.

This may be an obvious point ("of course you need to port the code between devices").

It's really powerful to be able to minimize the time between cutting-edge research and consumer use.