(thread)

Why do computers need to be shown 1000 coffee cups to understand what a cup is, whereas a human child can understand the concept after being shown a single cup?

Because human brains can do unsupervised contextualization.

1/N

A human recognizes "features in a context".

Wait - aren't ML models able to recognize contexts as well?

Perhaps - but when they do, they treat them as features.
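A toy Python sketch of this distinction, under loudly stated assumptions: the function names, the lookup table, the context names and the "watering can" reading are all hypothetical. They do not come from the thread or from any real ML library. In the first function the context is just one more input item. In the second, the context selects how the same features are read.

```python
# Hypothetical toy contrast: none of these names come from the thread or a real library.


def context_as_feature(features: set[str], context: str) -> set[str]:
    """'Context as a feature': the context is just one more item in the input.

    A downstream classifier would still have to learn what each
    feature/context combination means from examples.
    """
    return features | {f"context={context}"}


# Hypothetical table: the same features mean different things in different contexts.
INTERPRETATIONS = {
    "kitchen": {frozenset({"handle", "cylinder", "concavity"}): "coffee mug"},
    "garden": {frozenset({"handle", "cylinder", "concavity"}): "watering can"},
}


def features_in_context(features: set[str], context: str) -> str:
    """'Features in a context': the context selects how the features are read."""
    return INTERPRETATIONS.get(context, {}).get(frozenset(features), "unknown")


mug_features = {"handle", "cylinder", "concavity"}
print(sorted(context_as_feature(mug_features, "kitchen")))
# ['concavity', 'context=kitchen', 'cylinder', 'handle']
print(features_in_context(mug_features, "kitchen"))  # coffee mug
print(features_in_context(mug_features, "garden"))   # watering can
```

In the first version the context sits alongside the features as one more input. In the second, it decides which reading of the same features applies.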

Let me explain.

Also, this is a short thread. Papers here: luca-dellanna.com/research

I explain the neurological reasons for this statement on page 2 here: luca-dellanna.com/wp-content/upl…, but let's see why the distinction matters.

Let's see them one by one.

For example: a handle, a cylinder and a concavity are recognized as an empty coffee mug.

For example…

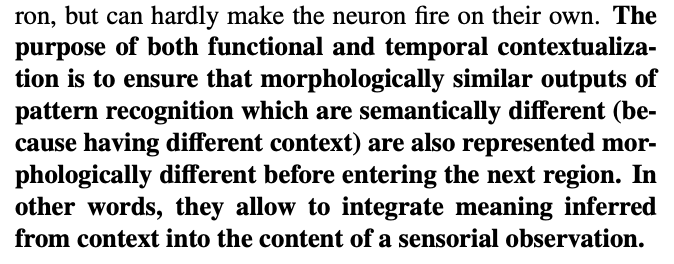

Some of these contexts are contradictory, but that's okay. At this stage, the brain region lacks the information to discriminate between them.

Now, the magic is in the following step.

The output of pattern recognition is "Alan's dirty cup".

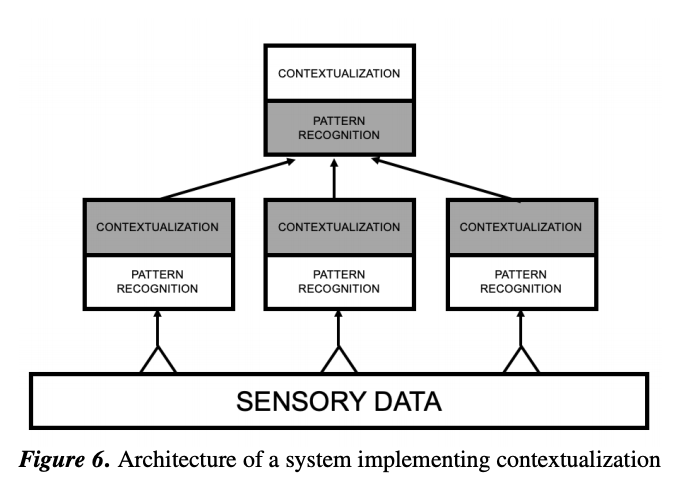

As perception moves up the hierarchy, it loses detail about features and it acquires meaning.
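A minimal Python sketch of the steps above (features, then possibly contradictory candidate contexts, then a meaningful label), assuming a hand-written table of which objects each feature fits and assuming the owner and cleanliness arrive from some other source. The table, the function names and the `owner`/`is_clean` parameters are hypothetical illustrations, not the neural mechanism described in the linked paper.

```python
from collections import Counter

# Hypothetical table: which objects each low-level feature is compatible with.
COMPATIBLE = {
    "handle": {"cup", "jug"},
    "cylinder": {"cup", "glass"},
    "concavity": {"cup", "glass", "bowl"},
}


def propose_contexts(features: list[str]) -> Counter:
    """Lower level: every feature votes for each object it fits.

    Contradictory candidates are kept, because this level lacks the
    information needed to discriminate between them.
    """
    votes: Counter = Counter()
    for feature in features:
        votes.update(COMPATIBLE.get(feature, set()))
    return votes


def interpret(votes: Counter, owner: str, is_clean: bool) -> str:
    """Higher level: resolve the candidates using extra (hypothetical) inputs.

    Only a meaningful label is returned; the individual features are dropped.
    """
    if not votes:
        return "unknown"
    best, _ = votes.most_common(1)[0]
    state = "clean" if is_clean else "dirty"
    return f"{owner}'s {state} {best}"


votes = propose_contexts(["handle", "cylinder", "concavity"])
print(votes["cup"], votes["glass"])      # 3 2
print(interpret(votes, "Alan", False))   # Alan's dirty cup
```

The lower level keeps "glass", "jug" and "bowl" alongside "cup"; the higher level passes up only "Alan's dirty cup", with no trace of the handle, cylinder or concavity.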

Q: What if context is an emergent property of a deeply connected network being trained for a very long time?

The human 🧠 uses patterns of column activations to represent the former and patterns of neuron activations to represent the latter.

Current ML systems do not have that