amazon.com/Parallel-Distr…

en.wikipedia.org/wiki/Friedrich…

That’s evolutionarily fine because propositional inference is mostly useless in nature (without technology) anyway.

How can a depth-10 circuit compute this?

Taking “Parallel Distributed Processing” seriously: only by considering all possibilities simultaneously.

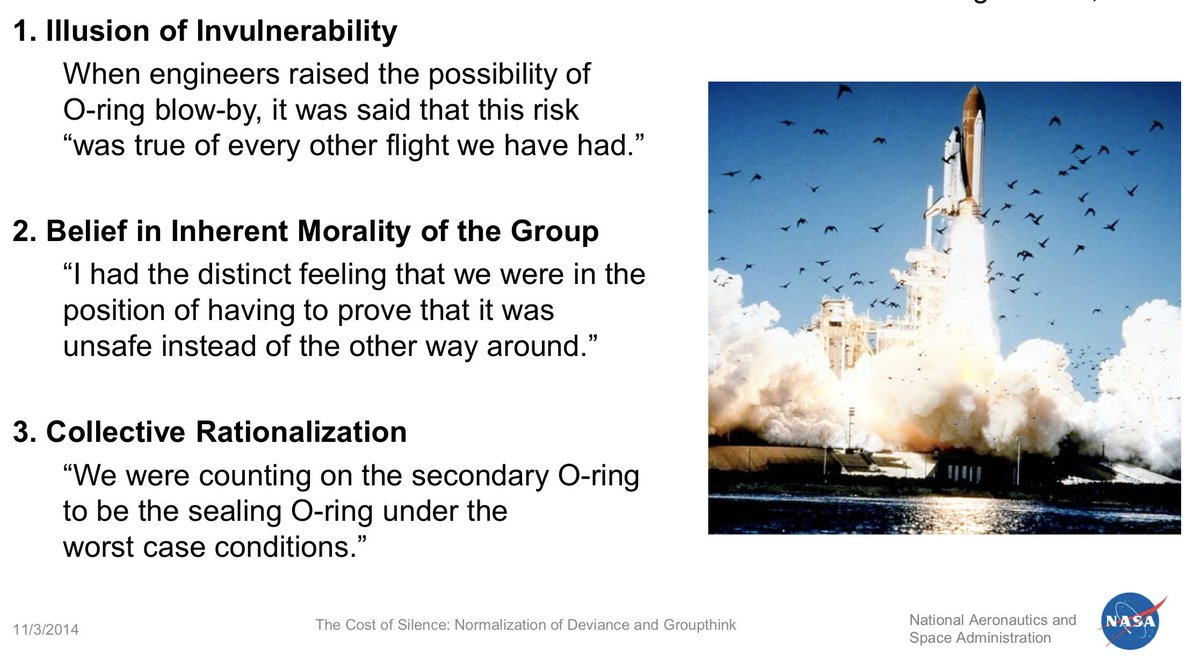

@vervaeke_john argues, persuasively, that this is THE central issue for cognitive science: ipsi.utoronto.ca/sdis/Relevance…

Did you listen to @vervaeke_john’s talk about that? (I recommended it an hour ago.)

Or you could read the explanation in this TRUE story about how I became a character in a Ken Wilber novel, because Heidegger. meaningness.com/metablog/ken-w…