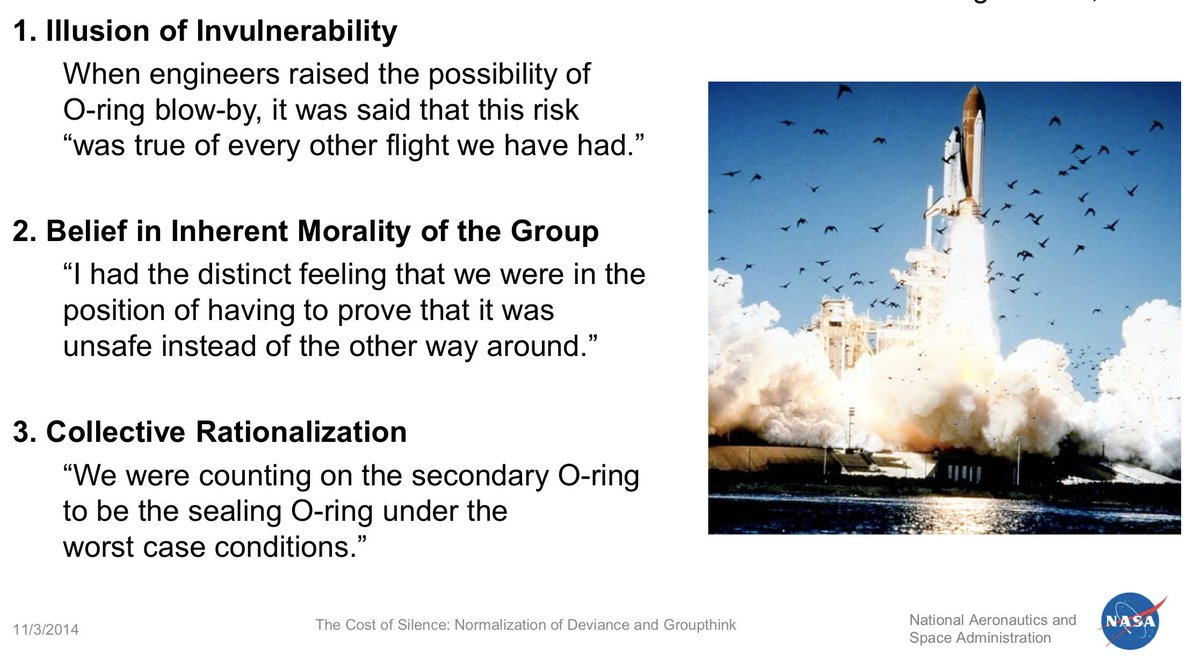

sma.nasa.gov/docs/default-s…

gnssn.iaea.org/NSNI/SC/TM_ITO…

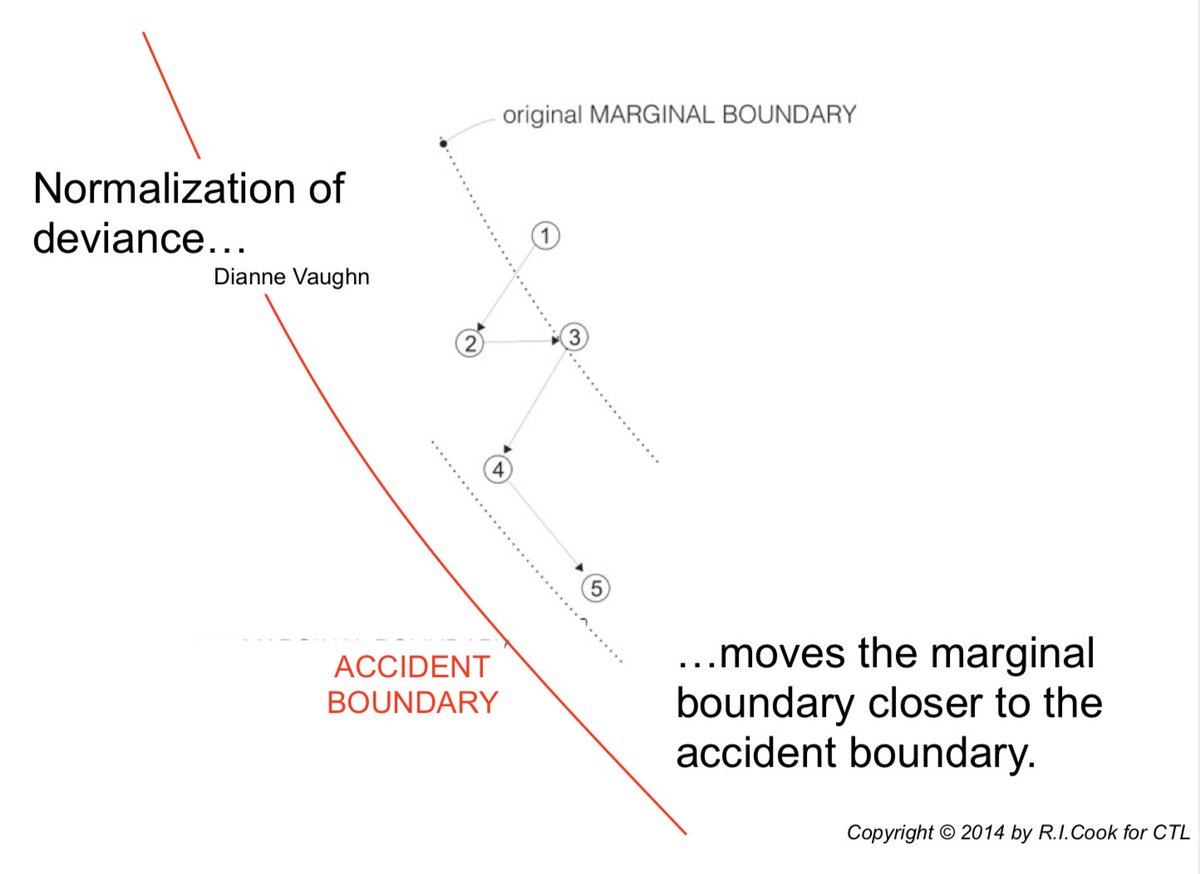

how.complexsystems.fail

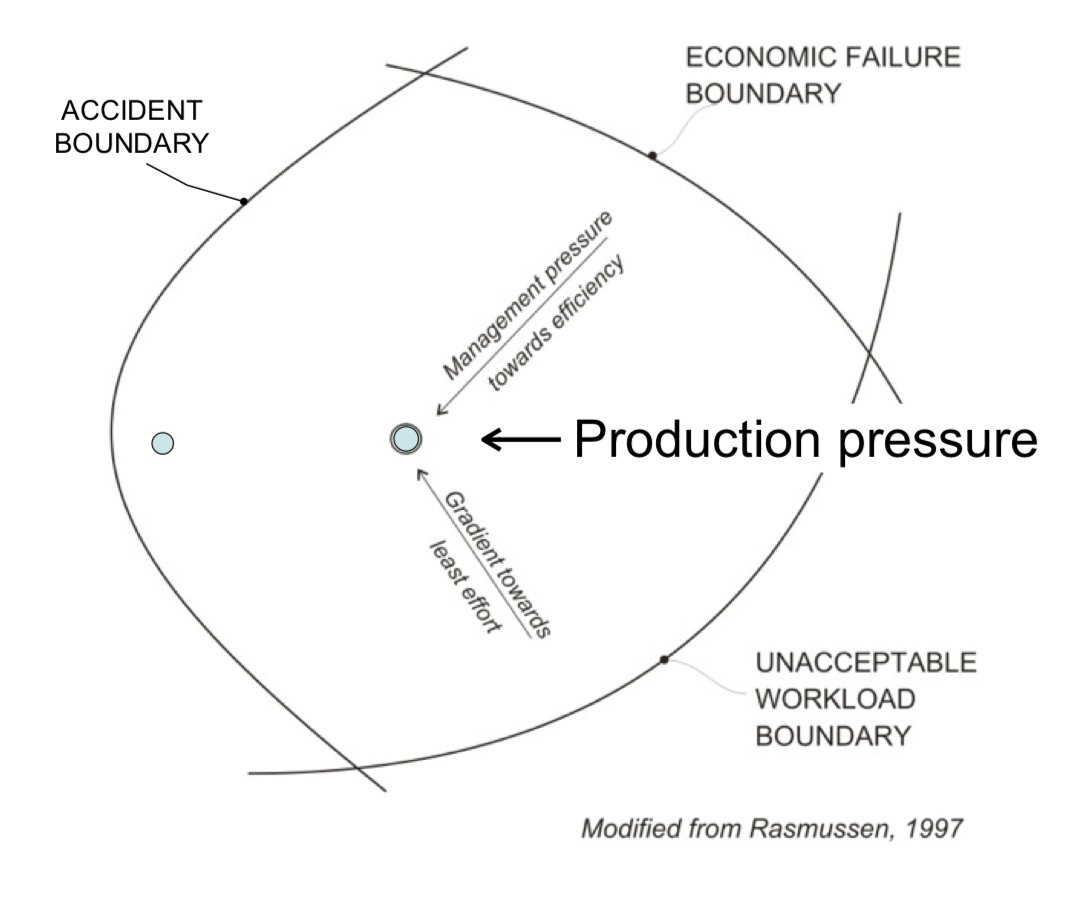

It necessarily depends on circumrational understanding: how the system interacts with its non-systematic environment.

how.complexsystems.fail

ncbi.nlm.nih.gov/pubmed/25742063

The scientific grant process aims to avoid funding bad work, but doesn’t, and it significantly decreases the amount of good work.

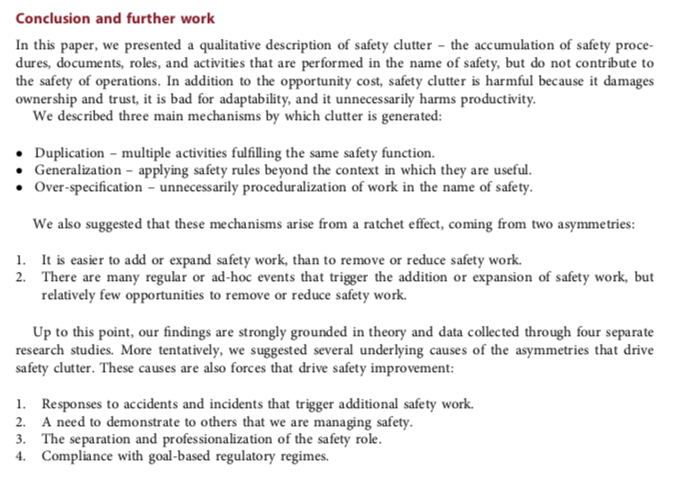

tandfonline.com/doi/abs/10.108…