The #EthereumMerge was a success! But who has time to celebrate? There's still YEARS of work to be done!

In this part 2, we cover the years ahead, looking well into Ethereum’s (ever-changing) roadmap.

FYI, Part 1 is below for a refresher.

https://twitter.com/mt_1466/status/1569334917426814977?s=20&t=4XMpIG9PTfrpf5DbdlppAA

There is no official roadmap for #Ethereum; its direction rests on rough community consensus.

However, a high-level, noncontroversial plan stretching into 2023-24 is generally regarded as the agreed-upon path for the project.

A more technical roadmap with the progress of each step filled in was updated by @VitalikButerin in December 2021.

Specific developments are tied to future upgrades and are always subject to change.

Consider the Merge 💯 complete!

The roadmap stages

• The Surge: introducing sharding, ~2023.

• The Verge: Optimise for storage and state size.

• The Purge: Reduce congestion and improve storage.

• The Splurge: Miscellaneous optimizations.

These stages are not sequential and are being worked on concurrently.

$ETH's roadmap is constantly evolving; however, the flagship post-Merge upgrades are the introduction of shard chains to increase the network's transaction throughput, improvements to rollups, and better management of data storage.

@TimBeiko

The Surge, then, focuses on improving scalability at Ethereum's data availability (DA) layer through data #sharding.

Sharding is the partitioning of a database into subsections. Rather than building layers on top (#L2s), sharding scales horizontally, without a hierarchy.

Ethereum will be split into different shards, each one independently processing transactions.
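To make "partitioning a database" concrete, here is a minimal Python sketch of hash-based sharding. It assumes a toy transaction format of `(sender, payload)` pairs and a hypothetical `NUM_SHARDS = 4`. It is a generic illustration of horizontal partitioning, not Ethereum's actual shard design. Each transaction lands in exactly one shard, and each shard's list can be processed independently of the others.

```python
import hashlib
from collections import defaultdict

NUM_SHARDS = 4  # hypothetical shard count, for illustration only

Tx = tuple[str, str]  # (sender, payload): a deliberately tiny stand-in for a transaction

def shard_for(sender: str, num_shards: int = NUM_SHARDS) -> int:
    """Deterministically map a sender to a shard by hashing its name."""
    digest = hashlib.sha256(sender.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards

def partition(txs: list[Tx], num_shards: int = NUM_SHARDS) -> dict[int, list[Tx]]:
    """Split one transaction list into per-shard lists; each shard handles only its own."""
    shards: dict[int, list[Tx]] = defaultdict(list)
    for tx in txs:
        shards[shard_for(tx[0], num_shards)].append(tx)
    return dict(shards)

txs = [("alice", "swap"), ("bob", "mint"), ("carol", "transfer"), ("alice", "vote")]
for shard_id, shard_txs in sorted(partition(txs).items()):
    print(f"shard {shard_id}: {shard_txs}")
```

The shard is derived from a hash, so every node computes the same assignment without having to coordinate.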

Sharding is often referred to as an L1 scaling solution because it's implemented at the base-level protocol of Ethereum itself. Rollups, by contrast, are L2s and present less systemic risk.

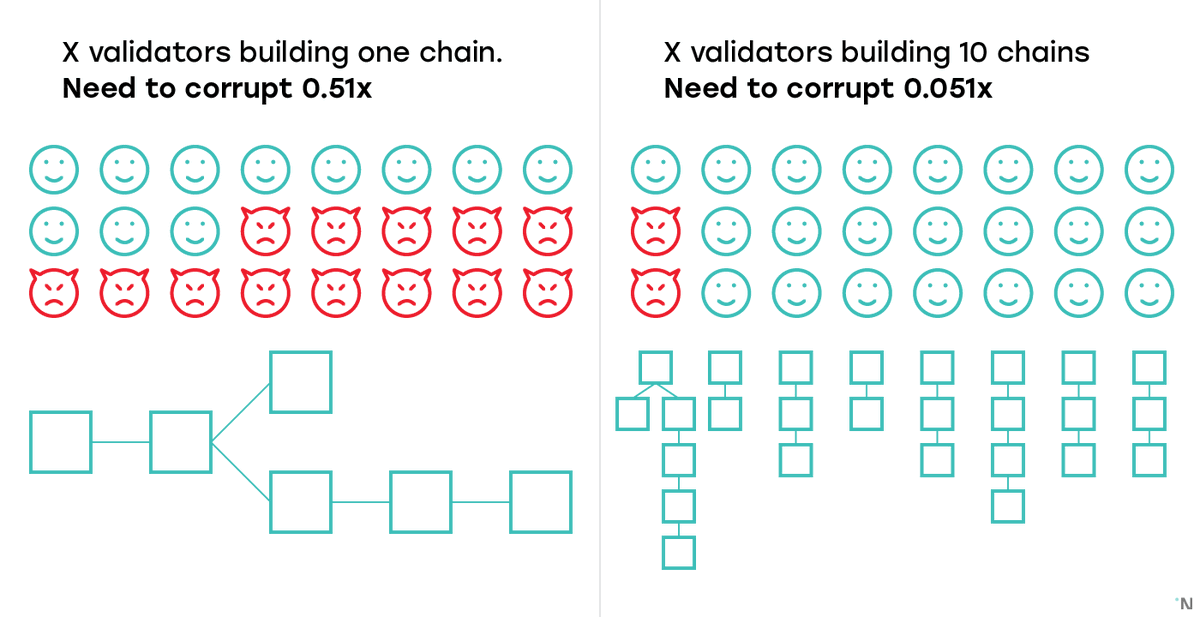

In this sharding model, validators are assigned to specific shards and only process and validate transactions in that shard. In Ethereum's planned sharding model, that assignment is random.

Every shard has a pseudo-randomly chosen committee of validators. This makes it (nearly) impossible for an attacker controlling less than ⅓ of all validators to attack a single shard.

(Randomness is hard and complicated, but here's an image of #RANDAO and #VDF.) @dannyryan
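Committee selection can be sketched as "shuffle with a shared seed, then deal validators out." This is a hedged sketch with two substitutions. Ethereum's beacon chain uses a swap-or-not shuffle seeded from the RANDAO mix, whereas this example uses Python's `random.Random`, which is not cryptographically secure. The helper `assign_committees` and its parameters are also hypothetical.

```python
import random

def assign_committees(validators: list[int], num_shards: int, seed: int) -> list[list[int]]:
    """Shuffle validators with a shared seed, then deal them into one committee per shard."""
    shuffled = validators[:]  # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)
    # Deal round-robin so committee sizes differ by at most one.
    return [shuffled[i::num_shards] for i in range(num_shards)]

committees = assign_committees(list(range(1_000)), num_shards=8, seed=42)
print([len(c) for c in committees])  # → [125, 125, 125, 125, 125, 125, 125, 125]
```

Any node that knows the seed recomputes the same committees. Validators cannot pick which shard they land on unless they can predict or bias the seed.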

This means validators are only responsible for processing and validating txs in their assigned shards, not across the entire network.

The randomness of the validator selection process ensures it’s (nearly) impossible for a nefarious actor to successfully attack the network.
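A binomial model gives a rough estimate of how "nearly impossible" this is. The sketch rests on two assumptions. First, committee members are drawn independently at random from a validator set much larger than the committee. Second, an attacker must hold at least ⅔ of a committee to control it. The ⅔ threshold mirrors BFT-style supermajority voting and is an assumption here, as are the committee sizes tried.

```python
from math import comb

def capture_probability(committee_size: int, attacker_share: float) -> float:
    """Chance an attacker holding `attacker_share` of all validators gets >= 2/3 of one committee."""
    n, p = committee_size, attacker_share
    threshold = -(-2 * n // 3)  # ceil(2n/3) using integer arithmetic
    # Sum the binomial tail: probability of drawing `threshold` or more attacker seats.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(threshold, n + 1))

for size in (4, 16, 64, 128):
    print(size, capture_probability(size, 1 / 3))
```

The probability falls off exponentially as committees grow. Large, randomly sampled committees therefore make single-shard takeovers impractical for an attacker below ⅓.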

Shards will be divided among nodes so that every individual node is doing less work.

But collectively, all of the necessary work is getting done, and quicker. More than one node will process each individual data unit, but no single node has to process all of the data anymore.
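A small sketch can check the property "each node does less, but every piece is covered more than once." The round-robin scheme and the numbers below (64 data chunks, 16 nodes, 2 copies per chunk) are hypothetical choices for illustration, not Ethereum parameters.

```python
def assign_chunks(num_chunks: int, num_nodes: int, replication: int) -> dict[int, set[int]]:
    """Assign every chunk to `replication` distinct nodes; return each node's chunk set."""
    if not 1 <= replication <= num_nodes:
        raise ValueError("replication must be between 1 and num_nodes")
    load: dict[int, set[int]] = {node: set() for node in range(num_nodes)}
    for chunk in range(num_chunks):
        # Consecutive node ids (mod num_nodes) are distinct because replication <= num_nodes.
        for r in range(replication):
            load[(chunk + r) % num_nodes].add(chunk)
    return load

load = assign_chunks(num_chunks=64, num_nodes=16, replication=2)
copies = {c: sum(c in chunks for chunks in load.values()) for c in range(64)}
print(max(len(chunks) for chunks in load.values()), "of 64 chunks per node")  # → 8 of 64 chunks per node
print(min(copies.values()), "copies of every chunk")  # → 2 copies of every chunk
```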

Ethereum developers are looking to implement #Danksharding (named after Ethereum researcher Dankrad Feist), which aims to improve the efficiency and cost of L2 rollups.

This is because the bottleneck for rollup scalability is data availability capacity rather than execution capacity.

This will give L2s more space to store the chain’s data and offer additional data capacity for rollups.

In the danksharding model, shards will serve as data storage “buckets” for new network data storage demands from rollups.

This enables tremendous scalability gains on the rollup execution layer.

@JackNiewold

Just as significant, shards will also help avoid putting overly onerous demands on full nodes, allowing the network to maintain decentralization.

How do we get to sharding, though?

Rolling out a 100% complete version of danksharding is incredibly complex and will likely take 2-3+ years.

Because of this, intermediary options are being discussed, including EIP-4488 and EIP-4844 (proto-danksharding).

@apolynya

EIP-4488 is the simplest and quickest way to improve rollups and drive down costs. However, it is also currently receiving the least attention.

So, what is it?

EIP-4488 attempts to reduce rollup costs (while mitigating storage bloat) through two primary factors:

1) Reduce calldata cost from 16 gas/byte to 3 gas/byte

2) A calldata limit of 1 MB per block, plus an extra 300 bytes per tx

This could reduce rollup costs by ~80%, and it could ship in just a few months!
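The arithmetic behind these two rules is easy to check. This sketch makes three assumptions: every calldata byte is nonzero (today a nonzero byte costs 16 gas and a zero byte costs 4), calldata dominates a rollup's L1 costs, and "1 MB" means 1,048,576 bytes. The batch size and transaction count are hypothetical.

```python
OLD_GAS_PER_BYTE = 16             # current cost of a nonzero calldata byte
NEW_GAS_PER_BYTE = 3              # EIP-4488's proposed cost per calldata byte
BASE_BLOCK_CALLDATA = 1_048_576   # "1 MB", read here as 2**20 bytes (assumption)
PER_TX_STIPEND = 300              # extra calldata bytes allowed per transaction

def calldata_gas(num_bytes: int, gas_per_byte: int) -> int:
    """Gas to post `num_bytes` of calldata at a flat per-byte price."""
    return num_bytes * gas_per_byte

def max_block_calldata(tx_count: int) -> int:
    """Per-block calldata cap: base limit plus a stipend for each transaction."""
    return BASE_BLOCK_CALLDATA + PER_TX_STIPEND * tx_count

batch = 100_000  # bytes of rollup batch data (hypothetical)
old = calldata_gas(batch, OLD_GAS_PER_BYTE)
new = calldata_gas(batch, NEW_GAS_PER_BYTE)
print(old, new, f"{1 - new / old:.2%} cheaper")  # → 1600000 300000 81.25% cheaper
print(max_block_calldata(200))                   # → 1108576
```

Under those assumptions, the data portion gets 1 − 3/16 ≈ 81% cheaper, which is in line with the ~80% figure. The per-block cap bounds how much extra history the cheaper calldata can add.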

EIP-4844 (Proto-danksharding or PDS)

Proto-danksharding (PDS) is an alternative to EIP-4488 but is still a temporary stepping stone to the ultimate goal of “full” danksharding. However, even PDS is quite complex.

@dankrad

Rather than using calldata (stored permanently on-chain), under PDS rollups could post their bundles in a new, cheaper “blob” transaction type whose data is pruned after ~1 month.

eip4844.com
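The key difference from calldata is retention. Calldata stays in history permanently, while blobs can be dropped once a window has passed. Below is a toy sketch of node-side pruning. It assumes 12-second slots (as on post-Merge Ethereum) and a 30-day window to mirror the thread's "~1 month." The `BlobStore` class is hypothetical, and the real retention period is a protocol parameter.

```python
SECONDS_PER_SLOT = 12
RETENTION_DAYS = 30  # assumption mirroring "~1 month"
RETENTION_SLOTS = RETENTION_DAYS * 24 * 60 * 60 // SECONDS_PER_SLOT  # 216,000 slots

class BlobStore:
    """Hypothetical node-side store that forgets blobs older than a retention window."""

    def __init__(self, retention_slots: int = RETENTION_SLOTS) -> None:
        self.retention_slots = retention_slots
        self.blobs: dict[int, list[bytes]] = {}  # slot -> blobs included in that slot

    def add(self, slot: int, blob: bytes) -> None:
        self.blobs.setdefault(slot, []).append(blob)

    def prune(self, current_slot: int) -> None:
        """Delete every slot that has fallen outside the retention window."""
        cutoff = current_slot - self.retention_slots
        for slot in [s for s in self.blobs if s < cutoff]:
            del self.blobs[slot]

store = BlobStore()
store.add(0, b"old rollup batch")
store.add(100, b"newer rollup batch")
store.prune(current_slot=216_050)
print(sorted(store.blobs))  # → [100]
```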

Rollup transactions would have their own “channel,” operating through a data blob market that uses its own fee structure & floating gas limits (their own EIP-1559 mechanism!)

This means that even with heightened demand and activity from DeFi or NFTs, data costs won't go up for rollups.

This creates two different gas markets - one for general computation and one specifically for data availability (DA), making the overall economic model more efficient than it was previously.
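The "two gas markets" idea can be sketched as two independent instances of an EIP-1559-style base-fee rule, where the base fee moves by at most 1/8 per block toward a gas target. The targets, starting fees and demand below are hypothetical, and blob usage is counted in whole blobs for simplicity. Note that the final EIP-4844 prices blob gas with an exponential function of accumulated excess blob gas rather than this exact update rule.

```python
class FeeMarket:
    """EIP-1559-style base fee that moves toward a per-block usage target."""

    DENOMINATOR = 8  # caps each block's change at 1/8 (12.5%), as in EIP-1559

    def __init__(self, base_fee: int, target: int) -> None:
        self.base_fee = base_fee
        self.target = target

    def update(self, used: int) -> int:
        """Adjust the base fee after a block that consumed `used` units."""
        if used > self.target:
            delta = self.base_fee * (used - self.target) // self.target // self.DENOMINATOR
            self.base_fee += max(delta, 1)
        elif used < self.target:
            delta = self.base_fee * (self.target - used) // self.target // self.DENOMINATOR
            self.base_fee -= delta
        return self.base_fee

execution = FeeMarket(base_fee=1_000, target=15_000_000)
blobs = FeeMarket(base_fee=1_000, target=3)  # hypothetical target of 3 blobs per block

for _ in range(5):
    execution.update(used=30_000_000)  # DeFi/NFT spike: execution blocks run full
    blobs.update(used=3)               # rollup data demand stays at target
print(execution.base_fee, blobs.base_fee)  # → 1800 1000
```

The two markets share no state, so a surge in execution demand lifts only the execution base fee. The blob base fee moves only with blob demand.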

Data blobs are an entirely new transaction format, and only the blob’s hash can be accessed via a new opcode.

This guarantees the data content will never be accessed by the #EVM, reducing the gas cost of posting the data compared with calldata.

@jon_charb
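Here is a sketch of what "only the hash is visible" means. In EIP-4844, the EVM-visible value is a "versioned hash": a version byte (0x01) followed by the last 31 bytes of the SHA-256 of the blob's KZG commitment. A blob holds 4096 field elements of 32 bytes each. Computing a real KZG commitment needs a dedicated library, so this example substitutes a plain SHA-256 of the blob as a stand-in commitment. That substitution is an assumption for illustration only.

```python
import hashlib

VERSIONED_HASH_VERSION_KZG = b"\x01"
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32

def versioned_hash(commitment: bytes) -> bytes:
    """Version byte + last 31 bytes of sha256(commitment): the 32-byte handle the EVM sees."""
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]

blob = bytes(FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT)  # an all-zero 128 KiB blob
stand_in_commitment = hashlib.sha256(blob).digest()  # ASSUMPTION: real code uses a KZG commitment
handle = versioned_hash(stand_in_commitment)
print(len(blob), len(handle), handle[0])  # → 131072 32 1
```

A contract receives only the 32-byte handle. The 131,072 bytes of blob data are carried outside the EVM and never loaded into it.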

And that's a wrap for now!

If you want to learn more about what the future for #ETH holds, including the Verge, Purge, Splurge, #PBS, and more, check out the full article!

cryptoeq.io/articles/now-w…
