"Training Machines to Learn the Way Humans Do: an Alternative to #Backpropagation"

Today's SFI Seminar by Sanjukta Krishnagopal

(@UCBerkeley & @UCLA)

Starting now — follow this 🧵 for highlights:

"When we learn something new, we look for relationships with things we know already."

"I don't just forget Calculus because I learned something else."

"We automatically know what a 'cat-dog' would look like, if it were to exist."

"We learn by training on very few examples."

1, 2) "[#MachineLearning] is fundamentally different from the way humans learn things."

3) Re: #FeedForward #NeuralNetworks

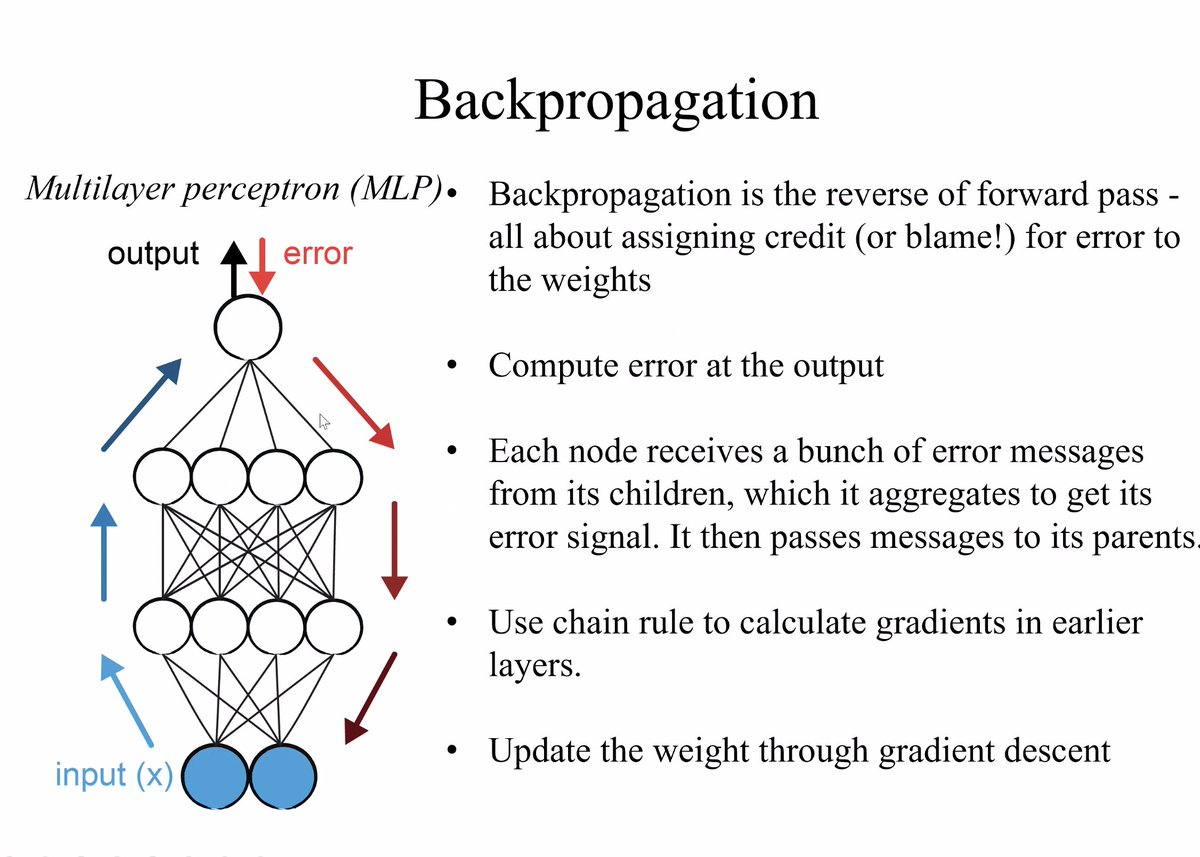

"You choose some loss function...maybe I'm learning the wrong weights. So I define some goal and then I want to learn these weights, these thetas."

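To make the loss-and-thetas step concrete, here is a minimal sketch (an illustration, not code from the talk). It uses a single linear neuron with weights θ = (w, b), takes mean squared error as the chosen loss, and applies plain gradient descent. The synthetic target y = 3x - 1 and the helper name `train_linear_neuron` are hypothetical.

```python
import random

def train_linear_neuron(data, lr=0.1, epochs=200):
    """Learn theta = (w, b) for y approx w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of L(w, b) = (1/n) * sum((w*x + b - y)**2)
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the loss
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical training data drawn from y = 3x - 1
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(50)]
data = [(x, 3.0 * x - 1.0) for x in xs]
w, b = train_linear_neuron(data)
print(f"w = {w:.3f}, b = {b:.3f}")
```

A different goal (for example a classification loss) would change only the gradient lines. The "learn the thetas by descending the loss" loop stays the same.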
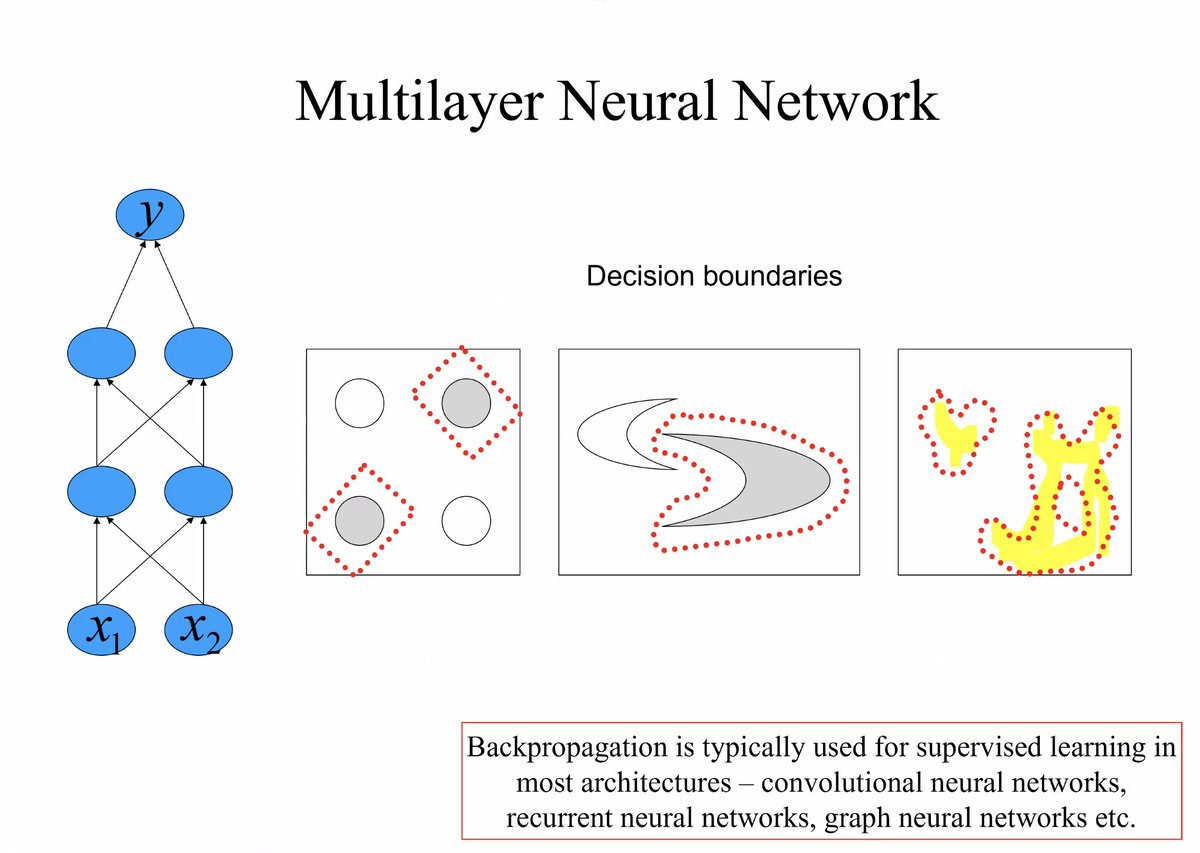

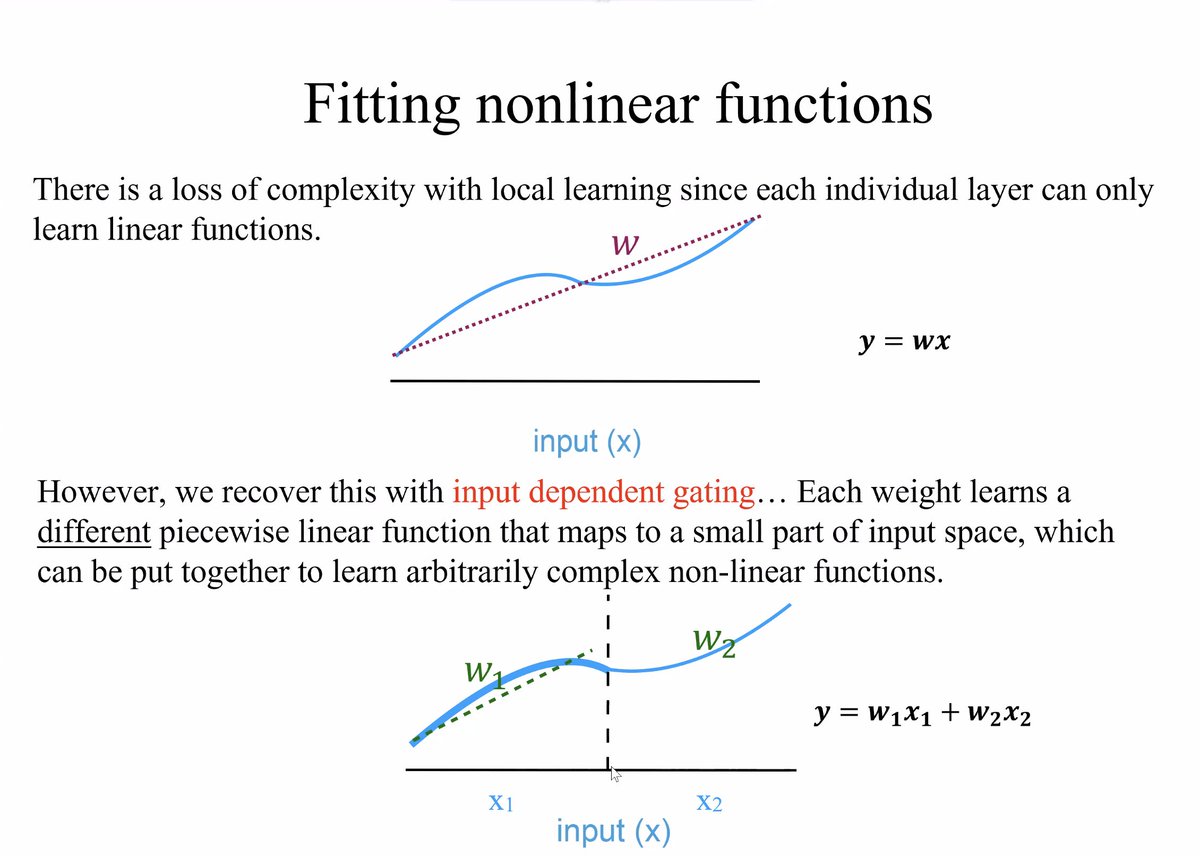

"The reason that one-layer #networks don't really work is that they can only learn linear functions. With multilayer neural networks, you can learn decision boundaries through #backpropagation...so it's a fundamental part of how we train machines, these days."

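A minimal sketch of the one-layer vs. multilayer point, using the classic XOR problem. XOR is our choice of example, not the speaker's. The helpers `train_linear` and `train_mlp` are hypothetical, and the network size, learning rate and epoch count are assumptions. The best linear fit to XOR predicts 0.5 for every input. A small tanh network trained with backpropagation, where the output error is sent backward through the hidden layer, can fit it better.

```python
import math
import random

# XOR targets: no straight line separates the 1s from the 0s.
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def mse(predict):
    """Mean squared error of a predictor over the four XOR points."""
    return sum((predict(x) - y) ** 2 for x, y in XOR) / len(XOR)

def train_linear(lr=0.1, epochs=5000):
    """One linear layer, y = w0*x0 + w1*x1 + b, fitted by full-batch gradient descent."""
    w0 = w1 = b = 0.0
    for _ in range(epochs):
        g0 = g1 = gb = 0.0
        for (x0, x1), y in XOR:
            err = w0 * x0 + w1 * x1 + b - y
            g0, g1, gb = g0 + err * x0, g1 + err * x1, gb + err
        w0, w1, b = w0 - lr * g0 / 4, w1 - lr * g1 / 4, b - lr * gb / 4
    return lambda x: w0 * x[0] + w1 * x[1] + b

def train_mlp(hidden=8, lr=0.1, epochs=5000, seed=1):
    """One tanh hidden layer plus a linear output, trained with backpropagation."""
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    c = [rng.uniform(-1, 1) for _ in range(hidden)]
    v = [rng.uniform(-1, 1) for _ in range(hidden)]
    d = 0.0

    def forward(x):
        h = [math.tanh(W[j][0] * x[0] + W[j][1] * x[1] + c[j]) for j in range(hidden)]
        return h, sum(v[j] * h[j] for j in range(hidden)) + d

    n = len(XOR)
    for _ in range(epochs):
        gW = [[0.0, 0.0] for _ in range(hidden)]
        gc, gv, gd = [0.0] * hidden, [0.0] * hidden, 0.0
        for x, y in XOR:
            h, out = forward(x)
            err = out - y  # dLoss/dout for loss 0.5 * err**2
            gd += err
            for j in range(hidden):
                gv[j] += err * h[j]
                dz = err * v[j] * (1 - h[j] ** 2)  # error passed backward through tanh
                gW[j][0] += dz * x[0]
                gW[j][1] += dz * x[1]
                gc[j] += dz
        for j in range(hidden):
            v[j] -= lr * gv[j] / n
            c[j] -= lr * gc[j] / n
            W[j][0] -= lr * gW[j][0] / n
            W[j][1] -= lr * gW[j][1] / n
        d -= lr * gd / n
    return lambda x: forward(x)[1]

linear, mlp = train_linear(), train_mlp()
print("linear MSE:", round(mse(linear), 3))  # 0.25: the best line predicts 0.5 everywhere
print("MLP MSE:", round(mse(mlp), 3))
```

The backward pass in `train_mlp` is the step the local-learning alternative tries to avoid. Every hidden weight update has to wait for the output error to come back.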
"The #brain learns [instead] by local #learning — instead of the error getting fed back through backpropagation, each #neuron does some kind of linear regression. It [consequently] works very fast. We have experimental evidence that the brain does something like this."

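The local alternative can be sketched as follows. For illustration only, we assume that every neuron receives the same target and runs an online least-squares update (the delta rule, or LMS) on its own inputs. No error travels backward, and each layer updates during the single forward sweep. `LocalNeuron` and `train_local_layers` are hypothetical names. Without gating, this network can only represent linear functions, so the sketch shows only how local the update is, not the full model from the talk.

```python
import random

class LocalNeuron:
    """A neuron that runs its own online linear regression (delta rule / LMS).

    It sees only its input vector and a target broadcast to every neuron;
    no error is passed back from downstream neurons.
    """

    def __init__(self, n_inputs, lr, rng):
        self.w = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs + 1)]  # last entry: bias
        self.lr = lr

    def predict(self, x):
        xb = list(x) + [1.0]
        return sum(wi * xi for wi, xi in zip(self.w, xb))

    def update(self, x, target):
        """One local step on 0.5 * (target - prediction)**2; returns the prediction."""
        xb = list(x) + [1.0]
        pred = sum(wi * xi for wi, xi in zip(self.w, xb))
        err = target - pred
        self.w = [wi + self.lr * err * xi for wi, xi in zip(self.w, xb)]
        return pred

def train_local_layers(samples, widths=(4, 4), lr=0.02, seed=0):
    """Stack layers; every neuron regresses the same target in one forward sweep."""
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    layers = []
    for width in widths:
        layers.append([LocalNeuron(n_in, lr, rng) for _ in range(width)])
        n_in = width
    for x, y in samples:
        h = x
        for layer in layers:
            # Each neuron learns from its own input and the broadcast target,
            # then passes its prediction forward. There is no backward pass.
            h = [neuron.update(h, y) for neuron in layer]
    return layers

# Hypothetical data: a noise-free linear target y = 2*x0 - x1 + 0.5
rng = random.Random(42)
samples = []
for _ in range(5000):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    samples.append((x, 2 * x[0] - x[1] + 0.5))
layers = train_local_layers(samples)
print([round(wi, 3) for wi in layers[0][0].w])
```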
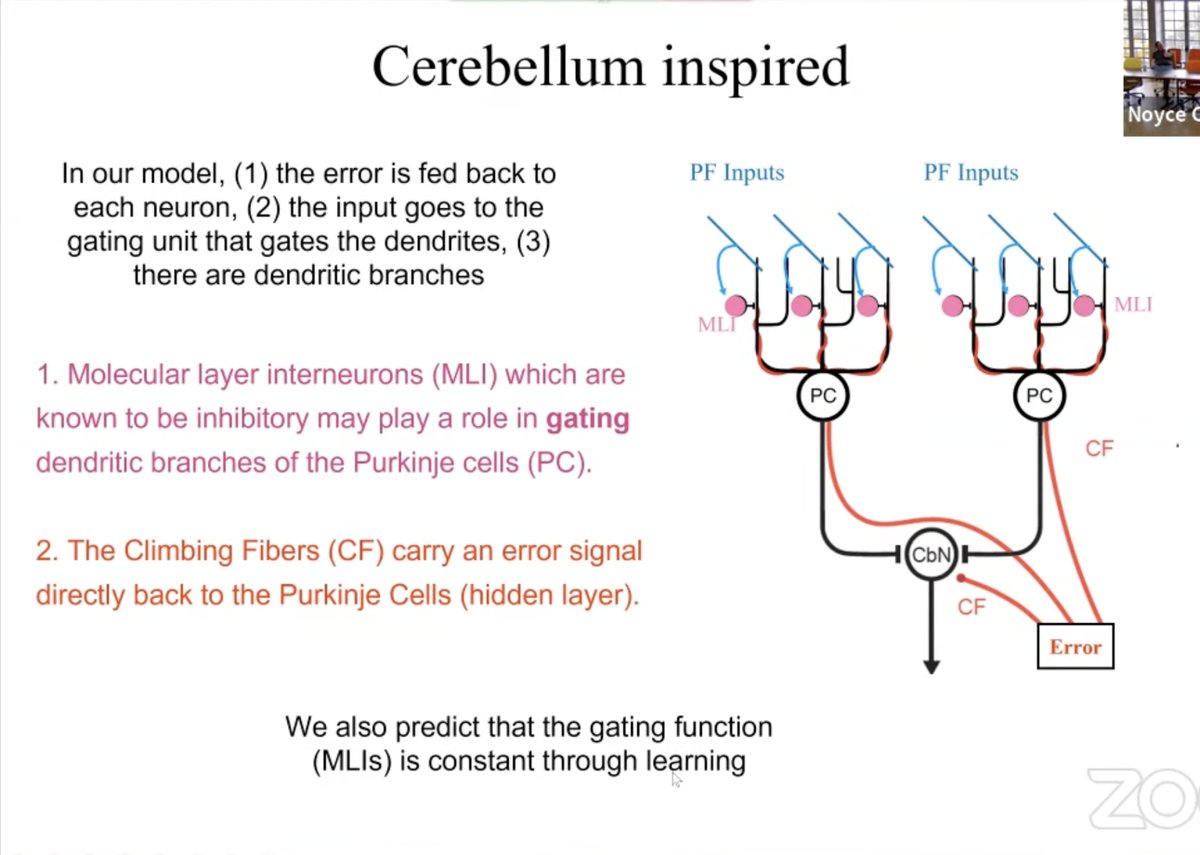

For dendritic gated networks (DGNs) in animal #brains:

"For each branch I pick a random hyperplane and draw [it] somewhere in this square, and say, 'If this input falls on one side, the gate will be open, and if it falls on the other side, the gate will be closed.'"

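The random-hyperplane gate in the quote can be sketched like this. We assume inputs live in the unit square (or cube) and that the hyperplane's normal is drawn from a Gaussian. We also force the plane through a random point of the square so that it actually cuts the region. `random_hyperplane_gate` is a hypothetical helper, not part of any library.

```python
import random

def random_hyperplane_gate(dim, rng):
    """Build a fixed gate from a random hyperplane through a random point of the unit cube.

    The gate returns 1 (open) on the side the normal points to and 0 (closed) otherwise.
    """
    normal = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    anchor = [rng.uniform(0.0, 1.0) for _ in range(dim)]  # the plane passes through this point
    offset = sum(n * a for n, a in zip(normal, anchor))

    def gate(x):
        return 1 if sum(n * xi for n, xi in zip(normal, x)) > offset else 0

    gate.normal, gate.anchor = normal, anchor  # kept for inspection
    return gate

# Example: four branches, each with its own random gate over the unit square
rng = random.Random(0)
branch_gates = [random_hyperplane_gate(2, rng) for _ in range(4)]
print([g((0.2, 0.7)) for g in branch_gates])  # which branches are open for this input
```

The gates are drawn once and never trained. Under this assumption, only the branch weights behind them learn.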
"Each weight learns a different piecewise linear function, and then I aggregate as I go through the layers. This neuron is learning this section, this neuron is learning this section, and then the next layer is learning both sections."

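Combining gating with a local update gives a single gated neuron. The sketch uses a 1-D input, so each "hyperplane" is just a threshold with an orientation. Assumptions: each branch computes a_k·x + c_k, its gate is fixed after initialization, and training is an online local delta rule in which only open branches are updated. The target |x|, the branch count and all names are hypothetical. Because the gates switch at fixed thresholds, the output is piecewise linear with breakpoints at those thresholds. Aggregating such neurons across layers, as the quote describes, is not shown.

```python
import random

class GatedLinearNeuron:
    """One neuron with dendritic branches gated by fixed random thresholds (1-D hyperplanes).

    Branch k is open when signs[k] * (x - thresholds[k]) > 0 and then contributes
    a[k] * x + c[k]; the neuron sums its open branches. Gates never change, so the
    output is piecewise linear with breakpoints at the thresholds.
    """

    def __init__(self, n_branches, lr, rng):
        self.thresholds = [rng.uniform(-1.0, 1.0) for _ in range(n_branches)]
        self.signs = [rng.choice((-1.0, 1.0)) for _ in range(n_branches)]
        self.a = [0.0] * n_branches
        self.c = [0.0] * n_branches
        self.lr = lr

    def gates(self, x):
        return [1.0 if s * (x - t) > 0 else 0.0 for s, t in zip(self.signs, self.thresholds)]

    def predict(self, x):
        return sum(g * (a * x + c) for g, a, c in zip(self.gates(x), self.a, self.c))

    def update(self, x, target):
        """Local delta rule on 0.5 * (target - output)**2; closed branches are left alone."""
        g = self.gates(x)
        err = target - sum(gk * (a * x + c) for gk, a, c in zip(g, self.a, self.c))
        for k, gk in enumerate(g):
            if gk:
                self.a[k] += self.lr * err * x
                self.c[k] += self.lr * err

# Hypothetical task: learn |x| on [-1, 1] online from random samples.
rng = random.Random(0)
neuron = GatedLinearNeuron(n_branches=16, lr=0.02, rng=rng)
for _ in range(40000):
    x = rng.uniform(-1.0, 1.0)
    neuron.update(x, abs(x))

grid = [i / 100 for i in range(-100, 101)]
grid_mse = sum((neuron.predict(x) - abs(x)) ** 2 for x in grid) / len(grid)
print(f"gated neuron MSE on a grid: {grid_mse:.4f}")
```

For comparison, the best purely linear fit to |x| on [-1, 1] is the constant 0.5, with mean squared error 1/12 (about 0.083). The gated neuron can bend at its thresholds, so it has room to do better.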
2) "What do we want? Some desirable features of this model include that it is modeled on the #cerebellum. There isn't any ridiculous time delay due to forward and backward passes."

3) "Parallel fiber inputs go in through 'dendrites' and each branch has a gating key..."

"The fact that the gates are significantly more correlated through learning than the error signal validates our decision to use [this approach]."

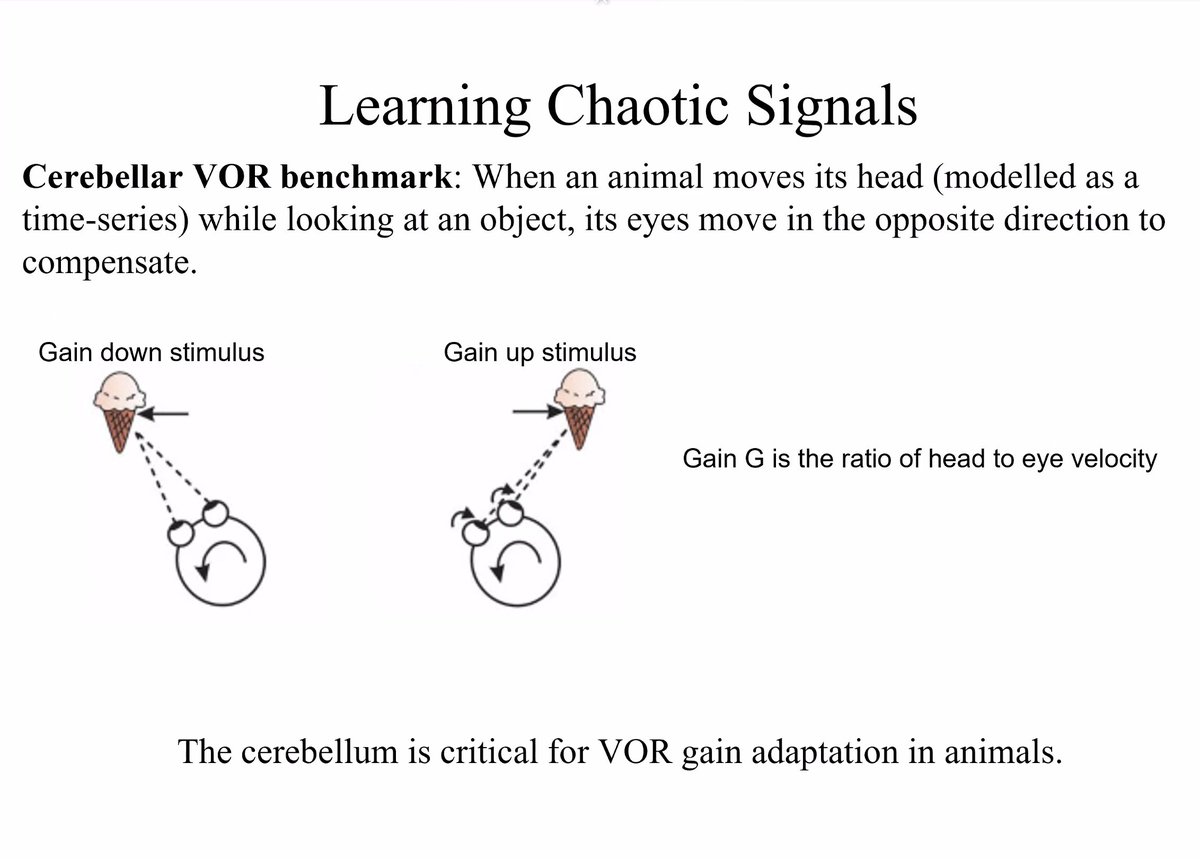

1) On the desirable features for computational experiments exploring a cerebellar model for #MachineLearning

2) "If you keep your finger in front of you and you move your head, you'll notice your eyes fixate very well on your fingernail...unlike if you move your finger around."

"Both kinds of #NeuralNetworks learn this chaotic time series, but in different ways. DGNs learn this in very intuitive ways."

On Mitigating "catastrophic #forgetting":

"Instead of hyperplanes, can we have hypermanifolds?"

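This part of the talk raises two ideas that can be illustrated together. First, gating may limit catastrophic forgetting: a branch whose gate stays closed on new data receives no update, so what it learned earlier is kept exactly. Second, a gate need not be a hyperplane. The sketch uses hyperspheres as one hypothetical example of a curved gating surface. The class name, the sphere gates, the two square input regions and the constant targets are all assumptions for illustration. For comparison, an ordinary neuron with one always-open branch is trained on the same two tasks in sequence.

```python
import math
import random

class SphereGatedNeuron:
    """Neuron whose branches are gated by hyperspheres rather than hyperplanes.

    Branch k is open when ||x - centers[k]|| < radius and then adds w_k . [x, 1].
    Only open branches are updated, using a local delta rule.
    """

    def __init__(self, centers, radius, lr):
        self.centers = [tuple(c) for c in centers]
        self.radius = radius
        self.lr = lr
        self.w = [[0.0] * (len(self.centers[0]) + 1) for _ in self.centers]  # last entry: bias

    def _forward(self, x):
        xb = list(x) + [1.0]
        gates = [math.dist(x, c) < self.radius for c in self.centers]
        out = sum(sum(wi * xi for wi, xi in zip(w, xb)) for g, w in zip(gates, self.w) if g)
        return xb, gates, out

    def predict(self, x):
        return self._forward(x)[2]

    def update(self, x, target):
        xb, gates, out = self._forward(x)
        err = target - out
        for k, is_open in enumerate(gates):
            if is_open:  # a closed branch gets no update, so its earlier learning is kept
                self.w[k] = [wi + self.lr * err * xi for wi, xi in zip(self.w[k], xb)]

def train(neuron, low, high, target, steps, rng):
    """Online training on inputs drawn uniformly from the square [low, high]^2."""
    for _ in range(steps):
        neuron.update((rng.uniform(low, high), rng.uniform(low, high)), target)

rng = random.Random(0)
gated = SphereGatedNeuron([(0.0, 0.0), (3.0, 3.0)], radius=1.5, lr=0.03)
plain = SphereGatedNeuron([(0.0, 0.0)], radius=math.inf, lr=0.03)  # one always-open branch
probe = (0.0, 0.0)  # a point from task A

for net in (gated, plain):
    train(net, -1.0, 1.0, 1.0, 4000, rng)  # task A: inputs near the origin, target +1
before = (gated.predict(probe), plain.predict(probe))

for net in (gated, plain):
    train(net, 2.0, 4.0, -1.0, 4000, rng)  # task B: inputs near (3, 3), target -1
after = (gated.predict(probe), plain.predict(probe))

print("task A output before/after task B (gated):", before[0], after[0])
print("task A output before/after task B (plain):", before[1], after[1])
```

Here the two gating regions never overlap, which is the easiest case. With overlapping gates, some shared branches would still be rewritten.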
Re: Continuous learning with biologically plausible networks that don't suffer catastrophic forgetting...

Link to the pre-print c/o @DeepMind:

deepmind.com/publications/a…
