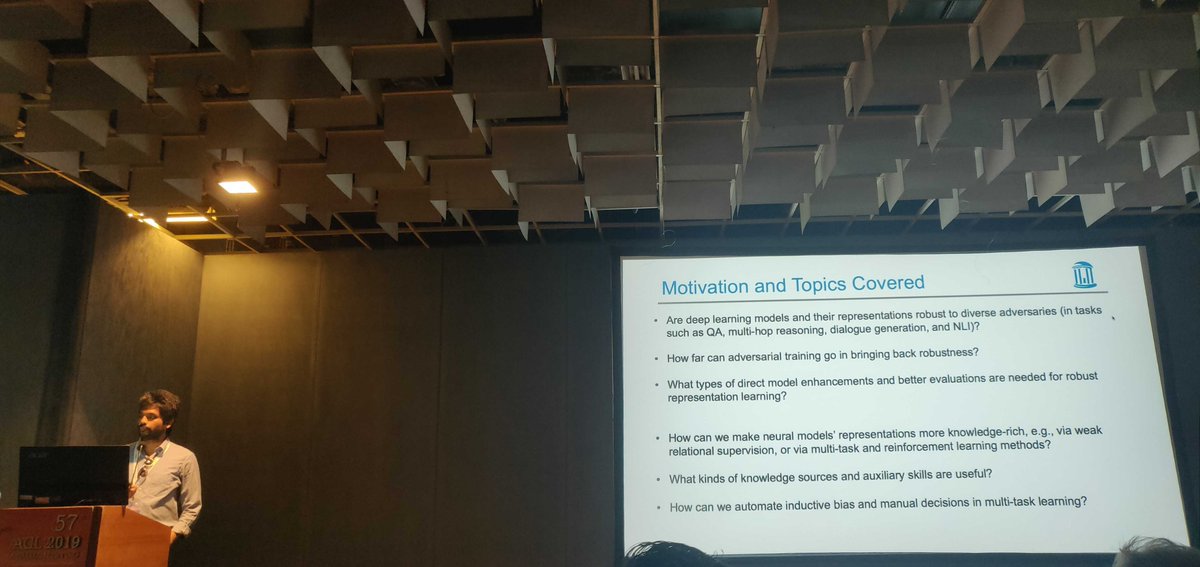

"Modeling output spaces of NLP models" instead of the common #Bertology, which focuses on modeling input spaces only.

#ACL2019nlp

* Transformers (possible but not yet implemented)

* do KNN decoding efficiently

* other conditional language modelling tasks

* updating target embeddings during generation

* syntactically informed generation
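To make the kNN decoding point concrete: with continuous outputs the model predicts an embedding vector instead of a softmax distribution, and the output word is looked up as the nearest neighbour in the target embedding table. Below is a minimal brute-force sketch, assuming cosine similarity as the distance; the function name `knn_decode` and the toy vocabulary and vectors are made up for illustration and are not from the talk.

```python
import numpy as np

def knn_decode(predicted, target_embeddings, vocab, k=1):
    """Return the k vocabulary items closest (by cosine) to the predicted vector."""
    # Normalise both sides so the dot product equals cosine similarity.
    table = target_embeddings / np.linalg.norm(target_embeddings, axis=1, keepdims=True)
    query = predicted / np.linalg.norm(predicted)
    scores = table @ query
    # Brute-force search over the whole vocabulary, best match first.
    top = np.argsort(-scores)[:k]
    return [vocab[i] for i in top]

# Toy data (hypothetical): three words with 2-dimensional target embeddings.
vocab = ["cat", "dog", "car"]
target_embeddings = np.array([[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]])
predicted = np.array([0.9, 0.1])  # vector the model would emit at one step

nearest = knn_decode(predicted, target_embeddings, vocab, k=2)
```

This scans the full vocabulary at every step, so an efficient version would probably swap the exhaustive search for an approximate nearest-neighbour index.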

The discriminators are not the right type for language.

The softmax layer, with its discrete token sampling, makes end-to-end GANs non-differentiable.

Their work (skipped during the presentation): train end-to-end language GANs for continuous language generation.

VON MISES-FISHER LOSS FOR TRAINING SEQUENCE TO SEQUENCE MODELS WITH CONTINUOUS OUTPUTS

ICLR 2019

arxiv.org/pdf/1812.04616…

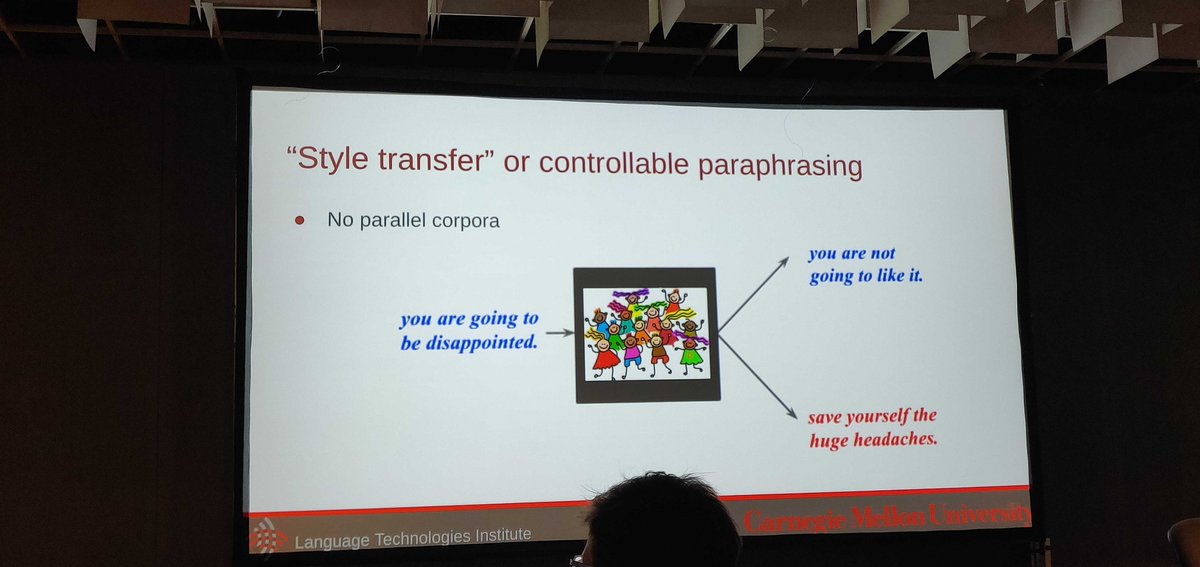

Summary of Yulia Tsvetkov's talk #4 #Rep4nlp #ACL2019nlp

Thanks for the great talk!!

This is a topic that interests me personally, and I keep thinking every day that we need new ways of doing NLG that allow control, rather than the legacy approach of mapping inputs to outputs.