If you’ve been following the posts on Math3ma for the past 6-or-so months, you’ll be delighted to know the content is all related. But more on that later…

More specifically, we’re interested in modeling probability distributions on *sequences*, that is, strings of symbols from some finite alphabet. The goal is twofold:

1. Find a model that can *infer* a probability distribution if you show it a few examples.

2. Understand the theory well enough to *predict* how well the model will perform based on the number of examples used.

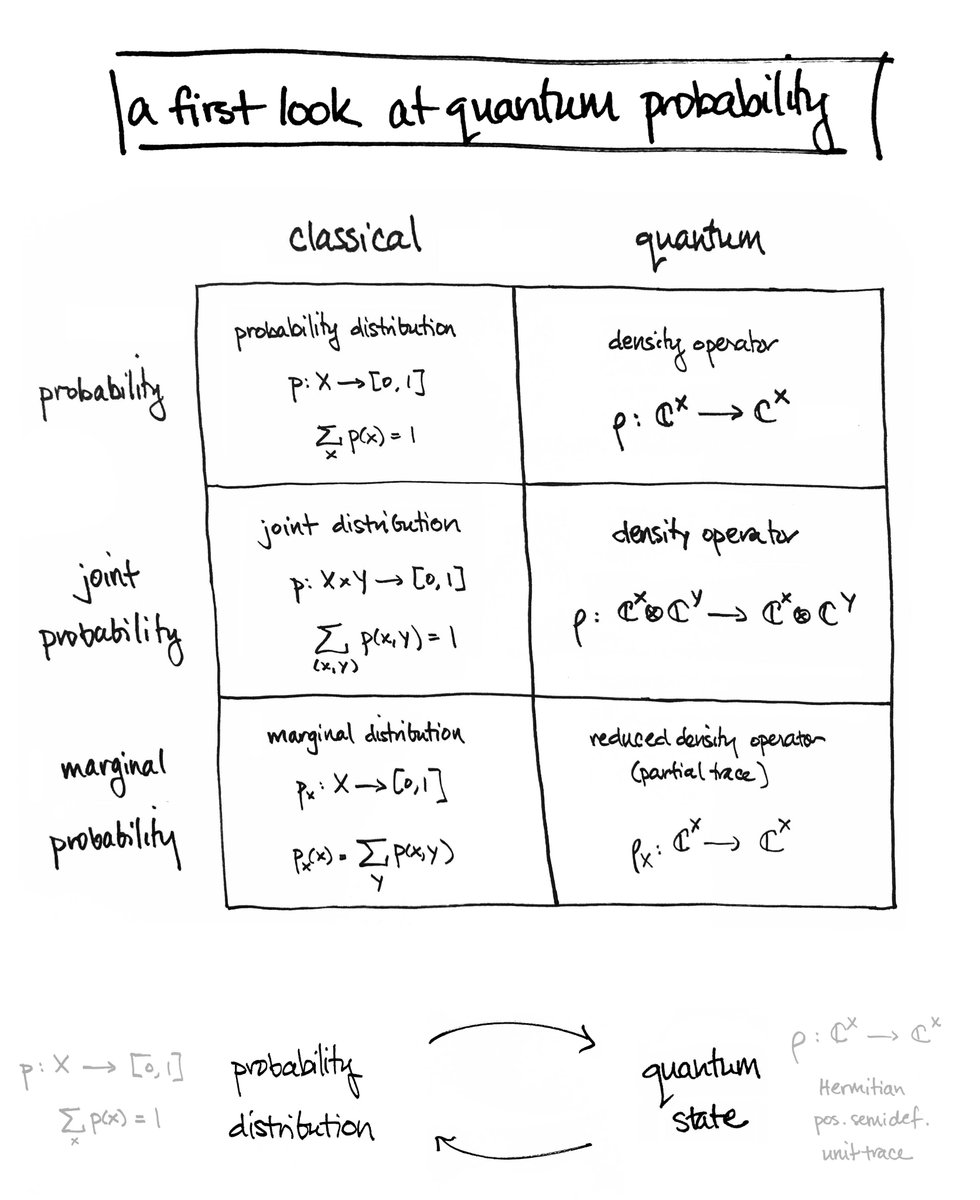

It’s very simple: we model probability distributions by density operators, a special kind of linear transformation (Hermitian, positive semidefinite, with trace 1).

Given an empirical probability distribution on a training set of sequences, we define a unit vector which is essentially the sum of the samples weighted by the *square roots* of their probabilities.
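Here is a minimal NumPy sketch of that vector, assuming a hypothetical two-letter alphabet `ab`, training sequences that all have the same length, and each symbol encoded as a standard basis vector. The helper names (`one_hot`, `basis_vector`, `empirical_state`) are made up for illustration; this is not code from the paper.

```python
from collections import Counter
from functools import reduce

import numpy as np

# Hypothetical two-letter alphabet; each symbol gets a standard basis vector.
ALPHABET = "ab"


def one_hot(symbol):
    """Basis vector |symbol> in R^d, where d = len(ALPHABET)."""
    e = np.zeros(len(ALPHABET))
    e[ALPHABET.index(symbol)] = 1.0
    return e


def basis_vector(sequence):
    """|s_1> ⊗ |s_2> ⊗ ... ⊗ |s_N> as a flat vector of length d**N."""
    return reduce(np.kron, (one_hot(s) for s in sequence))


def empirical_state(samples):
    """Sum of the distinct samples, each weighted by the square root of
    its empirical probability. Assumes every sample has the same length."""
    counts = Counter(samples)
    total = len(samples)
    return sum(np.sqrt(c / total) * basis_vector(s) for s, c in counts.items())


samples = ["ab", "ab", "ba", "bb"]  # hypothetical training set
psi = empirical_state(samples)
print(psi)                  # coordinates: square roots of p(aa), p(ab), p(ba), p(bb)
print(np.linalg.norm(psi))  # unit 2-norm
```

Here "ab" appears with empirical probability 1/2, so its coordinate is √(1/2), while "ba" and "bb" each get √(1/4) = 1/2.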

And since the coordinates of the vector are *square roots* of probabilities, their squares sum to 1, so we are in the realm of “2-norm probability.”
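To see the density operator and the 2-norm bookkeeping side by side, here is a small sketch under the assumption of a hypothetical distribution on the four length-2 strings aa, ab, ba, bb (real coordinates, so the projection is just an outer product). Squaring the coordinates, or equivalently reading off the diagonal of the density operator, recovers the original probabilities.

```python
import numpy as np

# Hypothetical empirical distribution on the length-2 strings aa, ab, ba, bb
# (same ordering as the tensor-product basis).
p = np.array([0.0, 0.5, 0.25, 0.25])

psi = np.sqrt(p)          # coordinates are square roots of probabilities
rho = np.outer(psi, psi)  # density operator |psi><psi| (real case)

print(np.linalg.norm(psi))      # 2-norm of psi is 1 because the p's sum to 1
print(np.trace(rho))            # trace 1
print(np.linalg.eigvalsh(rho))  # eigenvalues are >= 0 (up to rounding)
print(np.diag(rho))             # Born rule: the diagonal recovers p
```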

It's just linear algebra!

We tweak it to get a *new* vector.

This all happens in a tensor product of Hilbert spaces, so there are nice tensor network diagrams.

Matrix product states (MPS) are nice because far fewer parameters are needed to describe them than to describe a generic vector in a tensor product. This is especially useful if you’re working in ultra-large-dimensional spaces. MPS are efficient!
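To make the parameter savings concrete, here is a sketch that splits a vector into MPS cores by successive SVDs (the standard "TT-SVD" construction, used here only for illustration; it is not claimed to be the training algorithm from the paper) and contracts the cores back together. The function names are hypothetical, and the test vector puts equal weight on the two strings "aaaaaaaaaa" and "bbbbbbbbbb".

```python
import numpy as np


def vector_to_mps(psi, d, n, max_bond=None, tol=1e-12):
    """Split a length d**n vector into n cores of shape (left, d, right)
    by successive SVDs. Singular values below tol are dropped; max_bond
    (optional) truncates further and makes the result approximate."""
    cores = []
    left = 1
    rest = psi.reshape(left * d, -1)
    for _ in range(n - 1):
        u, s, vt = np.linalg.svd(rest, full_matrices=False)
        keep = max(int(np.sum(s > tol)), 1)
        if max_bond is not None:
            keep = min(keep, max_bond)
        u, s, vt = u[:, :keep], s[:keep], vt[:keep, :]
        cores.append(u.reshape(left, d, keep))
        left = keep
        # Push the singular values to the right and expose the next site.
        rest = (np.diag(s) @ vt).reshape(left * d, -1)
    cores.append(rest.reshape(left, d, 1))
    return cores


def mps_to_vector(cores):
    """Contract neighboring bond indices and flatten back to a vector."""
    out = cores[0]
    for core in cores[1:]:
        out = np.tensordot(out, core, axes=([-1], [0]))
    return out.reshape(-1)


n, d = 10, 2
psi = np.zeros(d**n)
psi[0] = psi[-1] = np.sqrt(0.5)  # equal weight on "aaaaaaaaaa" and "bbbbbbbbbb"
cores = vector_to_mps(psi, d, n)
print([c.shape for c in cores])
print(sum(c.size for c in cores), "MPS parameters vs", psi.size, "for the full vector")
print(np.allclose(mps_to_vector(cores), psi))
```

For this state every bond has dimension 2, so the cores hold 72 numbers in total instead of 2^10 = 1024. In general the savings depend on how entangled the vector is across each cut.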

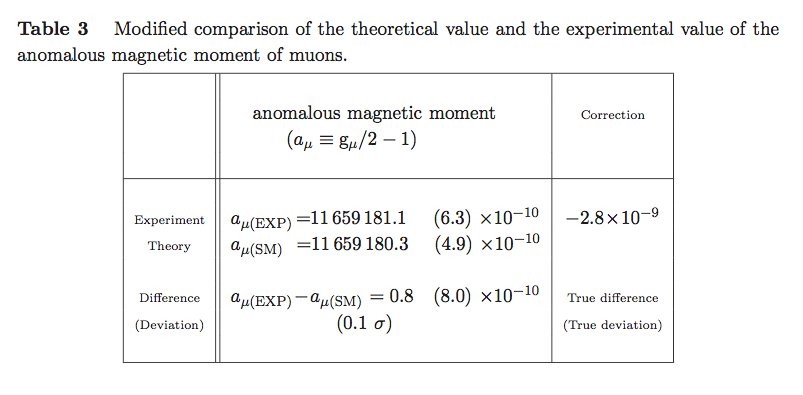

🔶 = experimental average

🔷 = theoretical prediction

The results were produced using the ITensor library (@ITensorLib, itensor.org); details are in Section 6 of the paper.

✨Eigenvectors of the reduced densities of pure, entangled quantum states contain conditional probabilistic information about your data.✨
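Here is a toy illustration of that slogan, not the paper's general argument. It assumes a hypothetical distribution on length-2 strings xy over the alphabet {0, 1, 2, 3}, built so that prefixes {0, 1} are only followed by suffixes {0, 1}, and prefixes {2, 3} only by suffixes {2, 3}. The reduced density on the first site is `M @ M.T`, where `M[x, y] = sqrt(p(x, y))`; its diagonal is the marginal p(x), and the squared entries of its nonzero eigenvectors turn out to be conditional probabilities of the prefix given which group the suffix lands in.

```python
import numpy as np

# Hypothetical distribution on length-2 strings xy over the alphabet {0, 1, 2, 3}.
p_x = np.array([0.1, 0.3, 0.2, 0.4])  # marginal of the first symbol x
# Toy structure: x in {0, 1} is only followed by y in {0, 1},
# and x in {2, 3} only by y in {2, 3}.
p_y_given_x = np.array([
    [0.5, 0.5, 0.0, 0.0],
    [0.5, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.25, 0.75],
    [0.0, 0.0, 0.25, 0.75],
])
p = p_x[:, None] * p_y_given_x  # p[x, y] = p(x) p(y | x)

# psi = sum_{x,y} sqrt(p(x,y)) |x> ⊗ |y>, stored as a 4 x 4 matrix M[x, y].
M = np.sqrt(p)
# Reduced density on the first site: partial trace of |psi><psi| over y.
rho_x = M @ M.T

eigvals, eigvecs = np.linalg.eigh(rho_x)  # eigenvalues in ascending order
print(np.diag(rho_x))       # diagonal = marginal p(x)
print(eigvals)              # approximately 0, 0, 0.4, 0.6
print(eigvecs[:, -1] ** 2)  # ≈ p(x | y in {2, 3}) = [0, 0, 1/3, 2/3]
print(eigvecs[:, -2] ** 2)  # ≈ p(x | y in {0, 1}) = [1/4, 3/4, 0, 0]
```

The eigenvalues 0.4 and 0.6 are the probabilities that the suffix falls in {0, 1} or {2, 3}, respectively. Squaring is needed because eigenvectors are only determined up to sign.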

I won’t unwind this here. It’s all laid out on Math3ma:

math3ma.com/blog/a-first-l…

journals.aps.org/prl/abstract/1…

I might share a deeper look into the mathematics later. In the meantime, feel free to dive in! arxiv.org/abs/1910.07425

</thread>