"Sure but are you webscal-💥🔫"

Takeaway: "If something is hard, do it often!" @lbernail #LISA19

(See also: devops, software deployment, etc. :))

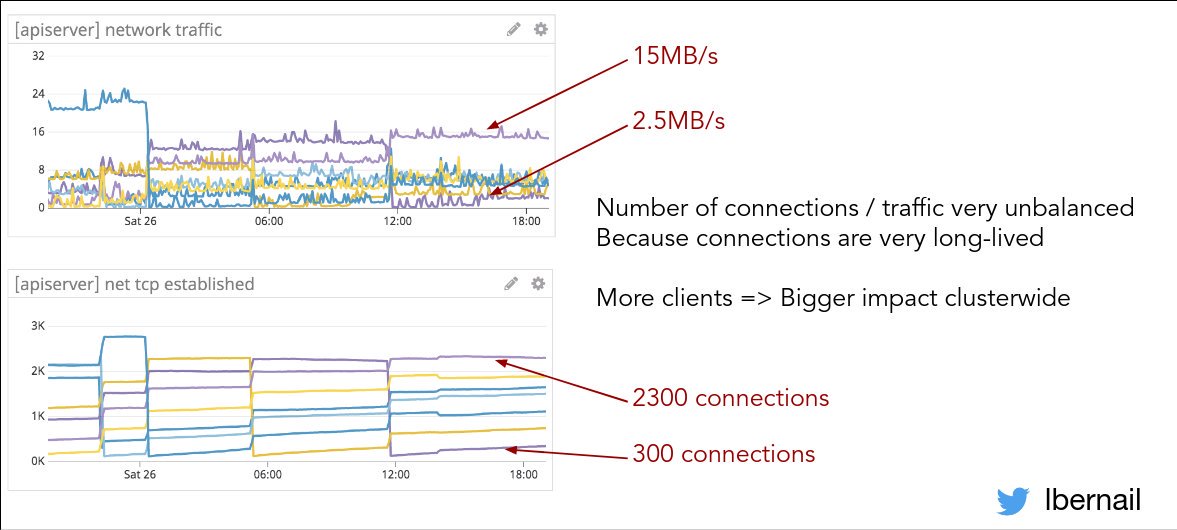

The constraints that they have at Datadog are just blowing up all the normal assumptions about anything.

Huge traffic, low latency required, clusters of 1000+ nodes, lots of cross-cluster traffic, and it all has to integrate with VMs, too. 🤯

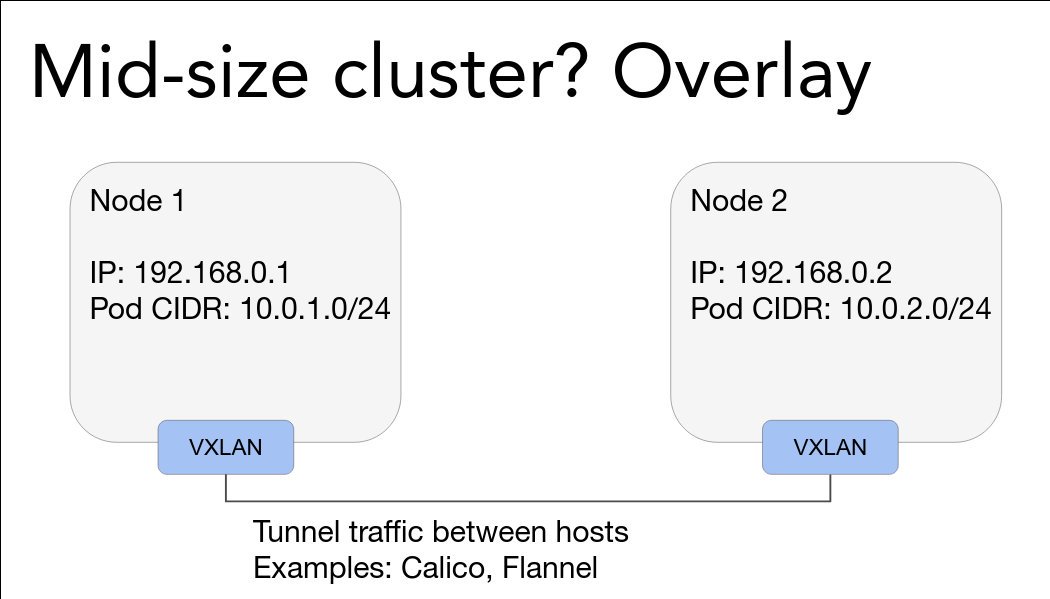

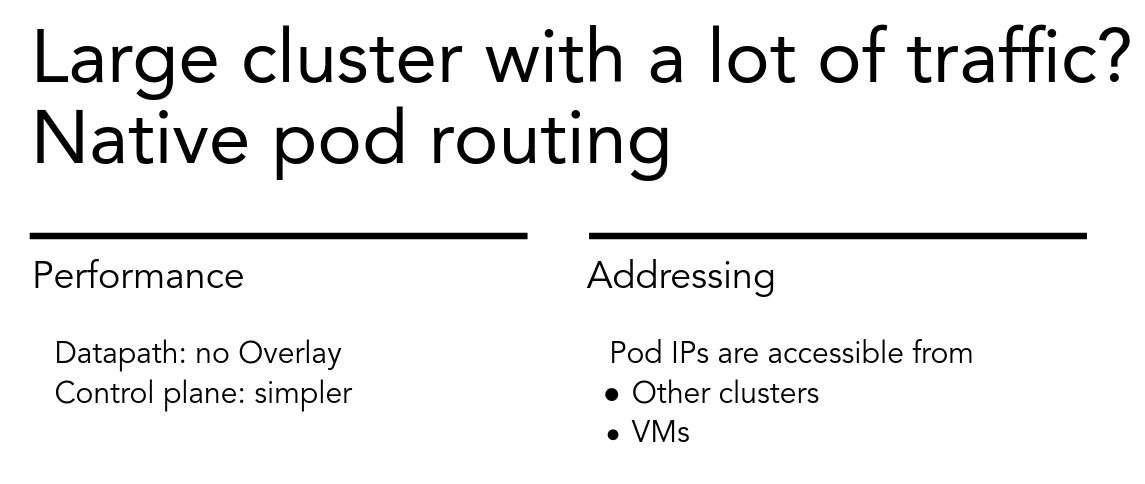

Medium clusters can use overlays, which are nice but have overhead.

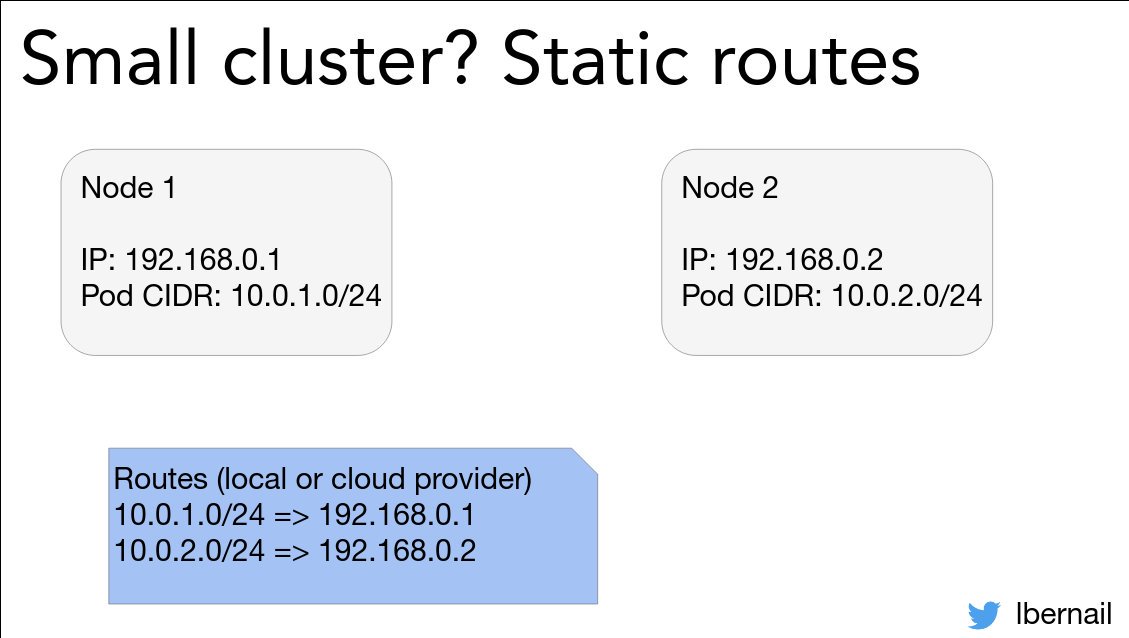
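To put a rough number on the overlay overhead: the thread doesn't say which encapsulation is meant, so this sketch assumes VXLAN over IPv4 with no VLAN tags. Under that assumption every pod packet carries 50 extra bytes of headers, which shrinks the MTU the pods can use. The function names are made up for illustration.

```python
# Sketch only. Assumption: VXLAN over IPv4, no VLAN tags.
# Header sizes in bytes that must fit inside the underlay MTU.
OUTER_IPV4 = 20      # outer IP header
OUTER_UDP = 8        # outer UDP header
VXLAN_HEADER = 8     # VXLAN header
INNER_ETHERNET = 14  # encapsulated (inner) Ethernet header

ENCAP_BYTES = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET


def vxlan_pod_mtu(underlay_mtu: int) -> int:
    """Largest pod-side MTU that fits in one underlay packet."""
    return underlay_mtu - ENCAP_BYTES


def overhead_fraction(underlay_mtu: int) -> float:
    """Share of each full-size underlay packet spent on encapsulation."""
    return ENCAP_BYTES / underlay_mtu


if __name__ == "__main__":
    for mtu in (1500, 9001):
        print(mtu, vxlan_pod_mtu(mtu), f"{overhead_fraction(mtu):.2%}")
```

This only counts bytes on the wire. The CPU cost of encapsulating and decapsulating each packet comes on top of that and is not modelled here.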

Big clusters with perf requirements need native pod routing, i.e. the cloud fabric has to carry pod traffic as first-class traffic.

Laurent is giving a big shoutout to the @lyfteng CNI plugin and to @ciliumproject 💯

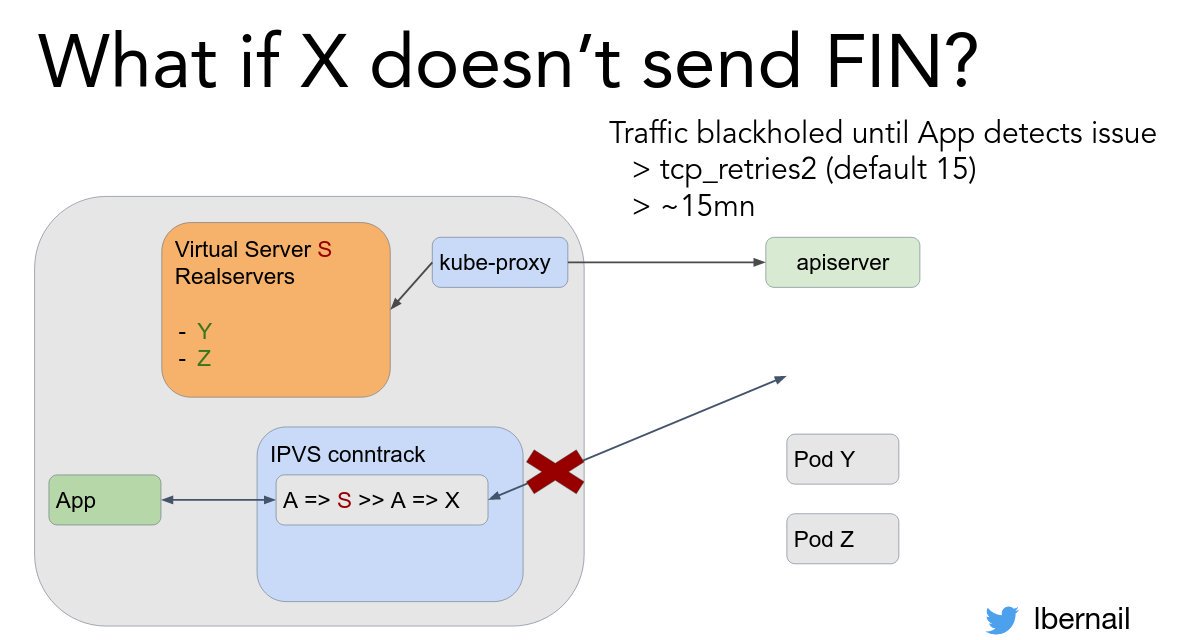

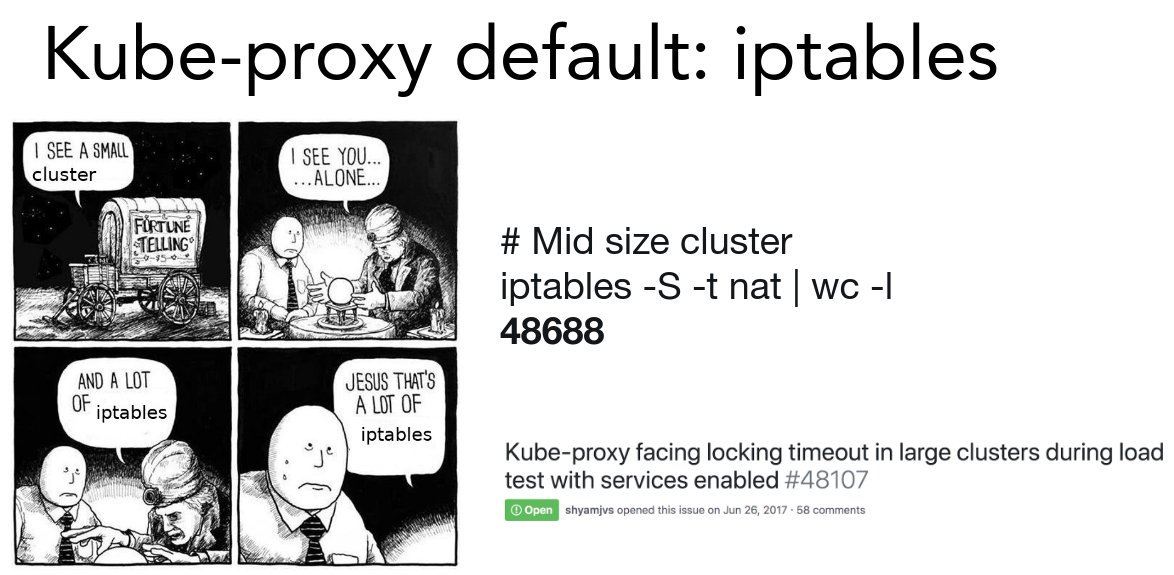

Switching to IPVS helps... BUT!!!

What happens then?

Mitigation: check Pod ReadinessGates!

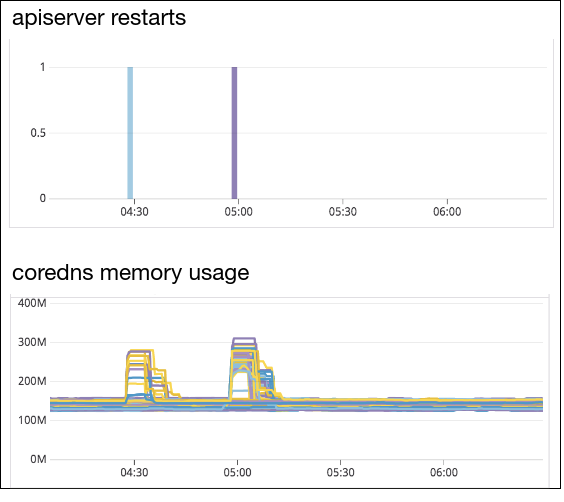
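For anyone who hasn't met readiness gates: Kubernetes only treats a Pod as Ready when its containers are ready and every condition type listed under `spec.readinessGates` is `"True"` in `status.conditions` (a missing condition counts as not ready). Here's a minimal sketch of that rule over a plain dict shaped like a Pod object. It is not how the kubelet is implemented, and the condition name `example.com/lb-registered` is made up.

```python
# Sketch only: models the readiness-gate rule on a plain dict shaped like a Pod.
# The gate name "example.com/lb-registered" is a hypothetical example.

def pod_is_ready(pod: dict) -> bool:
    """Ready = containers ready AND every readiness gate condition is "True"."""
    conditions = {
        c["type"]: c["status"]
        for c in pod.get("status", {}).get("conditions", [])
    }
    if conditions.get("ContainersReady") != "True":
        return False
    gates = pod.get("spec", {}).get("readinessGates", [])
    # A gate whose condition is absent from status counts as not ready.
    return all(conditions.get(g["conditionType"]) == "True" for g in gates)


pod = {
    "spec": {"readinessGates": [{"conditionType": "example.com/lb-registered"}]},
    "status": {"conditions": [{"type": "ContainersReady", "status": "True"}]},
}

if __name__ == "__main__":
    print(pod_is_ready(pod))  # containers are up, but the gate is not set yet
    pod["status"]["conditions"].append(
        {"type": "example.com/lb-registered", "status": "True"}
    )
    print(pod_is_ready(pod))  # an external controller has now set the gate
```

The point of the gate is that something outside the Pod (a controller, for instance) gets to decide when the Pod is really ready to take traffic, instead of the container probes alone.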

DNS (it’s always DNS!)

Challenges with Stateful applications

How to DDoS <insert ~anything> with DaemonSets

Node Lifecycle

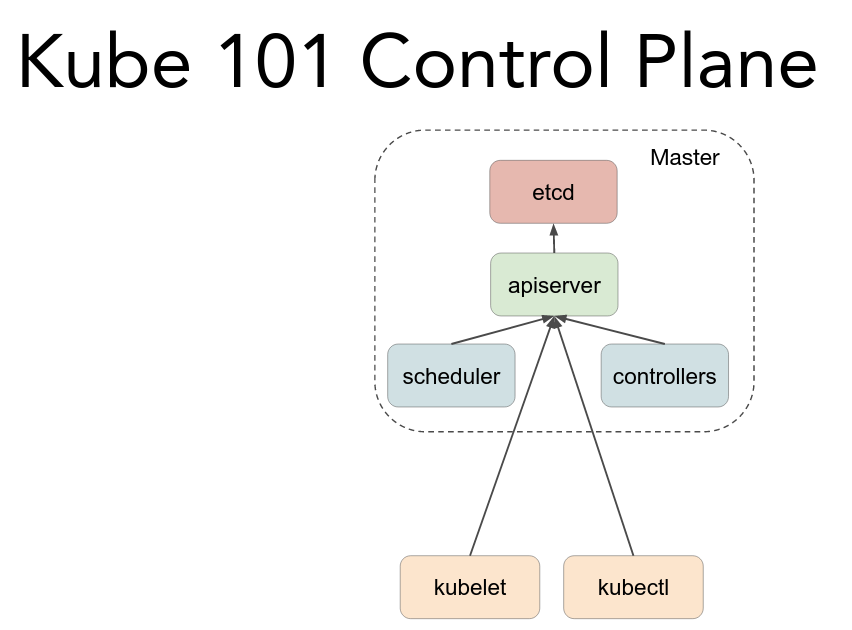

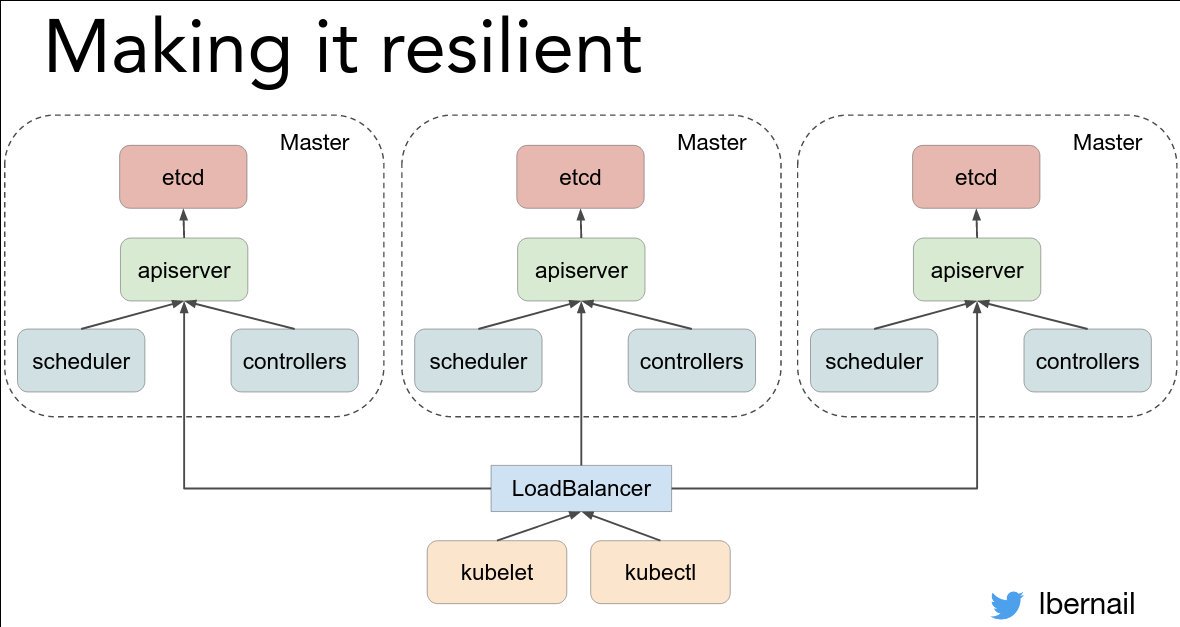

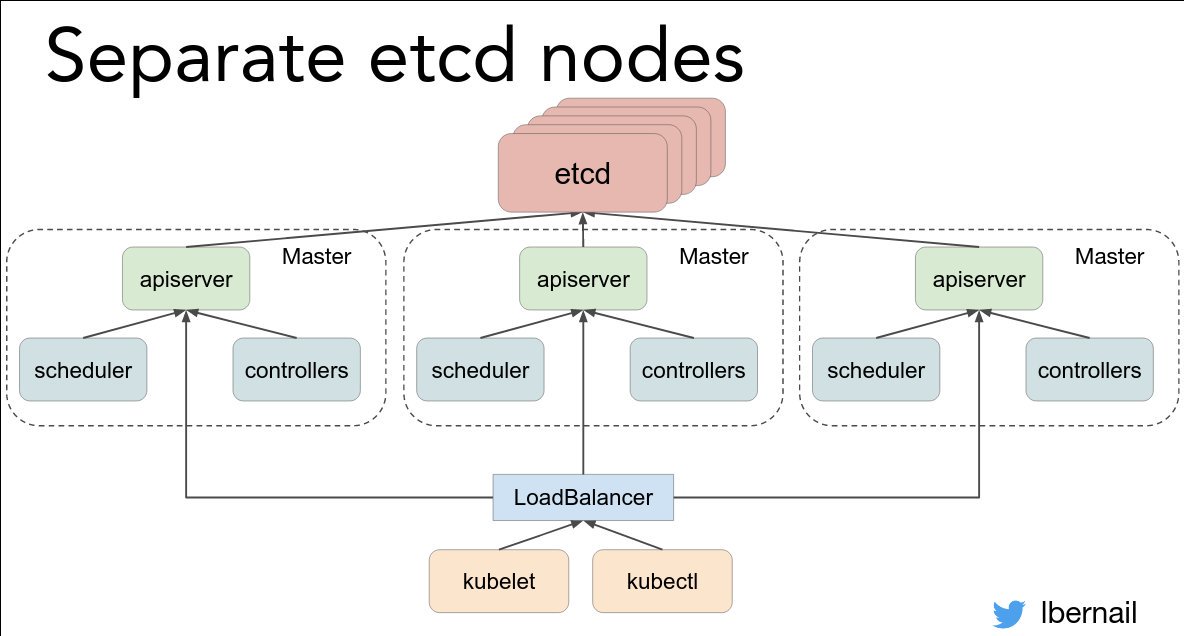

Cluster Lifecycle

Deploying applications

He could easily deliver a talk or two or five on ~each~ of them!

“Kubernetes the very hard way at Datadog”

“10 ways to shoot yourself in the foot with Kubernetes”

“Kubernetes Failure Stories”

k8s.af

Thanks Laurent for sharing all this! 💯