🧵 "A #biologist's perspective of #process and #pattern in #innovation"

by SFI External Professor @HochTwit

Starting in just a few minutes on our YouTube channel.

Follow this thread for select slides and quotations...

youtube.com/user/santafein…

"I'm not going to pretend there's a unified theory of #innovation, and I'm going to explain why."

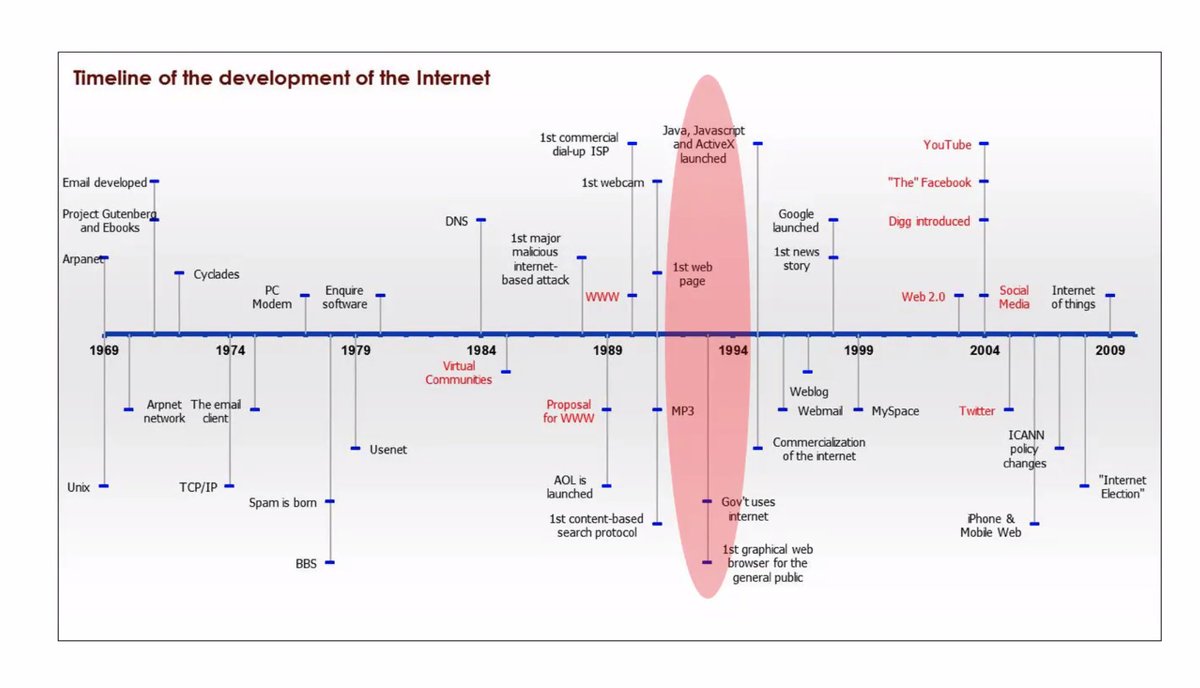

We begin with a tale of #Minitel: the original, now-extinct French "Web"...and then back further to #ARPANet...and then to theoretical precursors.

@HochTwit

"There is no first-principles definition for #innovation."

"How could a company start with selling books online when people want to see a book in person and look through it? Nonetheless, @amazon survived..."

Before Amazon, Books.com on Telnet, later bought by B&N

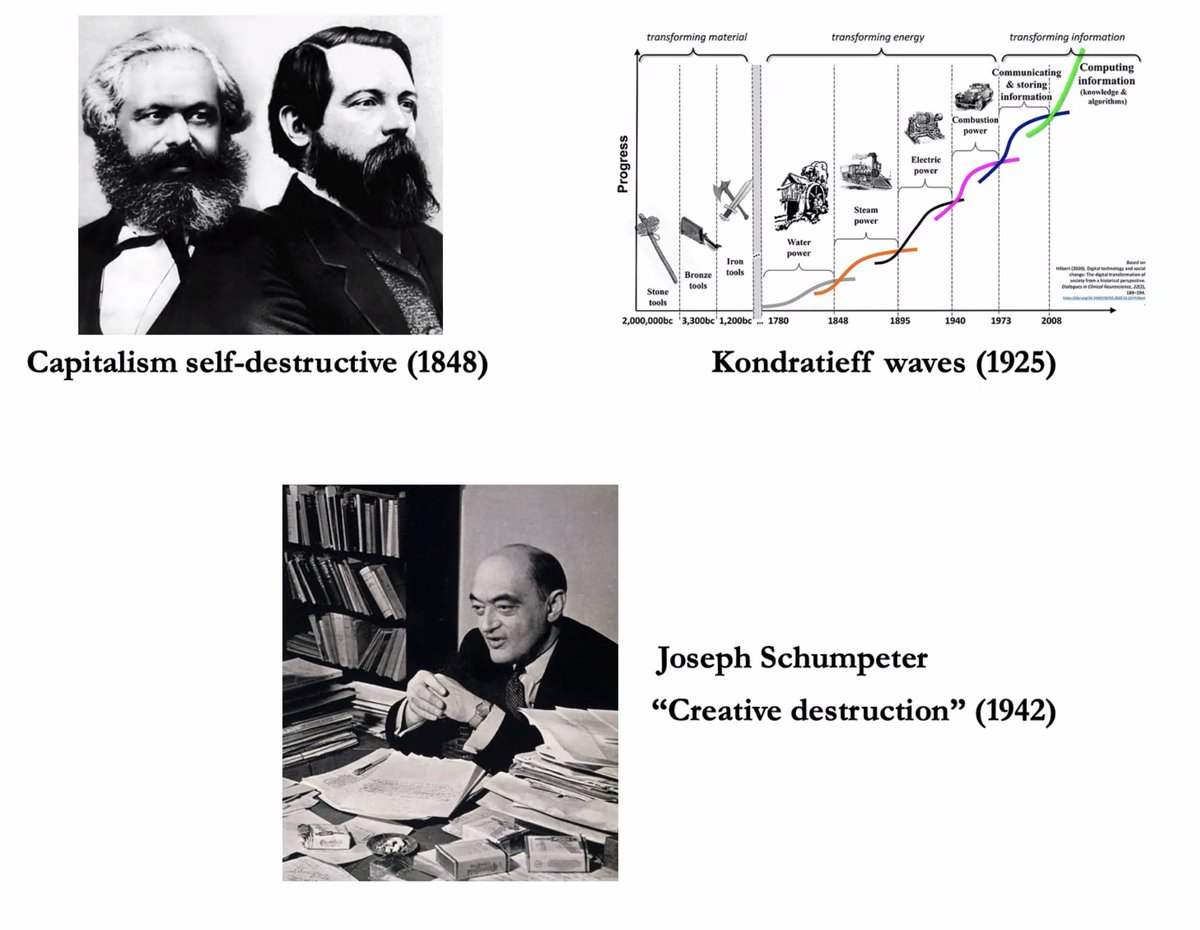

"We usually credit the transformatory impacts of #innovation to Austrian economist Joseph Schumpeter and his idea of #CreativeDestruction, that entire sectors were turned over and products from the past erased by products currently developed."

Earlier, Marx & Engels:

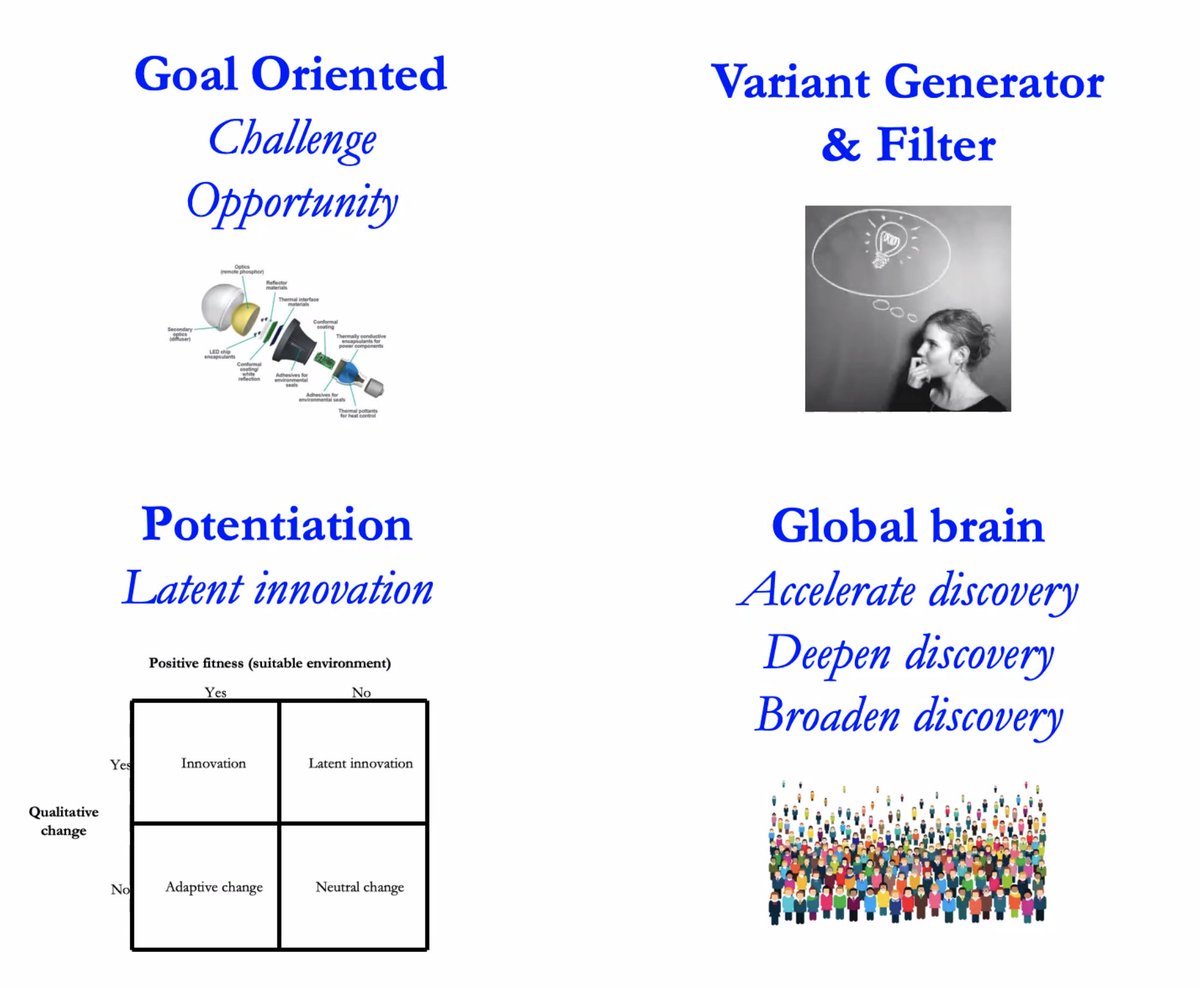

Three paths to #innovation:

• "Can we really construct something de novo from mutations?"

• "A screwdriver in principle has no #function, but we buy it for a specific function...this is adaptation."

• And then #Exaptation of existing features.

Re: convergence on #Flight:

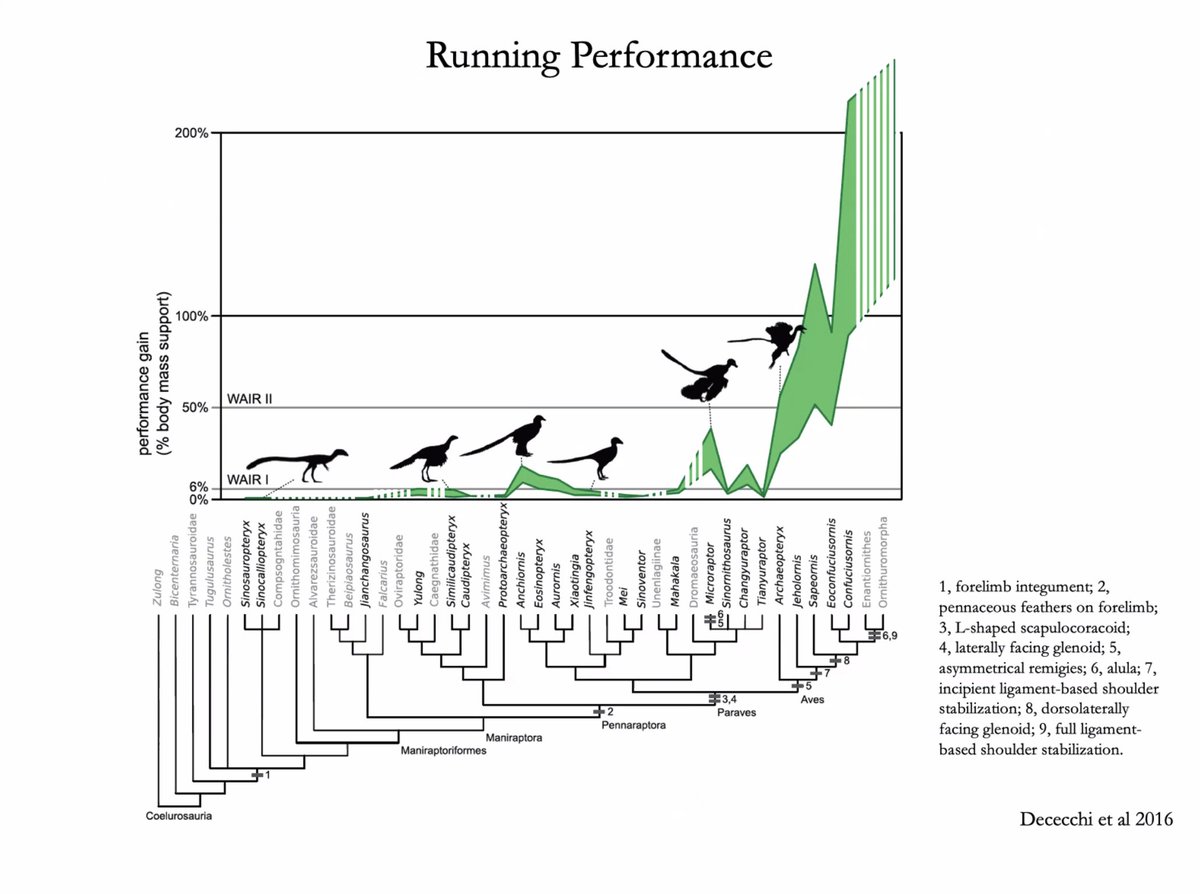

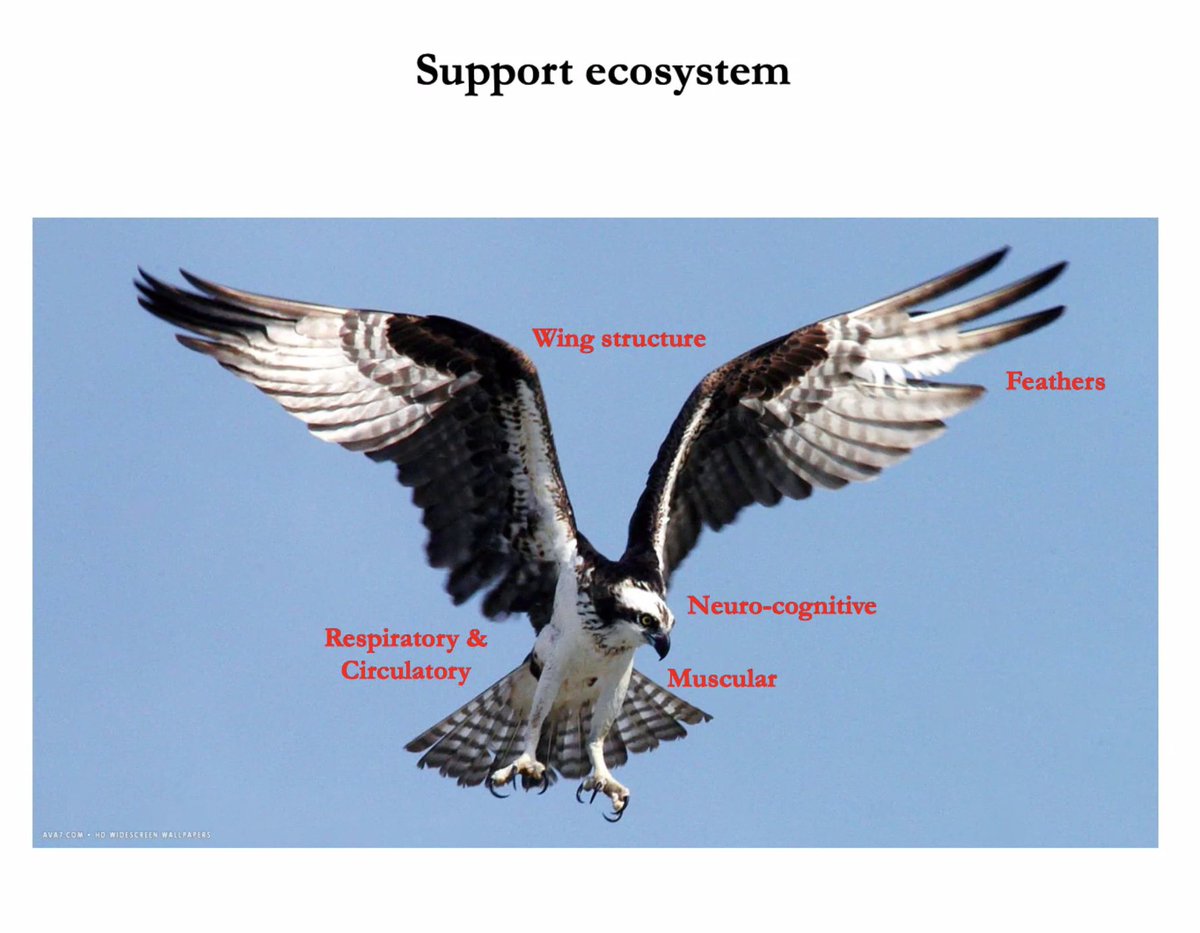

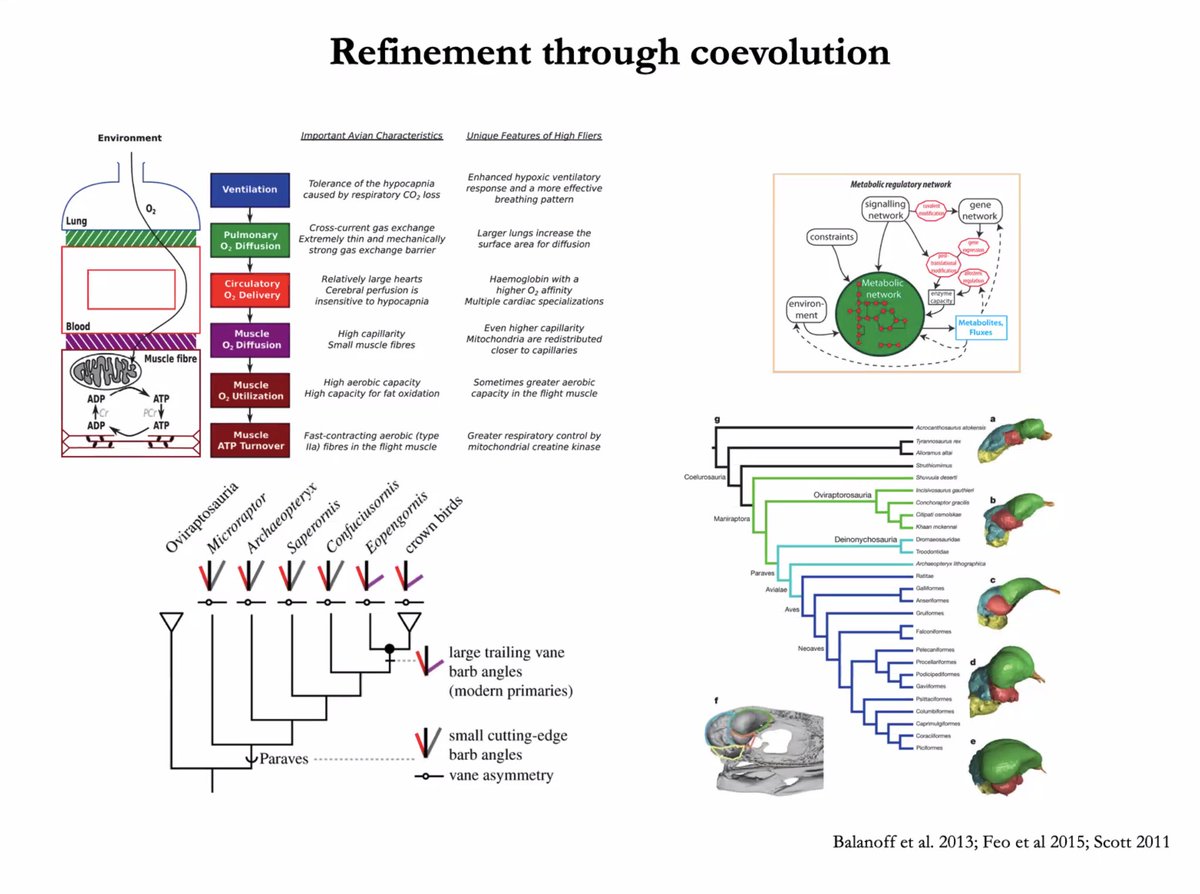

"Parts of the organism are co-evolving to permit the invasion to this new niche [from terrestrial life to #flight]."

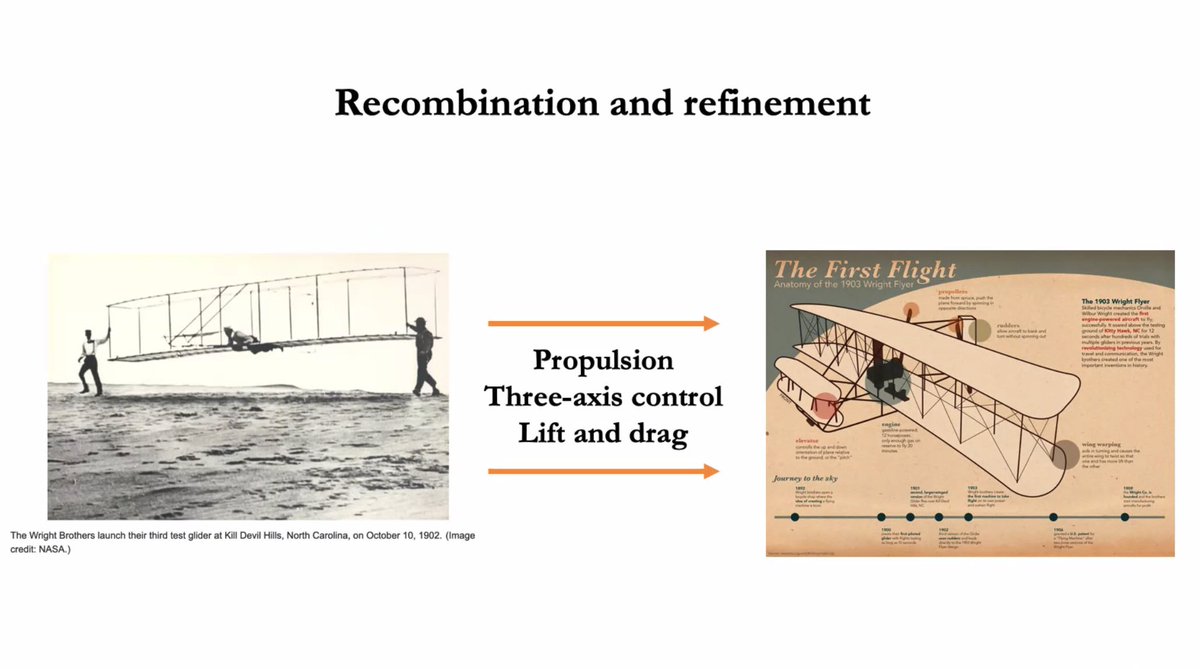

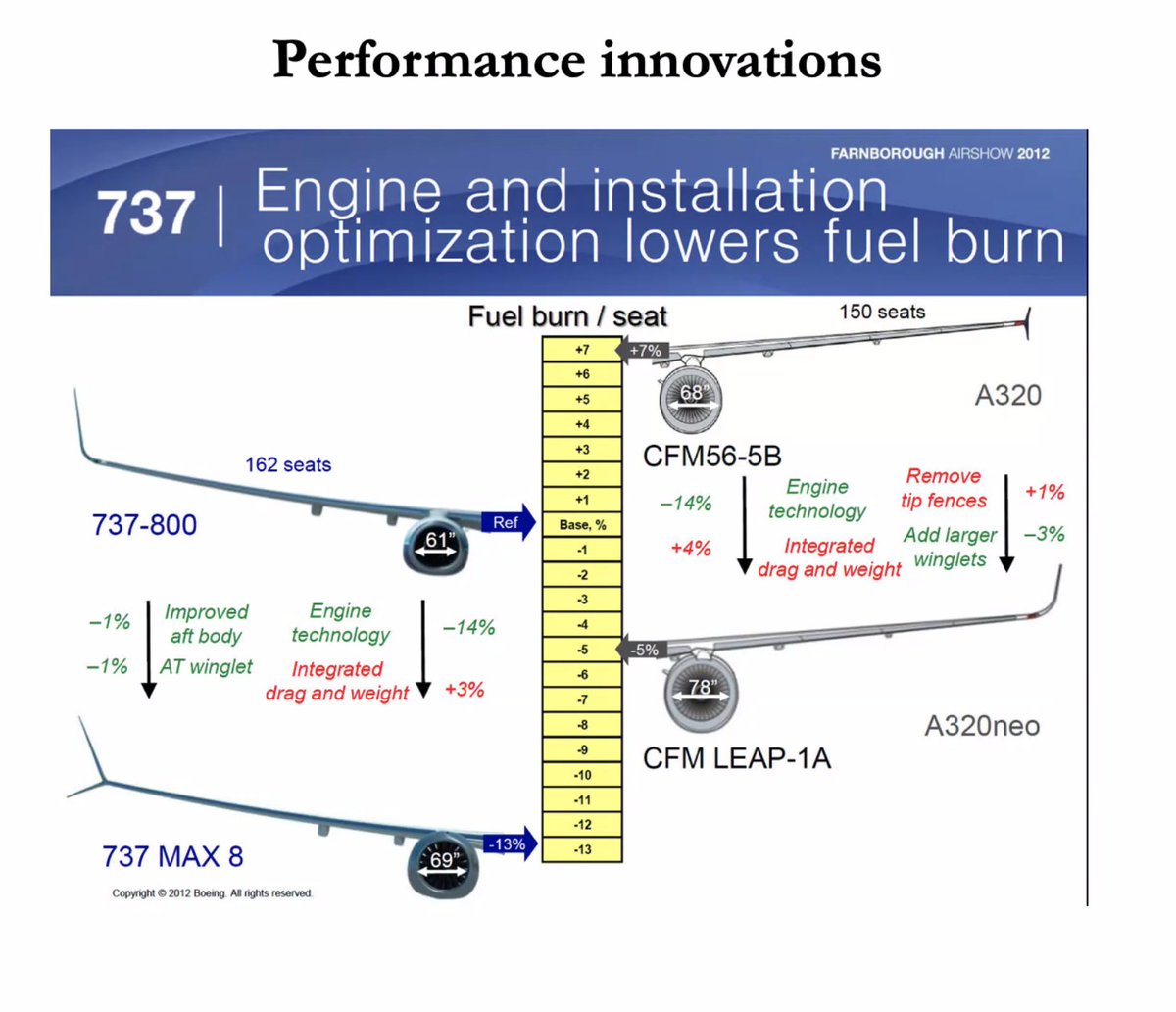

On #exaptation, #recombination, #coevolution, #birds, and #planes — emerging incrementally from performance innovations:

1) It takes 260 suppliers to make the parts for a @Boeing 787. Each of those suppliers requires myriad other suppliers.

2, 3) On #coevolution and #invasion of traits modifying #FitnessLandscapes (see Kauffman's #AdjacentPossible). New traits are typically difficult to predict.

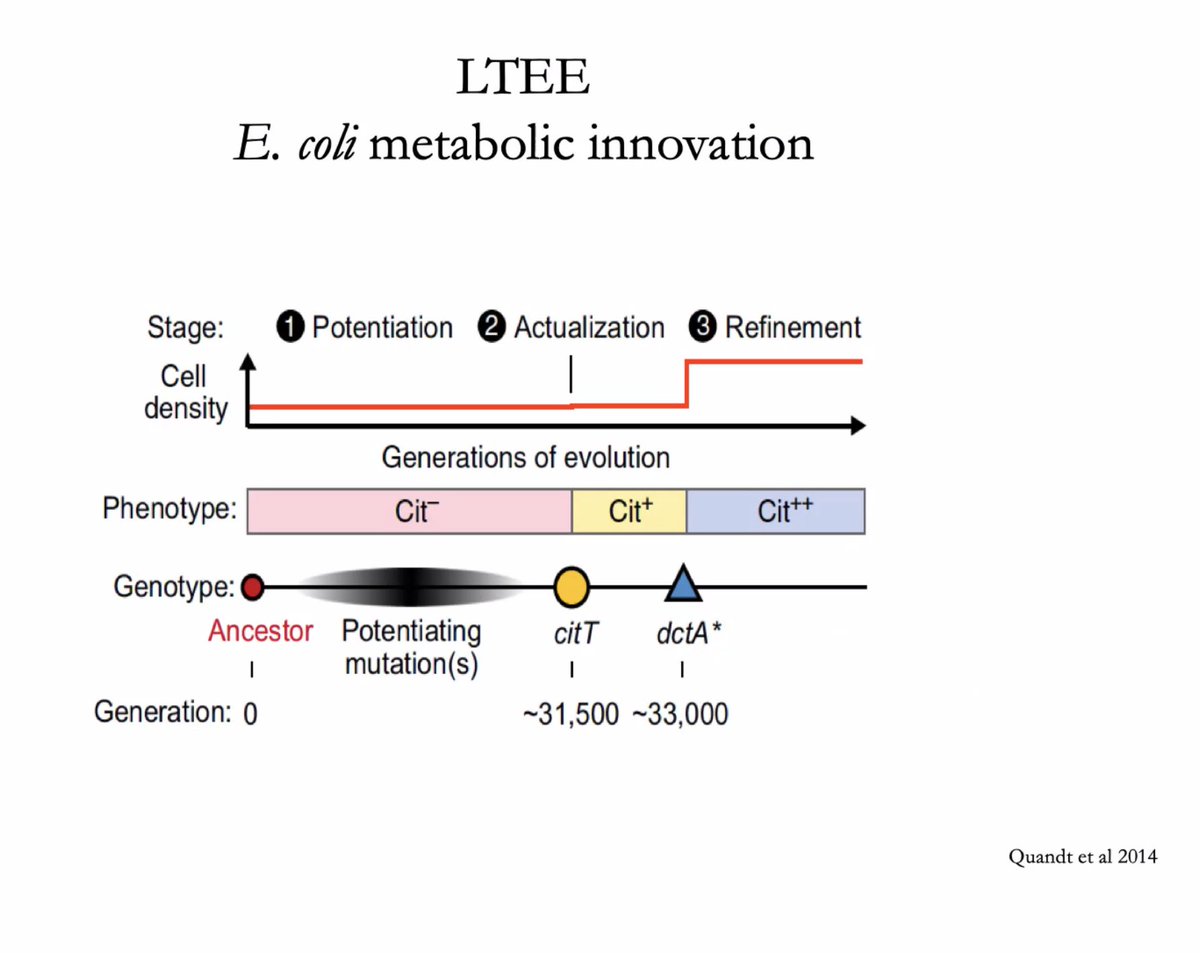

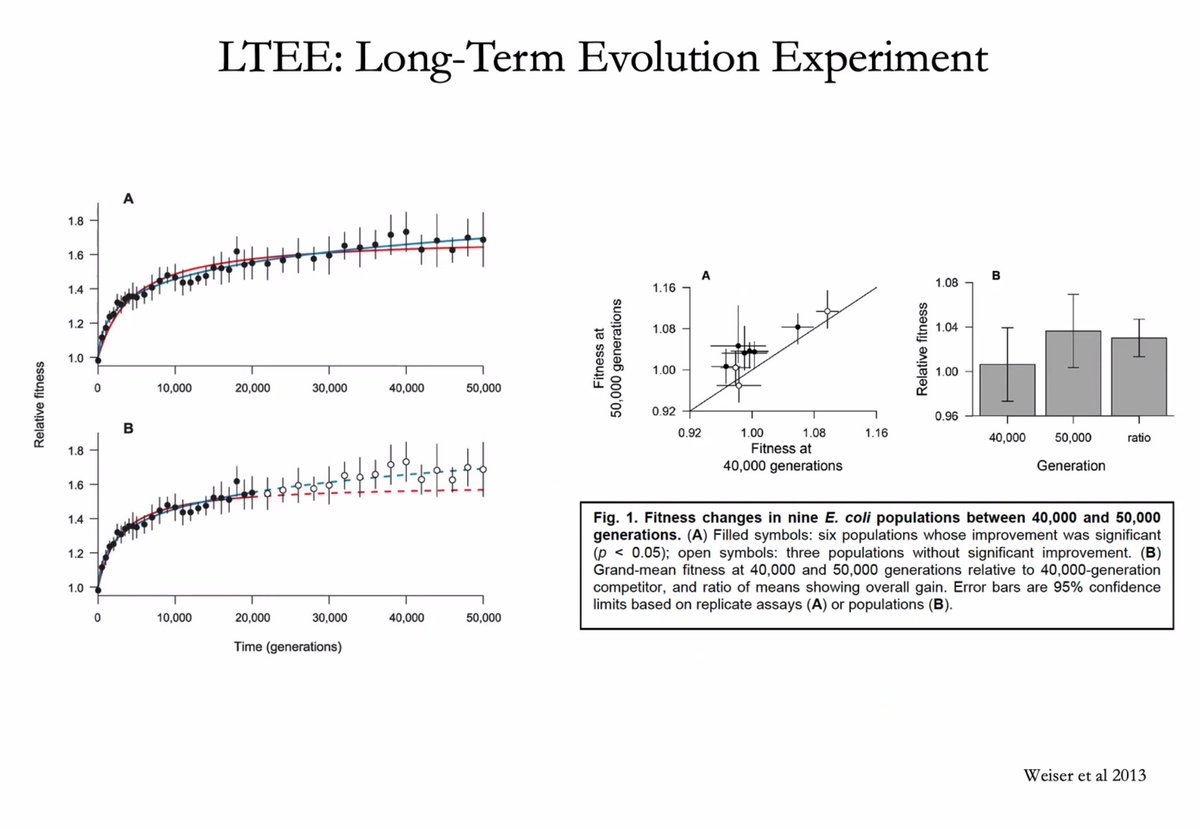

On @RELenski's Long-Term Evolution Experiment (#LTEE) — looking back thousands of #Ecoli generations, researchers found precursor "scaffolding" mutations that permitted later major metabolic innovations but were themselves not responsible for them.

(#Contingency is key...)

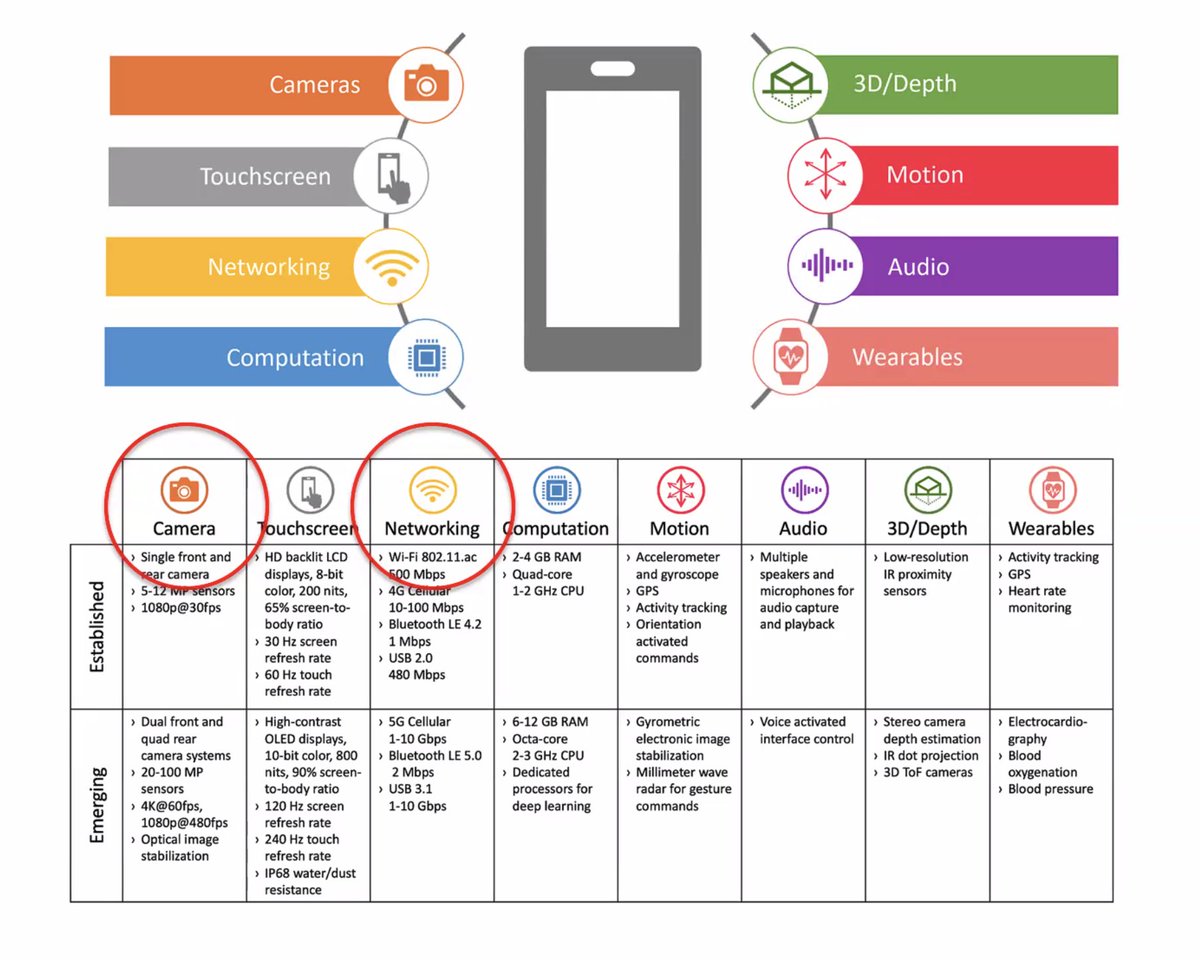

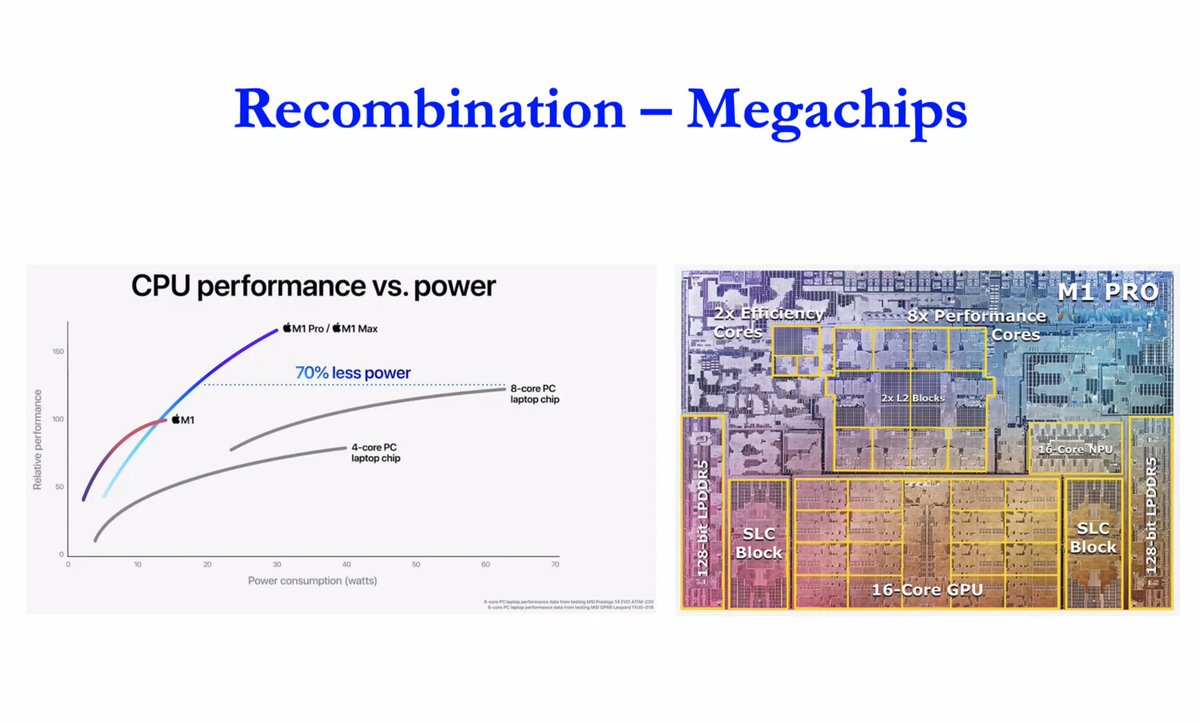

On the horizontal transfer and #recombination of traits in #biology and #technology:

"Because cell phones become a necessary part of what we are, for most of us, and we're willing to pay the price, it's difficult to think of most of these novelties as innovations."

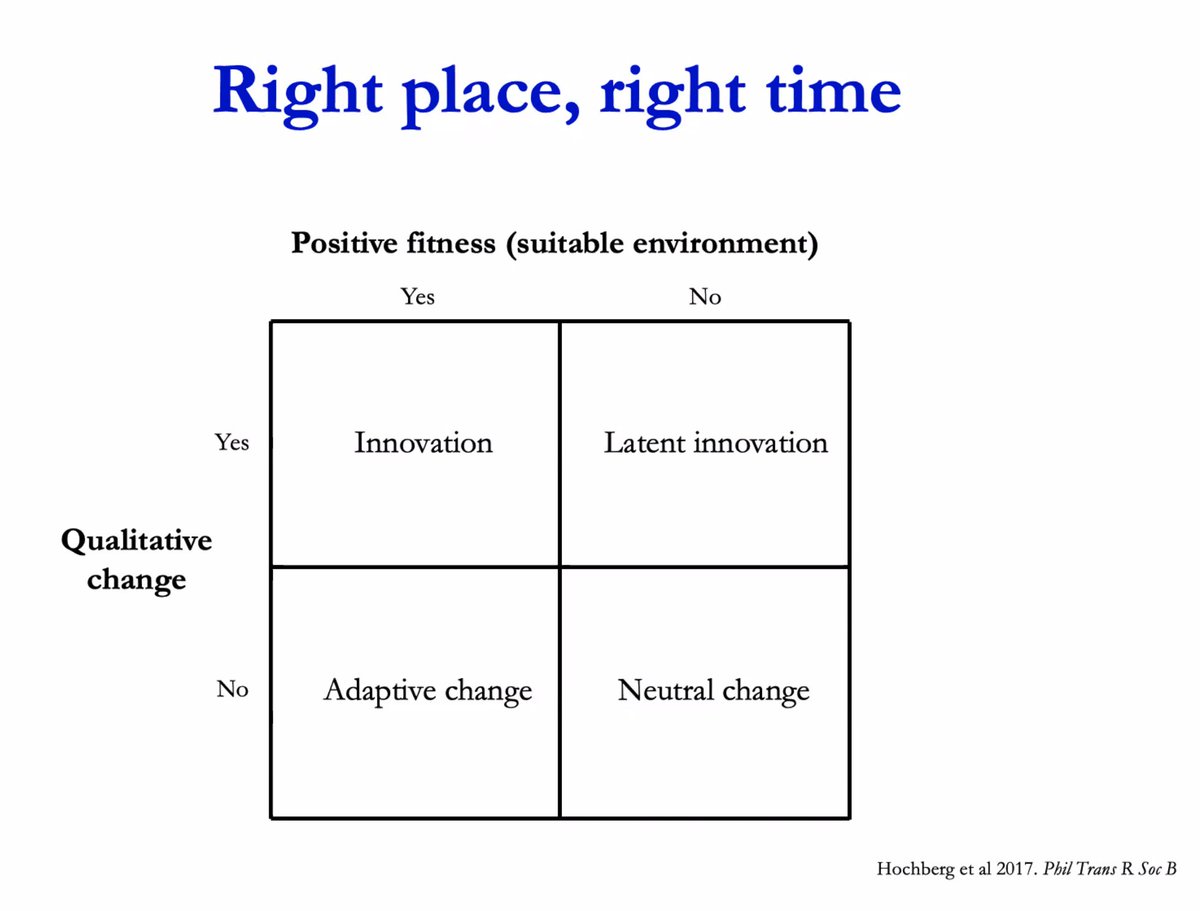

Sometimes major innovations never see the light of day because they're perceived as non-competitive.

"The idea is there, the patent is there, it's in the public domain, and numerous researchers have tried to revive it. But there has been no marketed device based on this."

1) "The cell phone did away with the bottom three. Internet has done away with the top three."

#CreativeDestruction

2) On the diffusion of #innovation via #EarlyAdopters:

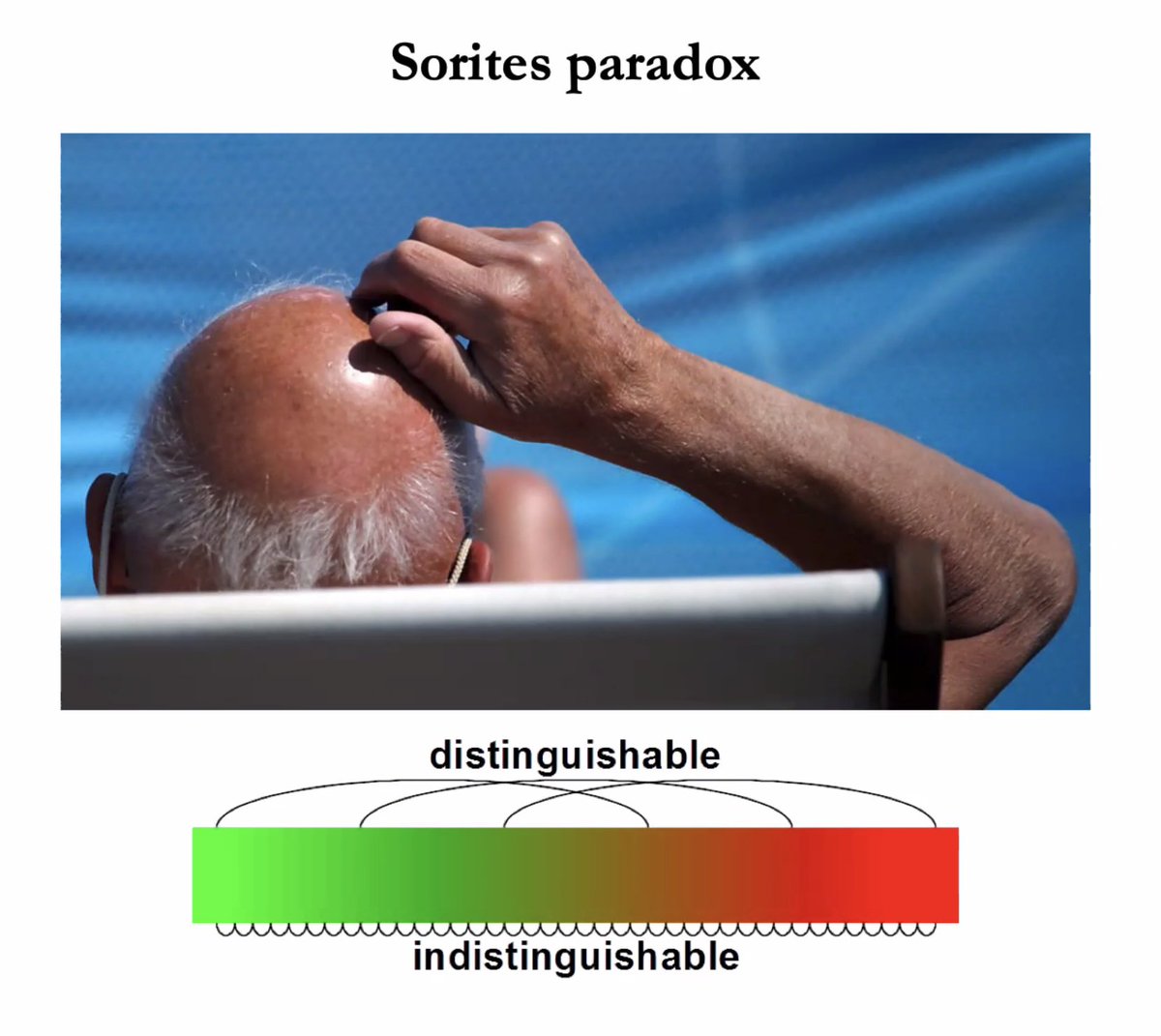

"Can we call an innovation something that invades only 10% of the market? 50%?)

@HochTwit speaking now:

"Perhaps there's a very #LongTail to the fixation of cell phone cameras, and a period of co-existence of [them with #DigitalCameras]."

(Are true transformatory innovations becoming rarer and rarer, or is creative destruction almost never perfect and complete?)

#Evolution + #Tech

1) Why have US patent applications slowed asymptotically over the last decade?

More efficient harvesting of existing patents?

(Red line shows what would have happened had the 2008-2009 Great Recession not occurred.)

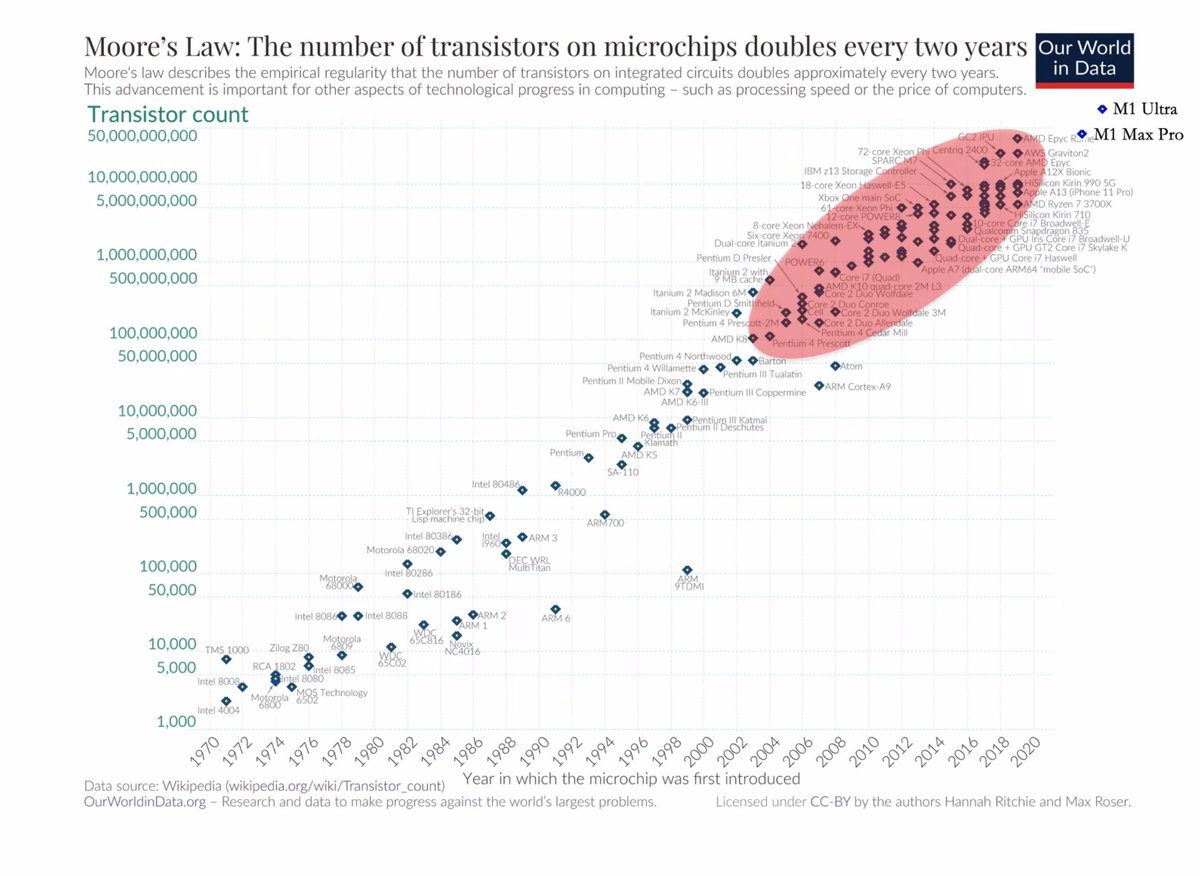

2) #MooresLaw running up against hurdles, then #Recombination

"Are we really running out of ideas?"

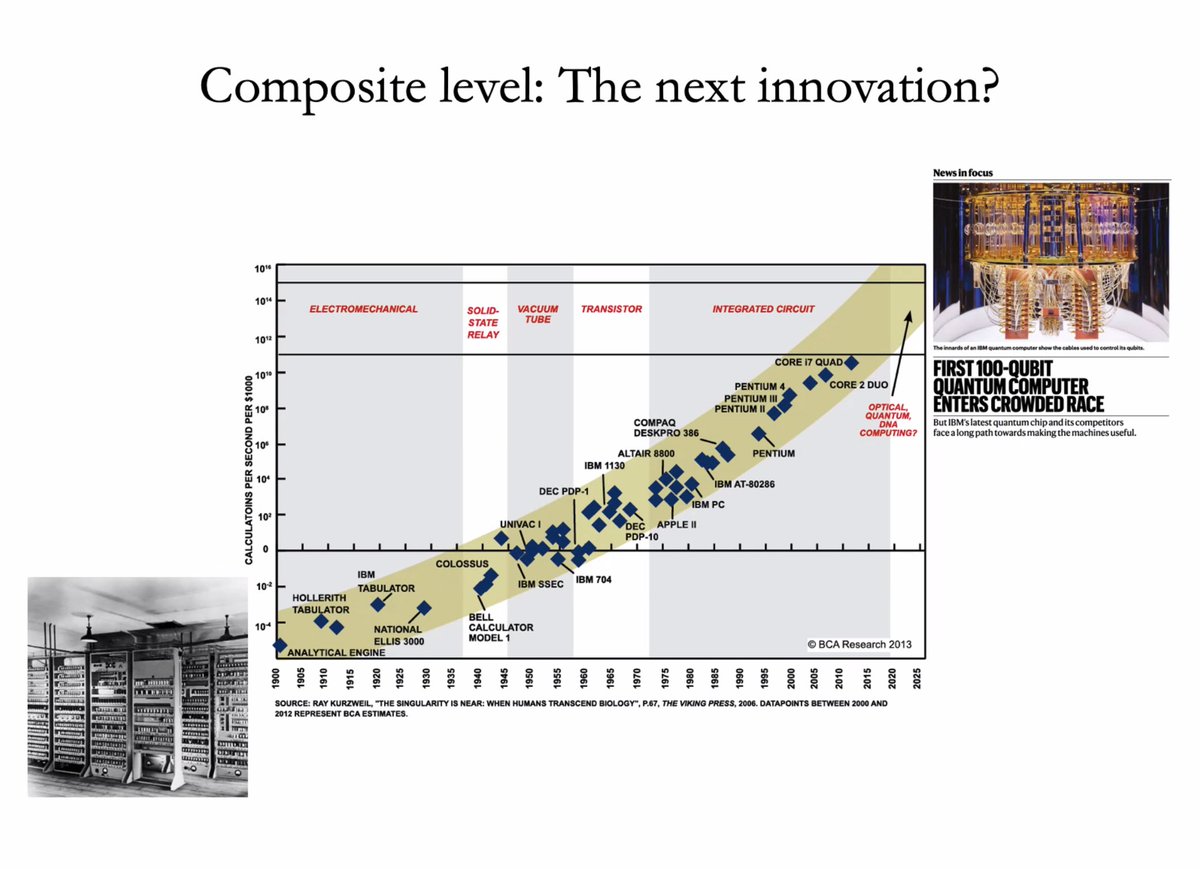

"What will be the next innovation? We see these macro transformations over the last 100 years. We do not know if #QuantumComputing will ever see the light of day."

Notable differences between bio & tech include goal orientation, theory...

"I think one of the ingredients we'll need for a first-principles theory of #innovation, first of all, is this notion of 'surprisal.'"

"To what extent are there leaps available, or are we exhausting what is potentially out there?"

Read @RSocPublishing B:

royalsocietypublishing.org/doi/full/10.10…
