Warning: strong opinions

The analysis is naive and the findings are ridiculous; the fact that it was published is a sign that when medical journal editors hear "deep learning AI" their brains stop working.

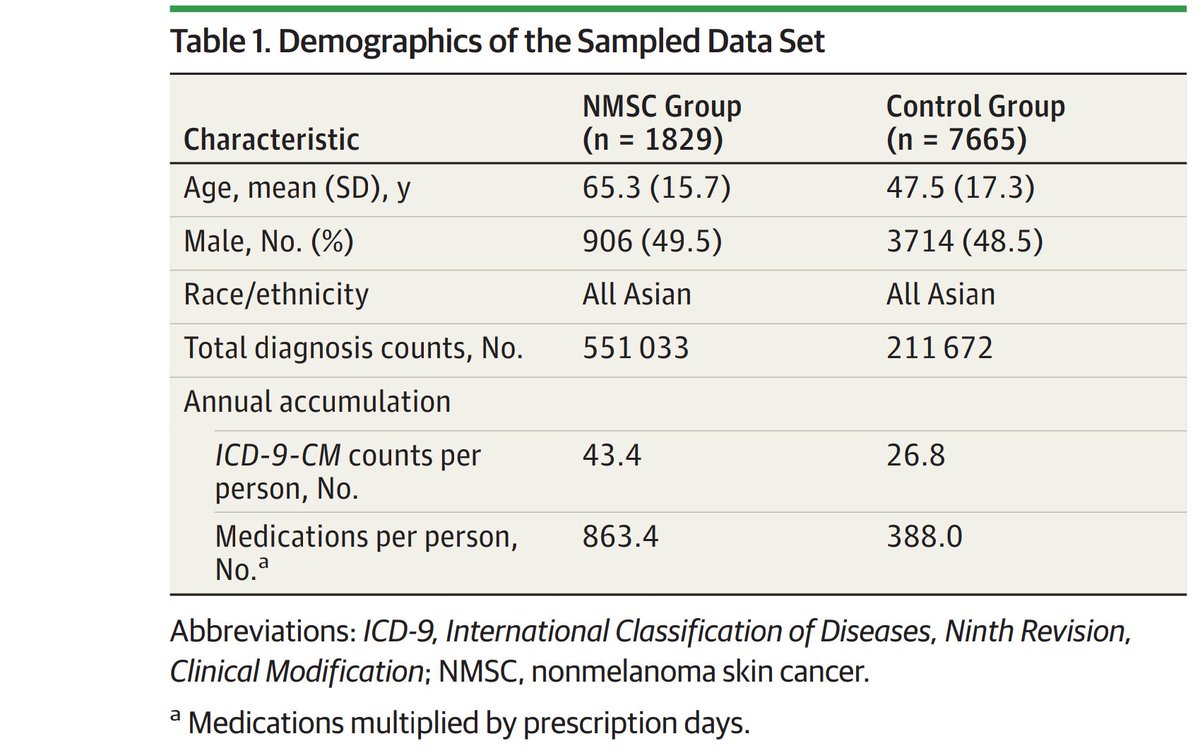

The claim: that you can predict skin cancer in the general population in the next year w AUC 0.9 (and 0.8+ based on medications alone)

That is nuts.

PHOTOGRAPHS OF SUSPICIOUS SKIN LESIONS have worse test characteristics.

Age doesn't matter much. Medications not known to be associated w skin cancer matter a lot.

Many of the meds are taken for years/decades, so couldn't have a huge impact on 1-year risk

Non-melanoma skin cancers are asymptomatic, slow growing, underdiagnosed. Any "prediction" model is more likely to be predicting detection than disease

No discussion. Even in limitations.

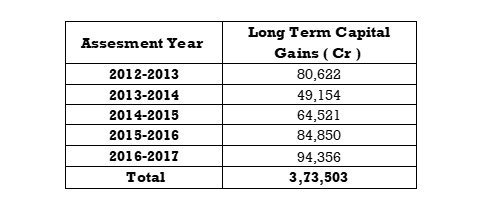

They supposedly have millions of records, find 1,829 cancer patients, then choose 7,665 random patients without cancer to compare to.

The controls are 20 years younger with half the number of conditions! #WTH

YOU CAN'T DO THAT

Sens and spec depend on population prevalence.

You can't create a fake population with 20% prevalence of undiagnosed cancer that will be detected next year!
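Where the ~20% comes from: a quick sketch using only the two counts quoted above (1,829 cases, 7,665 sampled controls). The variable names are mine, for illustration only.

```python
# Counts quoted in the thread: cancer patients and randomly chosen controls.
cases = 1829
controls = 7665

# "Prevalence" in the constructed study sample. This is set by how many
# controls were sampled, not by how common the cancer is in the population.
sample_prevalence = cases / (cases + controls)

print(f"Prevalence in study sample: {sample_prevalence:.1%}")
```

Sample more or fewer controls and this number moves, which is why it says nothing about real-world prevalence.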

Set aside 20% of the whole sample, run "prediction" on entire sample, and report how many who met cutoff actually had cancer diagnosed.

What is going on here?

I think the reviewers & editors were intimidated by AI/ML

How many VCs are funding healthcare-inexperienced entrepreneurs who are waving "deep learning" and "ML/AI" claims (that they themselves completely believe) in willful ignorance of what's gone before?

That's what has implications for using a test of given sens/spec in different populations.

Example: with sens 83% and spec 82%, if prevalence is 0.1%, then your positive predictive value is roughly 0.4% (8/1808)
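The arithmetic, as a minimal sketch via Bayes' rule. The `ppv` helper is a hypothetical name of mine, and the sens/spec values are just the example numbers above, applied to both populations for comparison. The exact formula lands a bit under 0.5% at 0.1% prevalence, same ballpark as the rounded 8/1808.

```python
def ppv(sens: float, spec: float, prev: float) -> float:
    """Positive predictive value: P(disease | positive test)."""
    true_pos = sens * prev                # diseased and flagged
    false_pos = (1 - spec) * (1 - prev)   # healthy but flagged
    return true_pos / (true_pos + false_pos)

# Example numbers from the thread: sens 83%, spec 82%.
sens, spec = 0.83, 0.82

# General population at 0.1% prevalence.
ppv_general = ppv(sens, spec, 0.001)

# The study's constructed sample: 1,829 cases vs 7,665 controls.
ppv_study = ppv(sens, spec, 1829 / (1829 + 7665))

print(f"PPV at 0.1% prevalence:      {ppv_general:.2%}")
print(f"PPV at study-sample mix:     {ppv_study:.1%}")
```

Same test, same sens/spec: about half the positives are real in the constructed sample, versus fewer than 1 in 200 at 0.1% prevalence.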

At @AledadeACO we're using these tools to predict mortality, no-shows, ED visits, renal failure. But you don't just "plug data into XGBoost"

Epi matters (and we're hiring)

Looking for someone who loves epidemiology/methods and is all-in on the @AledadeACO mission (good for patients, good for doctors, good for society)

hire.withgoogle.com/public/jobs/al…