which I've outlined a bit here link.medium.com/MV65AP9Xu2

and I've noticed some others doing it too (this deserves its own thread)

but let's spend a few tweets thinking about individual data points

(see what I did there?)

it takes way too much brain power to imagine it, and it's both common and important that shapes match.

it's even called "shape"
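a minimal sketch of what "shapes match" means, so you can check it instead of imagining it. this uses plain nested lists; `shape` and `can_matmul` are hypothetical helpers written for this example (not from any library), and they assume the lists are rectangular.

```python
def shape(nested):
    """Return the shape of a rectangular nested list as a tuple."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        # Descend into the first element; stop on an empty list.
        nested = nested[0] if nested else None
    return tuple(dims)


def can_matmul(a, b):
    """A matrix product a @ b needs a's last dimension to equal b's first."""
    return shape(a)[-1] == shape(b)[0]


data = [[1, 2, 3], [4, 5, 6]]           # 2 data points, 3 features each
weights = [[0.0] * 4 for _ in range(3)]  # 3 features in, 4 values out

print(shape(data))                # (2, 3)
print(shape(weights))             # (3, 4)
print(can_matmul(data, weights))  # True: 3 matches 3
print(can_matmul(weights, data))  # False: 4 does not match 2
```

printing the shape at each step is cheap, and it replaces a lot of mental bookkeeping.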

there should be way more emphasis on not imagining what's happening when it comes to linear algebra and hence ML

distill.pub/2018/building-…

en.m.wikipedia.org/wiki/Curse_of_…

so the average person is a meaningless concept because people are really complex (represented by many dimensions)
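a rough simulation of why the "average person" disappears as dimensions pile up. the assumptions are mine, not from any real data set: each trait is an independent uniform random number in [0, 1], and "average" on a trait means landing in the middle 30% band. the function name and the band are made up for this sketch.

```python
import random


def fraction_average_on_every_trait(n_people, n_traits, band=(0.35, 0.65), seed=0):
    """Fraction of simulated people who fall in the middle band on ALL traits."""
    rng = random.Random(seed)
    lo, hi = band
    hits = 0
    for _ in range(n_people):
        person = [rng.random() for _ in range(n_traits)]
        if all(lo <= trait <= hi for trait in person):
            hits += 1
    return hits / n_people


# In expectation the fraction is about 0.3 ** n_traits, so it collapses quickly.
for n_traits in (1, 3, 10):
    print(n_traits, fraction_average_on_every_trait(10_000, n_traits))
```

with one trait, roughly 3 in 10 simulated people are "average"; with ten traits, essentially nobody is.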

even if you don't end up showing them in your vis, you damn sure better take a look at them yourself

not necessarily quantified self, any data set that interests you.

bl.ocks.org/enjalot/c1f459…

it's also about one way to compute similarity

the problem is we don't have good interfaces to tell the computer how to feel the difference

umap-learn.readthedocs.io/en/latest/para…

I've heard of 3 of them (euclidean, cosine and manhattan) and only kind of know why I'd choose between euclidean and cosine.

how do you get an intuition for that?
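one way to start: compute the three metrics by hand on tiny vectors and see where they disagree. this is a plain-Python sketch of the standard definitions, not UMAP's own implementation, and the example vectors are made up.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute differences along each dimension."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_distance(a, b):
    """1 minus the cosine of the angle between two vectors; ignores magnitude."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


small = [1.0, 1.0]
big = [10.0, 10.0]    # same direction as small, 10x the magnitude
other = [1.0, -1.0]   # same magnitude as small, perpendicular direction

for name, metric in [("euclidean", euclidean),
                     ("manhattan", manhattan),
                     ("cosine", cosine_distance)]:
    print(name, metric(small, big), metric(small, other))
```

euclidean and manhattan say `small` is far from `big` and close to `other`; cosine says the opposite, because it only looks at direction. so cosine fits when proportions matter more than totals, and euclidean fits when size matters too.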

should it find its place among the existing cluster/map? or should it be able to create a new cluster if it's substantially different?

both can be legit use cases

this is an open problem in ML + vis

also a fair bit of sampling individual data points and staring at them. this is where vis can make things much better.

in ML I think they call it hyperparameter search (sounds too sci-fi...)

either way it should be a first class thing
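at its simplest, a hyperparameter search is just trying every combination in a grid and looking at what comes out. a sketch of the enumeration step only: `n_neighbors`, `min_dist` and `metric` are real UMAP parameter names, but the values below are arbitrary picks for illustration, and `configurations` is a hypothetical helper. what you do with each configuration (fit, plot, stare at points) is left out.

```python
from itertools import product

# Illustrative values only; pick ranges that make sense for your data.
grid = {
    "n_neighbors": [5, 15, 50],
    "min_dist": [0.0, 0.1, 0.5],
    "metric": ["euclidean", "cosine"],
}


def configurations(grid):
    """Yield one dict per combination of parameter values in the grid."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))


configs = list(configurations(grid))
print(len(configs))  # 18 combinations: 3 * 3 * 2
print(configs[0])    # {'n_neighbors': 5, 'min_dist': 0.0, 'metric': 'euclidean'}
```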

I owe many links to all of their work if this turns into a blog post

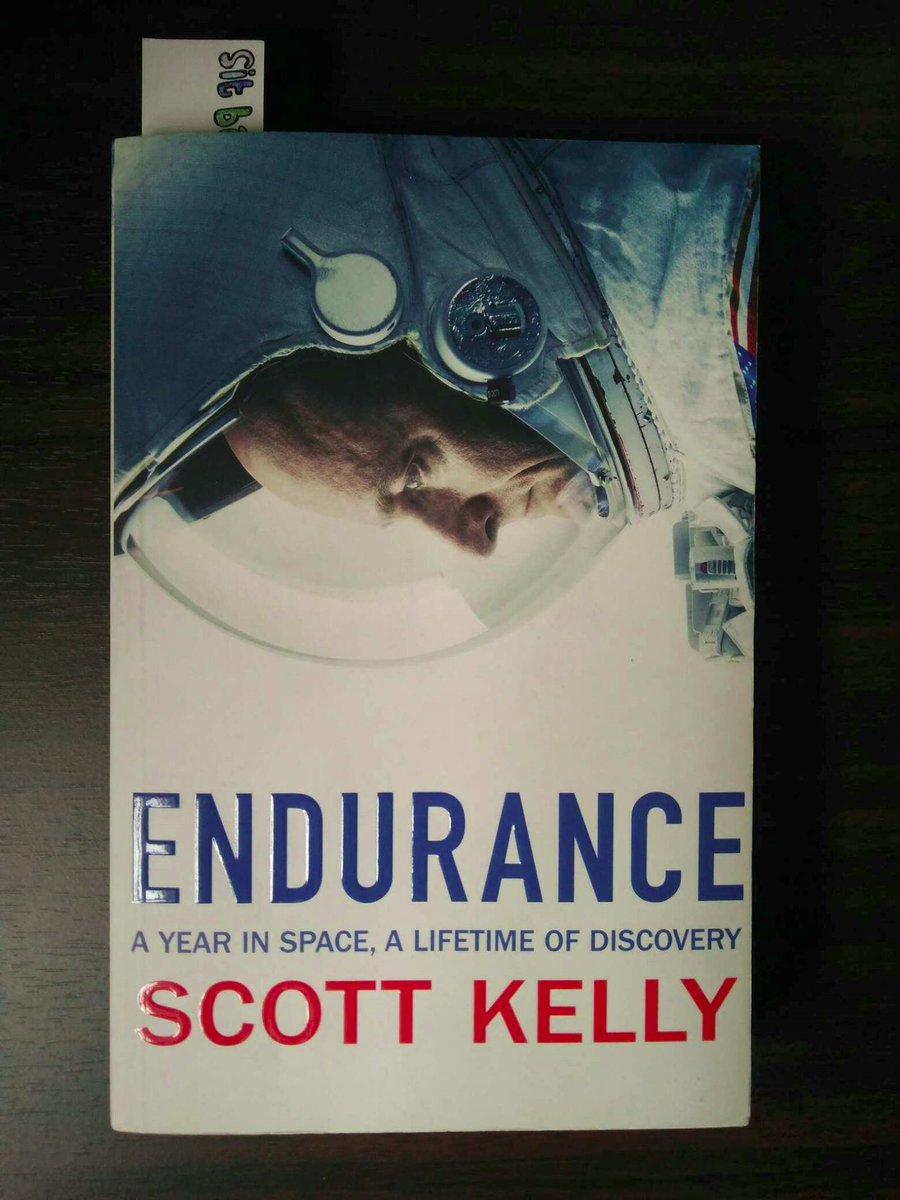

it is a fun read and will give you a more first-principles approach going forward, whatever data you work with