How to document the hardships happening right now (whether by photo or video) 👇

Escalating crisis as people stand up to #MyanmarCoup. It's critical to document repression. Here's how to do it with a phone. #WhatIsHappeningInMyanmar #HearTheVoiceOfMyanmar #CivilDisobedienceMovement

How to take photos and videos of current events that are useful as evidence

More detail on making #Video of #MyanmarCoup actions stronger as evidence (in Burmese). Follow @WITNESS_Asia for more info. #WhatIsHappeningInMyanmar

Full guide PDF bubbles.sevensnails.com/Guides_Tips_Re…

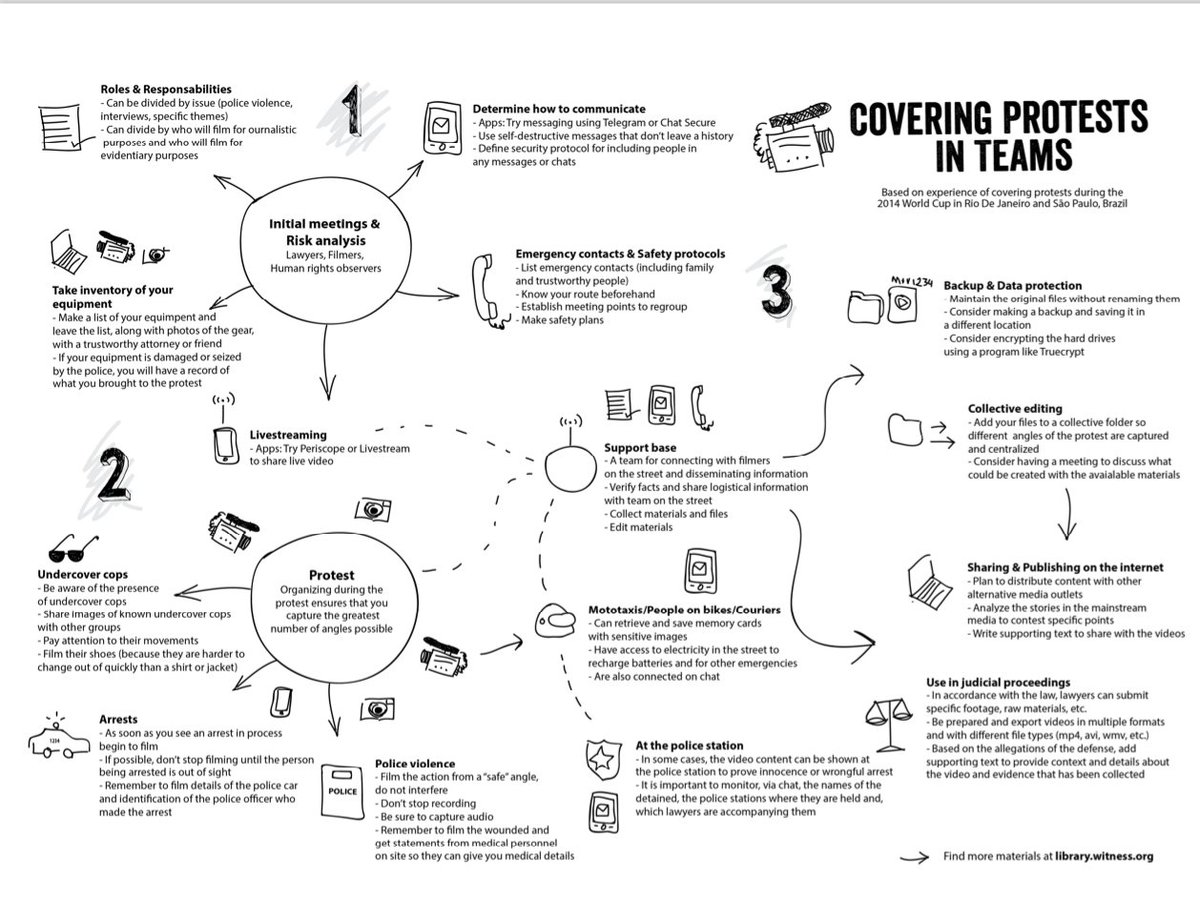

How to film protests as a team. If you are #filming #MyanmarCoup, think about how to do it as a team: this simple infographic provides guidance on documenting #protests --> library.witness.org/product/infogr… Follow @WITNESS_Asia for more info.

#WhatsHappeningInMyanmar #HearTheVoiceOfMyanmar

Document #humanrights abuses during enforced #InternetShutdowns using our guidelines 👇 #MyanmarCoup #WhatsHappeningInMyanmar. Follow @WITNESS_Asia for more info. Burmese translation coming soon.

blog.witness.org/2020/02/docume…

Are you using #Facebook Live to film #NightArrests or #MyanmarCoup protests? Tips: understand the risks, keep yourself and others safe, prepare, film the details, explain clearly, and protect your videos. More tips from @WITNESS_Asia. #WhatIsHappeninginMyanmar

#internetshutdown? You can still interview witnesses. Here are @WITNESS_Asia tips to protect #Anonymity and conceal the identity of people in videos about #MyanmarCoup 👇🏾 Don't release footage publicly if it isn't safe; share it directly with media or a trusted ally.

library.witness.org/product/concea…

#PeopleCannotSleepWellAtNight

To slow the spread of #Misinformation, follow @WITNESS_Asia tips to help you double-check #Images and #Videos you see online: library.witness.org/product/visual…

#MyanmarCoup #WhatsHappeningInMyanmar #Verification #Feb15Coup

[resource developed last year in #COVID19 Response Hub.]