"Liquid and Solid #Brains: Mapping the #Cognition Space"

Today's SFI Seminar by Ext Prof @ricard_sole, streaming now — follow this 🧵 for highlights:

"Why #brains? Brains are very costly...it seems like they are not a very good idea to bring complex cognition to a #biosphere that just needs simple replicators."

"I also want to explore the problem of #consciousness, which is around all the time..."

@ricard_sole

Two different views of #evolution:

- Stephen Jay Gould and an emphasis on #contingency

- Simon Conway Morris/Pere Alberch and an emphasis on #constraints and #convergence

--> "Replay the tape with different results vs. 'the logic of monsters' & 'life's solution'"

"We can use evolutionary robotics to evolve language...in principle we can evolve VERY different things. #SyntheticBiology...we have a wet lab, which sometimes I think was a mistake. But we can build alternatives that neither biology nor engineering has considered."

@ricard_sole

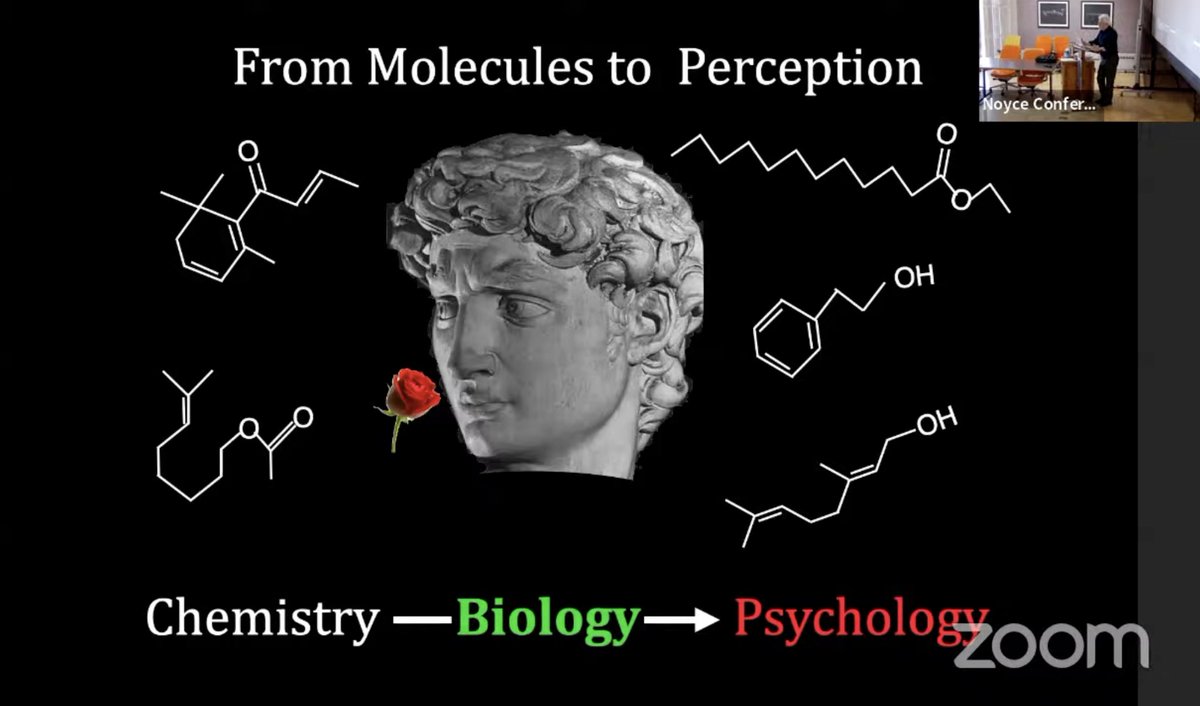

"What makes #biology different from #physics? #Computation. You can use #PhaseTransitions to understand how biology goes from #individuals to #collectives. But we don't have an overall idea of cognition, and what is important...what about '#LiquidBrains'?"

@ricard_sole

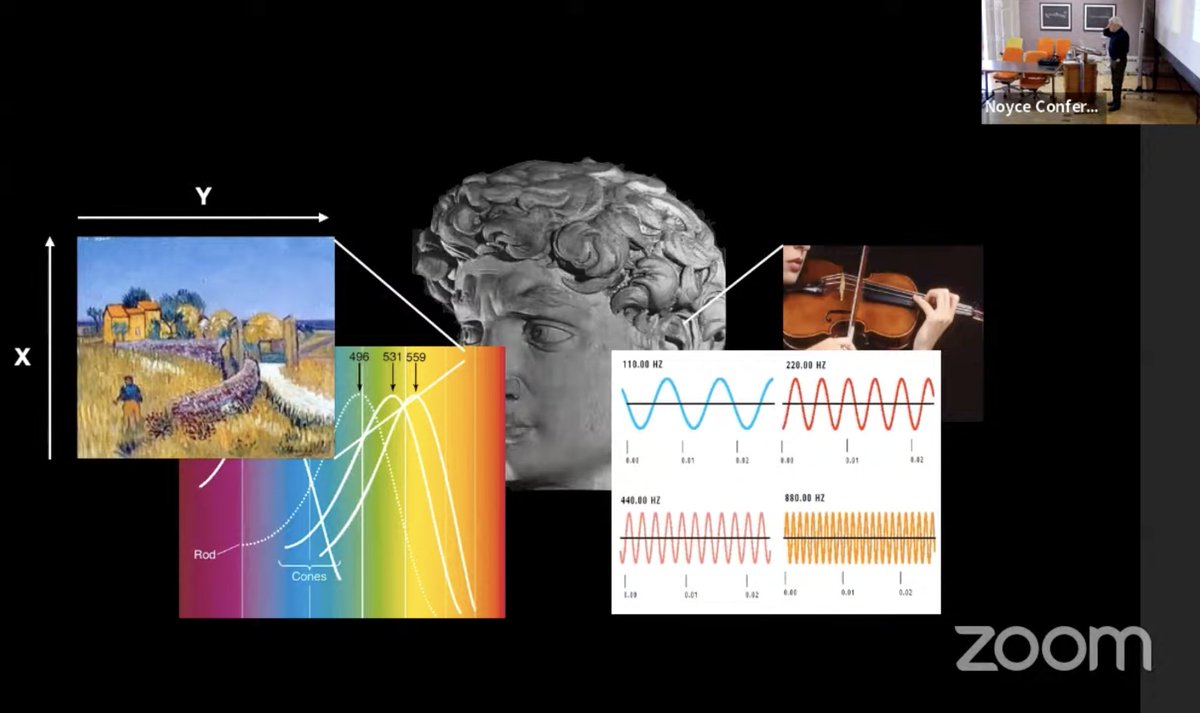

"When you think about the selective driver responsible for building brains, think about movement through an environment. Having a central system that integrates the information is really helpful."

@ricard_sole

Re: #SolidBrains and #GeneticAlgorithms, @ricard_sole outlines a history stretching back into the work of W. Pitts, J. J. Hopfield, S. A. Kauffman, and others to examine the origin of attractor neural network models — a.k.a., "An EXTREME simplification of the reality."

1) Thinking about #HebbsRule and memories as stored in the minima of a high-dimensional energy landscape, each with its own basin of attraction.

2) Is the brain operating at #TheEdgeOfChaos?

3) "When we look closely, #brains operate at a #critical state."

@ricard_sole
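For readers who want to see the attractor picture in item 1 concretely, here is a minimal sketch of a Hopfield-style network trained with Hebb's rule. It is not taken from the talk: the function names (`train_hebbian`, `energy`, `recall`), the eight-unit size, and the two toy patterns are all assumptions chosen for illustration.

```python
import numpy as np

def train_hebbian(patterns):
    """Build a symmetric weight matrix from +/-1 patterns using Hebb's rule."""
    patterns = np.asarray(patterns, dtype=float)
    n_units = patterns.shape[1]
    # Units that are active together across stored patterns get a positive weight.
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)  # no self-connections
    return weights

def energy(weights, state):
    """Hopfield energy; stored memories sit at local minima of this function."""
    state = np.asarray(state, dtype=float)
    return -0.5 * state @ weights @ state

def recall(weights, state, max_sweeps=20):
    """Update one unit at a time until no unit changes (a fixed point)."""
    state = np.array(state, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            new_value = 1.0 if weights[i] @ state >= 0 else -1.0
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two orthogonal toy patterns (hypothetical data, illustration only).
stored = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
])
weights = train_hebbian(stored)

noisy = stored[0].copy()
noisy[0] = -1  # corrupt one unit of the first memory
recovered = recall(weights, noisy)

print("recovered first memory:", np.array_equal(recovered, stored[0]))
print("energy before/after:", energy(weights, noisy), energy(weights, recovered))
```

In this toy setup the corrupted state rolls downhill in energy back to the stored pattern, which is the sense in which a memory acts as an attractor. Real cortex is of course far messier, which matches the "EXTREME simplification" caveat above.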

Re: #multicellular vs. single-celled brains.

1) Fixed-position #networks

2) Motile individual #replicators

3) #Dinoflagellates *with retina-bearing camera eyes* (!)

4) #SlimeMold Physarum "in principle, single-celled...able to search its environment with tubes"

@ricard_sole:

"If nature has found out solid and liquid [#brain] counterparts, is there something else, something we can engineer?"

"One of the things you observe is that the vast majority of ant colonies have to form nests, structures that come out of the collective mind."

@ricard_sole

"In natural systems you don't see colonies of ants made of individuals with HUGE brains. In @StarTrek there is this idea of #TheBorg, where the queen is very interesting with a large brain, but you don't see this. There is a tradeoff [between individual & collective cognition]."

1) "Swarms of birds are VERY close to a critical state."

2, 3) "In ant colonies, ants synchronize by touching each other...you have synchronization that is not very periodic."

4) "In information transfer between individuals, you have a peak...there's a critical density."

"Are plants intelligent? Do they have complex cognition? There's a lot of response, integration with the environment, that has to do with changing shapes...morphology is very important. But their memory is completely inaccessible to the plant."

@ricard_sole

Exploring "cognition space":

How do we pick the right axes?

How do we measure those variables?

Is development part of the story of building the system?

Are there empty spaces — voids in the morphospace?

"There may be fundamental physical constraints. But MAYBE..."

@ricard_sole

Back to the question of whether #convergence rules #evolution:

"#Birds have very different #brains than ours. But they do very similar things."

"In The Cave of Hands, it's only left hands, because they were using the right [to blow pigment]."

"What makes us different?"

"No machine today manages time."

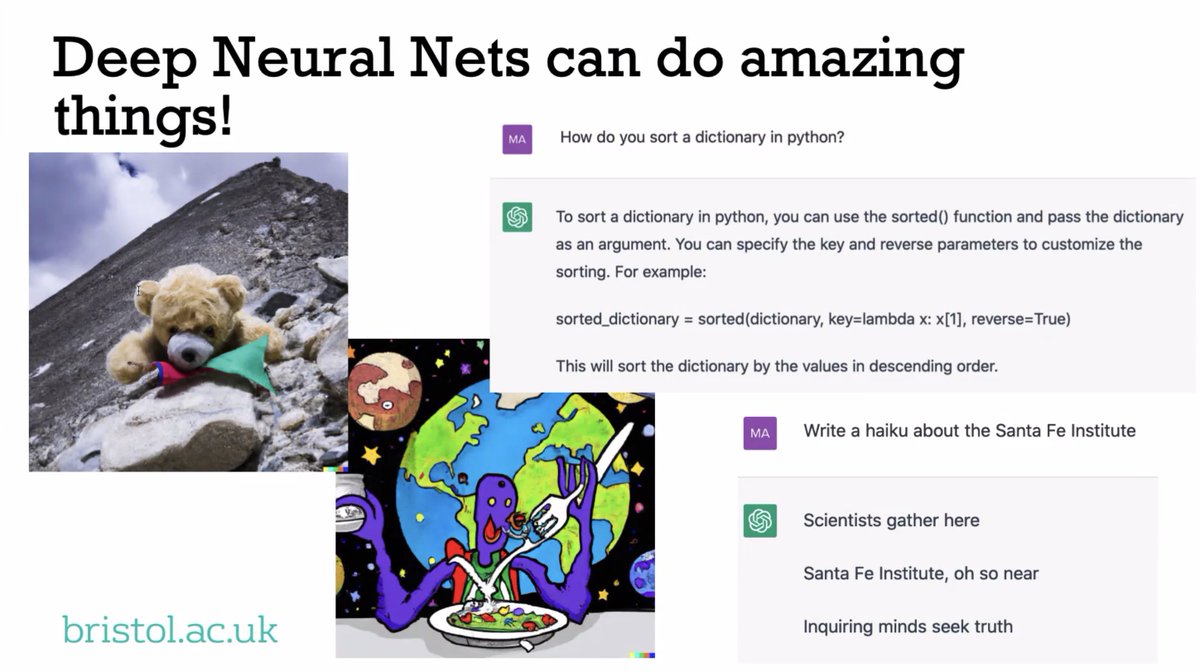

"You can see a kind of proto-language emerging in #robotics...you have two embodied agents programmed to look around and invent words to refer to objects or actions. Everything is complete with a rule that makes the agents agree about the words."

"You can see a kind of proto-language emerging in #robotics...you have two embodied agents programmed to look around and invent words to refer to objects or actions. Everything is complete with a rule that makes the agents agree about the words."

"To what extent should we have evolutionary rules that build the minds that we know as part of the story — as part of the preconditions for intelligence?"

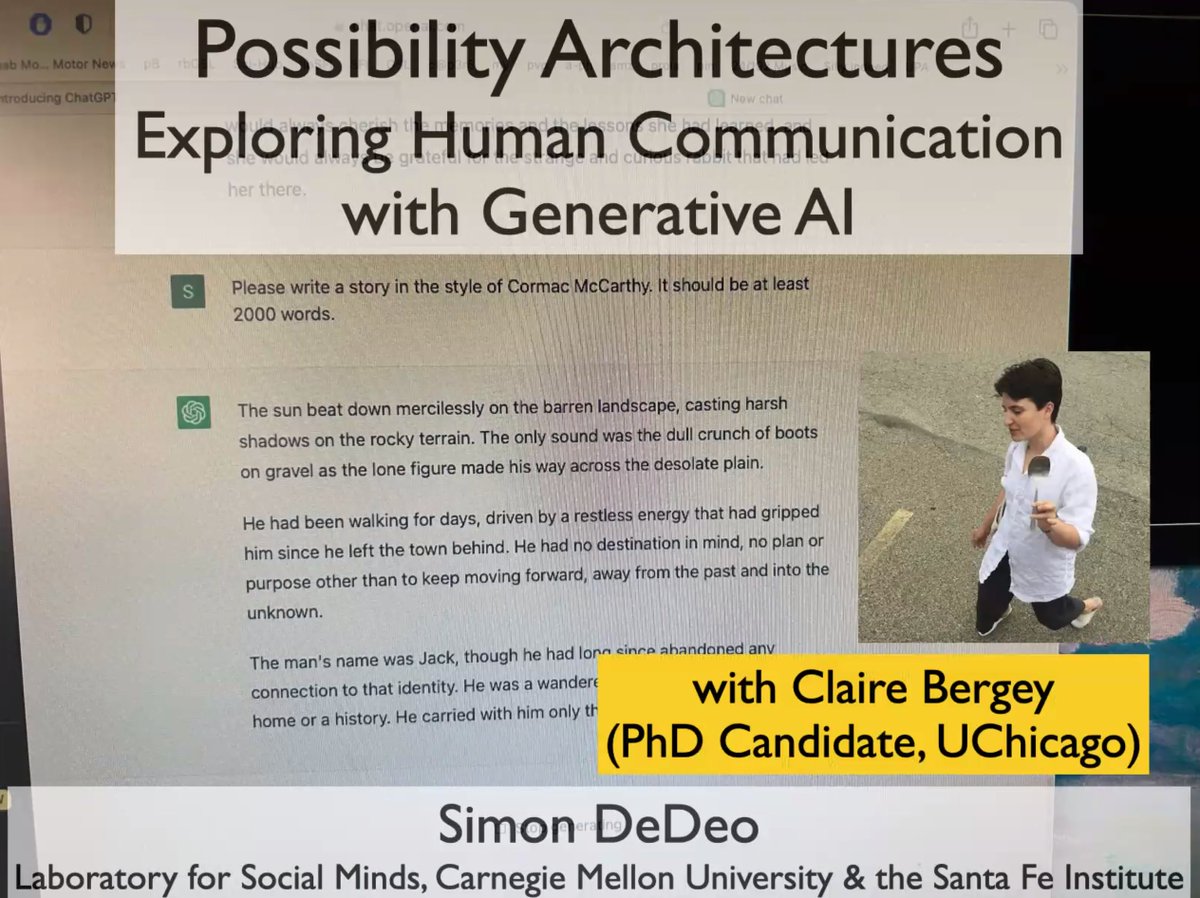

"@kevin2kelly makes the point that #AI will have intelligences that have nothing to do with ours..."

"@kevin2kelly makes the point that #AI will have intelligences that have nothing to do with ours..."

"...but when you build artificial #systems, all the time you are taking inspiration from #biology. I haven't seen anything that is really bizarre, that escapes from that."

@ricard_sole on design #constraints for #embodied #cognition:

"When it comes to #ExtendedMind(s) we think that humans are kind of outliers...you have things like #spiders who use their webs for #cognition, but [when it comes to a human-level extended phenotype], how do you 'jump' into that?"

@ricard_sole
