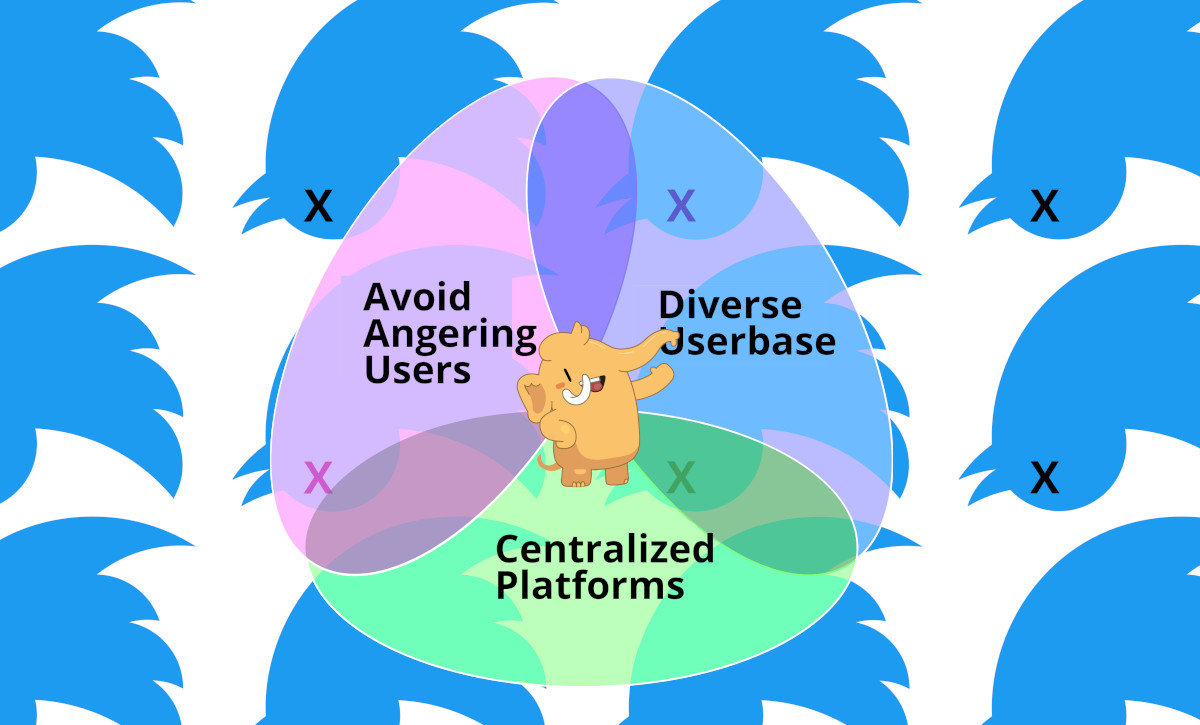

The classic #trilemma goes: "Fast, cheap or good, pick any two." The #ModeratorsTrilemma goes, "Large, diverse userbase; centralized platforms; don't anger users - pick any two." 1/

If you'd like an essay-formatted version of this thread to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

pluralistic.net/2023/03/04/pic… 2/

The Moderator's Trilemma is introduced in "Moderating the Fediverse: Content Moderation on Distributed Social Media," a superb paper from @ARozenshtein of @UofMNLawSchool, forthcoming in the journal @JournalSpeech, available as a prepub on @SSRN:

papers.ssrn.com/sol3/papers.cf… 3/

Rozenshtein proposes a solution (of sorts) to the Moderator's Trilemma: federation. De-siloing social media and breaking it out of centralized walled gardens. 4/

Then, recomposing it as a bunch of small servers run by a diversity of operators with a diversity of #ContentModeration approaches. The #Fediverse, in other words. 5/

In Albert Hirschman's classic treatise *Exit, Voice, and Loyalty,* stakeholders in an institution who are dissatisfied with its direction have two choices: #voice (arguing for changes) or #exit (going elsewhere). 6/

Rozenshtein argues that Fediverse users (especially users of #Mastodon, the most popular part of the Fediverse) have more voice *and* more #FreedomOfExit:

en.wikipedia.org/wiki/Exit,_Voi…

Large platforms - think #Twitter, #Facebook, etc - are very unresponsive to users. 7/

Most famously, Facebook polled its users on whether they wanted to be spied on. Faced with overwhelming opposition to commercial surveillance, Facebook ignored the poll result and cranked the #surveillance dial up to a million:

nbcnews.com/tech/tech-news… 8/

A decade later, Musk performed the same stunt, asking users whether they wanted him to fuck all the way off from the company, then ignored the #VoxPopuli, which, in this instance, was not #VoxDei:

apnews.com/article/elon-m… 9/

Facebook, Twitter and other #WalledGardens are designed to be sticky-traps, relying on high #SwitchingCosts to keep users locked within their garden walls, which are really prison walls. 10/

Internal memos from the companies reveal that this strategy is deliberate, designed to keep users from defecting even as the service degrades:

eff.org/deeplinks/2021… 11/

By contrast, the Fediverse is designed for ease of exit. With one click, users can export the list of the accounts they follow, block and mute, as well as the accounts that follow *them*. 12/

With one more click, users can import that data into any other Fediverse server and be back up and running with almost no cost or hassle:

pluralistic.net/2022/12/23/sem… 13/

Last month, "Nathan," the volunteer operator of mastodon.lol, announced that he was pulling the plug on the server because he was sick of his users' arguments about the new #HarryPotter game. 14/

Many commentators pointed to this as a mark against federated social media, "You can't rely on random, thin-skinned volunteer sysops for your online social life!"

mastodon.lol/@nathan/109836… 15/

But the mastodon.lol saga demonstrates the *strength* of federated social media, not its weakness. 16/

After all, 450 million Twitter users are also at the mercy of a thin-skinned sysop - but when he #enshittifies his platform, they can't just export their data and re-establish their social lives elsewhere in two clicks: 17/

Mastodon.lol shows us how, if you don't like your host's content moderation policies, you can exercise voice - even to the extent of making him so upset that he shuts off his server. 18/

Where voice fails, exit steps in to fill the gap, providing a soft landing for users who find the moderation policies untenable:

doctorow.medium.com/twiddler-1b5c9…

Traditionally, centralization has been posed as beneficial to content moderation. 19/

As Rozenshtein writes, a company that can "enclose" its users and lock them in has an incentive to invest in better user experience, while companies whose users can easily migrate to rivals are less invested in those users. 20/

And centralized platforms are more nimble. The operators of centralized systems can add hundreds of knobs and sliders to their back end and #twiddle them at will. They act unilaterally, without having to convince other members of a federation to back their changes. 21/

Centralized platforms claim that their most powerful benefit to users is extensive content moderation. As @TarletonG writes, “Moderation is central to what platforms do, not peripheral... [it] is, in many ways, the commodity that platforms offer":

yalebooks.yale.edu/book/978030026… 22/

Centralized systems claim that their enclosure keeps users safe - from bad code and bad people. Though Rozenshtein doesn't say so, it's important to note that this claim is wildly oversold. Platforms routinely fail at preventing abuse:

nbcnews.com/nbc-out/out-ne… 23/

And they also fail at blocking malicious code:

scmagazine.com/news/threats/a…

But even where platforms *do* act to "keep users safe," they fail, thanks to the Moderator's Trilemma. Setting speech standards for millions or even billions of users is an impossible task. 24/

Some users will *always* feel like speech is being underblocked - while others will feel it's overblocked (and both will be right!):

eff.org/deeplinks/2021… 25/

And platforms play very fast and loose with their definition of "malicious code" - as when #Apple blocked #OGApp, an #Instagram ad-blocker that gave you a simple feed consisting of just the posts from the people you followed:

pluralistic.net/2023/02/05/bat… 26/

To resolve the Moderator's Trilemma, we need to embrace #subsidiarity: "decisions should be made at the lowest organizational level capable of making such decisions."

pluralistic.net/2023/02/07/ful… 27/

For Rozenshtein, "content-moderation subsidiarity devolves decisions to the individual instances that make up the overall network." 28/

The fact that users can leave a server and set up somewhere else means that when a user gets pissed off enough about a moderation policy, they don't have to choose between leaving social media or tolerating the policy - they can choose another server in the federation. 29/

Rozenshtein asks whether Reddit is an example of this, because moderators of individual subreddits are given broad latitude to set their own policies and anyone can fork a subreddit into a competing community with different moderation norms. 30/

But Reddit's devolution is a matter of *policy*, not *architecture* - subreddits exist at the sufferance of Reddit's owners (and Reddit is about to go public, meaning those owners will include activist investors and large institutions who might not care about your community). 31/

You might be happy about Reddit banning /r/The_Donald, but if they can ban that subreddit, they can ban *any* subreddit. Policy works well, but fails badly. 32/

Moving subsidiarity into architecture, rather than human policy, means the fediverse can move from *antagonism* (the "zero-sum destructiveness" of current debate) to #agonism, where your opponents aren't enemies - they are "political adversaries":

yalelawjournal.org/article/the-ad… 33/

Here, Rozenshtein cites Aymeric Mansoux and @rscmbbng's "Seven Theses On The Fediverse And The Becoming Of Floss":

test.roelof.info/seven-theses.h… 34/

> For this to happen, different ideologies must be allowed to materialize via different channels and platforms. An important prerequisite is that the goal of political consensus must be abandoned and replaced with conflictual consensus... 35/

So your chosen Mastodon server "may have rules that are far more restrictive than those of the major social media platforms." 36/

But the whole Fediverse "is substantially more speech protective than are any of the major social media platforms, since no user or content can be permanently banned from the network. 37/

"And anyone is free to start an instance that communicates both with the major Mastodon instances and the peripheral, shunned instances."

A good case-study here is #Gab, a Fediverse server by and for far-right cranks, conspiratorialists and white nationalists. 38/

Most Fediverse servers have defederated (that is, blocked) Gab, but Gab is still there, and Gab has actually defederated from many of the remaining servers, leaving its users to speak freely - but only to people who want to hear what they have to say. 39/

This is the true meaning of "freedom of speech isn't freedom of reach." Willing listeners aren't blocked from willing speakers - but you don't have the right to be heard by people who don't want to talk to you:

pluralistic.net/2022/12/10/e2e… 40/

Fediverse servers are (thus far) nonprofits or hobbyist sites, and don't have the same incentives to drive "engagement" to maximize the opportunities to show advertisements. 41/

Fediverse applications are frequently designed to be #antiviral - that is, to prevent spectacular spreads of information across the system. 42/

It's likely that future Fediverse servers *will* be operated by commercial operators seeking to maximize attention in order to maximize revenue - but the users of these servers will still have the freedom of exit that they enjoy on today's #Jeffersonian volunteer-run servers. 43/

That means commercial servers will have to either curb their worst impulses or lose their users to better systems. 44/

I'll note here that this is a progressive story of the benefits of competition - not the capitalist's fetishization of competition for its own sake, but rather, competition as a means of disciplining capital. 45/

It can be readily complemented by discipline through regulation - for example, extending today's burgeoning crop of data-protection laws to require servers to furnish users with exports of their follow/follower data so they can go elsewhere. 46/

There's another dimension to decentralized content moderation that exit and voice *don't* address - moderating "harmful" content. 47/

Some kinds of harm can be mitigated through exit - if a server tolerates hate speech or harassment, you can go elsewhere, preferably somewhere that blocks your previous server. 48/

But there are other kinds of speech that must not exist - either because they are illegal or because they enact harms that can't be mitigated by going elsewhere (or both). 49/

The most spectacular version of this is #ChildSexAbuseMaterial (#CSAM), a modern term-of-art to replace the more familiar "child porn." 50/

Rozenshtein says there are "reasons for optimism" when it comes to the Fediverse's ability to police this content, though as he unpacked this idea, I found it much weaker than his other material. 51/

Rozenshtein proposes that Fediverse hosts could avail themselves of #PhotoDNA, #Microsoft's automated scanning tool, to block and purge themselves of CSAM, while noting that this is "hardly foolproof." 52/

If automated scanning fails, Rozenshtein allows that this could cause "greater consolidation" of Mastodon servers, for economies of scale to pay for more active, human moderation, which he compares to the consolidation of email that arose as a result of the #spam-wars. 53/

But the spam-wars have been catastrophic for email as a federated system and produced all kinds of opportunities for mischief by the big players:

doctorow.medium.com/dead-letters-7… 54/

Rozenshtein: "There is a tradeoff between a vibrant and diverse communication system and the degree of centralized control that would be necessary to ensure 100% filtering of content. The question, as yet unknown, is how stark that tradeoff is." 55/

It's much simpler when it comes to servers hosted by moderators who are complicit in illegal conduct: "the Fediverse may live in the cloud, its servers, moderators, and users are physically located in nations whose governments are more than capable of enforcing local law." 56/

That is, people who operate "rogue" servers dedicated to facilitating assassination, CSAM, or what-have-you will be arrested, and their servers will be seized.

Fair enough! 57/

But this butts up against one of the Fediverse's shortcomings: it isn't particularly useful for promoting illegal speech that *should* be legal, like the communications of #SexWorkers who were purged from the internet en masse following the passage of #SESTA/#FOSTA. 58/

When sex workers tried to establish a new home in the fediverse on a server called #Switter, it was effectively crushed. 59/

This simply reinforces the idea that code is no substitute for law, and while code can interpret bad law as damage and route around it, it can only do so for a short while. 60/

The best use of speech-enabling code isn't to avoid the unjust suppression of speech - it's to organize resistance to that injustice, including, if necessary, the replacement of the governments that enacted it:

onezero.medium.com/rubber-hoses-f… 61/

Rozenshtein briefly addresses the question of #FilterBubbles, and notes that there is compelling research that filter bubbles don't really exist, or at least, aren't as important to our political lives as once thought:

sciendo.com/article/10.247… 62/

Rozenshtein closes by addressing the role policy can play in encouraging the Fediverse. First, he proposes that governments could host their own servers and use them for official comms, as the EU Commission did following Musk's Twitter takeover:

social.network.europa.eu 63/

He endorses #interoperability mandates which would require dominant platforms to connect to the fediverse (facilitating their users' departure), like the ones in the EU's #DSA and #DMA, and those proposed in US legislation like the #ACCESSAct:

eff.org/deeplinks/2022… 64/

To get a sense of how that would work, check out #InteroperableFacebook, a video and essay I put together with @EFF to act as a kind of #DesignFiction, in the form of a user manual for a federated, interoperable Facebook:

eff.org/interoperablef… 65/

He points out that this kind of mandatory interop is a preferable alternative to the unconstitutional (and unworkable!) speech bans proposed by #Florida and #Texas, which limit the ability of platforms to moderate speech. 66/

Indeed, this is an either-or proposition - under the terms proposed by Florida and Texas, the Fediverse *couldn't* operate. 67/

This is likewise true of proposals to eliminate #Section230, the law that immunizes platforms from liability for most unlawful speech acts committed by their users. 68/

While this law is incorrectly smeared as a gift to #BigTech, it is most needed by small services that can't possibly afford to monitor everything their users say:

techdirt.com/2020/06/23/hel… 69/

One more recommendation from Rozenshtein: treat interop mandates as an alternative (or adjunct) to antitrust enforcement. 70/

Competition agencies could weigh interoperability with the Fediverse by big platforms to determine whether to enforce against them, and enforcement orders could include mandates to interoperate with the Fediverse. 71/

This is a much faster remedy than break-ups, which Rozenshtein is dubious of because they are "legally risky" and "controversial."

To this, I'd add that even for people who would welcome break-ups (like me!) they are *sloooow*. The breakup of #ATT took *69 years*. 72/

By contrast, interop remedies would give relief to users *right now*:

onezero.medium.com/jam-to-day-46b… 73/
