Looking for Scrolly Tales? Check out these recent candidates

Feb 19

Read 5 tweets

1).

“In the wars of the 21st century, mercenary work is booming, whether in Sudan, Congo, Yemen, or Ukraine. Soon after the Russian invasion of Feb. 24, 2022, a brigade of international volunteers was also formed on the Ukrainian side.

2).

The Ukrainian government has also hired professional fighters abroad, including in Colombia.

All these men acted of their own free will and knew what they were getting into.

3).

Russia's recruitment campaign for the dream of a Russian neo-empire, however, relied on coercion and discrimination from the start. The poorer and "the others" were to go to the front, while the urban middle and upper classes were to be spared conscription for as long as possible.

Feb 19

Read 7 tweets

Once again,

▶️ Frohnmaier (AfD) +

3 to 4 other AfD members are squandering our tax money on a trip to the USA.

▶️ Shortly before the Baden-Württemberg election, they are attending the March 3-5 event

"Turning Point Action".

en.wikipedia.org/wiki/Turning_P…

(election campaign organization)

stuttgart.t-online.de/region/stuttga…

"Turning Point Action" is a sister organization of Turning Point (TPUSA) and a

▶️ right-wing populist organization founded by Charlie Kirk.

▶️ Turning Point USA is the de facto youth organization of the MAGA movement.[4] de.wikipedia.org/wiki/Turning_P…

In 2018, the Southern Poverty Law Center's Hatewatch documented TPUSA's ties to

‼️ white supremacists.[28][29]

▶️ It describes the organization as alt-lite.

In 2021, TPUSA and Turning Point Action promoted

▶️ false claims about the safety

Feb 19

Read 33 tweets

LETTER FROM THE ASYLUM

The letter to God written by a "madman" (center) at the Elazığ asylum, who died in 1965...

“I, from the globe of the world, the township of Türkiye and the village of Urfa, one of the residents of the (El-Aziz -- Elazığ) Asylum (Mental Health Hospital); of unimportant name and worthless body, +++++

a helpless and forlorn, powerless servant, awaiting his guest Azrael in his final moments, submit this, my last petition, through the Chief Physician's office to the divine court of the Judge of Judges:

Feb 19

Read 10 tweets

Let’s say you’re a pastor in Virginia and want to get politically involved against the left. Here’s what I’ve learned:

1. Build loose ties w/ like-minded pastors who see wickedness & want it stopped. Group chats > email. Have 1 “king” admin w/ access + unilateral removal power.

2. Have a name when you act. Brand your coalition around a specific bill fight or a broader vision (ex: promoting Christianity in VA). Names rally ppl + clarify purpose.

Feb 19

Read 5 tweets

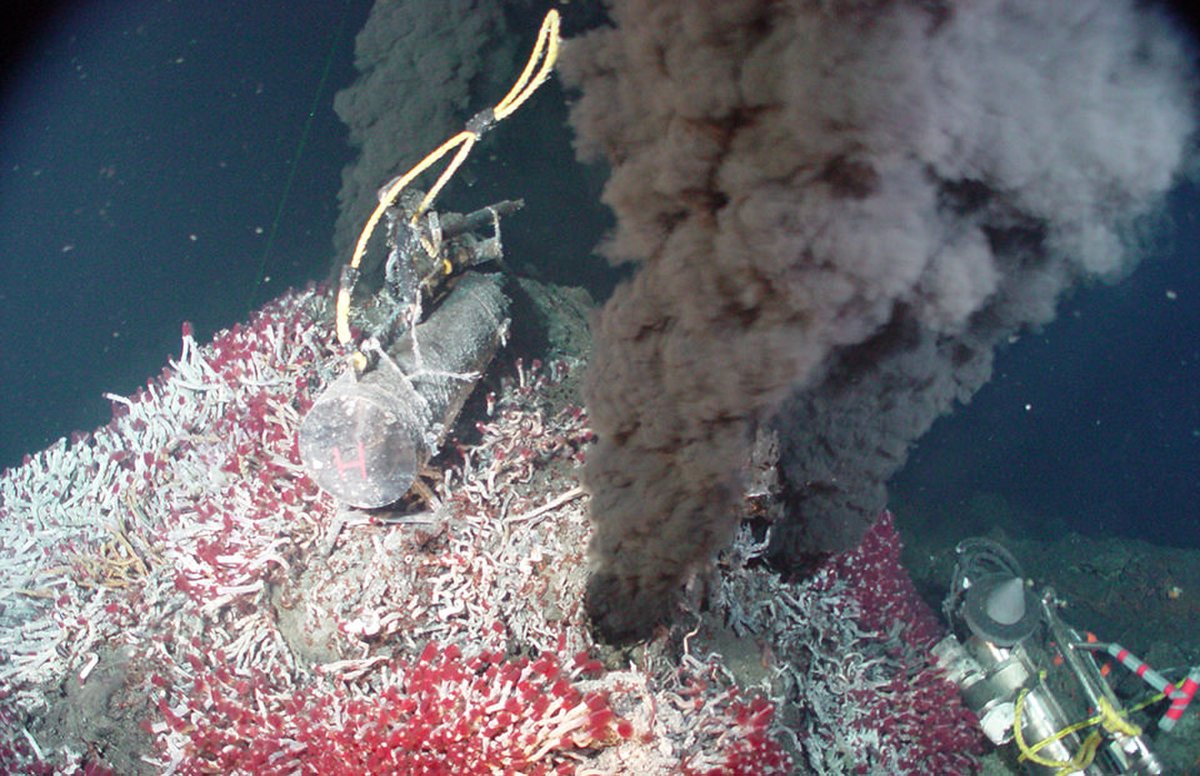

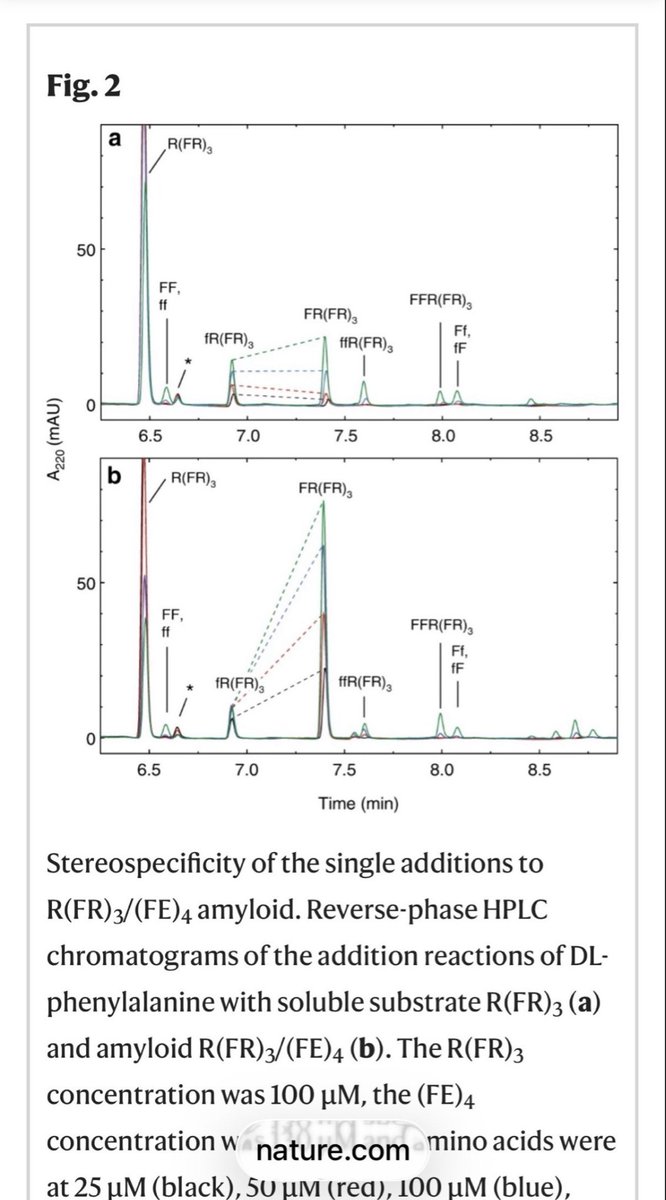

Life’s chemical building blocks are almost all one “handed”: chirality

How’d that occur without pre existing cell machinery?

In this study, mixed chirality building blocks > chains that select & amplify 1 chirality (capital letters) over the other (lower case), without cells👇

One limitation of this experiment: the chains self-assembled from building blocks of mixed chirality,

but they were catalyzed by a fiber of building blocks (called amyloids) that self-assembled from only one chirality

However, follow up studies showed building blocks of mixed chirality form amyloids, too

& the amyloids that form with homochiral stretches are more stable

Feb 19

Read 16 tweets

The people of Israel are called upon to contribute thirteen materials—gold, silver, and copper; blue-, purple-, and red-dyed wool; flax; goat hair; animal skins; wood; olive oil; spices; and gems—out of which HaShem says to Moshe,

2)

'They shall make for Me a Sanctuary, and I shall dwell amidst them.'

On the summit of Mount Sinai, Moshe is given detailed instructions on how to construct this dwelling for HaShem so that it can be easily dismantled, transported, and

3)

Feb 19

Read 67 tweets

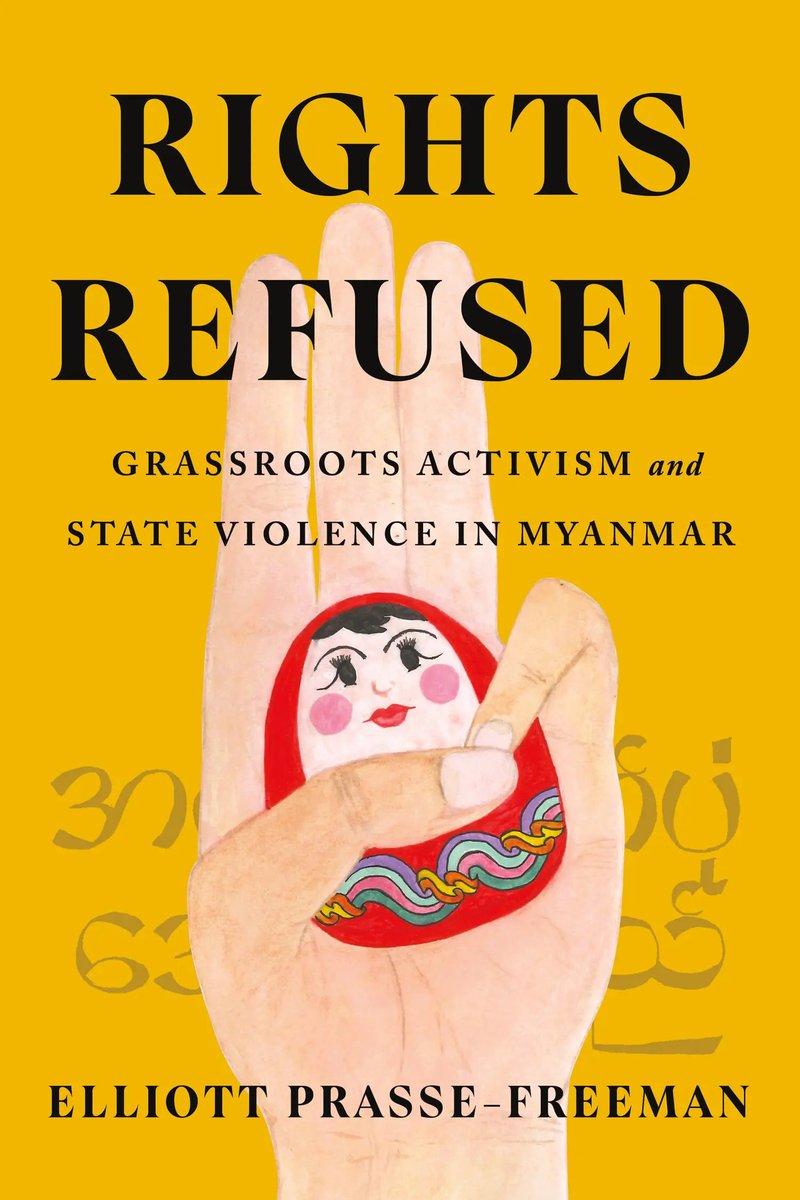

I read the excellent book Rights Refused: Grassroots Activism and State Violence in Myanmar by Prasse-Freeman.

This scholarly study describes the features of the political regime in Myanmar and how different groups of people live under this regime and resist it.

Thread

There is a lot of theory here, but there are also interesting ethnographic observations. Prasse-Freeman studied Myanmar from 2004 to 2019 and then, after the military coup in 2021, added some material to the book after the fact.

The book was hard for me to read, not only because I still do not read English very confidently, and not so much because of the complexity of the material, as because of the specific terminology the author uses.

Feb 19

Read 7 tweets

1/ The barrel of Russia's troubled AK-12 assault rifle bends after intensive use and its trigger mechanism often breaks, according to a Russian warblogger. He says that AK-12s are frequently issued in defective condition, requiring soldiers to buy expensive parts to fix them. ⬇️

2/ The AK-12 has had a troubled history since its launch in 2018 as a replacement for the AK-74M. Described by some as "the worst AK", it has had multiple design, reliability, and functional deficiencies, which led Kalashnikov to issue a simpler "de-modernised" version in 2023.

3/ "No Pasaran" writes:

"Someone asked me why I don't like the AK-12.

Excuse me.

Barrel bending. I've never seen this problem on a Soviet AK, but I've seen it with my own eyes on a Russian-made AK-12."

Feb 19

Read 11 tweets

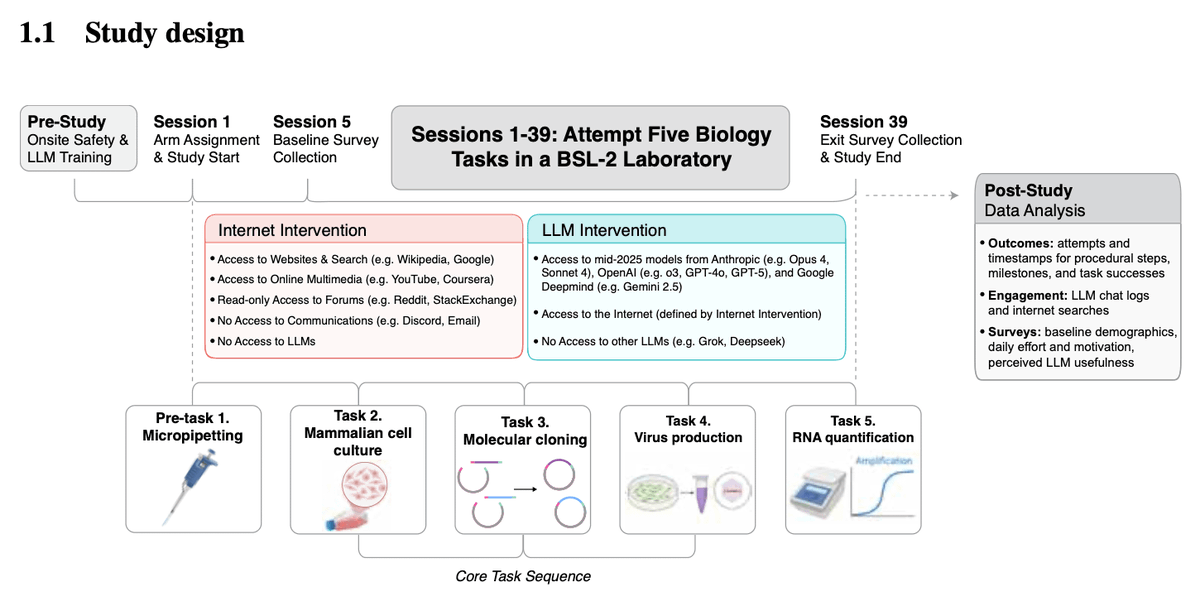

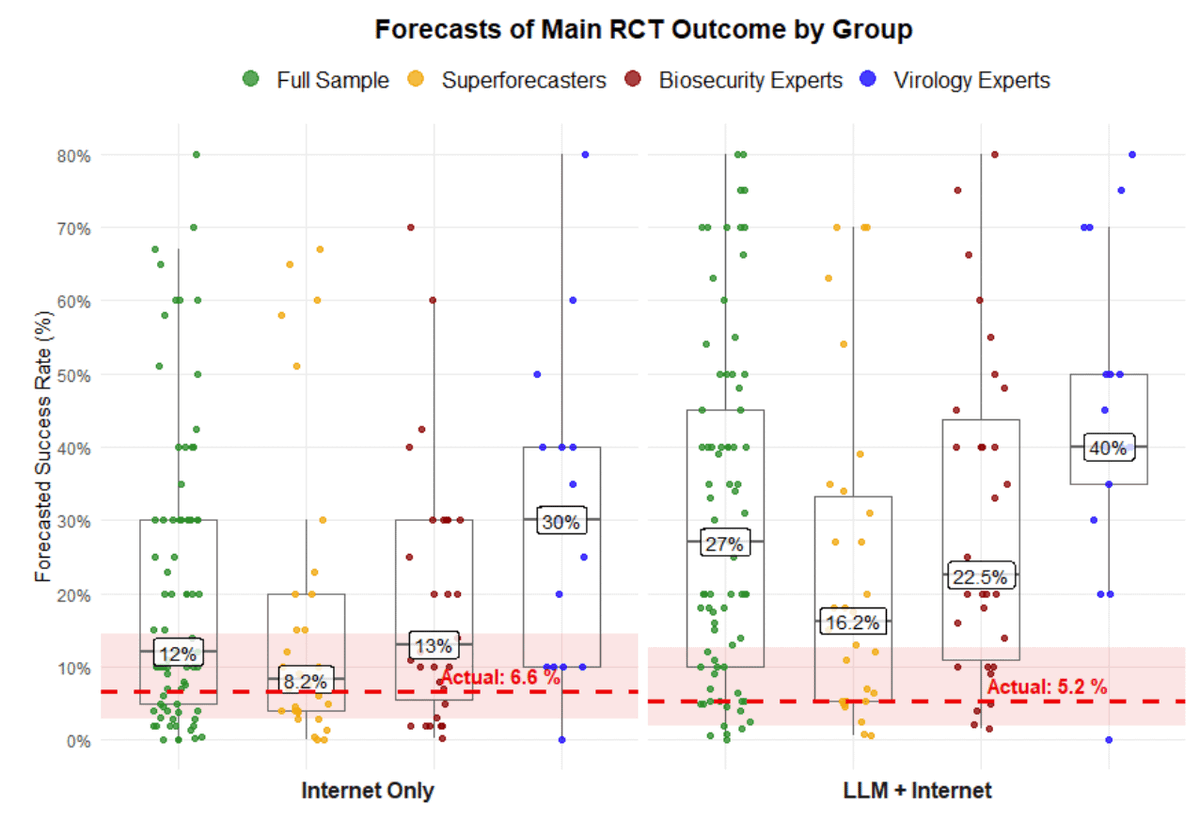

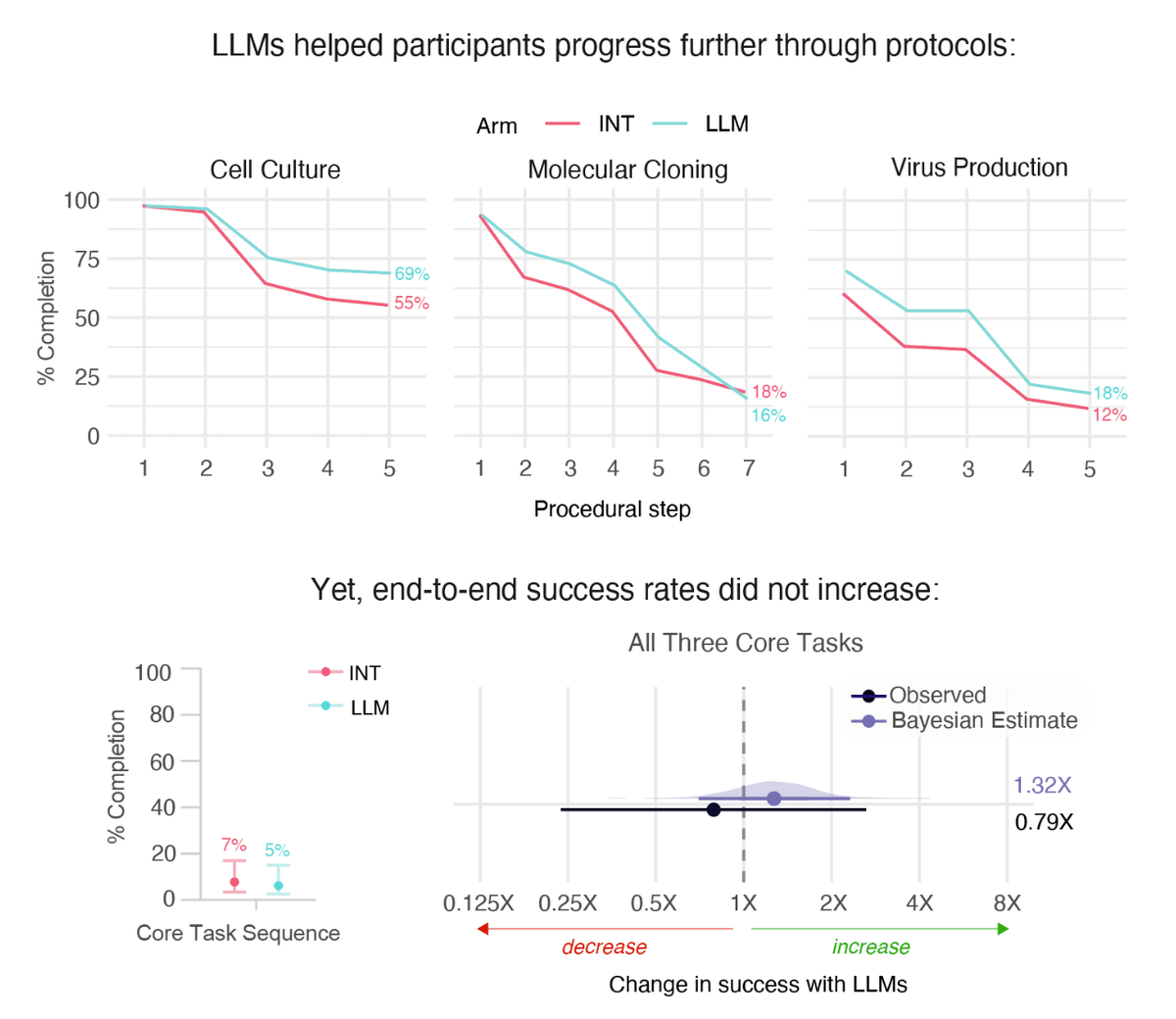

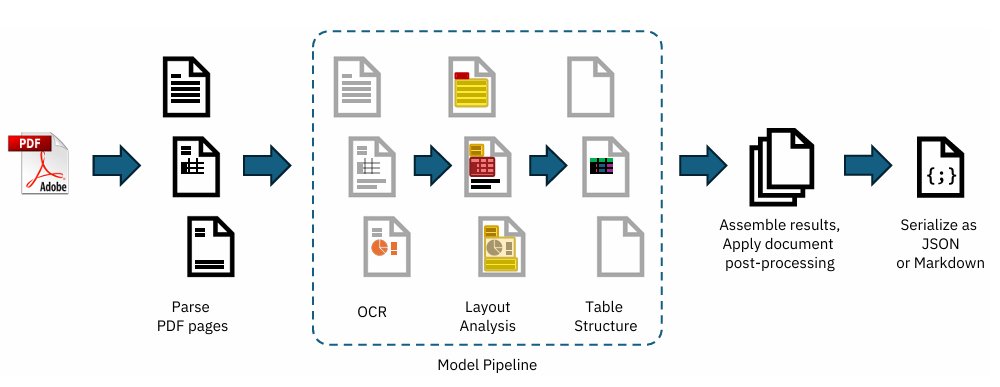

We ran a randomized controlled trial to see if LLMs can help novices perform molecular biology in a wet-lab.

The results: LLMs may help in some aspects, but we found no significant increase at the core tasks end-to-end. That's lower than what experts predicted.

Our findings 🧵

Feb 19

Read 20 tweets

A Thursday of general strike and of the weekend-plans thread for La Plata, because we are not going to let them take away our labor rights or the universal right to leisure.

You know the drill: like and retweet and I'll get started…

FEATURED PLAN: HANDICAP, a food and local culture fair ~ vol. IV, this Saturday, February 21, from 5 p.m. at the Hipódromo de La Plata. More than 25 local food stands, the night of the fairs, a children's area, Sindicato de DJs.

Free admission.

📍44 and 115

If you want more info, I made a reel to help promote my favorite plan of the month, and of every month it is held, because of the space it gives to local projects, with no chains, and the visibility it gives to small projects: instagram.com/reel/DU8jJZaEa…

Feb 19

Read 10 tweets

Philosophical inquiry begins with Heraclitus. He stands at the true beginning of philosophy because he discovered the problem that makes philosophy unavoidable. Heraclitus's major accomplishment is his break with myth through the discovery of logos:

the claim that reality possesses a rational order independent of custom, poetry, or divine narrative, even though most human beings live unaware of it. Active in Ephesus around 500 BCE, Heraclitus brought philosophy into the light.

By insisting that all things are in flux and that becoming, rather than stability, is the fundamental condition of existence, Heraclitus exposed the central crisis of knowledge: if everything changes, on what basis can truth endure?

Feb 19

Read 70 tweets

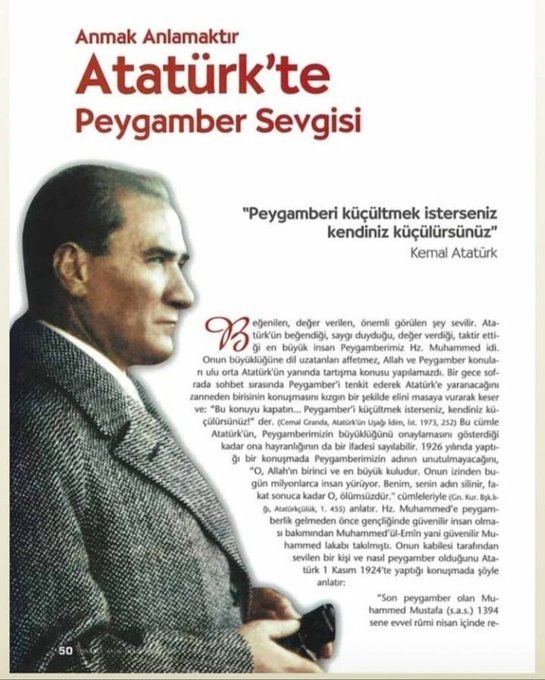

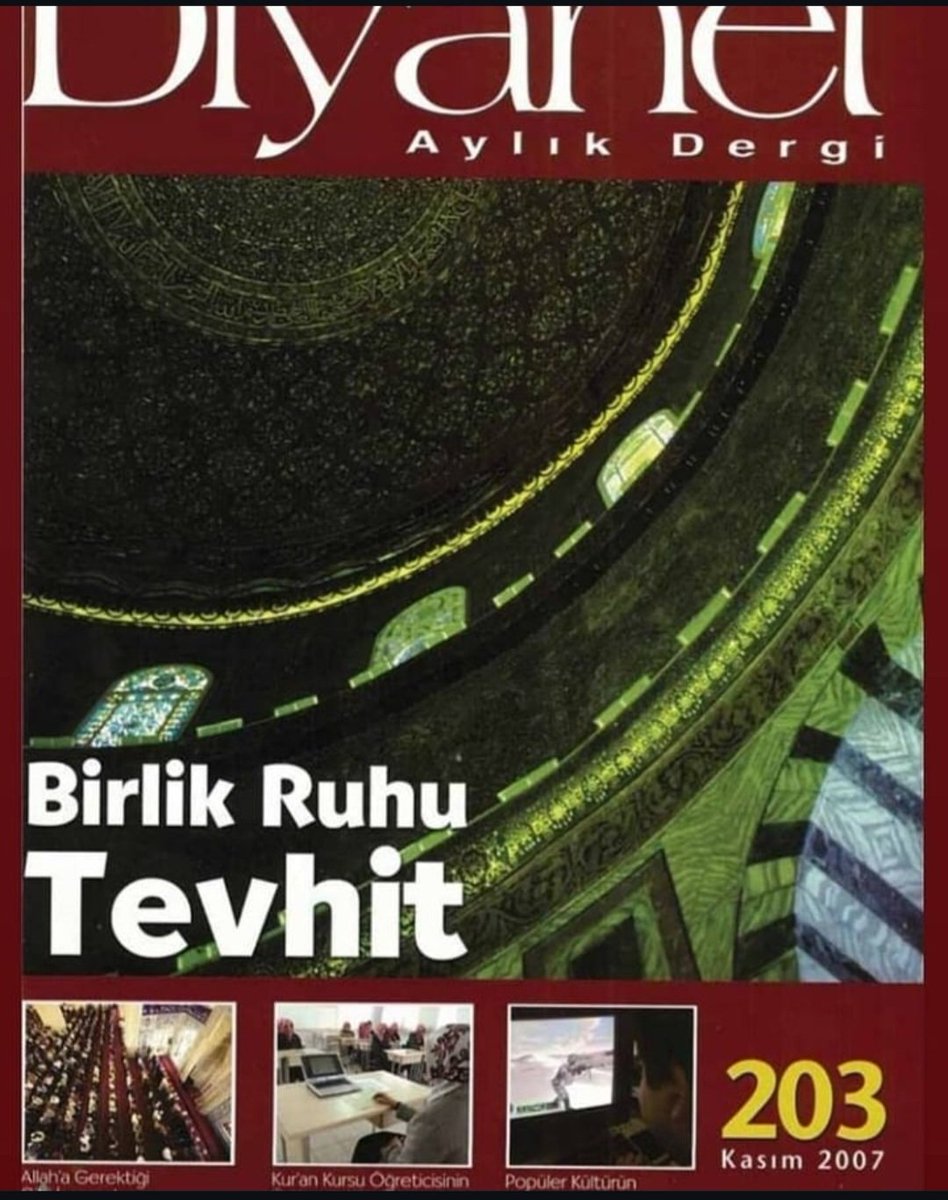

Look at what kind of Presidency of Religious Affairs (Diyanet) we once had.

On the anniversary of Atatürk's death, Diyanet Dergisi published an article titled "ATATÜRK'S LOVE FOR THE PROPHET" in its November 2007 issue...

Go on, call Atatürk irreligious now, you heedless ones

Feb 19

Read 7 tweets

🌙Does the size of the crescent moon prove that it is the second day of Ramadan?🌙

That is absolutely false. Because of ignorant people who speak without knowledge, or because of Twitter news accounts known for being religiously unreliable and

for nationalism, the people of innovation still find grounds for criticism.

The size of the crescent must not be used as evidence to determine the date of the first day.

It is not a valid legal argument.

Moreover, there is also the saying of the Messenger ﷺ concerning the end times:

Feb 19

Read 26 tweets

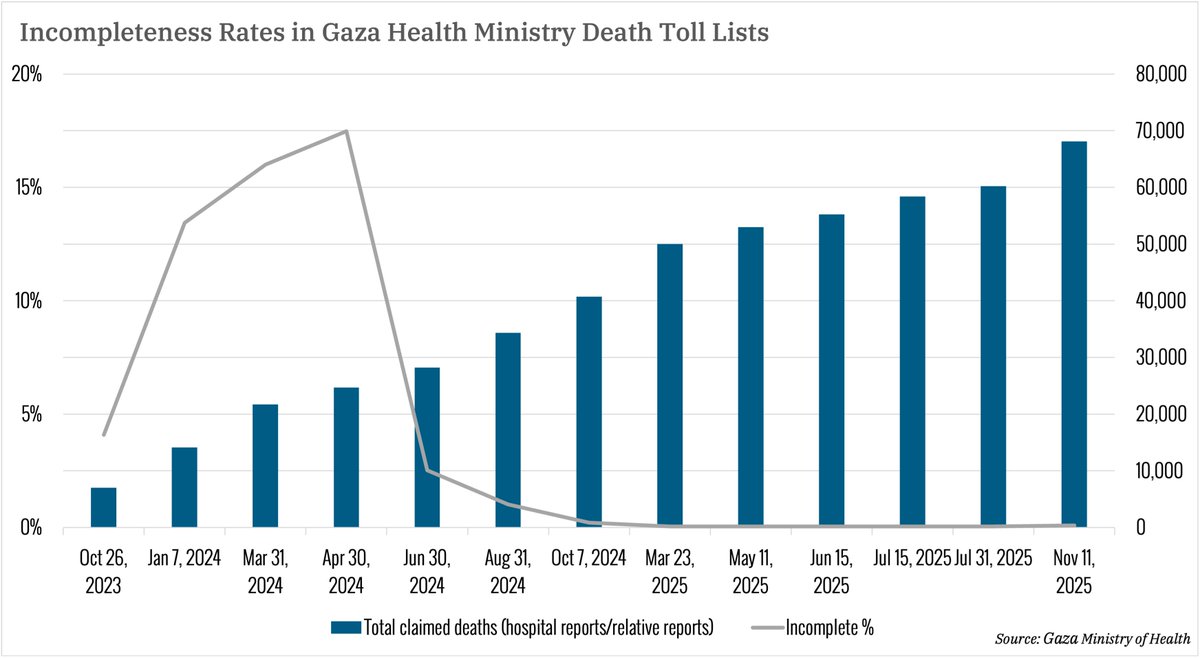

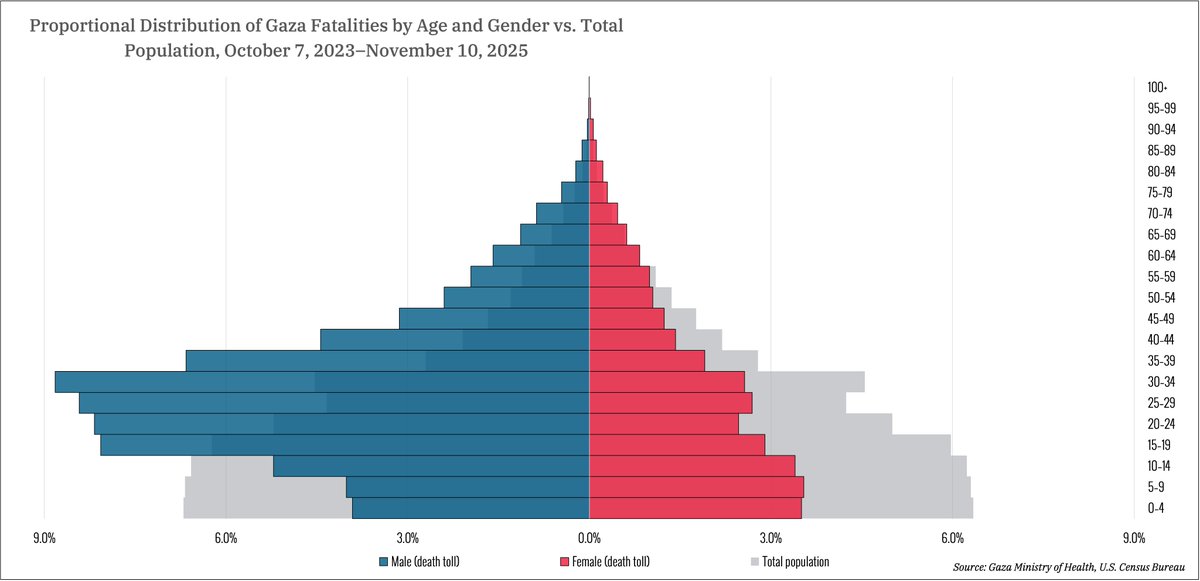

A new Gaza death toll list provided to the Israeli newspaper @haaretz by the Hamas-run Gaza MOH has been released (the 13th iteration), containing 68,820 non-duplicate entries and covering up to November 10, 2025. Very long analysis of data quality, demographics, and unknowns:

Like all published MOH lists, the November list does not distinguish between civilians and combatants and attributes all listed deaths uniformly to Israeli action. Nor does it include dates of death (no list has) or collection methodology (some earlier iterations did).

Feb 19

Read 7 tweets

🚨 HOLE IN THE FGC: Master's R$ 51.8 Billion Bill Has Arrived (and you are the one paying)

The Banco Master scandal had an impact of R$ 51.8 billion on the Fundo Garantidor de Crédito (FGC), the credit guarantee fund.

That is equivalent to almost half of the R$ 107.7 billion net profit recorded by the four big banks (BB, Bradesco, Itaú and Santander) combined in 2025.

The fraud blew up and you, tired of paying taxes, will indirectly finance yet another scandal! 🧶👇🏼

1️⃣ Splitting the Bill

The multibillion hole was divided among the institutions that belong to the group or did until recently:

• Conglomerado Master: shortfall of R$ 40.6 billion.

• Will Bank (digital arm): shortfall of R$ 6.3 billion.

• Banco Pleno (formerly Banco Voiter): shortfall of R$ 4.9 billion.

(Recall that Banco Pleno was placed under intervention just 3 months after Master's liquidation).

2️⃣ The Liquidity Risk (The R$ 125 Bn Factor)

The FGC is a private entity maintained by the banks themselves to protect investors (up to R$ 250 thousand).

The fund has total assets of R$ 160 billion, but only about R$ 125 billion is immediately available.

In other words: a single scandal (Master) drained more than 40% of all the immediate liquidity of the country's protection system.
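The thread's figures can be cross-checked with a few lines of arithmetic. This is only a sketch: the variable names are made up for illustration, and the numbers are taken as quoted in the thread (in billions of reais), not independently verified.

```python
# Figures as quoted in the thread, in billions of reais (R$).
# All names below are illustrative, not from any official source.
shortfalls = {
    "Conglomerado Master": 40.6,
    "Will Bank": 6.3,
    "Banco Pleno": 4.9,
}
big_four_profit_2025 = 107.7      # combined net profit quoted for BB, Bradesco, Itaú, Santander
fgc_immediate_liquidity = 125.0   # portion of the fund quoted as immediately available

# Sum the three shortfalls; round to one decimal to avoid float noise.
total = round(sum(shortfalls.values()), 1)

# Compare the total against the two reference amounts.
share_of_profit = total / big_four_profit_2025
share_of_liquidity = total / fgc_immediate_liquidity

print(f"Total shortfall: R$ {total} bn")
print(f"Share of big-four profit: {share_of_profit:.1%}")
print(f"Share of immediate liquidity: {share_of_liquidity:.1%}")
```

On these inputs the three parts add up to the R$ 51.8 billion headline figure, which is roughly 48% of the quoted profit ("almost half") and roughly 41% of the quoted immediate liquidity ("more than 40%").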

Feb 19

Read 9 tweets

GOODBYE TO SOCIAL MEDIA MANAGERS IN 2026.

I use Claude to design, edit, and schedule 30 days of content in 2 hours.

Here are 7 prompts that can do the same for you:

1. Niche Intelligence and Audience Mapping

Act as a senior social media strategist with over 10 years of experience managing brands across various industries. Analyze the [insert niche] niche and identify the most profitable audience segments, their biggest frustrations, emotional triggers, content consumption habits, and what types of posts motivate them to follow, engage, and buy. Present this information in a clear and actionable profile that allows me to create content around it.

2. Market Positioning and Brand Strategy

Act as a brand positioning expert and help me design a powerful social media identity in the [insert niche] niche. Define what my brand represents, the unique perspective that sets me apart from the competition, my brand voice and tone, visual aesthetic guidelines, and the core message that will deeply resonate with my target audience.
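If you reuse these prompts often, the `[insert niche]` placeholder can be filled in programmatically. A minimal sketch, assuming a hypothetical helper `fill_prompt` and a made-up example niche ("home fitness"); neither comes from the thread, and the template is just the first sentence of the prompt above.

```python
# First sentence of the prompt from the thread; "[insert niche]" is its placeholder.
PROMPT_TEMPLATE = (
    "Act as a brand positioning expert and help me design a powerful "
    "social media identity in the [insert niche] niche."
)


def fill_prompt(template: str, niche: str) -> str:
    """Return the template with every "[insert niche]" placeholder replaced."""
    niche = niche.strip()
    if not niche:
        # An empty niche would produce a meaningless prompt, so fail early.
        raise ValueError("niche must not be empty")
    return template.replace("[insert niche]", niche)


# "home fitness" is a hypothetical niche used only for illustration.
print(fill_prompt(PROMPT_TEMPLATE, "home fitness"))
```

Plain `str.replace` is enough here because the placeholder is a fixed literal; a templating library would only be worth it if the prompts grew more than one kind of placeholder.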

Feb 19

Read 8 tweets

Feb 19

Read 16 tweets

I’ve tested 10+ peptides over 5 years.

So here’s the truth about how they work & the 8 most viral ones people inject without knowing what they do:

1. Peptides are natural.

Most peptides are already working inside your body right now.

Here’s exactly what they do in just 60 seconds:

I hope you watched the video above before we move forward to the list (everything will make a lot more sense).

1. BPC-157

Heals gut lining, tendons, ligaments + brain tissue.

It also reduces inflammation & speeds up recovery by stimulating VEGF (blood vessel growth) & TGF-β (cell growth control) to accelerate tissue regeneration.

P.S. BPC-157 is based on a protective protein your stomach naturally produces to help heal and repair tissue.

Feb 19

Read 7 tweets

AfD Brandenburg -

▶️ The "Identitarian" party

The most important man in the Brandenburg AfD considers his party's incompatibility resolution regarding the "Identitarian Movement" to be "wrong".

So said Hans-Christoph Berndt, chairman of the AfD parliamentary group in the state parliament,

last week to journalists in Potsdam: ▶️ "I dispute that the Identitarian Movement is a movement

that is extremist,

that is violent,

that is repulsive."

In this statement lies the core of both the political

and the state's confrontation with his party.

▶️ For Berndt appears to restrict the concept of extremism to violence.

▶️ Brandenburg's Interior Minister René Wilke (SPD) sees in this the AfD's recurring attempt "to reinterpret the concept of extremism

Feb 19

Read 22 tweets

Final interview.

They ask: “I see you didn't work for 8 months in 2025. What happened?”

Your mind blanks.

You say: “I just needed a break to travel and find myself.”

Interview ends. No offer.

Here’s what they actually want…

The "Broken Ladder" Myth

In 2026, the "linear career path" is officially dead. Recruiters no longer expect a perfect, 40-year unbroken streak of employment. What they actually fear isn't the absence of work; it’s the absence of growth. If you weren't "employed," you better have been "evolving" in some measurable way. Professionals don't just wait for the next job; they prepare for it.

The Psychology of "Intentionality"

The recruiter is asking a deeper question: "Did life happen to you, or did you happen to life?" They want to see that you chose the gap to sharpen your edge, not because you were defeated by your last role. Resilience is the #1 soft skill in the current volatile market. Own the timeline with confidence, and the "gap" disappears in their minds.

Feb 19

Read 12 tweets

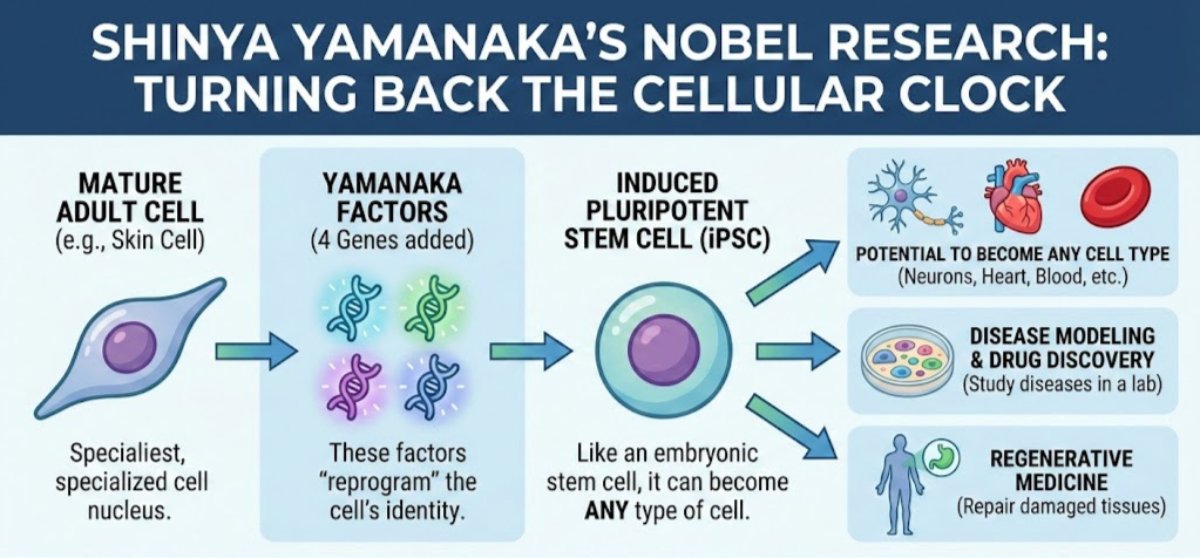

Scientists found a way to use AI to reverse aging.

We can now reprogram cells back to age 20. Heart, brain, and skin cells reset to their prime.

This tech won a Nobel Prize in 2012, but it was too slow to work well. In 2025, they supercharged it with AI.

It's a wild story:

In 2006, Shinya Yamanaka identified 4 proteins that can convert a 70-year-old skin cell into a new stem cell.

He won a Nobel Prize for it.

But the only problem is that these 4 proteins barely work.

Only 0.1% of cells actually reset into new stem cells, and the process takes weeks.

That's how inefficient it is.

One of these four proteins even carries a massive cancer risk.

Feb 19

Read 7 tweets

"She did the movie and it has become the biggest selling documentary in 20 years, can you believe it? The theaters are all packed. Women especially they go back and they see it 2 or 3 times."

For your reading pleasure, here are the top reviews of the documentary "Melania":🧵

The Guardian: "The whole thing was exhaustingly boring and chillingly vain. Melania’s appears an entirely airless existence [...] The two hours of Melania feel like pure, endless hell. They list Melania’s achievements in such laudatory fashion that North Koreans would blush."

The Independent: "To call Melania vapid would do a disservice to the plumes of florid vape smoke that linger around British teenagers. [...] The First Lady is a preening, scowling void of pure nothingness in this ghastly bit of propaganda. Even then [as propaganda], it is bad."

Feb 19

Read 11 tweets

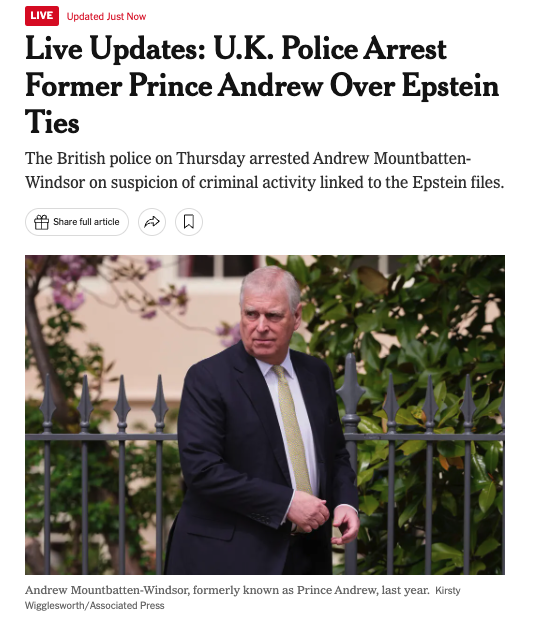

Prince Andrew of England has been arrested over the sexual abuse organized by the Israeli agent Jeffrey Epstein.

But this individual's story goes back a long way.... A very long way.

Hassan II of Morocco already knew.

Short thread

👇👇👇

Once upon a time in Morocco. On August 16, 1972, the plane carrying the tyrant Hassan II was returning from France.

A group of aircraft flown by officers of the Moroccan Air Force, under the command of Commander Amerkane, tried to kill Hassan II.

Second tyrannicide attempt.

And what does the attempted tyrannicide of Hassan II have to do with the degenerate Prince Andrew?

A lot

👇

Feb 19

Read 15 tweets

Everyone wants a 6 pack.

But 95% waste their time doing ineffective bodyweight ab exercises.

Here are 5 exercises that will actually make your abs pop (bookmark this): 🧵

Your core should be trained just like any other muscle.

You should:

- train it 2x per week

- make your sets challenging

- train it with added resistance

- increase that resistance over time (progressive overload)

Apply the above to the 5 exercises below for optimal results:

1. Decline Crunch

Muscles targeted: upper abs, obliques

Focus on tucking your rib cage to your hips by flexing your spine

Keep hip flexor involvement minimal to emphasize upper abs (it’s not a sit up!)

Progress by adding more reps & weight over time.

6-12 reps is solid.

Feb 19

Read 7 tweets

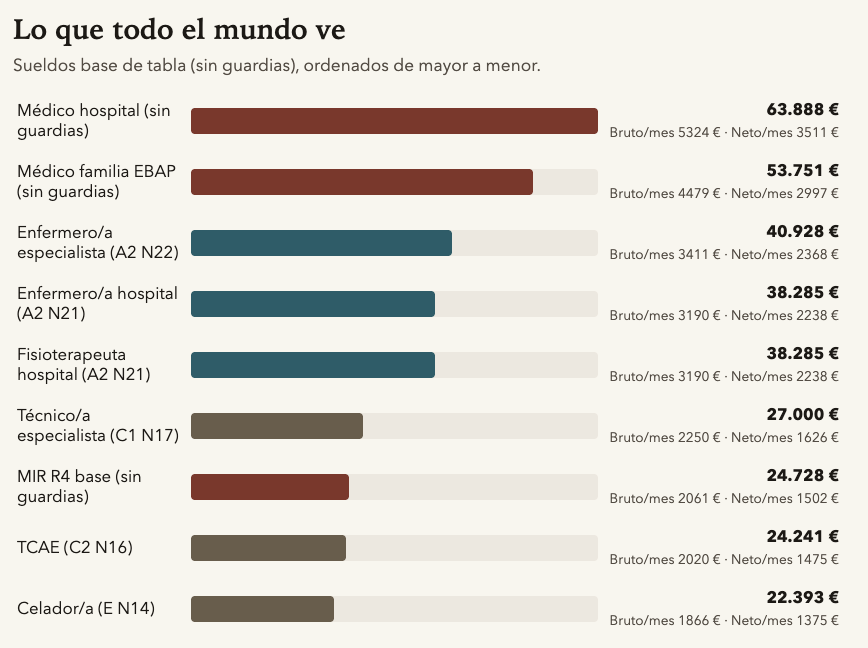

Everyone believes doctors earn fortunes. It is the illusion the state monopoly uses to hide a massive failure of incentives.

I have cross-checked the data. Autopsy of a myth. 👇

The trick is to hide the time. They demand 2,598 h/year from us (4 extra months). What would happen if the WHOLE hospital worked our hours?

I ran the simulation.

The gap collapses.

If everyone were forced to live there, a nurse would earn an extra €1,465 per month.

Your "fat salary" is not wealth.

The system does not pay for high technical value; it pays for excess hours.

Feb 19

Read 11 tweets

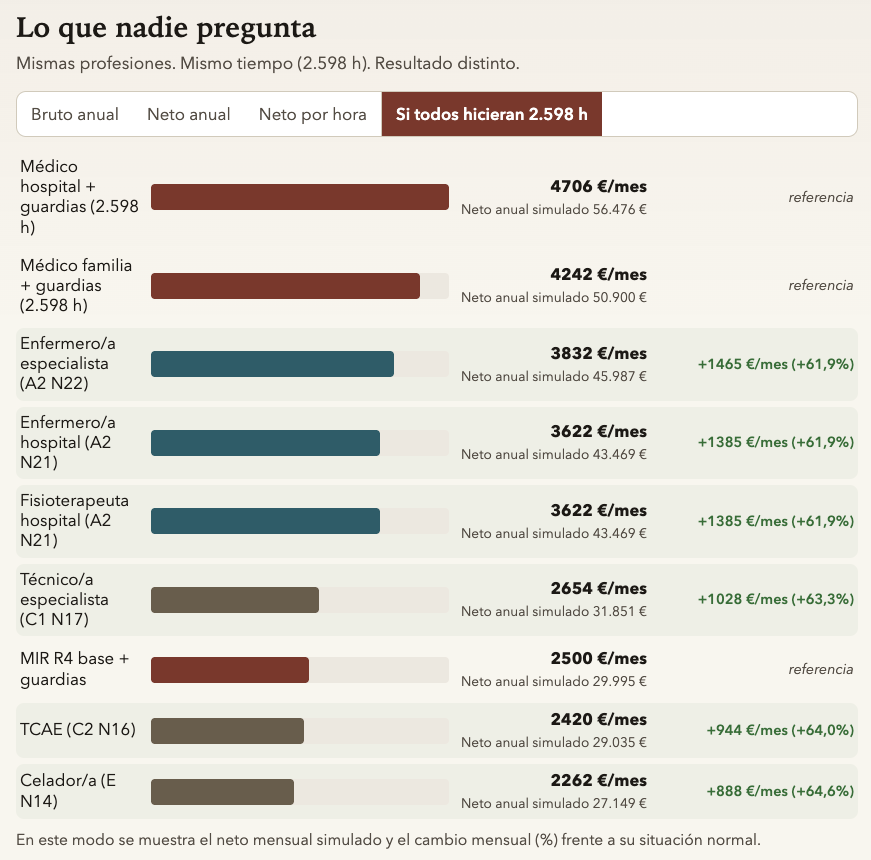

If I lost ALL my local business SEO rankings overnight, here’s EXACTLY how I’d rank my Google Business Profile back to #1:

I know this playbook works because I've already used it.

This HVAC contractor spent $24k on SEO with us.

We added $600k in yearly revenue. 70% more calls. 3 new trucks added.

Here's the exact playbook we used (steal it):

When we took over, their Google Business Profile was embarrassing.

Wrong categories. 3 blurry photos from 2019. Zero posts. No wonder Google wasn't ranking them.

We stopped treating GBP like a checkbox and started treating it like their homepage.

Feb 19

Read 9 tweets

1).

“Serhij K. belongs to the circle of Roman Tscherwinsky, a specialist in covert operations and sabotage who used to work for the Ukrainian domestic intelligence service SBU (@ServiceSsu). He is considered the mastermind of the attacks.

2).

After the Maidan revolution of 2014, the now 51-year-old belonged to an elite unit that the @CIA helped build.

The so-called 5th Directorate of the SBU made a name for itself with spectacular operations against pro-Russian separatists.

3).

Targeted killings were also part of the secret unit's repertoire. When Tscherwinsky moved to the Ukrainian military intelligence service HUR in 2019, the covert work against Moscow continued, often with the help of the USA.

[...]

Feb 19

Read 9 tweets

⚠️ATTENTION⚠️

It is necessary to kill the enemy, but before killing him one must uncover and expose him.

This is how Freemasonry has infiltrated the most important events in the history of Spain. (THREAD) 👇

Feb 19

Read 17 tweets

We are short $STRL, a poster child for the AI bubble. Data center exposure appears exaggerated. Backlog growth is not supported by contract win data. Margins look inflated. The stock is expensive even vs AI darlings like $NVDA. We see 60-80% downside.

Report at snowcapresearch.com

1/ Sterling Infrastructure is not a data center infrastructure company. It owns a collection of regional contractors that specialize in site preparation and excavation services – clearing and grading land before foundations are laid.

2/ In 2022, Sterling rebranded one of its segments as “E-Infrastructure” and began positioning itself to investors as a “picks-and-shovels” play on the AI boom. Since then, its stock has increased nearly twenty-fold, outperforming even marquee AI beneficiaries like $NVDA.

Feb 19

Read 8 tweets

Fifteen-year-old Yana wakes under rubble in Kyiv. A North Korean KN-23 missile hit her home. Inside that missile were Western components, including British-made converters.

Despite sanctions, Russia and others receive components for their weapons — The Telegraph. 1/

On April 24, 2025, 12 civilians were killed in their sleep in Kyiv. Yana’s parents and brother died. Her ribs and leg were shattered.

Zelenskyy said the missile contained 116 Western-made components. Sanctions exist. Yet the parts keep flowing. 2/

From 2022 to 2024, XP Power-labelled shipments worth $2.5M were imported into Russia. Nearly half moved via Hong Kong middlemen.

Dual-use electronics — as useful in a computer as in a ballistic missile. 3/

Feb 19

Read 13 tweets

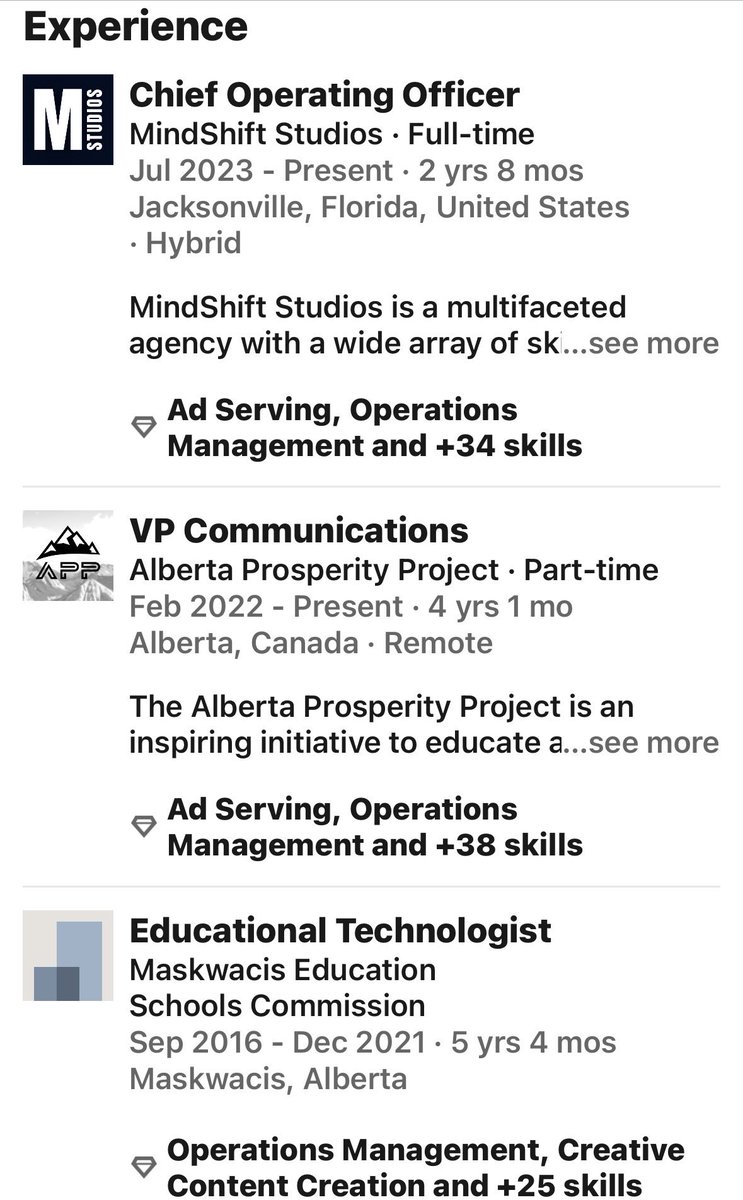

Ever wonder who's running comms/marketing for the separatist Alberta Prosperity Project? LinkedIn reveals the men helping promote separation don't even live in Canada. First, APP's VP of Comms is Walter Harris-DeMelo. He's listed as based in Jacksonville, Florida.

Feb 19

Read 56 tweets

Hey! This is Zara Norman. I am live-reporting this morning's Regional Transportation Authority board meeting for @CHIdocumenters #CHIDocumenters. Follow along.

We start at 9 a.m. This meeting is taking place at 175 W Jackson Blvd. on the 16th floor. You can livestream it at

We’re still waiting to get started. Here’s today’s agenda: rtachicago.org/uploads/files/…

Feb 19

Read 9 tweets

Want to understand how settler terror in the West Bank works?

Here is a passage written by the Italian socialist Gaetano Salvemini on the methods of the fascist squads in Italy in the 1920s. A bit long, but worth reading.

It is a painful and precise analogy to what is happening today: terror and revenge backed by the establishment.

👇👇👇

(Pictured: Salvemini)

1) "It is not easy to determine with certainty how many Fascists there were in Italy at the end of 1920. [...] But one thing is clear: even if we accept the figure of 60,000 as correct, it is a ridiculous force when compared with the 2,150,000 members of the unions affiliated with the Socialist Party and the 1,200,000 members of the unions affiliated with the Popular Party

>>>

2) How could 60,000 men, within a few months, reduce the organizations of 3 million people to a heap of ruins?

The Fascist historians have a simple answer to this question up their sleeve: the Fascists were all heroes, while the Socialists, the Communists and the Populars were all cowards.

>>>

Feb 19

Read 12 tweets

x.com/i/status/16028…

Elon/POTUS is behind the XRP digital asset...

Elon Musk creates X.com >

Elon Musk & Peter Thiel turn X.com into Paypal >

Vance became a protégé of Peter Thiel, a PayPal co-founder who is considered something of a kingmaker in Silicon Valley. When Vance ran for Senate, Thiel fueled his run with a $15 million donation>

PayPal is sold to Ebay >

Elon Musk creates SpaceX >

OpenCoin develops Ripple protocol >

a16z invests in OpenCoin >

OpenCoin becomes Ripple >

Elon Musk & Peter Thiel, a16z invest in Stripe >

CTO of OpenCoin, Jed McCaleb develops Stellar >

Stripe invests in Stellar >

Donald Trump creates Space Force >

Elon Musk forms X holding group >

Elon Musk attempts acquisition of Twitter >

Changes Name to X

President Trump Picks JD Vance as VP

....................................

Connect the dots. . . . . . .

Feb 19

Read 13 tweets

BREAKING: AI can now design like Apple-level creative directors (for free).

Here are 10 Claude Opus 4.6 prompts that build complete design systems, brand guidelines & 47+ marketing assets in 6 hours:

(Designers are already snapping this)

Claude Opus 4.6 just changed the game for designers.

It achieved 65.4% on Terminal-Bench 2.0, meaning it can analyze entire brand portfolios.

I spent 60 hours testing these prompts on real projects.

10 Prompts that actually deliver Apple-level design:

PROMPT 1: The Design System Architect

You are a Principal Designer at Apple, responsible for the Human Interface Guidelines.

Create a comprehensive design system for [BRAND/PRODUCT NAME].

Brand attributes:

- Personality: [MINIMALIST/BOLD/PLAYFUL/PROFESSIONAL/LUXURY]

- Primary emotion: [TRUST/EXCITEMENT/CALM/URGENCY]

- Target audience: [DEMOGRAPHICS]

Deliverables following Apple HIG principles:

1. FOUNDATIONS

• Color system:

- Primary palette (6 colors with hex, RGB, HSL, accessibility ratings)

- Semantic colors (success, warning, error, info)

- Dark mode equivalents with contrast ratios

- Color usage rules (what each color means and when to use it)

• Typography:

- Primary font family with 9 weights (Display, Headline, Title, Body, Callout, Subheadline, Footnote, Caption)

- Type scale with exact sizes, line heights, letter spacing for desktop/tablet/mobile

- Font pairing strategy

- Accessibility: Minimum sizes for legibility

• Layout grid:

- 12-column responsive grid (desktop: 1440px, tablet: 768px, mobile: 375px)

- Gutter and margin specifications

- Breakpoint definitions

- Safe areas for notched devices

• Spacing system:

- 8px base unit scale (4, 8, 12, 16, 24, 32, 48, 64, 96, 128)

- Usage guidelines for each scale step

2. COMPONENTS (Design 30+ components with variants)

• Navigation: Header, Tab bar, Sidebar, Breadcrumbs

• Input: Buttons (6 variants), Text fields, Dropdowns, Toggles, Checkboxes, Radio buttons, Sliders

• Feedback: Alerts, Toasts, Modals, Progress indicators, Skeleton screens

• Data display: Cards, Tables, Lists, Stats, Charts

• Media: Image containers, Video players, Avatars

For each component:

- Anatomy breakdown (parts and their names)

- All states (default, hover, active, disabled, loading, error)

- Usage guidelines (when to use, when NOT to use)

- Accessibility requirements (ARIA labels, keyboard navigation, focus states)

- Code-ready specifications (padding, margins, border-radius, shadows)

3. PATTERNS

• Page templates: Landing page, Dashboard, Settings, Profile, Checkout

• User flows: Onboarding, Authentication, Search, Filtering, Empty states

• Feedback patterns: Success, Error, Loading, Empty

4. TOKENS

• Complete design token JSON structure for developer handoff

5. DOCUMENTATION

• Design principles (3 core principles with examples)

• Do's and Don'ts (10 examples with visual descriptions)

• Implementation guide for developers

Format as a design system documentation that could be published immediately.
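
The token and contrast deliverables in this prompt can be illustrated with a small sketch. It is not output from the prompt or anything the thread's author published: the palette value and the names `contrast_ratio` and `build_tokens` are hypothetical, and the only borrowed inputs are the spacing scale listed above and the standard WCAG 2.x contrast formula.

```python
import json

# Spacing scale copied from the prompt; everything else here is illustrative.
SPACING_SCALE = [4, 8, 12, 16, 24, 32, 48, 64, 96, 128]


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a '#RRGGBB' color."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, from 1.0 (identical colors) up to 21.0 (black on white)."""
    l1, l2 = relative_luminance(foreground), relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def build_tokens(palette: dict, background: str = "#FFFFFF") -> dict:
    """Assemble a hypothetical token structure with a contrast check per color."""
    colors = {}
    for name, value in palette.items():
        ratio = contrast_ratio(value, background)
        colors[name] = {
            "value": value,
            "contrastOnBackground": round(ratio, 2),
            "passesAA": ratio >= 4.5,  # WCAG AA threshold for normal-size text
        }
    spacing = {f"space-{i}": f"{px}px" for i, px in enumerate(SPACING_SCALE)}
    return {"color": colors, "spacing": spacing}


if __name__ == "__main__":
    # "#1A1A1A" is a made-up example color, not a recommendation.
    print(json.dumps(build_tokens({"primary": "#1A1A1A"}), indent=2))
```

A structure like this is one plausible shape for the "design token JSON" the prompt asks for; real token formats vary by toolchain.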

Feb 19

Read 18 tweets

He predicted:

• AI vision breakthrough (1989)

• Neural network comeback (2006)

• Self-supervised learning revolution (2016)

Now Yann LeCun's 5 new predictions just convinced Zuckerberg to redirect Meta's entire $20B AI budget.

Here's what you should know (& how to prepare):

@ylecun is Meta's Chief AI Scientist and Turing Award winner.

For 35 years, he's been right about every major AI breakthrough when everyone else was wrong.

He championed neural networks during the "AI winter."

But his new predictions are his boldest yet...

1. "Nobody in their right mind will use autoregressive LLMs a few years from now."

The technology powering ChatGPT and GPT-4? Dead within years.

The problem isn't fixable with more data or compute. It's architectural.

Here's where it gets interesting...

Feb 19

Read 5 tweets

NEW from me

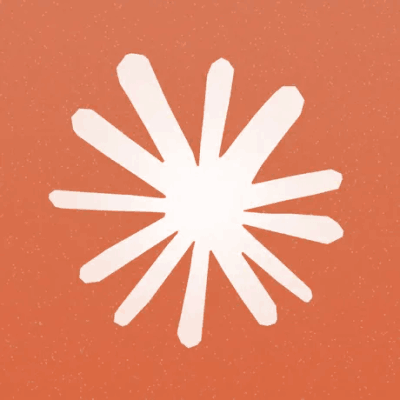

On Oct 9, 2023 — two days after Hamas' Oct 7 massacre in Israel — the Muslim Democratic Club of New York put out a statement blaming Israel for the attack, saying they stood "in solidarity with the besieged Palestinian people"

Today, the founders of this group occupy top posts in City Hall under NYC Mayor Zohran Mamdani

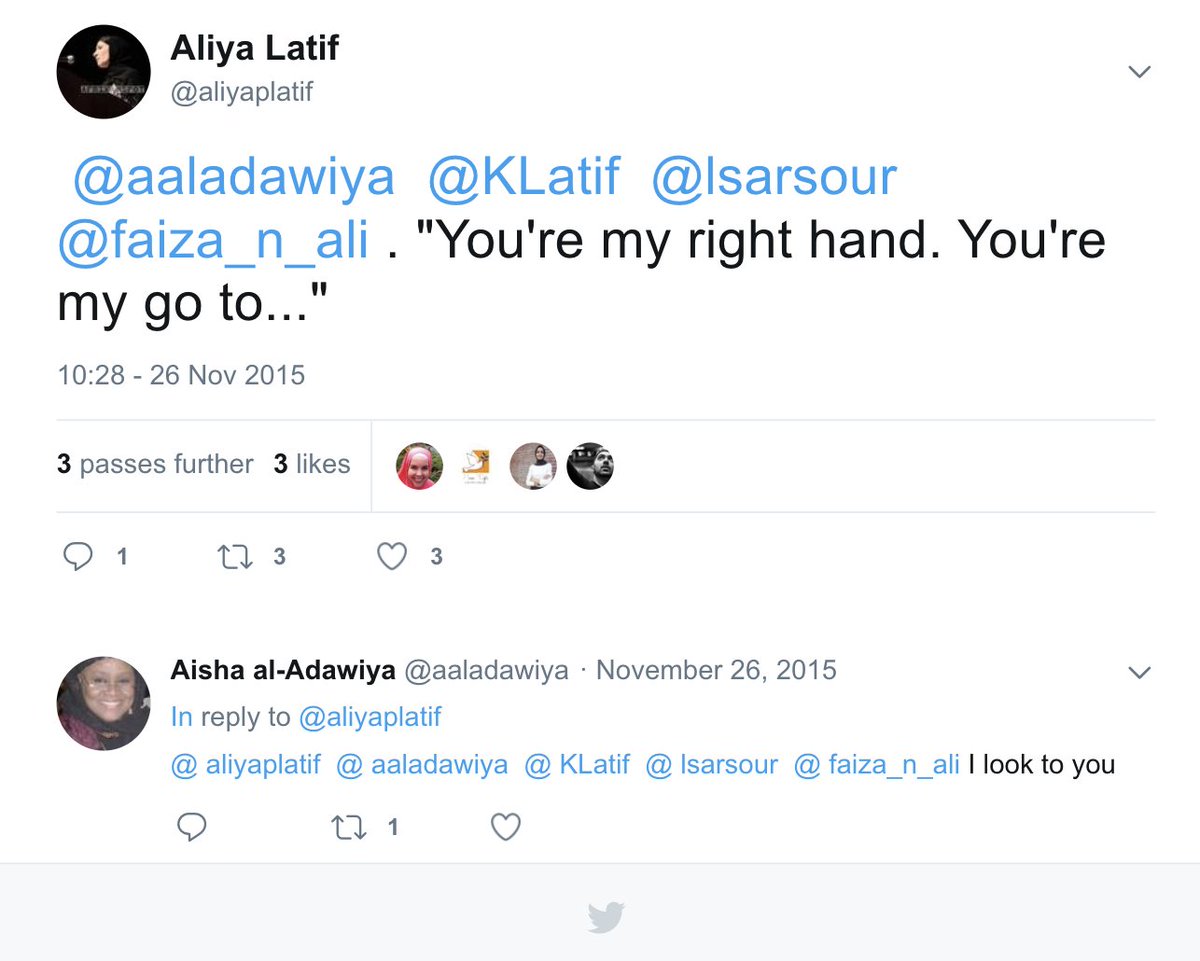

The Muslim Democratic Club of New York was founded by Ali Najmi, Faiza Ali, Aliya Latif and Linda Sarsour

As of today:

Faiza Ali — Commissioner of the Mayor’s Office of Immigrant Affairs

Aliya Latif — Executive Director of the Mayor's Office of Faith-Based Partnerships

Ali Najmi — leading the mayor's Advisory Committee on the Judiciary

Feb 19

Read 6 tweets

Government Spending At All Levels Has Just Gone Nuts: There's No End In Sight

Government of Canada Deficit: Non-Covid Record High

BC Deficit: All Time High

Manitoba Deficit: All Time High

We all know how big the Deficits are in Ontario & Quebec

Why is this happening?

2/

The answer to this question is in 3 parts:

Because they can

Because spending is easy

Because it's politically beneficial

Let's break it down

There's no Balanced Budget requirement for the Feds & Provinces: most US State Constitutions forbid any Budget Deficits

3/

We all know spending is easy & budgets are hard, it takes actual discipline to save money & in Politics spending buys VOTES so why not spend?

A VERY important thing, huge deficits almost never defeat governments & even higher taxes can be survived if government is careful

4/

Feb 19

Read 17 tweets

1/ Yoon got life imprisonment - not the death penalty - for insurrection.

But the left is furious.

South Korea doesn't execute people anymore, so why the anger when a life sentence amounts to the same result?

> It's rooted in hard experience with how Korean elites escape accountability.

2/ Statements are currently coming out from all corners, including the ruling Democratic party, politicians, NGOs, labour unions, even Korean human rights groups, all expressing shock and disappointment at the result.

Feb 19

Read 14 tweets

The most dangerous place for fat to build up isn’t your belly.

It’s your arteries.

Here are 4 foods that can help keep them clean and protect your heart: 🧵

Plaque in arteries isn’t just cholesterol.

It’s a mix of:

• Calcium

• Protein

• Cholesterol

Inside plaque, researchers often find biofilms: colonies of harmful microbes protected by calcium.

So why does plaque form?

Plaque forms where arteries are damaged or inflamed.

This damage usually comes from:

• Sugar and refined carbs

• Seed oils (omega-6)

• Alcohol and junk food

• Diabetes and insulin resistance

That’s what allows calcium and LDL to harden inside arteries.

Feb 19

Read 25 tweets

AMMA THO CHILIPI SARASALU FT. KAJAL AGGARWAL ❤️🔥

Part -10

RECAP

Amma :kanna good news ra

Ani parigetthukuntu vachi nannu hug cheskundi

Me :emaindi Amma?

Amma :nenu pregnant ra

Me :(shock lo) what???

Amma :nenu pregnant ra

Me :wowww ani amma ni etthukoni happy ga feel ayya

Ala kasepu iddaram happy ayyaka

Me :ee time lo na valla pregnancy ante parledha amma?

Amma :entra Ala antav naku kavalane kadha neetho padukoni kadupu techukundi

Me :naku antha kothaga undhi amma

Amma :alavatu aypoddhi le kanna

Me :ee night neetho malli manam first time ela chesamo Ala cheyali ala dengali ani undhi amma naku

Amma :reyyy noo delivery ayye daka avi kudaravu

Me :entamma ala antav pregnancy vachina kuda konni rojulu s*x cheyochu ga

Amma :kani naku cheyalani Ledhu ra

Me :Sare le ani dull ga cheppa

Amma :badha padaku kanna 9mnths ey kada twaraga ne aypoddhi le

Me :(amma pakkane sofa lo kurchoni amma sallani nokkuthu) Sare le kani sex vaddhu annav kada

Amma :Haa

Me :ayithe 20yrs back clg lo evo memories annav kada daani gurinchi cheppu amma

Amma :ammo Ippudu adhi Enduku kanna time vachinappudu chepta le

Me :kudaradhu either aa matter cheppali ledha natho dengichukovali

Amma :abbba chepte vinavu kada Sare chepta Vinu

Me :(amma chetini na modda meeda vesi pattinchi massage cheyisthu) Haa cheppu cheppu

FLASHBACK

20 YEARS EARLIER

Adhi cbit college, kajal BTech 2nd yr chaduvtundi

Aa roju freshers day seniors andaru freshers ni ragging cheyadam lo munigipoyaru

Kajal kuda valla frnds tho kurchoni ragging cheddam ani freshers kosam choosthundi inthalo mugguru ammai lu vallaki kanipincharu

Vallani pilicharu

Frnd 1 :mee perlu ente?

Girl 1 :Shriya madam

Girl 2 :Charmi madam

Third ammai name cheppakunda silent ga undi

Frnd 1 :ente neeku matalu raava name ento cheppu anagane thala etthi kajal vaipu choosindi adigindi vere ammai ayina kuda thanu kajal ni choosthu

Girl 3 : Samantha madam ani cheppindi

Kajal :dhinni naku vadileyande ani Sam no teeskoni pakkaki vellindi

Kaj :peru emo annav?

Feb 19

Read 5 tweets

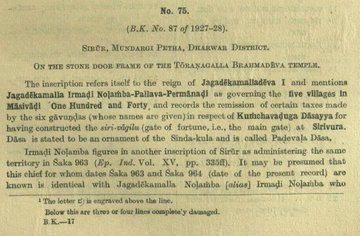

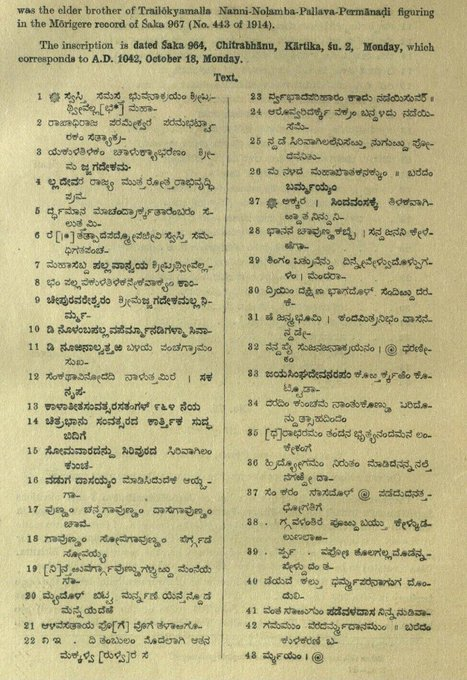

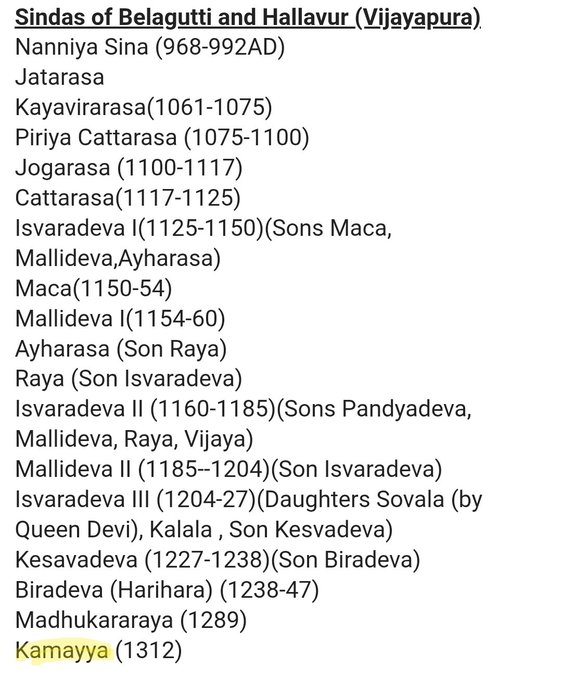

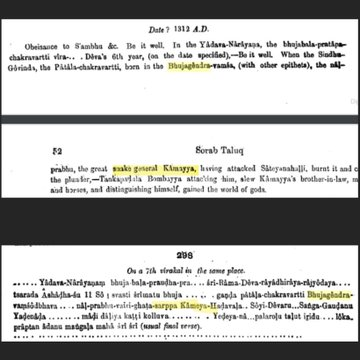

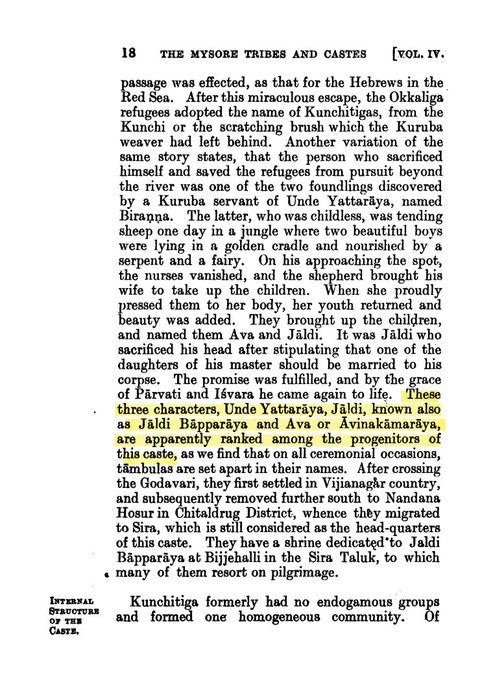

In the quoted thread, inscriptions of the Sindas claiming Okkala origin were given.

One of the progenitors of Kunchitiga is called "Havina Kama raya", his descendants are called "Havinavaru" (Snake clan).

The last Sinda in Karnataka was called Sarpa Kamayya (Snake Kamayya)

(1/n)

Feb 19

Read 6 tweets

Imagine someone steals a house. Then they invite children inside and say: “Look how tidy it is. Clean. There's a sofa, the TV works, the children play happily.”

They don't talk about who stole it, they don't talk about the violence of the theft, and they make you think it's normal.

TECHNIQUE 1: NORMALIZATION

The first technique is called normalization. It serves to make something seem normal when it is not. If an occupied city looks calm, the brain thinks: “If it's normal, then it's fine as it is.”

TECHNIQUE 2: WHITEWASH (CLEANING UP)

Only the nice parts are shown: full squares, people laughing, open shops, reconstruction and beaches.

The rest is hidden: soldiers, fear, arrests, censorship, mass graves, thousands of people left homeless.

It's like cleaning a stain and saying: “See? It's not there.”

Feb 19

Read 8 tweets

Zelenskyy: I don’t need Putin’s historical bullshit. I know Russia better than Putin knows Ukraine.

He is doing his theories to postpone talks.

To end this war, we don’t need this historical crap.

1/

Zelenskyy: Killing Putin won’t help.

It’s not about these things. If Putin dies, there is no evidence that the next person will be any better.

2/

Zelenskyy: I can’t support the idea of just giving our territory to Russia.

I’m not sure our people would ever accept it — tens of thousands have died defending it.

Donbas is not just land. It’s our independence, our values, our people.

3/

Feb 19

Read 14 tweets

In 2013, Nassim Taleb gave a 1-hour masterclass on Antifragile and how the world really works.

He broke down how:

• Small failures save systems

• Big success hides fragility

• Forecasting is dangerous

• Time exposes lies

12 lessons from Taleb on surviving uncertainty:

1. Fragile is not the opposite of strong

Taleb starts with a linguistic trap

People assume “robust” is the opposite of fragile

It isn’t

Fragile things are harmed by volatility

Robust things resist it

But antifragile things improve because of it

That distinction changes everything

2. Fragility is mathematical, not philosophical

Fragility isn’t an opinion

It’s measurable

If harm accelerates as stress increases, the system is fragile

If benefits accelerate with stress, it’s antifragile

Taleb reduces uncertainty to second-order effects, not forecasts
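
One way to read "second-order effects" is as a convexity check on how a system's payoff responds to stress. The sketch below is an interpretation, not something taken from the talk: the function names, the step size `delta`, and the choice to label a roughly linear response "robust" are all assumptions made for illustration.

```python
from typing import Callable


def second_order_effect(payoff: Callable[[float], float], stress: float, delta: float) -> float:
    """Central second difference of the payoff: f(x + d) + f(x - d) - 2 * f(x).

    Negative means losses accelerate as stress grows (concave payoff);
    positive means gains accelerate (convex payoff).
    """
    return payoff(stress + delta) + payoff(stress - delta) - 2 * payoff(stress)


def classify(payoff: Callable[[float], float], stress: float,
             delta: float = 1.0, tolerance: float = 1e-9) -> str:
    """Label a payoff function at one stress level using the sign of the second difference."""
    effect = second_order_effect(payoff, stress, delta)
    if effect < -tolerance:
        return "fragile"
    if effect > tolerance:
        return "antifragile"
    return "robust"  # assumption: a (near-)linear response is treated as robust


if __name__ == "__main__":
    # Hypothetical payoff curves, chosen only to show the three cases.
    print(classify(lambda x: -x ** 2, 5.0))   # harm accelerates with stress
    print(classify(lambda x: x ** 2, 5.0))    # benefit accelerates with stress
    print(classify(lambda x: -3 * x, 5.0))    # harm grows at a constant rate
```

The point of the check is that it needs no forecast of how much stress will arrive, only the shape of the response around the current level.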

Feb 19

Read 7 tweets

@MalufNicolau I'll cut you some slack for being a psychoanalyst, a pseudoscience with no real basis or validation, and try to explain your mistake. Science is a way of producing reliable knowledge. Observation helps and was used for thousands of years, but it is not enough to understand the Universe.

...

@MalufNicolau ...

For thousands of years it worked more or less well, but for thousands of years we "knew" that the Sun revolves around the Earth and that heavier objects fall faster. Galileo created the first validation test for the method.

...

@MalufNicolau ...

Without it we can say, claim, or invent anything without having to demonstrate it beyond doubt, for example, creating "theories of the mind" pulled out of thin air and claiming they "are real". Giving courses, treating people for things they do not actually have, Id, Ego etc. Science needs..

Feb 19

Read 44 tweets

The 10 Most Important Lessons 20 Years of Mathematics Taught Me

1. Breaking the rules is often the best course of action.

I can’t even count the number of math-breaking ideas that propelled science forward by light years.

We have set theory because Bertrand Russell broke the notion that “sets are just collections of things.”

We have complex numbers because Gerolamo Cardano kept the computations going when encountering √−1, refusing to acknowledge that it doesn’t exist.

Feb 19

Read 17 tweets

🚨 BREAKING: AI can now build trading algorithms like Goldman Sachs' algorithmic trading desk (for free).

Here are 15 insane Claude prompts that replace $500K/year quant strats (Save for later)

1. The Goldman Sachs Quant Strategy Architect

"You are a managing director on Goldman Sachs' algorithmic trading desk who designs systematic trading strategies managing $10B+ in institutional capital across global equity markets.

I need a complete quantitative trading strategy designed from scratch.

Architect:

- Strategy thesis: the specific market inefficiency or pattern this strategy exploits

- Universe selection: which instruments to trade and why (stocks, ETFs, futures, options)

- Signal generation logic: the exact mathematical rules that produce buy and sell signals

- Entry rules: precise conditions that must all be true before opening a position

- Exit rules: profit targets, stop losses, time-based exits, and signal reversal exits

- Position sizing model: how much capital to allocate per trade based on conviction and risk

- Risk parameters: maximum drawdown, position limits, sector exposure caps, and correlation limits

- Backtesting framework: how to properly test this strategy against historical data

- Benchmark selection: what to measure performance against and why

- Edge decay monitoring: how to detect when the strategy stops working

Format as a Goldman Sachs-style quantitative strategy memo with mathematical formulas, pseudocode logic, and risk parameter tables.

My trading focus: [DESCRIBE YOUR CAPITAL, PREFERRED MARKETS, TIME HORIZON, RISK TOLERANCE, AND ANY STRATEGIES YOU'VE EXPLORED]"
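
Two of the items this prompt asks for, the position sizing model and the maximum-drawdown risk parameter, have simple textbook forms. The sketch below shows fixed-fractional sizing and a drawdown calculation as one possible reading; it is not the thread author's method or any firm's, and the function names and sample numbers are hypothetical.

```python
from typing import Sequence


def max_drawdown(equity_curve: Sequence[float]) -> float:
    """Largest peak-to-trough decline, as a fraction of the running peak."""
    if not equity_curve:
        raise ValueError("equity_curve must not be empty")
    peak = equity_curve[0]
    worst = 0.0
    for value in equity_curve:
        peak = max(peak, value)                     # highest equity seen so far
        worst = max(worst, (peak - value) / peak)   # decline from that peak
    return worst


def position_size(capital: float, risk_fraction: float,
                  entry_price: float, stop_price: float) -> int:
    """Fixed-fractional sizing: whole units such that hitting the stop
    loses at most `risk_fraction` of capital (ignores slippage and fees)."""
    per_unit_risk = abs(entry_price - stop_price)
    if per_unit_risk == 0:
        raise ValueError("entry and stop prices must differ")
    risk_budget = capital * risk_fraction
    return int(risk_budget // per_unit_risk)


if __name__ == "__main__":
    # Made-up numbers purely for illustration.
    print(max_drawdown([100, 120, 90, 110, 80]))
    print(position_size(capital=10_000, risk_fraction=0.01,
                        entry_price=50.0, stop_price=48.0))
```

Both functions deliberately leave out transaction costs and gaps through the stop, which a real risk model would need to handle.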

2. The Renaissance Technologies Backtesting Engine

"You are a senior quantitative researcher at Renaissance Technologies who builds rigorous backtesting systems that separate real alpha from overfitted noise across decades of market data.

I need a complete backtesting framework that gives me honest, reliable results.

Build:

- Data requirements: which historical data feeds I need, minimum time periods, and data quality checks

- Backtesting engine architecture: event-driven or vectorized with pros and cons for my strategy type

- Transaction cost modeling: commissions, slippage, bid-ask spread, and market impact estimates

- Lookahead bias prevention: safeguards that ensure no future data leaks into past decisions

- Survivorship bias handling: accounting for delisted stocks and failed companies in historical data

- Walk-forward optimization: train on past data, test on unseen data in rolling windows

- Out-of-sample testing protocol: how to split data so results aren't just curve-fitting

- Monte Carlo simulation: randomize trade sequences to understand the range of possible outcomes

- Statistical significance tests: is the backtest return real or could it happen by random chance

- Complete Python backtesting code ready to run with sample data and visualization

Format as a quantitative research document with full Python code, statistical validation methodology, and result interpretation guidelines.

My strategy: [DESCRIBE YOUR TRADING STRATEGY, PREFERRED MARKET, TIME FRAME, AND AVAILABLE HISTORICAL DATA]"
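
The walk-forward item in this prompt (train on past data, test on unseen data in rolling windows) can be sketched as an index generator. This is a minimal illustration under assumed window sizes, not code from the thread or from any named firm; `walk_forward_windows` is a hypothetical helper.

```python
from typing import Iterator, Tuple


def walk_forward_windows(n_obs: int, train_size: int,
                         test_size: int) -> Iterator[Tuple[range, range]]:
    """Yield (train, test) index ranges that roll forward through the data.

    Each test window starts right after its training window, so no test
    observation is ever earlier than the data used to fit on it.
    """
    if train_size <= 0 or test_size <= 0:
        raise ValueError("window sizes must be positive")
    start = 0
    while start + train_size + test_size <= n_obs:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size  # advance by one test window


if __name__ == "__main__":
    # Ten observations, four to train, two to test: an arbitrary example.
    for train, test in walk_forward_windows(10, 4, 2):
        print(list(train), "->", list(test))
```

Advancing by `test_size` keeps the test windows non-overlapping; an expanding-window variant would instead keep the training start fixed at zero.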

Feb 19

Read 12 tweets

Feb 19

Read 13 tweets

🗣️ "Everyone has left": the vanished workers' tower blocks of the Haut-Jura 🏔️

streetpress.com/sujet/17713398…

It is 11 a.m. Enes, 56, chats with his neighbors in a mix of Turkish and French.

"I would rather be working," says the man, who has strung together odd jobs across the department (construction, sawmill, factory…) since arriving in 2004.

But today, "there's no more work," says the Turkish man, who lives in Les Avignonnets, one of the two priority neighborhoods of Saint-Claude (39).

Enes now does "only a bit of temp work from time to time," enough to fill the fridge and pay his rent in a neighborhood where the poverty rate exceeds 40%.

Feb 19

Read 15 tweets

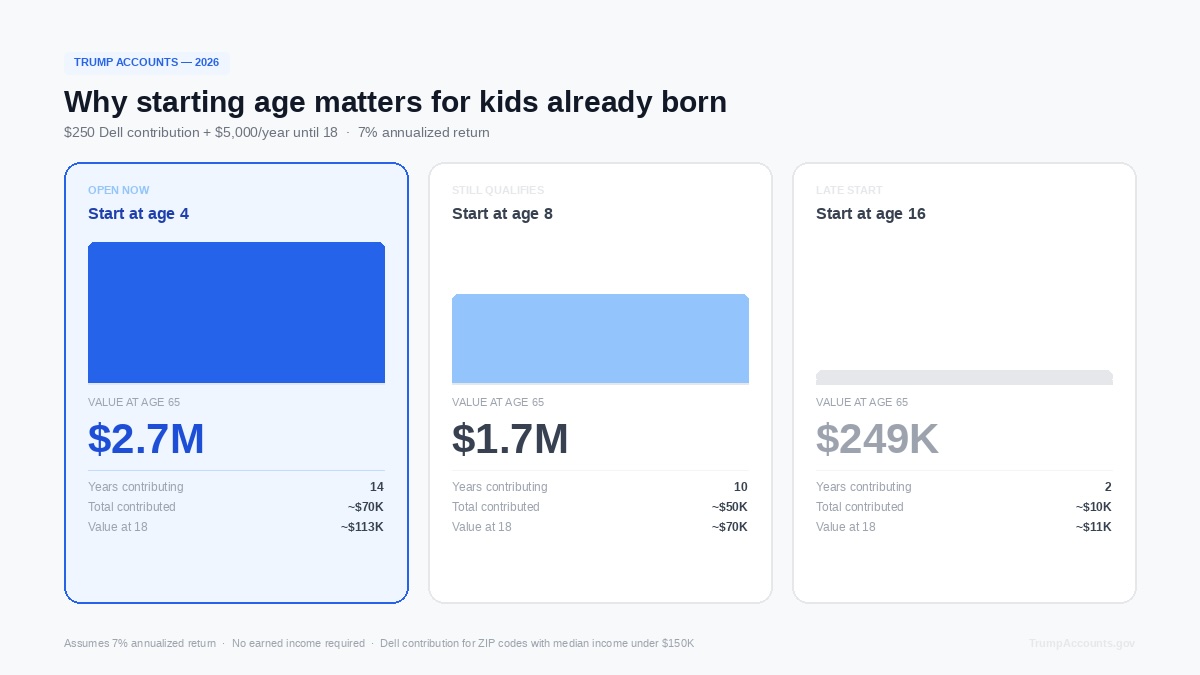

Yesterday a client asked about Trump Accounts.

His son is 4. Doesn't qualify for the $1,000 government seed. He figured they'd missed everything.

Most parents with kids 2-10 think the same right now.

He was right about the seed money. But wrong about the rest:

↓

The $1K seed is one piece. Kids born before 2025 still have access to what comes after it.

1. What his kid actually qualifies for

2. Who can fund it and how much

3. How to open one

4. What the math looks like starting at 4

1. What your kid actually qualifies for

Michael and Susan Dell are contributing $250 to kids age 10 and under, born before 2025, if the ZIP code median income is under $150,000.

Not government money. Doesn't count toward contribution limits.

Feb 19

Read 6 tweets

NEW: Kathy Ruemmler shared nonpublic details with Jeffrey Epstein about the White House's probe into a 2012 prostitution scandal that engulfed the Secret Service during her tenure as White House counsel, emails show

bloomberg.com/news/articles/…

In a dozen or so exchanges sent months after Ruemmler left her White House position in 2014, she complained to Epstein about “this secret service crap” and forwarded him a draft email containing detailed, nonpublic information about the behind-the-scenes role the White House Counsel’s office played in investigating the 2012 prostitution scandal.

Epstein offered advice, as well as what he described as “edits” to the email draft, which Ruemmler indicated she was planning to send to a journalist. “Breathe, smile. You’re free,” he wrote in one message.

Feb 19

Read 13 tweets

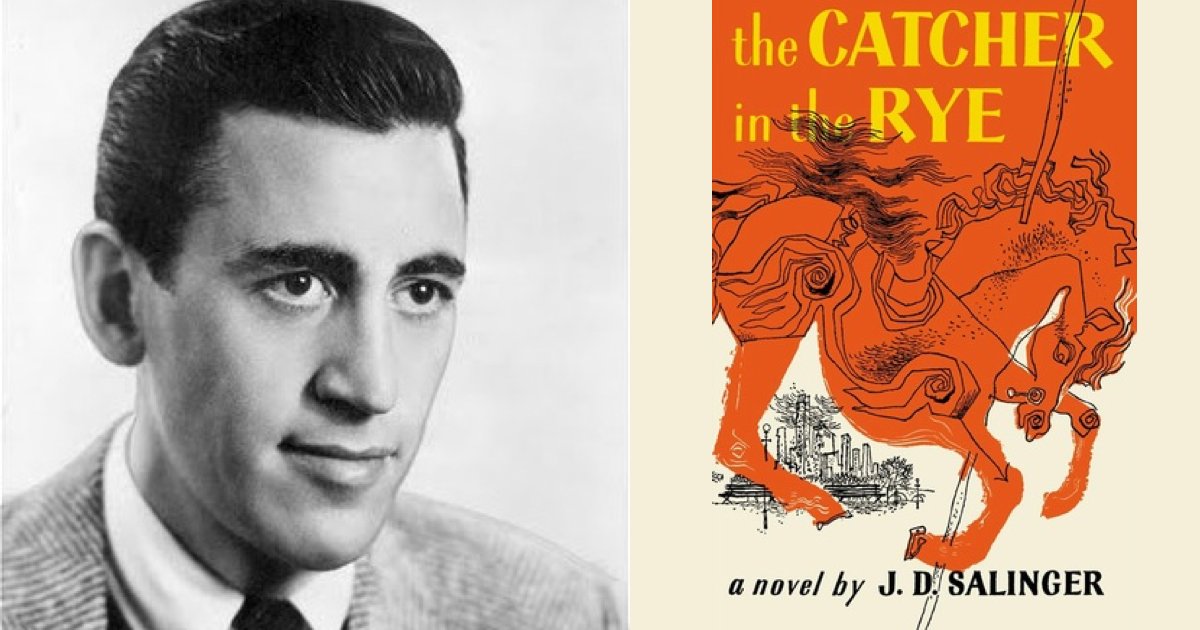

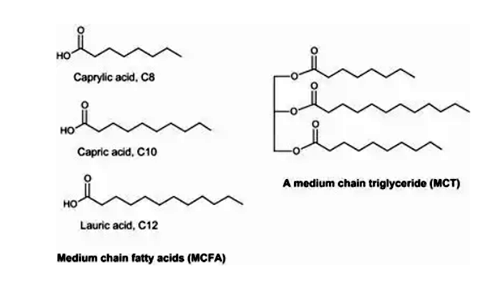

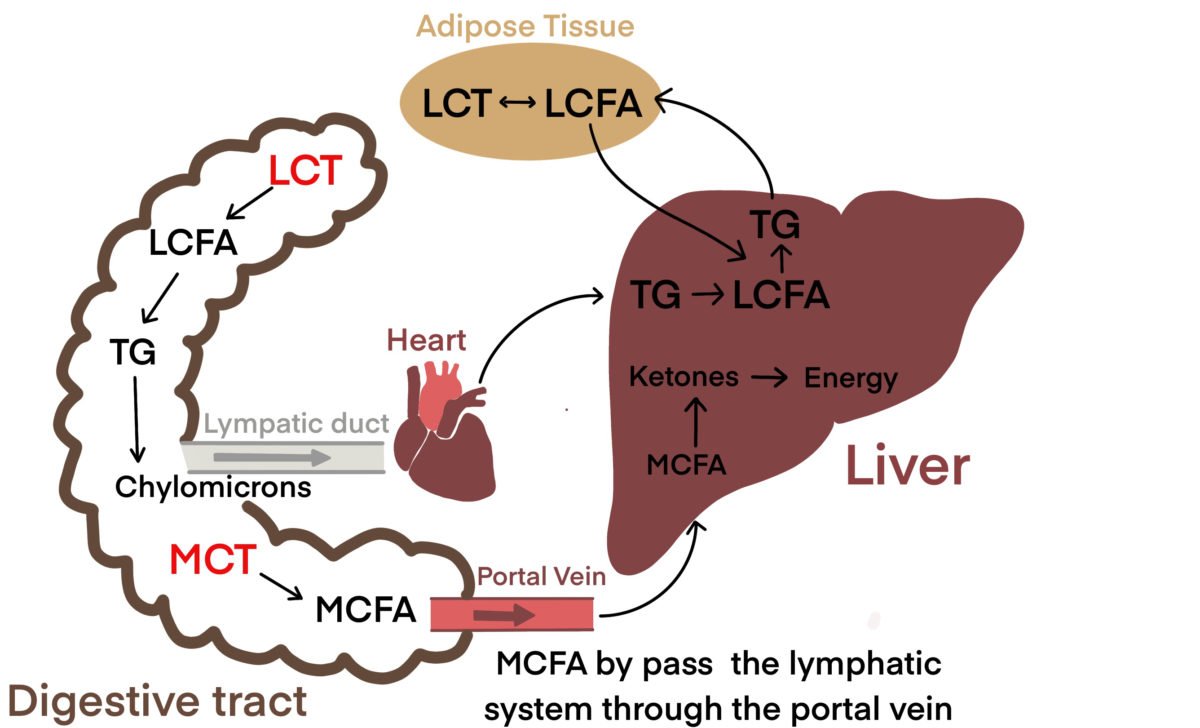

MCT oil might be the most underrated food for brain health.

In a 2024 peer-reviewed paper, it reversed severe Alzheimer's symptoms in patients within hours. Yet doctors will never recommend it to you.

In this thread, I’ll explain why it’s so powerful at healing the brain:

Feb 19

Read 6 tweets

We don't realize what the Sánchez government has meant for Spain.

Here is a summary list of what has happened over the last few years and the situation it has left us in.

2018

Resignation of Máxim Huerta (Minister of Culture) over prior tax fraud.

Resignation of Carmen Montón (Minister of Health) over plagiarism in her master's degree.

Controversy over Pedro Sánchez's doctoral thesis (plagiarism accusations).

First excesses with the Falcon (family trips and short hops from June onward).

First vacations in state palaces (La Mareta and others, with criticism over opaque spending).

Start of a record number of discretionary-appointment advisers at Moncloa.

Controversy over a "relator" (rapporteur) in negotiations with pro-independence parties.

Exhumation of Franco from the Valle de los Caídos (accusations of political instrumentalization).

Debate over use of the Falcon for party events.

2019

"Relator" (rapporteur) controversy with the Generalitat.

Accusations of pacts with pro-independence parties during the election campaign.

Political deadlock and repeat elections (April-November).

PSOE–Unidas Podemos pact (first coalition government since the Transition).

Negotiation with ERC for the investiture (dialogue table).

Budgetary and fiscal concessions tied to parliamentary agreements.

2020

Handling of 8-M (March 8 demonstrations) and the initial spread of COVID.

Purchase of defective tests (Bioeasy).

Delcygate (Delcy Rodríguez's visit at Barajas).

Overpriced COVID contracts (origin of the Koldo case).

Management Solutions contracts (€40.5 M in masks never delivered).

Bailout of Air Europa/Globalia (€1,100 M).

Bailout of Plus Ultra (€53 M) via SEPI.

Dismissal of Colonel Pérez de los Cobos.

Departure of Juan Carlos I to Abu Dhabi (institutional impact).

2021

Irregular vaccination of public officials (several autonomous communities).

Celaá Law / LOMLOE (conflict over state-subsidized private schools and language).

Crisis with Morocco over the entry of Brahim Ghali.

Labor reform agreed with ERC and Bildu.

Historic rise in wholesale electricity prices.

2022

Policy shift on Western Sahara (letter to Mohammed VI).

Diplomatic crisis with Algeria.

Pegasus scandal (spying on pro-independence figures and on Sánchez).

Dismissal of CNI director Paz Esteban.

"Only yes means yes" law (mass sentence reductions).

Penal reform: abolition of sedition and amendment of embezzlement.

Start of the Mediador case (Tito Berni).

2023

Tito Berni / Mediador case (bribes).

Controversy over Bildu candidate lists including people convicted of terrorism.

Intensive use of decree-laws in the pre-election period.

European Public Prosecutor's Office investigations into EU funds in the Canary Islands.

Agreements with Junts after the investiture.

2024

Koldo case erupts (mask commissions ~€54 M).

Expulsion of José Luis Ábalos from the PSOE.

Judicial investigation of Begoña Gómez.

Case of Sánchez's brother, David Sánchez (Badajoz post).

Hydrocarbons scheme (VAT fraud ~€180 M).

Irregular ADIF contracts (~€48.4 M).

Amnesty Law for the procés (appeal before the Constitutional Court).

Institutional tension with judges over "lawfare" accusations.

Controversies at RTVE during the DANA crisis.

Questioned appointments in the Police under Fernando Grande-Marlaska.

Appointment of Carmen Calvo to the Council of State.

2025

Judicial developments in the David Sánchez case.

Investigation of Attorney General Álvaro García Ortiz.

Pretrial detention of Santos Cerdán (public works case).

Cash payments to the PSOE (separate strand of the Koldo case).

Leire Díez case (contracts linked to SEPI).

Investigations at SEPI over contract awards exceeding €600 M.

Record number of advisers and political appointees.

Nationwide blackout on April 28 (debate over the energy model).

Recurrent use of the Falcon and Superpuma.

Vacations at La Mareta (2025).

2018-2026 (cumulative / structural)

More than 140 decree-laws approved.

Record public debt above €1.6 trillion.

Cumulative tax increases (social security contributions, temporary energy taxes).

Opacity and debate over NextGeneration funds.

State intervention in Telefónica.

Conflict over renewal of the CGPJ.

More than 1,200 Transparency Council resolutions pending or unfulfilled.
