And by this I mean systems where a single user or app (or whatever the customer unit is) can saturate a shared resource and deny service to everyone, and this happens on a regular basis.

* the clients are other computers, not humans

* the users are other businesses, not people

* those businesses are building other businesses on top of you, and reselling access

* you allow your users and their users to write custom code and/or queries to run on *your* platform

* especially (but not only) if their code or queries run on shared resources

😘🍭🍭🍭

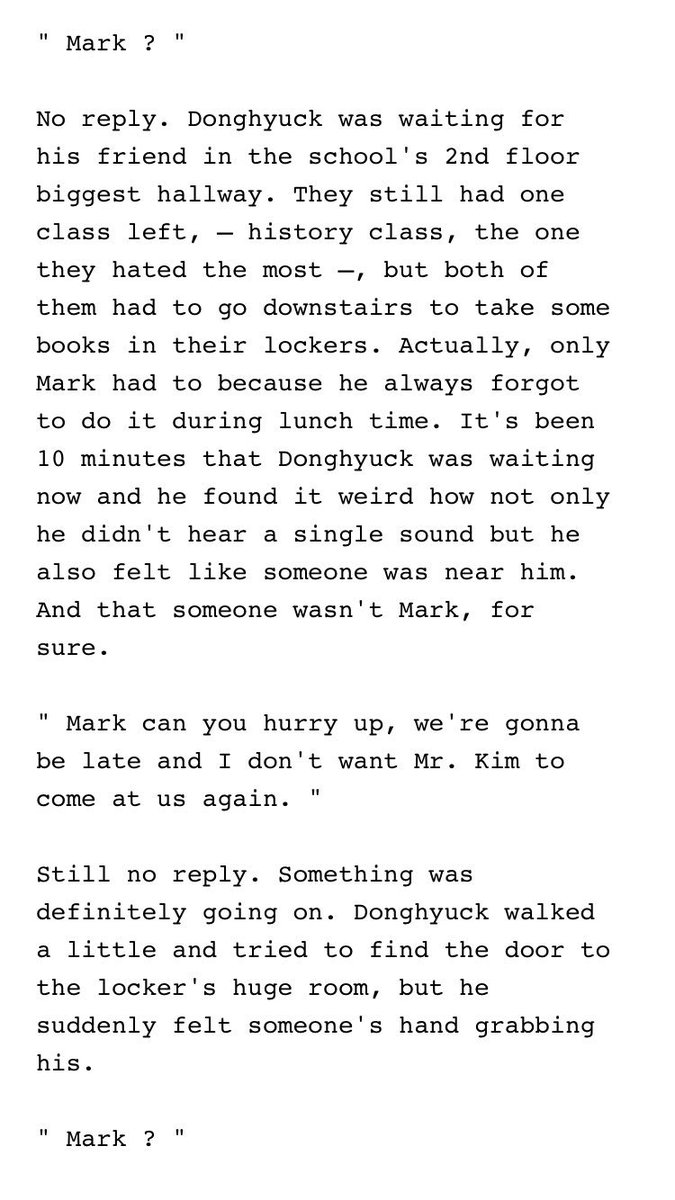

Platforms. Right. So to me, as a backend engineer, a platform is basically defined by this practice of *inviting a user's chaos to reside on your systems instead of theirs*. Making their unknown-unknowns YOUR ops problem, not theirs.

HAHAHAHA. Sweet child, if it does, you have bigger problems.

One app, two apps, hundreds and thousands of apps... "EF" apps are ones you never even have to think about as individuals.

(**Zero** extra time and attention, above all.)

More likely you have some of these in your code:

```java
if (uuid.equals("CA761232-ED42-11CE-BACD-00AA0057B223")) { /* do .. */ }
```

Which doesn't change the fact that every time you do custom work for one of them, you are mortgaging your future. It's not sustainable.

When you have unpredictable traffic patterns and users prone to independent burstiness,

(assuming you have shared components; not sharing any at all is hard and *expensive*)

And it's why your platform should be written in a multithreaded language.

It can take your entire system down in seconds flat... for *everyone*.

It can be insanely difficult to figure out which of your thousands of active users actually took down the site. (Raise your hand if you've ever just started bisecting users or shards or services to narrow down the culprit... ✋)
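For the curious, "bisecting users" looks roughly like this. It is a minimal sketch, assuming exactly one culprit whose damage reproduces every time it is enabled; `isHealthyWith` is a hypothetical probe (enable only these users, watch whether the system stays up), and every probe is an experiment run against production. Even in the best case it takes about log2(n) rounds to isolate one user out of n.

```java
import java.util.List;
import java.util.function.Predicate;

public class BisectCulprit {
    // Binary search for a single misbehaving user.
    // isHealthyWith is a hypothetical probe: it enables only the given users
    // and reports whether the system stays healthy.
    // Assumes exactly one culprit whose effect reproduces deterministically.
    static <T> T findCulprit(List<T> users, Predicate<List<T>> isHealthyWith) {
        if (users.isEmpty()) {
            throw new IllegalArgumentException("no users to bisect");
        }
        List<T> suspects = users;
        while (suspects.size() > 1) {
            int mid = suspects.size() / 2;
            List<T> firstHalf = suspects.subList(0, mid);
            if (isHealthyWith.test(firstHalf)) {
                // First half is fine, so the culprit is in the second half.
                suspects = suspects.subList(mid, suspects.size());
            } else {
                suspects = firstHalf;
            }
        }
        return suspects.get(0);
    }

    public static void main(String[] args) {
        List<String> users = List.of("u1", "u2", "u3", "u4", "u5", "u6", "u7");
        // Simulated probe: the system falls over whenever "u5" is enabled.
        Predicate<List<String>> probe = subset -> !subset.contains("u5");
        System.out.println(findCulprit(users, probe)); // prints "u5"
    }
}
```

The catch is in the assumptions: real incidents rarely have exactly one deterministic culprit, and each round means deliberately degrading service for somebody.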

It might not even be the fault of any *user*; it could be networking, or database hardware, or...

You just need to sum up the lock time held, then break down by app id to see what's holding the lock.
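The aggregation itself is tiny. Here is a minimal sketch, assuming your instrumentation already emits one event per lock acquisition carrying the app id and how long the lock was held; `LockEvent` and `topLockHolders` are hypothetical names made up for illustration, not part of any real library.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class LockTimeByApp {
    // Hypothetical event: one per lock acquisition, recording which app
    // held the lock and for how many milliseconds.
    record LockEvent(String appId, long heldMillis) {}

    // Sum lock-held time per app id and return apps ranked by total, largest first.
    static List<Map.Entry<String, Long>> topLockHolders(List<LockEvent> events) {
        Map<String, Long> totals = new HashMap<>();
        for (LockEvent e : events) {
            totals.merge(e.appId(), e.heldMillis(), Long::sum);
        }
        List<Map.Entry<String, Long>> ranked = new ArrayList<>(totals.entrySet());
        ranked.sort(Map.Entry.<String, Long>comparingByValue().reversed());
        return ranked;
    }

    public static void main(String[] args) {
        List<LockEvent> events = List.of(
            new LockEvent("app-a", 12),
            new LockEvent("app-b", 4000),
            new LockEvent("app-a", 30),
            new LockEvent("app-c", 5),
            new LockEvent("app-b", 2500));
        for (Map.Entry<String, Long> e : topLockHolders(events)) {
            System.out.println(e.getKey() + " " + e.getValue());
        }
    }
}
```

Running `main` prints `app-b 6500`, then `app-a 42`, then `app-c 5`: the noisy neighbor falls straight out of the top of the list, with no bisecting required.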

All that matters is each and every customer's experience. No matter how large or small they are, or how weird their use case.

But if you run a platform, you should try it. You'll wonder how you ever computered without it.

A one-off is never a one-off: never.

Ever.

If nothing else, your product team should keep an eagle eye on those one-offs.

Either that or build it and hemorrhage your engineering lifeblood trying to prop it up.