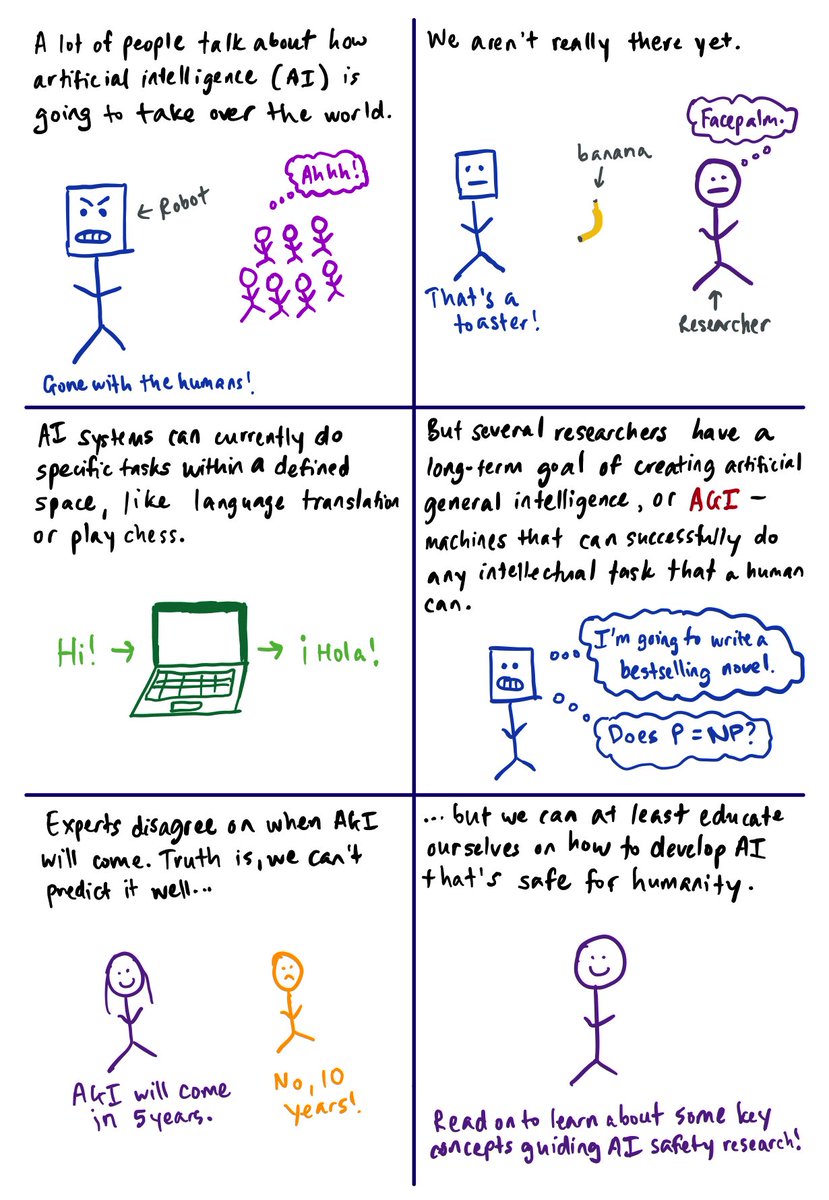

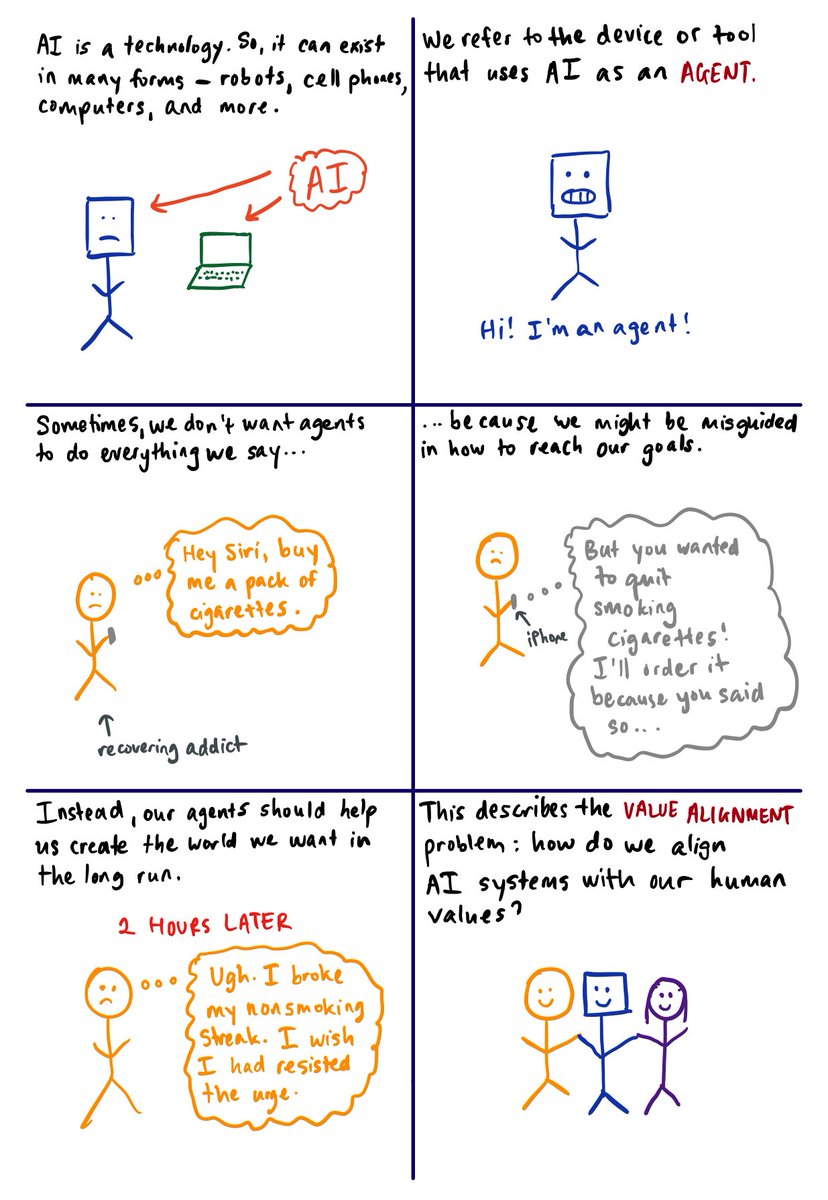

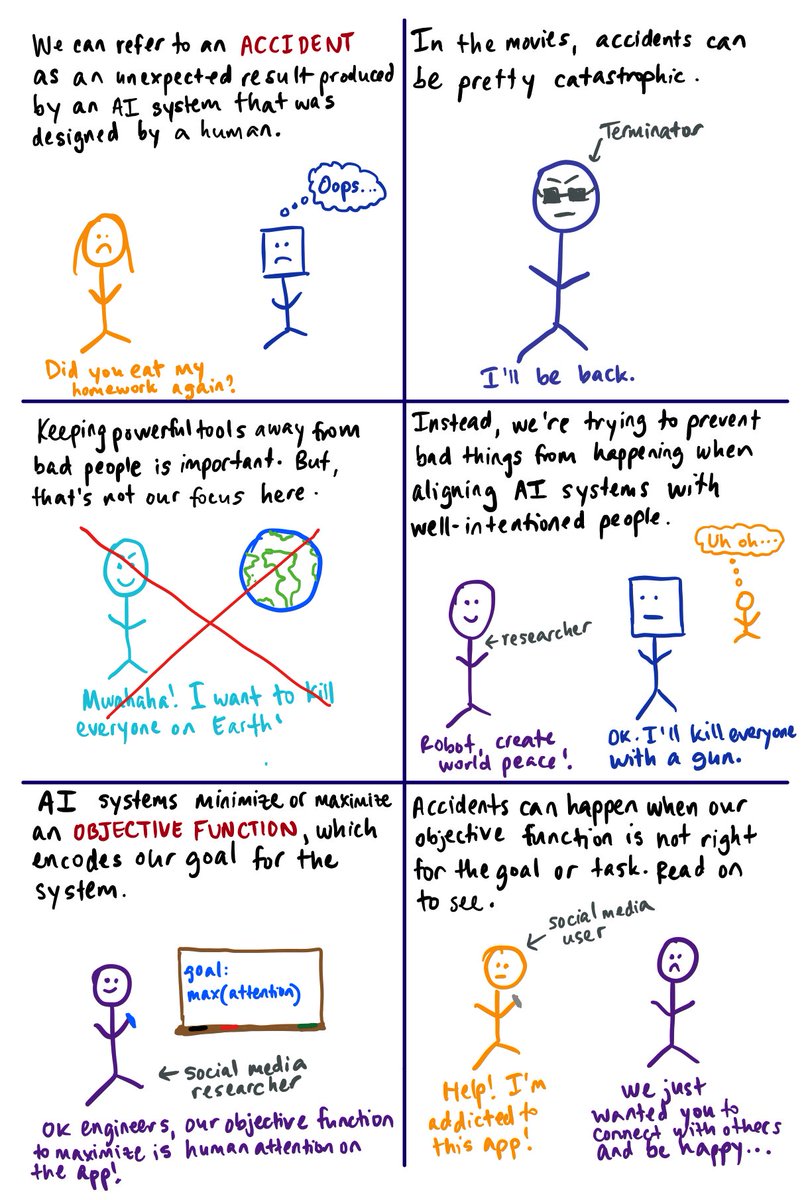

after reading papers & talking to peers, i realized that someone should probably explain the field in layman's terms. here's my attempt! (1/14)

ex: corrupt politicians in the government, "mean girls" in middle school social dynamics

there are always malicious people trying to deceive ML systems. ex: uploading an inappropriate video to YouTube Kids (2/14)

yes, there is high risk involved. but high rewards come from high risk. i choose to be an optimist. i hope you do, too. (14/14)

* Motivating the Rules of the Game for Adversarial Example Research: arxiv.org/abs/1807.06732 (Gilmer et al. 2018)

* Adversarial Examples Are Not Bugs, They Are Features: arxiv.org/abs/1905.02175 (Ilyas et al. 2019)

* Unsolved research problems vs. real-world threat models: medium.com/@catherio/unso… (@catherineols)

* Is attacking machine learning easier than defending it?: cleverhans.io/security/priva… (@goodfellow_ian and @NicolasPapernot)