You best believe ima live tweet this bad Larry

👇🏻

This is the biggest thing for me. As @saund_katie says: laughter isn’t joy, it’s an excess of any emotion.

Ex.: People scowl only 30% of the time when they’re angry. The other 70% of the time, they’re doing something else.

Adding cultural diversity just makes matters worse.

TLDR: “evidence for universal emotional expressions vanishes.”

🔥🔥🔥🔥🔥🔥🔥🔥

“We often mistake statistical significance for actual significance for real life.”

GIRL PREACH.

It’s not that there’s NO correlation, it’s that FACE IS NOT ENOUGH.

The face is not universal truth.

“Hundreds of papers are getting published in our very best journals based on stereotypes. Basically, it’s a study of emojis.”

🤯🤬😢

Emotions have vastly diverse neural and behavioral signals.

Punchline: “variety is the norm.”

Functional dynamics, lesion studies, animal studies, etc.: emotion categories are always degenerate (many different patterns can produce instances of the same category).

We impose meaning on certain signals because we’ve learned them. This is how we go from scowl —> mad.

It’s a problem of understanding the category a behavior belongs to in a given instance in a given environment.

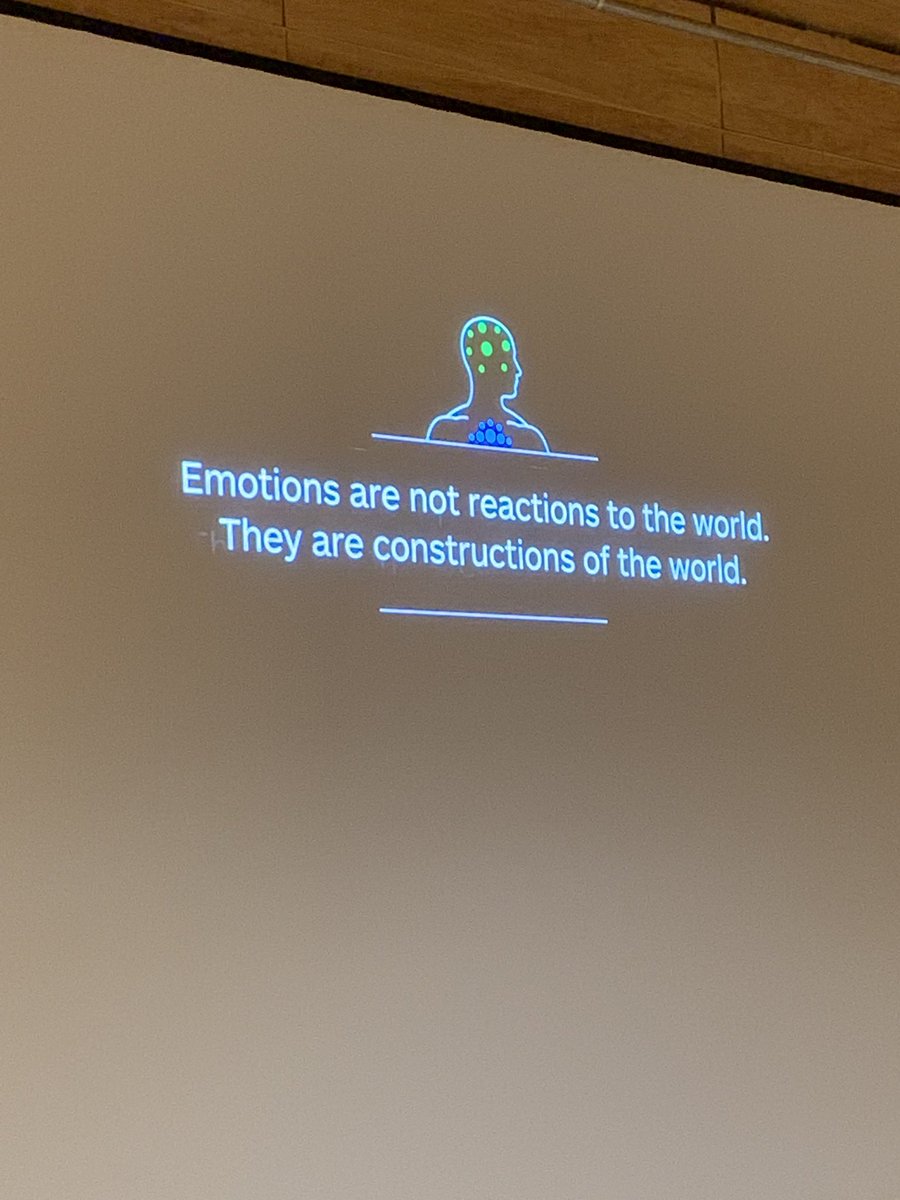

Emotions are not built into your brain and body, but built BY your brain and body, dynamically, as you need them.

It feels like we’re reading, when in fact we’re constructing.

Your brain is stuck in a box, figuring out the outside world only through the senses. Sensory changes are only effects of the world, and your brain just guesses the causes.

Classic reverse inference, amirite?

Your brain remembers experiences that are similar to the present one.

These categories are potential explanations for all these wacky effects we feel.

Sidebar: Codenames is a great board game that is a real-life demo of this. @LFeldmanBarrett have you played?? Want to play after this talk?? It’s a cognitive philosopher’s dream.

Like being nervous before a talk? 😇

This works for emotions too.

“Emotions that seem to happen TO you are actually made BY you.”

#zen

BUT physical signals are inherently ambiguous without context!

Who knows, she’s out of time ⌛️

Hands shooting up for Q&A, I love this.

A: depends on context. Maybe helpful, maybe prohibitive. “I’ve never considered validity/ethics separately. Given probabilistic accuracy, I don’t believe machines will ever outstrip humans.”

Given this, algorithms can be wrong, and we don’t want algorithms that can be wrong determining outcomes.

However, given how we regulate each other’s nervous systems, agents *could* help people by implementing mechanisms that provide biologically grounded emotional support.

A: “I want to answer a different question.” The amount of money we spend on research like this is NOTHING compared to other sciences. With huge funding, we could have solved this already.

People are into data about themselves. Maybe we don’t need to make assumptions; we can just get the friggin data we need to solve this.

“We’re laboring under huge resource constraints.”

A: It’s hard. It’s cumbersome. It’s costly. You need to engage people as participant scientists. Don’t convince, be authentically inclusive. Be respectful of collaborative subjects.

A (discussion): I wanted all senior people to pool resources. Only Adolphs said yes 🙄

A: “I’ve never taken up anybody’s invitation to criticize Ekman personally.”

Our own assumptions percolate into all studies.

Stay tuned for more hot emotional content that machines can’t hope to understand 🙃