Chris: Inductive bias is not language-specific; e.g., animals need to track entities for perception. Early work on recursive neural networks can also be applied to vision.

Sam: Not aware of any recent work that uses a more linguistically oriented inductive bias and works in practice on a benchmark task.

Q: Is there an endless cycle of collecting data, finding biases, and trying to address these biases?

Sam: Building datasets to make vague intuitive definitions more concrete.

Yejin: Revisit how datasets are constructed (balance them, counteract biases). Come up with algorithms that generate datasets.

Chris: The problem is a lack of education. In psychology, students spend a lot of time on experimental design.

Percy: Was really worried about bias 6 months ago; not worried about it anymore.

Devi: Synthetic, complex datasets (e.g., CLEVR) are also useful for building models that do certain things.

The indigenous NLP tradition has been replaced by the ML tradition over the last decade. ML people require i.i.d. data. We should not test on i.i.d. data, but on data from a different distribution.

Q: Other ways to induce inductive bias besides architecture/data?

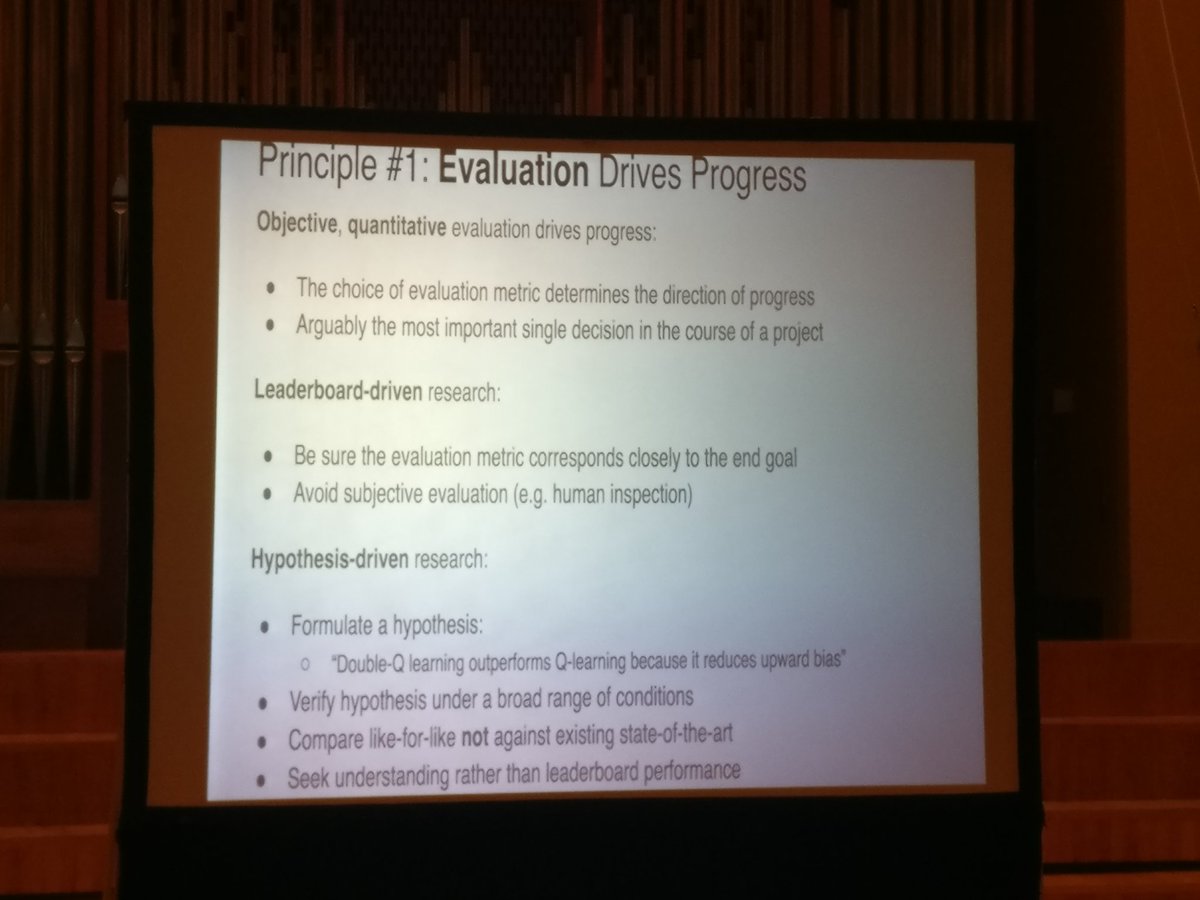

Yejin: Evaluation metrics like BLEU/ROUGE are not that meaningful; it is important to do more human evaluation.

Chris: Humans are not good at constructing auxiliary tasks.

Percy: A summarization model trained with a human in the loop on lots of examples would reach human performance.

Q: Will pre-trained models be used in all NLP tasks in the coming years?

Percy: There is room for pre-trained representations in some tasks; for most tasks, we will need to go beyond that.

Q: Should people release more challenge datasets?

Devi: We don't want to lose easy knowledge. E.g., for VQA, it is not clear which problems subsume each other.

Yejin: Possible. We shouldn't repeat what people have tried with symbolic logic. A model could encode natural language in a knowledge representation.

Q: For MultiNLI, there is a small gap between in-domain and out-of-domain performance. Do we learn more about the way annotators generate training examples than about natural language phenomena?

Q: How do we evaluate the abstractiveness of NLG systems?

Yejin: Good question. Might want to measure whether a summary compresses the text well through rewriting vs. substitution.
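For context, a common automatic proxy for abstractiveness (not something proposed on the panel; included here as an assumption) is the fraction of summary n-grams that never appear in the source. A minimal sketch, assuming naive lowercase whitespace tokenization; the function names `ngrams` and `novel_ngram_fraction` are hypothetical:

```python
from typing import List, Set, Tuple


def ngrams(tokens: List[str], n: int) -> Set[Tuple[str, ...]]:
    """Return the set of distinct n-grams (as tuples) in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def novel_ngram_fraction(source: str, summary: str, n: int = 2) -> float:
    """Fraction of distinct summary n-grams that are absent from the source.

    0.0 means every summary n-gram is copied from the source (fully extractive);
    values near 1.0 mean the summary is mostly new wording.
    Assumption: lowercase whitespace tokenization, no punctuation handling.
    """
    src = ngrams(source.lower().split(), n)
    summ = ngrams(summary.lower().split(), n)
    if not summ:
        # A summary too short to contain any n-gram is treated as non-novel.
        return 0.0
    return len(summ - src) / len(summ)


source = "the cat sat on the mat"
print(novel_ngram_fraction(source, "the cat sat on the mat"))      # 0.0
print(novel_ngram_fraction(source, "a feline rested on the rug"))  # 0.8
```

Note that this score cannot by itself separate genuine rewriting from word-for-word substitution (a synonym swap also produces "novel" n-grams), which is exactly the distinction raised in the answer above.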