A thread about how it came to be at Amazon...

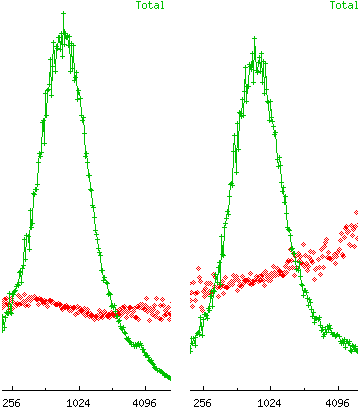

I broke down our page serve times in a bunch of different ways, but for this thread I'll look at just one: detail page latency.

The rest is history!

That focus on high percentiles instead of averages has driven so much good behavior.
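The thread doesn't show how the percentiles were computed. As a minimal sketch with made-up numbers (not Amazon's data), this shows why a high percentile can expose slowness that an average hides. It assumes the nearest-rank percentile definition; `percentile` and `latencies_ms` are hypothetical names.

```python
import math
from fractions import Fraction
from statistics import mean


def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Fraction keeps the rank exact (e.g. 99.9% of 1000 samples is rank 999),
    # avoiding floating-point rounding right at a boundary.
    rank = math.ceil(Fraction(p) * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical detail-page latencies in ms: most requests are fast,
# but 1% of them hit a slow path.
latencies_ms = [100] * 990 + [3000] * 10

print(f"mean: {mean(latencies_ms)} ms")
for p in ("50", "99", "99.9"):
    print(f"p{p}: {percentile(latencies_ms, p)} ms")
```

With these numbers the mean is 129 ms and p99 still reads 100 ms, while p99.9 reports the 3,000 ms that 1% of requests actually wait.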

That's it!

@EricaJoy @ewindisch @KatyMontgomerie @negrosubversive @kimmaytube @mykola @abbyfuller @ChloeCondon @ASpittel @jessfraz @QuetzalliAle @ErynnBrook @prisonculture @mekkaokereke @hirokonishimura