One key challenge is vocabulary, which may vary drastically across domains. Sentence structure may differ as well, and this has serious consequences for downstream tasks, e.g., NER.

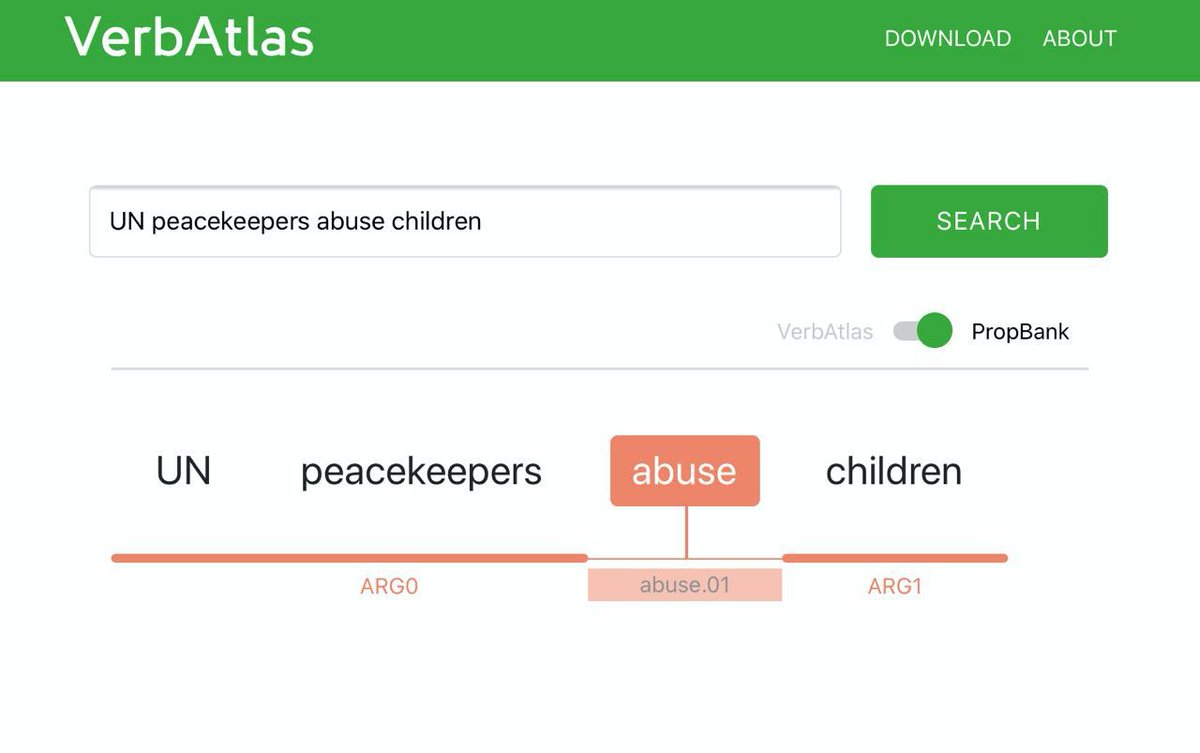

verbatlas.org/search?word=UN…

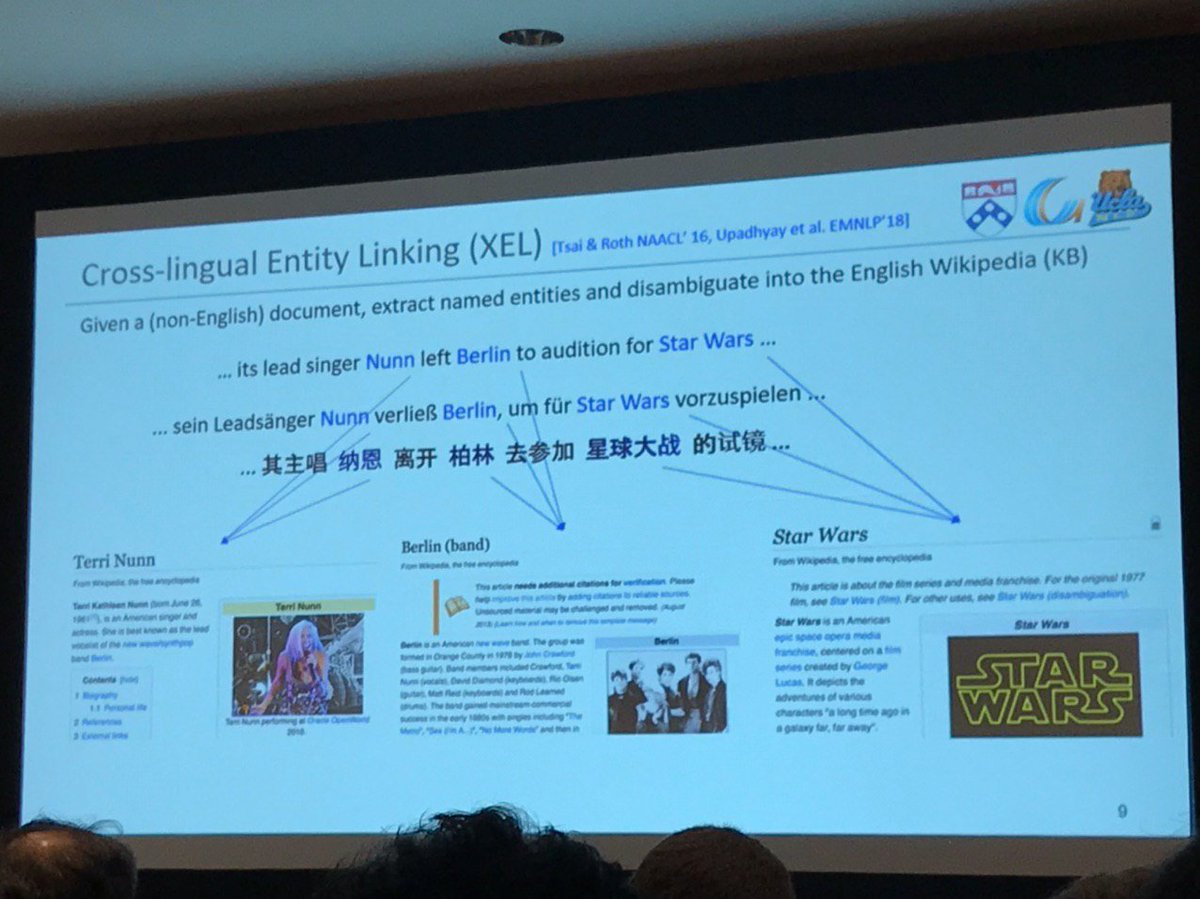

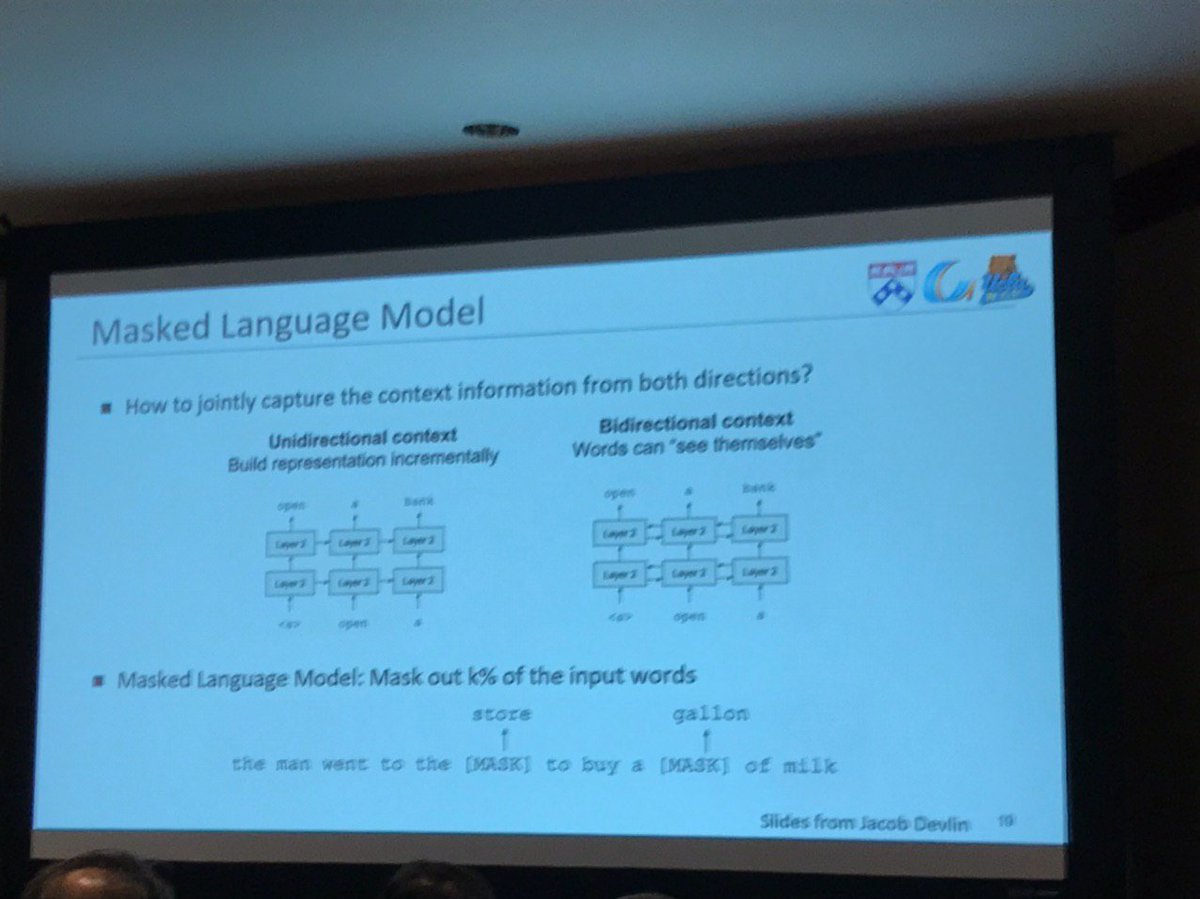

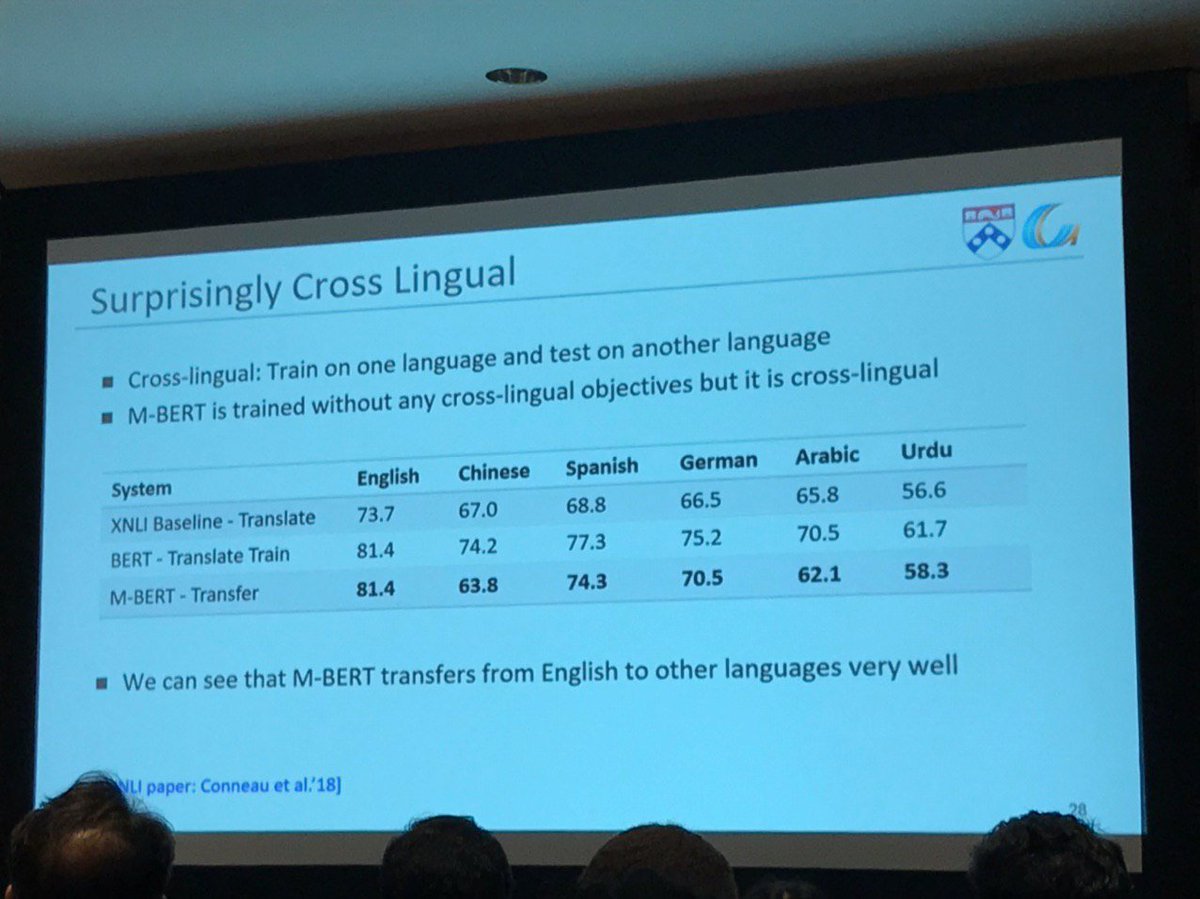

1) Can we utilize recent technologies to address cross-X transfer, where X can be language, modality, or domain?

2) How?

- two surprise languages (Odia and Ilocano)

- two weeks to develop a solution

- no annotated data

- minimal remote exposure to native speakers