Why today? Because the House Oversight report has been released. Merry Christmas! oversight.house.gov/wp-content/upl…

I also predicted that the breach would be financially lucrative for Equifax: that it was effectively the best lead-gen event for the company EVER.

Anyway. On to the security issues.

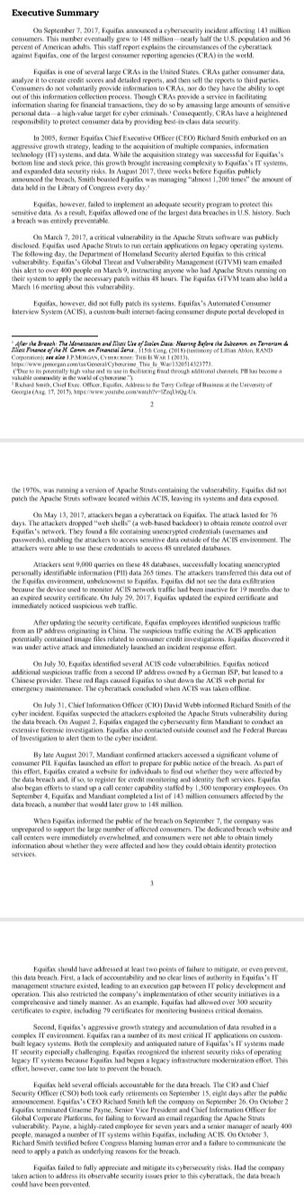

"Equifax, however, failed to implement an adequate security program to protect this sensitive data. As a result, Equifax allowed one of the largest data breaches in U.S. history. Such a breach was entirely preventable."

The email instructed that Struts be patched within 48 hours.

The meeting was held a week after the email was sent.

Already, there is a red flag here.

Because you know that no one actually did.

However, you need a lot of internal political clout and organization in a company that big to get things patched that quickly.

Equifax had a total of two months and one week to address this issue.

"How many web shells, Adrian?"

I'm glad you asked! 30 web shells. 30.

@viss found the answer back when details from the Mandiant investigation were first coming out.
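Thirty web shells should leave footprints on disk. One cheap way to catch them is file-integrity monitoring: hash the web root at deploy time, then flag any server-side script that appears or changes afterward. Here's a minimal Python sketch; the function names, the `.jsp`/`.jspx` extension list, and the snapshot-at-deploy workflow are all hypothetical, not details from the report.

```python
import hashlib
import os
from pathlib import Path

# Hypothetical: extensions treated as server-side scripts a web shell could hide in.
SCRIPT_EXTS = {".jsp", ".jspx"}


def hash_tree(root: str) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    manifest = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = Path(dirpath) / name
            # Reads the whole file into memory; fine for a sketch, not for huge files.
            manifest[str(full.relative_to(root))] = hashlib.sha256(full.read_bytes()).hexdigest()
    return manifest


def suspicious_changes(baseline: dict[str, str], current: dict[str, str],
                       exts: set[str] = SCRIPT_EXTS) -> list[str]:
    """Return script files that are new, or whose contents differ from the baseline."""
    flagged = []
    for path, digest in current.items():
        if Path(path).suffix.lower() not in exts:
            continue
        # baseline.get() returns None for brand-new files, so they are flagged too.
        if baseline.get(path) != digest:
            flagged.append(path)
    return sorted(flagged)
```

In practice you'd store the `hash_tree()` output for your (hypothetical) web root when you deploy, then diff a fresh snapshot against it on a schedule.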

Another good question! Equifax owned a massive IP space: 17,152 possible IPs, to be exact.
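For a sense of how that number adds up, here's a small sketch that counts the unique addresses covered by a list of CIDR blocks using Python's standard `ipaddress` module. The blocks in the example are hypothetical private ranges picked only so the arithmetic lands on 17,152 (one /18, one /23, one /24); they are not Equifax's actual allocations.

```python
import ipaddress


def total_addresses(cidrs: list[str]) -> int:
    """Count unique IPv4/IPv6 addresses covered by a list of CIDR blocks."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    # collapse_addresses merges overlapping and adjacent blocks,
    # so no address is counted twice.
    return sum(n.num_addresses for n in ipaddress.collapse_addresses(nets))


# Hypothetical blocks: 16,384 + 512 + 256 addresses.
example = ["10.0.0.0/18", "10.1.0.0/23", "10.2.0.0/24"]
```

Every one of those addresses is something you need to know you own before you can patch or monitor it.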

I know what you're thinking. NO WAY - does anyone really still do that these days???

Any bets as to what kind of file it was? Here's a hint: it contained creds for 48 databases.
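Finding a file like that before an attacker does is mostly a search problem. A rough sketch, assuming plaintext `key=value` or `key: value` config files; the keyword list in the regex is a hypothetical starting point, and dedicated secret scanners go much further.

```python
import re

# Hypothetical pattern: a key containing a credential-ish word, then = or :, then a value.
CRED_PATTERN = re.compile(
    r"^\s*([\w.\-]*(?:password|passwd|pwd|secret)[\w.\-]*)\s*[=:]\s*(\S+)",
    re.IGNORECASE,
)


def find_plaintext_creds(text: str) -> list[tuple[int, str]]:
    """Return (line number, key name) for lines that look like stored credentials."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        m = CRED_PATTERN.match(line)
        if m:
            hits.append((lineno, m.group(1)))
    return hits
```

Run something like this across file shares and web roots; every hit is a credential that should live in a secrets manager instead.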

Why didn't Equifax notice the exfiltration? Well, honestly, most orgs aren't set up to detect data exfiltration on the wire.
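Even without inspecting content, volume alone is a usable signal. A minimal sketch of per-host baselining, assuming you already collect daily outbound byte counts per host (from flow logs, for example). The function name and the 3-sigma threshold are arbitrary assumptions, and a patient attacker can stay under any fixed threshold.

```python
import statistics


def flag_exfil_candidates(history: dict[str, list[int]],
                          today: dict[str, int],
                          k: float = 3.0) -> list[str]:
    """Flag hosts whose outbound bytes today exceed mean + k * stdev of their history."""
    flagged = []
    for host, sent in today.items():
        past = history.get(host, [])
        if len(past) < 2:
            continue  # not enough history to build a baseline (stdev needs 2+ points)
        mean = statistics.mean(past)
        stdev = statistics.stdev(past)
        if sent > mean + k * stdev:
            flagged.append(host)
    return sorted(flagged)
```

Hosts with no history are skipped here; in a real deployment those are arguably the ones to look at first.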

And that's because no one was formally responsible for certificate management internally. In an organization that owned over 17,000 routable IPs. Maybe it was just internal cert responsibility that got the hot potato treatment?
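Ownership is the real fix, but the check itself is cheap. A sketch using Python's standard `ssl` module; the helper names and the 30-day warning window are hypothetical choices.

```python
import socket
import ssl
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days until a cert's notAfter time; negative means it has already expired.

    not_after uses the format returned by SSLSocket.getpeercert(),
    e.g. "Jan  5 09:34:43 2018 GMT".
    """
    if now is None:
        now = time.time()
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def needs_renewal(not_after: str, warn_days: float = 30, now: Optional[float] = None) -> bool:
    """True if the cert expires within warn_days (or has already expired)."""
    return days_until_expiry(not_after, now) < warn_days


def fetch_not_after(host: str, port: int = 443) -> str:
    """Fetch a live server's notAfter string.

    With the default context, an already-expired cert makes the handshake itself
    fail with ssl.SSLCertVerificationError, which is also a signal worth alerting on.
    """
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=5) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

Point it at an inventory of every device that terminates or inspects TLS, not just the public websites.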

At this point, the executive summary gets into breach response and corporate accountability.

1. Staff was AWARE of deficiencies

2. Proper processes, tools and policies existed

3. Lack of leadership and accountability allowed processes to fail, tools to fall into disrepair, and policies to be disregarded.

*Use tact; don't literally do this, please. You can't help anyone when you're fired.

This was 2 months before the attack.

Why?

They forgot to use the recursive flag and were just searching the root web directory with the tool.
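The text doesn't tell us which tool they used or how it works internally, but the failure mode is easy to reproduce with any file search. In this Python sketch, a non-recursive glob silently returns nothing when the JAR sits a few directories down. The `struts2-core-*.jar` pattern, the directory layout, and the version number in the filename are assumptions for illustration.

```python
import tempfile
from pathlib import Path


def find_struts_jars(root: str, recursive: bool = True) -> list[Path]:
    """Find Struts 2 core JARs. A non-recursive search only looks in root itself."""
    pattern = "struts2-core-*.jar"  # assumed naming pattern for Struts 2 core JARs
    base = Path(root)
    matches = base.rglob(pattern) if recursive else base.glob(pattern)
    return sorted(matches)


def demo_shallow_vs_recursive() -> tuple[int, int]:
    """Build a throwaway web root with a JAR under WEB-INF/lib and scan it both ways."""
    with tempfile.TemporaryDirectory() as root:
        lib = Path(root, "app", "WEB-INF", "lib")
        lib.mkdir(parents=True)
        (lib / "struts2-core-2.3.30.jar").touch()  # hypothetical vulnerable version
        shallow = len(find_struts_jars(root, recursive=False))
        deep = len(find_struts_jars(root, recursive=True))
    return shallow, deep
```

The shallow scan reports zero hits, which looks exactly like "not vulnerable."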

I can imagine the commentary:

"WOW, this tool is FAST!"

"Yeah, good thing we're not vulnerable!"

If your Java webapp starts randomly running 'whoami', that should be cause for alarm. Simple anomaly detection works here, just as it does on Windows (cmd.exe or PowerShell running as a child of WINWORD.EXE = bad).
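That kind of anomaly detection can start as a plain denylist of parent/child process pairs. A sketch assuming process-creation events arrive as (parent, child) name pairs, e.g. from Sysmon or an EDR; the rule table and function names are hypothetical and deliberately tiny.

```python
from typing import Iterable

# Hypothetical rules: parent process name -> children it should never spawn.
SUSPICIOUS_CHILDREN = {
    "java": {"whoami", "sh", "bash", "id", "uname"},
    "winword.exe": {"cmd.exe", "powershell.exe"},
}


def is_suspicious(parent: str, child: str) -> bool:
    """True if child is a process we never expect parent to spawn (case-insensitive)."""
    return child.lower() in SUSPICIOUS_CHILDREN.get(parent.lower(), set())


def alerts(events: Iterable[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return the (parent, child) events that match a rule."""
    return [(p, c) for p, c in events if is_suspicious(p, c)]
```

A denylist is easy to evade by renaming binaries; it's a starting point, not a finished detection.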

Wait, WHAT? Equifax had a dedicated Emerging Threats team???

"The Equifax Countermeasures team installed the Snort rule..."

A COUNTERMEASURES team also?!

This is scriptwriting 101. I don't think a script of mine has EVER worked on the first try.
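The general lesson: before trusting a clean result from a scan or detection, confirm it can find something you know is there. A minimal Python sketch of that habit, where `scan` is any hypothetical function returning findings and `canary` is a known-positive you planted yourself.

```python
from typing import Callable, Iterable


def trust_scan(scan: Callable[[], Iterable[str]], canary: str) -> list[str]:
    """Run a scan and refuse to accept its results unless it finds the planted canary."""
    results = list(scan())
    if canary not in results:
        raise RuntimeError(f"scan missed known-positive {canary!r}; treat results as invalid")
    # Drop the canary so only real findings are returned.
    return [r for r in results if r != canary]
```

A scan that can't find the canary gets an error instead of a false all-clear.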

@gossithedog used Google

Perhaps 5 or so could have prevented the breach entirely. Many of the remaining 29 could have detected the breach in enough time to stop it.