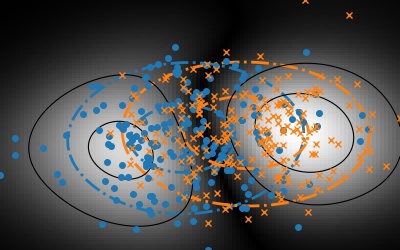

A simple summary of our #NeurIPS2019 work

gael-varoquaux.info/science/compar…

Given two sets of observations, how can we know whether they are drawn from the same distribution? Short answer in the thread.

This framework builds upon solid mathematical foundations (reproducing kernel Hilbert spaces, RKHS), fast testing procedures, and a line of results that originated with MMD, the maximum mean discrepancy.
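For readers who have not met MMD before, here is a minimal sketch of the classic kernel two-sample test it leads to. This is the textbook biased MMD estimate with a permutation test, not the specific method of the paper announced here. The Gaussian kernel, the fixed `bandwidth`, the number of permutations, and all function names are assumptions chosen for illustration.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth):
    """Gaussian (RBF) kernel matrix between the rows of a and the rows of b."""
    sq_dists = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    # Clip tiny negative values caused by floating-point round-off.
    return np.exp(-np.maximum(sq_dists, 0.0) / (2.0 * bandwidth**2))


def mmd2_biased(x, y, bandwidth=1.0):
    """Biased estimate of the squared MMD between samples x and y."""
    k_xx = gaussian_kernel(x, x, bandwidth).mean()
    k_yy = gaussian_kernel(y, y, bandwidth).mean()
    k_xy = gaussian_kernel(x, y, bandwidth).mean()
    return k_xx + k_yy - 2.0 * k_xy


def mmd_permutation_test(x, y, bandwidth=1.0, n_permutations=200, seed=0):
    """Return (statistic, p_value) for H0: x and y share one distribution."""
    rng = np.random.default_rng(seed)
    observed = mmd2_biased(x, y, bandwidth)
    pooled = np.vstack([x, y])
    n = len(x)
    count = 0
    for _ in range(n_permutations):
        # Under H0 the group labels are exchangeable, so shuffle them.
        perm = rng.permutation(len(pooled))
        stat = mmd2_biased(pooled[perm[:n]], pooled[perm[n:]], bandwidth)
        count += int(stat >= observed)
    # The +1 terms keep the p-value strictly positive and valid.
    p_value = (count + 1) / (n_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    x = rng.normal(0.0, 1.0, size=(100, 2))
    y = rng.normal(2.0, 1.0, size=(100, 2))  # shifted mean
    print(mmd_permutation_test(x, y))
```

A small p-value suggests the two samples come from different distributions. In practice the bandwidth matters a lot; a common heuristic is the median pairwise distance, which is left out here to keep the sketch short.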

@ScetbonM presents this specific work on Thursday, with a spotlight at 4:55pm and afterwards during the poster reception

nips.cc/Conferences/20…