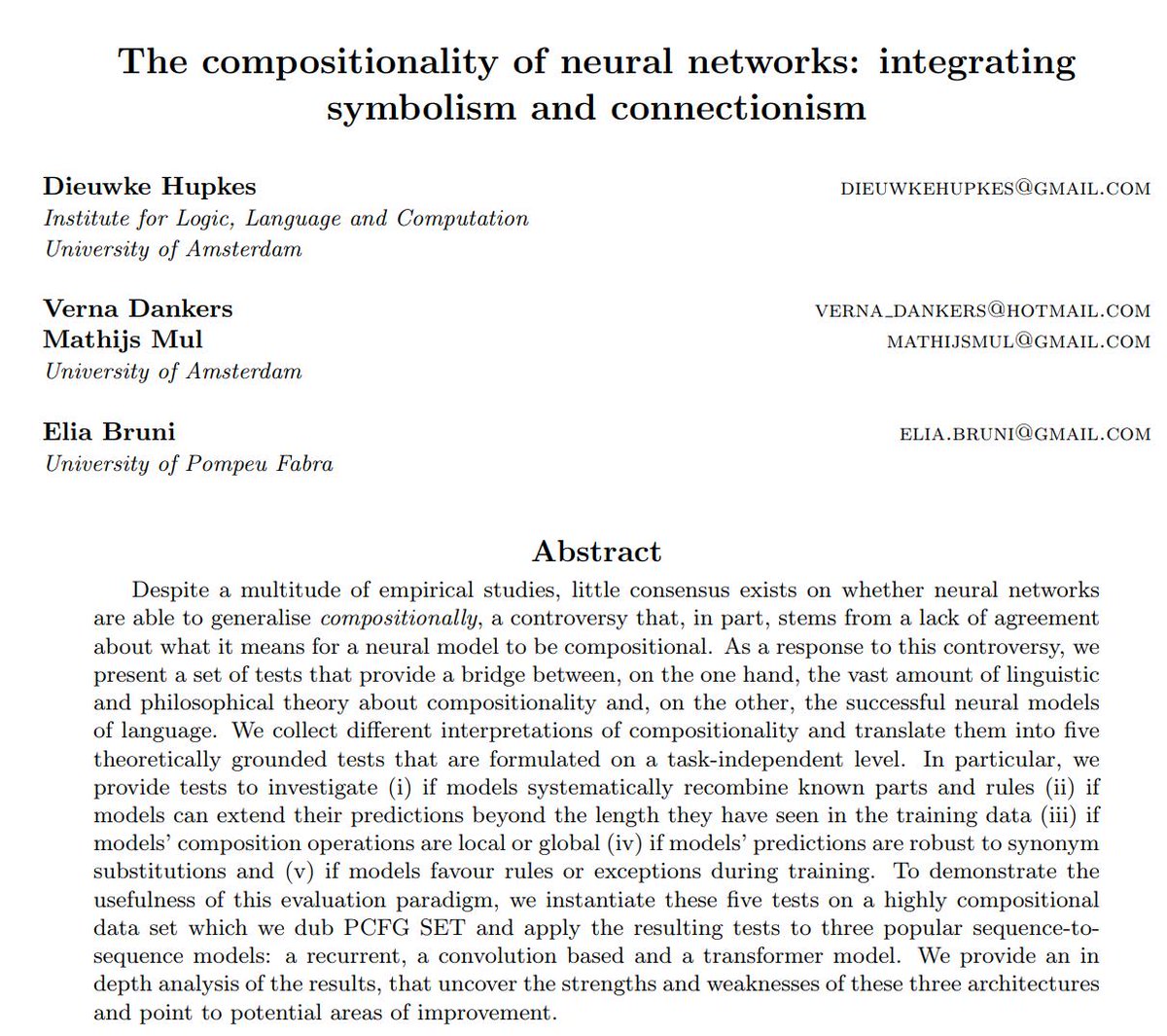

arxiv.org/abs/1801.01239

1. There is relatively little direct interaction between policymakers and scientists (*cough* *cough*).

2. The scientific problem is sufficiently hard.

3. The scientific community tends to produce many low-powered (*) studies instead of a few high-powered ones.

(*) For those unfamiliar, "power" is a term of art: it is the probability that a study detects an effect that really exists. As a rough approximation, think of it as sample size.
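To make the power vs. sample size link concrete, here is a minimal sketch. It assumes a two-sided, two-sample z-test with equal group sizes, a standardized effect size (Cohen's d), and the usual normal approximation. The effect size of 0.3 and the participant budget are hypothetical numbers chosen only for illustration, not figures from the linked papers.

```python
from statistics import NormalDist


def approx_power(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test.

    Assumes equal group sizes, known variance (normal approximation),
    and effect_size given as Cohen's d (mean difference / common SD).
    """
    z = NormalDist()  # standard normal
    z_crit = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    shift = effect_size * (n_per_group / 2) ** 0.5  # expected test statistic
    # Probability the statistic lands in either rejection region
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


if __name__ == "__main__":
    # Power grows with sample size for a fixed (hypothetical) effect size
    for n in (10, 20, 50, 100, 200):
        print(f"n={n:>3} per group: power ~ {approx_power(0.3, n):.2f}")

    # Same hypothetical budget of 400 participants, split two ways
    print("10 studies, 20/group each:", round(approx_power(0.3, 20), 2))
    print(" 1 study, 200/group:      ", round(approx_power(0.3, 200), 2))
```

Under these assumptions each small study has a low chance of detecting the effect, while the single large study with the same total budget has a high one, which is the trade-off point 3 is about.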

journals.uchicago.edu/doi/abs/10.108…

dl.acm.org/doi/abs/10.114…