- Forgot to renew a domain name

- Didn't renew a security certificate, and it expired...

- Physical hardware issues during Hurricane Sandy: "surprisingly, computers don't function well underwater"

Drift Into Failure by Sidney Dekker: everyone can do everything right at every step, and you may still get a catastrophic failure as a result. Protip: don't read this on a plane or with a loved one nearby!

- Operations

- Application

- Software stack

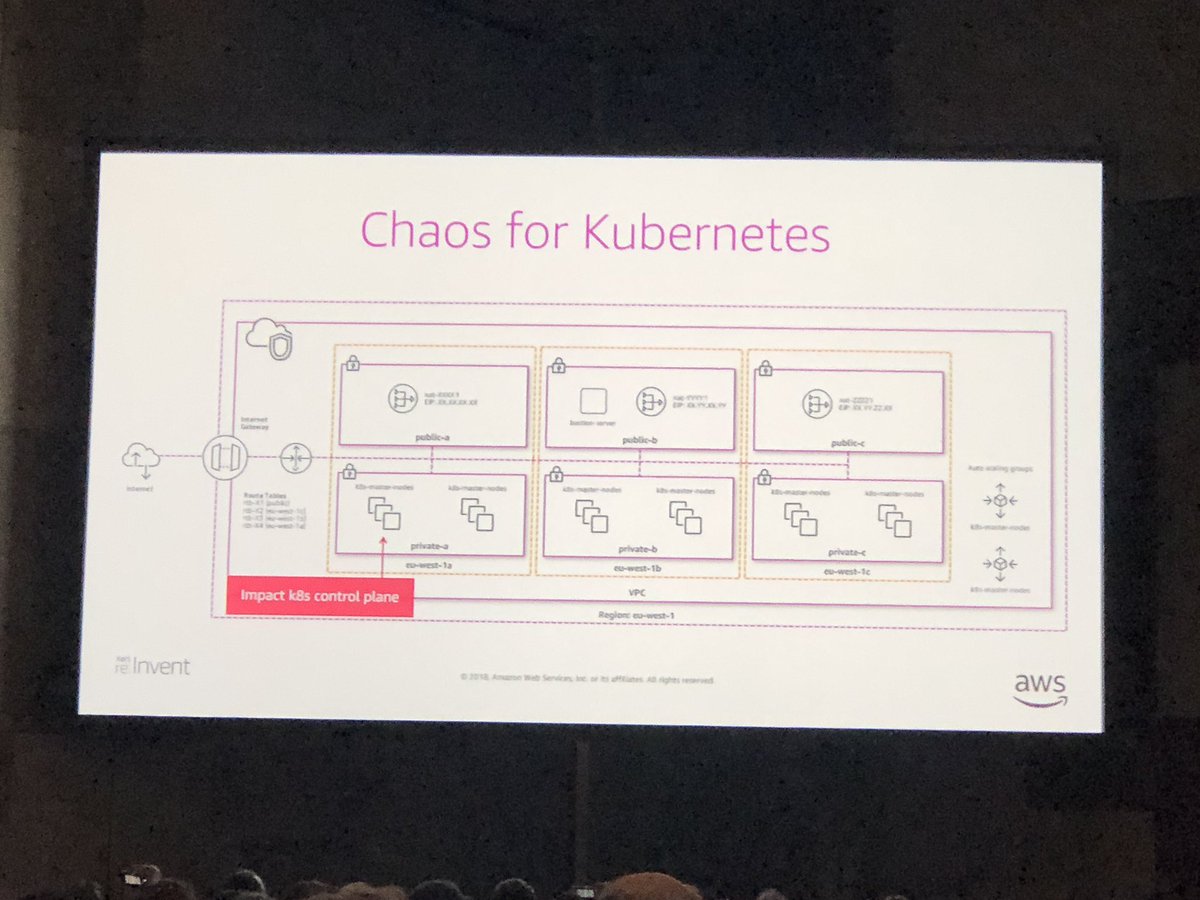

- Infrastructure

#reInvent

- Application level replication

- Structured database replication

- Storage block level replication

- Past: disaster recovery

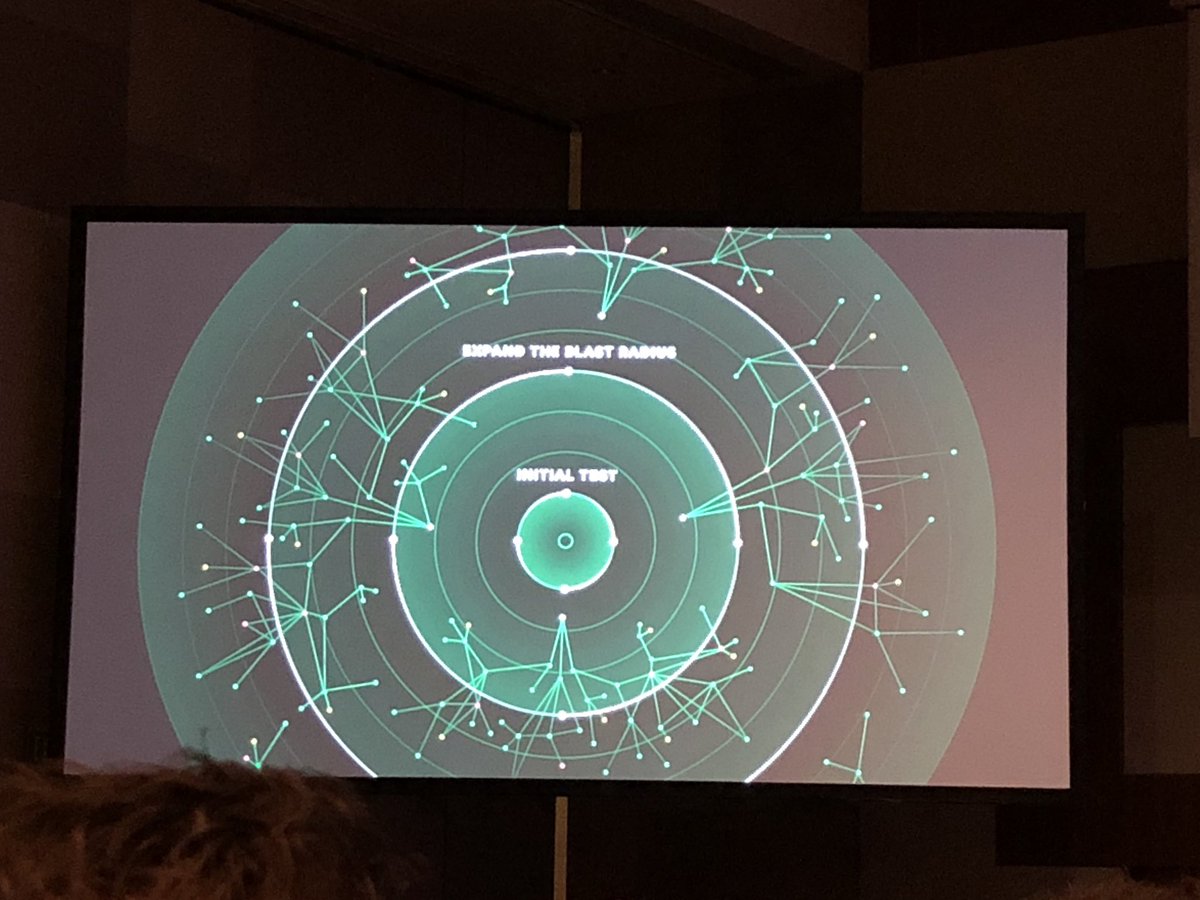

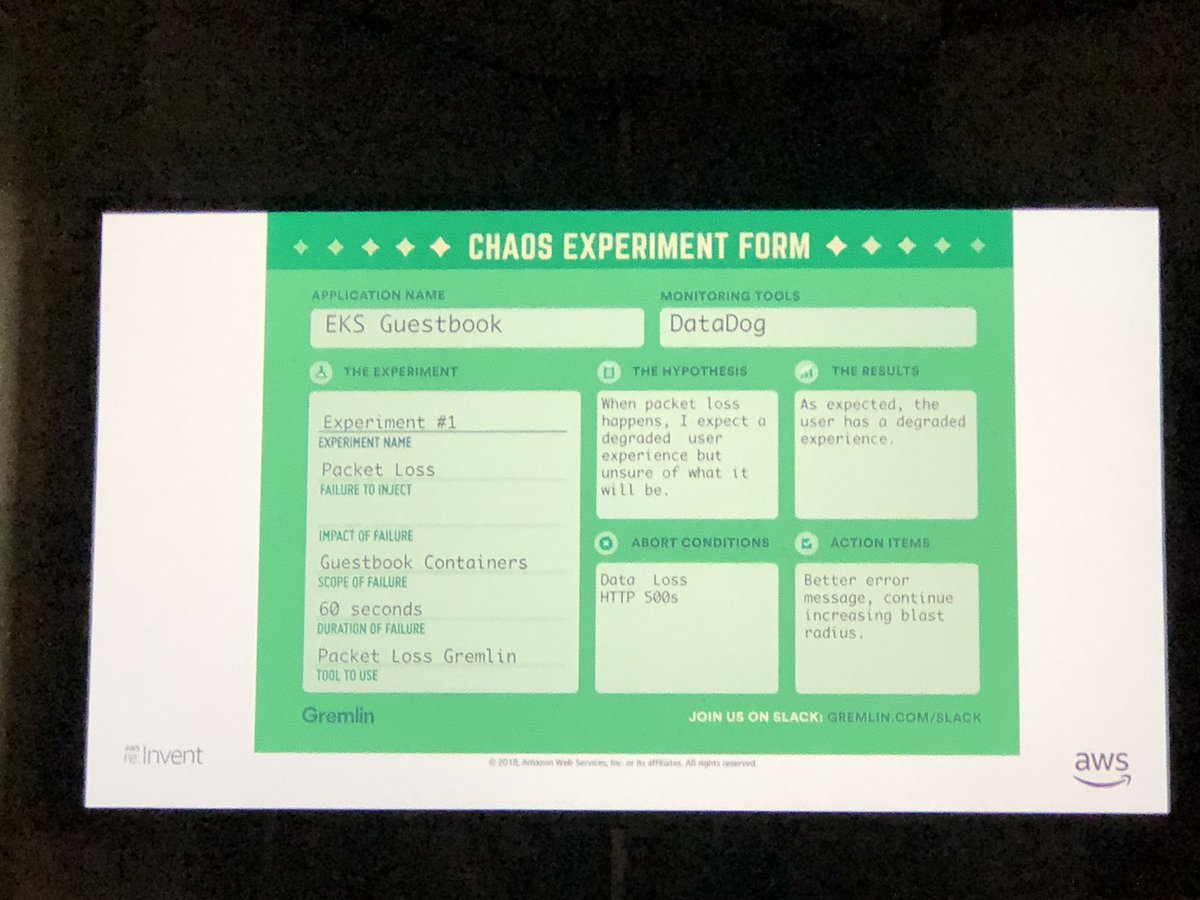

- Now: Chaos engineering

- Future: Resilient critical systems

It will be interesting to hear the difference between Now and Future.

- Started with Sungard & mainframe batch; core focus on Recovery Point Objectives (time interval between snapshots) and Recovery Time Objectives (time taken to recover after a failure)

- AWS Isolation Model: No global network or service dependencies; AZ geographical separation with synchronous replication
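To make the two recovery objectives in these notes concrete, here is a minimal sketch of checking one incident against an RPO and an RTO. The snapshot schedule, the timestamps, the targets, and the function names are all made up for illustration; none of them come from the talk.

```python
from datetime import datetime, timedelta


def data_loss_window(snapshot_times, failure_time):
    """Time between the last snapshot taken before the failure and the failure itself."""
    earlier = [t for t in snapshot_times if t <= failure_time]
    if not earlier:
        raise ValueError("no snapshot precedes the failure")
    return failure_time - max(earlier)


def recovery_time(failure_time, restored_time):
    """Elapsed time from the failure until service was restored."""
    return restored_time - failure_time


# Hypothetical incident: snapshots every 6 hours, failure mid-afternoon.
snapshots = [datetime(2018, 11, 27, hour) for hour in (0, 6, 12, 18)]
failure = datetime(2018, 11, 27, 14, 30)
restored = datetime(2018, 11, 27, 16, 0)

# Hypothetical targets.
rpo = timedelta(hours=6)  # at most 6 hours of data may be lost
rto = timedelta(hours=2)  # service must be back within 2 hours

data_loss = data_loss_window(snapshots, failure)
downtime = recovery_time(failure, restored)

meets_rpo = data_loss <= rpo
meets_rto = downtime <= rto
print(f"data loss {data_loss}, downtime {downtime}, RPO ok: {meets_rpo}, RTO ok: {meets_rto}")
```

With snapshots taken every six hours, the worst-case data loss approaches the full six-hour interval, which is why the snapshot interval effectively bounds the achievable RPO.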

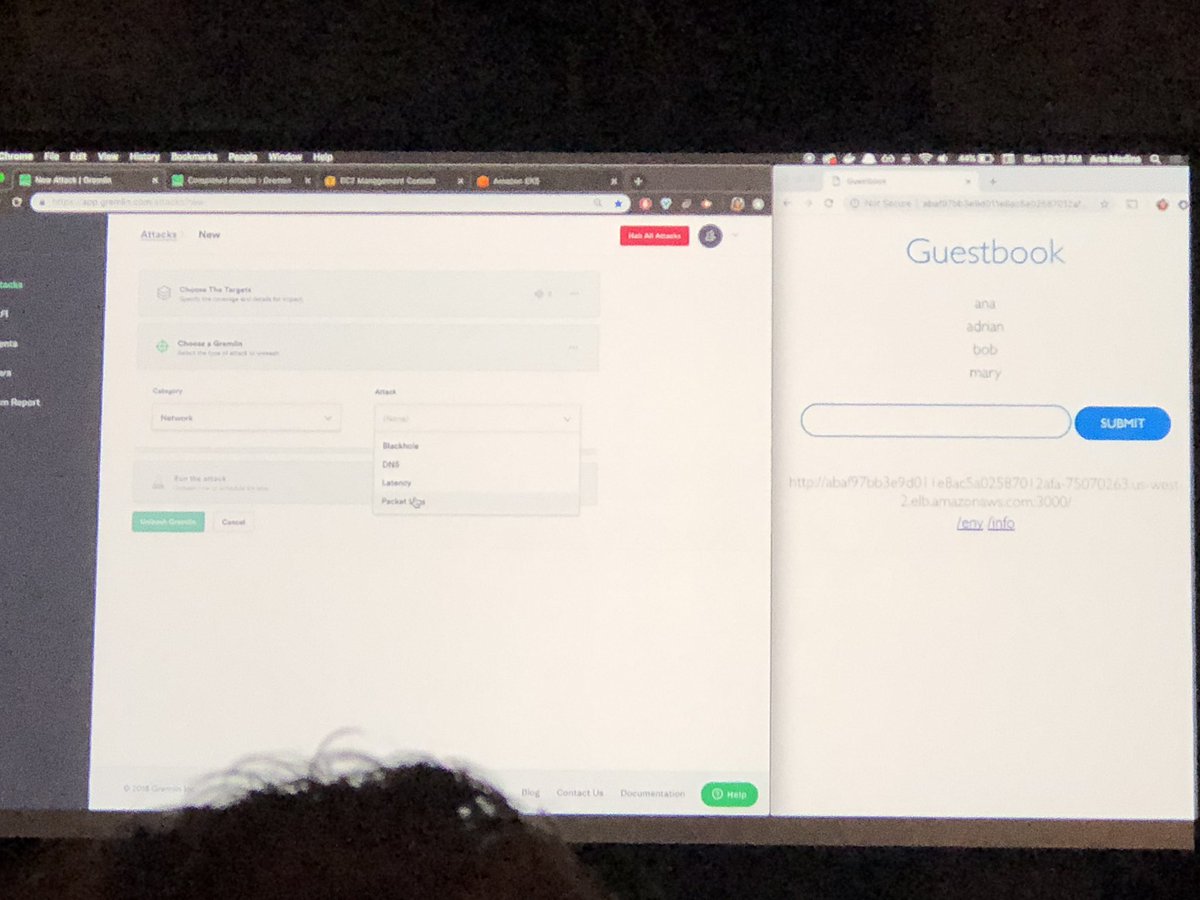

AWS Mechanisms: fault injection queries, simulating region failure via IAM region restriction
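One way the IAM region restriction could look is a deny policy keyed on the `aws:RequestedRegion` global condition key. The sketch below only builds the policy document; the helper name, the `Sid`, and the choice of region are illustrative assumptions, and the talk did not show the exact policy used.

```python
import json


def deny_region_policy(region):
    """Build an IAM policy document that denies every request aimed at one region."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "SimulateRegionFailure",  # hypothetical label
                "Effect": "Deny",
                "Action": "*",
                "Resource": "*",
                "Condition": {
                    "StringEquals": {"aws:RequestedRegion": region}
                },
            }
        ],
    }


# Hypothetical choice of region for the experiment.
policy = deny_region_policy("us-east-1")
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Attaching a policy like this to the roles used by a test workload makes calls to that region fail with access-denied errors, which approximates the region being unreachable from the application's point of view. It does not reproduce network-level symptoms such as timeouts, so it is best treated as a coarse first experiment.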

- Linux leap second bug

- Sun SPARC cache bit-flip

- Cloud zone or region failure

- DNS failure (ok definitely seen this plenty of times - there's a great haiku about this)

Make sure to check out @ChaosConf for more details!