What do you think the sensitivity of the device is for an episode of AF?

(pick closest estimate)

(It may or may not have the answer)

Depending on time/interest, we will cover:

1. Diagnostic accuracy

2. Bias

3. Costs of trials

Let's start here.

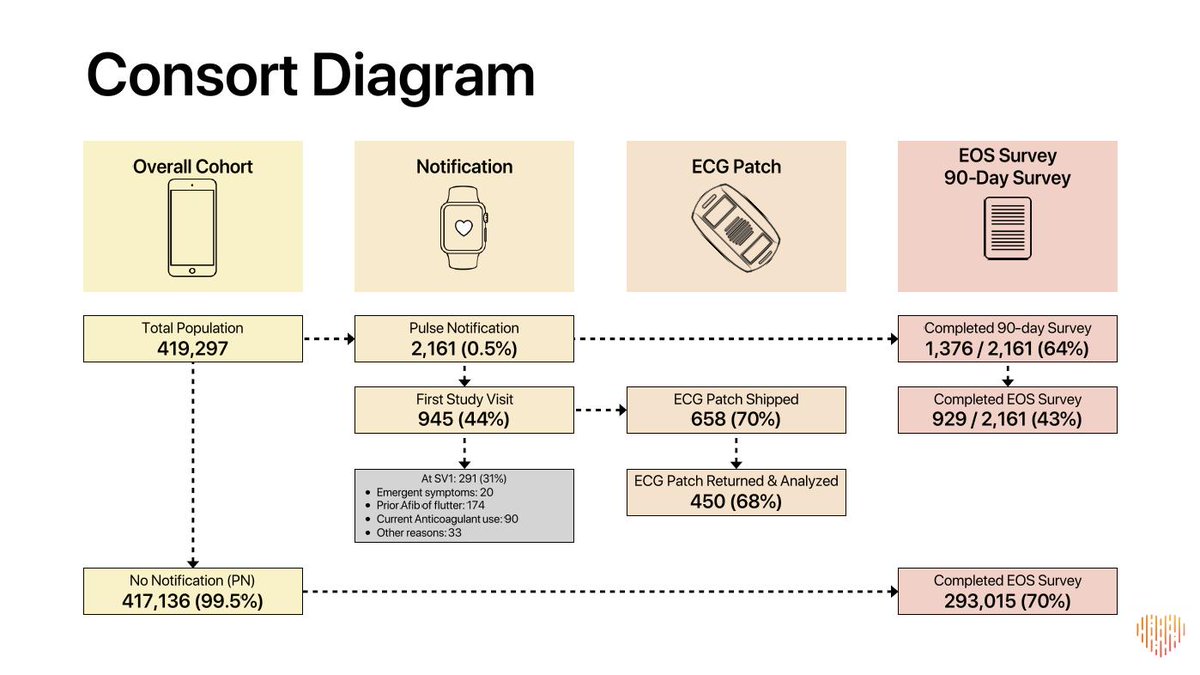

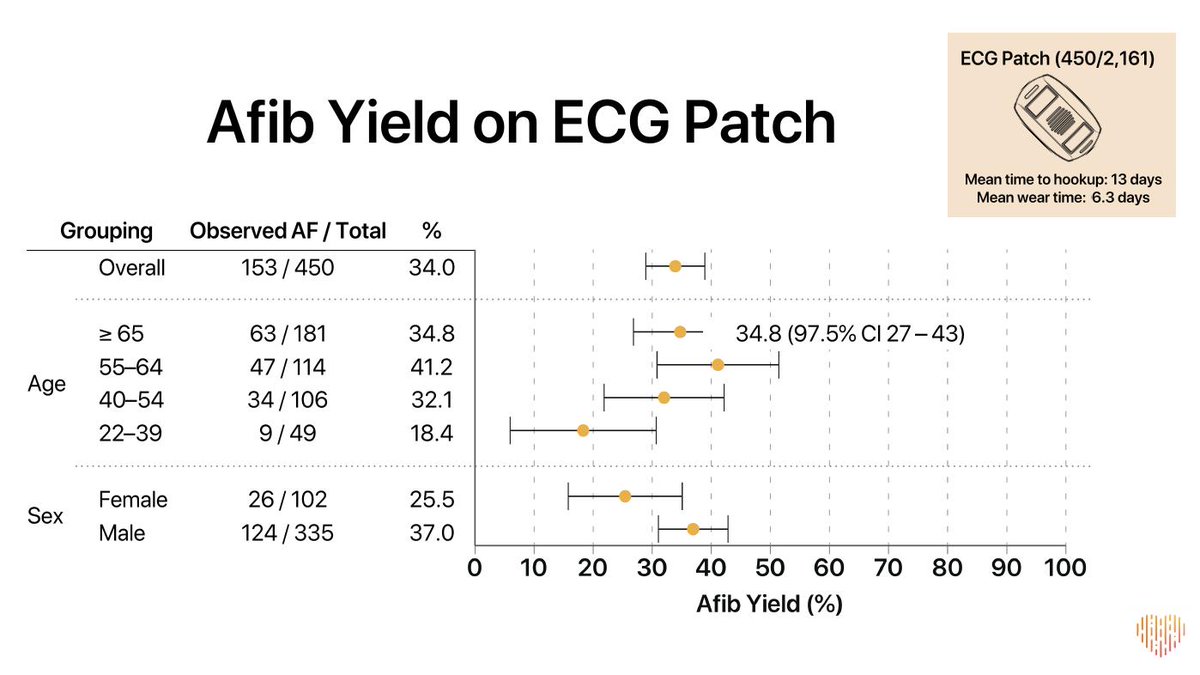

What is the prevalence of AF in the population who wore an ECG patch?

It's 34% (153 / 450). That is pretty high!

The answer is 450 (total patches) - 153 (with AF) = 297 without AF

This number is the total of True Negatives + False Positives

The answer is on this slide:

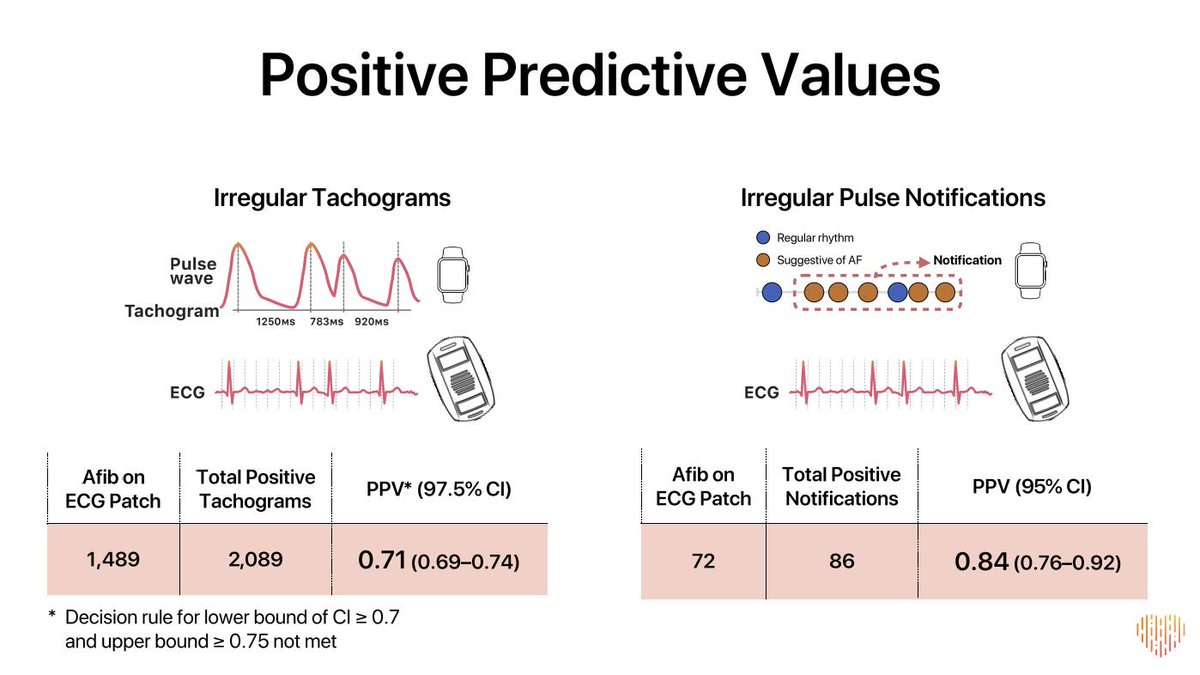

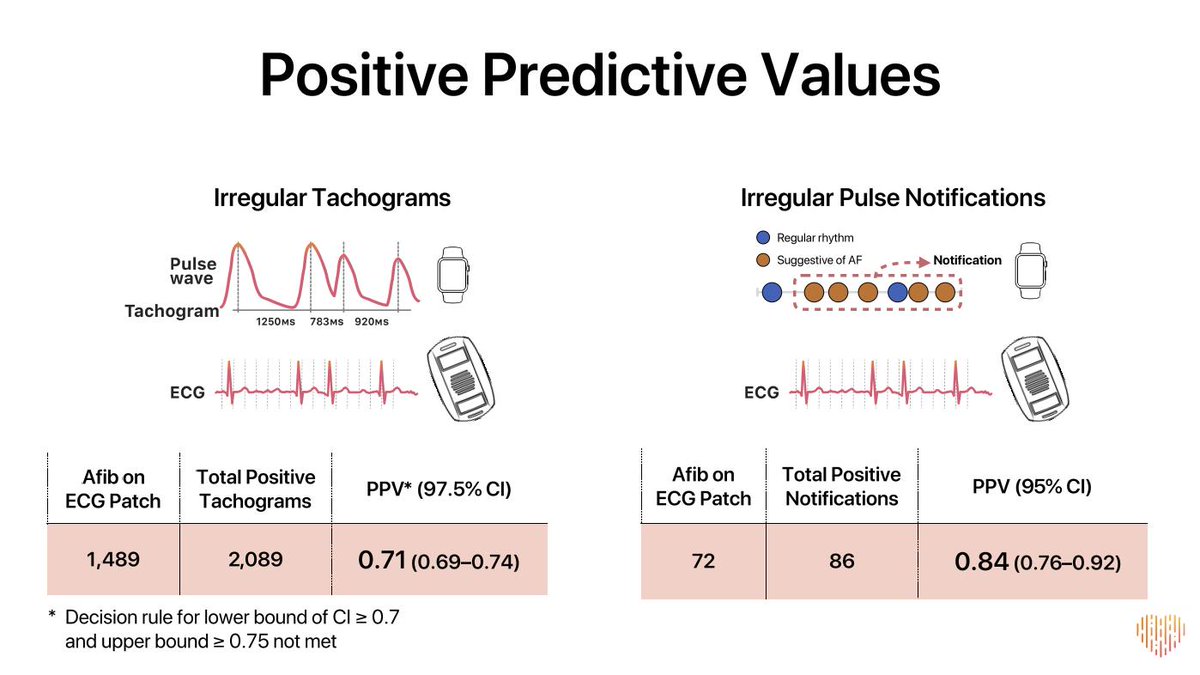

Total notifications of 86 MINUS 72 confirmed on patch (True Positives)

So there were 14 FALSE POSITIVES

True negatives = 297 - 14 = 283

False positives = 14

True positives = 72

Now we just need the false negatives....

False negatives = 153 (with AF) - 72 (True Positives) = 81. That is astoundingly large to me.
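Here is a minimal sketch of how the four cells of the 2x2 table fall out of the counts quoted in this thread. The variable names are mine, and I'm treating the ECG patch as the reference standard and the watch notification as the test being evaluated.

```python
# Counts quoted in the thread.
total_patches = 450        # patches with usable data
patches_with_af = 153      # patches that showed AF (reference standard positive)
notifications = 86         # watch notifications while the patch was on
confirmed_on_patch = 72    # notifications where the patch also showed AF

# Derive the four cells of the 2x2 table.
true_positives = confirmed_on_patch
false_positives = notifications - true_positives
false_negatives = patches_with_af - true_positives
true_negatives = (total_patches - patches_with_af) - false_positives

print("TP:", true_positives)
print("FP:", false_positives)
print("FN:", false_negatives)
print("TN:", true_negatives)
```

The four cells should add back up to the 450 patches, which is a quick sanity check on the arithmetic.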

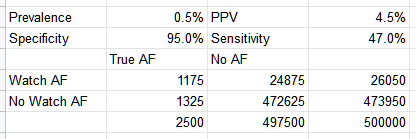

So that means the sensitivity is 72 / (72 + 81) = 47%

There were 283 TRUE NEGATIVES and 14 FALSE POSITIVES

This means specificity = 283 / (283 + 14) = 95%
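The same two calculations as a small sketch, using the cell counts from this thread. The function name is hypothetical, just for illustration.

```python
def sensitivity_specificity(tp: int, fn: int, tn: int, fp: int) -> tuple[float, float]:
    """Return (sensitivity, specificity) from the four cells of a 2x2 table."""
    sensitivity = tp / (tp + fn)   # of those with AF, how many got a notification
    specificity = tn / (tn + fp)   # of those without AF, how many got no notification
    return sensitivity, specificity


# Cell counts from the thread: TP=72, FN=81, TN=283, FP=14.
sens, spec = sensitivity_specificity(tp=72, fn=81, tn=283, fp=14)
print(f"sensitivity = {sens:.0%}")
print(f"specificity = {spec:.0%}")
```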

That is pretty good, but not great for screening low-risk people.

For round numbers, this is about 350k people!

I'm going to guess 0.5% or 2500 out of every 500,000 people

That is awfully low in my mind. Some may have chosen to get care outside of the study, others may have just blown the whole thing off.

Well, young people (numerically by far the biggest group in the study at 220k) were only 61% as likely to wear a patch after a notification as those >=65 (N=24.6K)

This is okay if you plan to use it in symptomatic pts, where this kind of bias is good. But not so good for screening the masses.

They certainly didn't change the algorithm mid-study (I hope!)

The truth is probably in between the two.

This trial was called big and inexpensive. Heralded by many as a new frontier in how to do science.

Dropout that is almost certainly not random (meaning it will bias the numbers in one direction or another).

It is done over 450 patients (153 who had AF with the patch on).

Per patient (over 450 patients where we have any truth at all), that is $18,200 per patient.

Is that cheap? Not to me.

I got a very nice email from Sanjay Kaul, pointing out that an ITT analysis of the @Apple Heart Study would have miserably poor results due to the loss to follow-up. This has major negative implications for using this in RCTs.

1. Very poor sensitivity, probably around 50%-ish or worse (given biases)

2. Excellent specificity, probably 95%-ish or higher

3. Terrible PPV in all comers
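To see why the PPV falls apart in all comers, here is a rough sketch using Bayes' rule with the sensitivity (47%) and specificity (95%) estimated above. The 0.5% prevalence is purely an illustrative assumption for a low-risk population, not a measured number, and the function name is mine.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' rule."""
    true_pos_rate = sensitivity * prevalence
    false_pos_rate = (1 - specificity) * (1 - prevalence)
    return true_pos_rate / (true_pos_rate + false_pos_rate)


SENS, SPEC = 0.47, 0.95  # rounded estimates from this thread

# 0.34 is the AF prevalence among people who wore a patch;
# 0.005 is an ASSUMED prevalence for unselected, low-risk people.
for prevalence in (0.34, 0.005):
    print(f"prevalence {prevalence:.1%}: PPV = {ppv(SENS, SPEC, prevalence):.1%}")
```

With the same test characteristics, the PPV is high in the enriched patch cohort and drops to a few percent once the prevalence is that low. That is the whole problem with screening the masses.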