Software often forces even simpler, more rigid schemas.

But deep learning makes fuzzy human ideas computable. Can it reverse the trend?

This causes grave harms, as well as plain missed opportunities.

A mundane example I was reading about today: Google making assumptions about names that harm people whose names are outliers.

"What is a dog?" is actually a very fuzzy, wiggly concept.

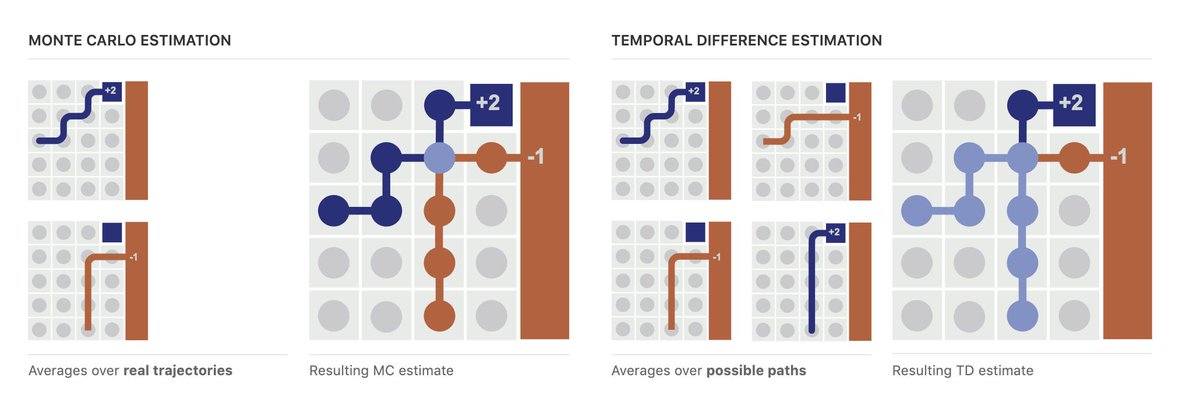

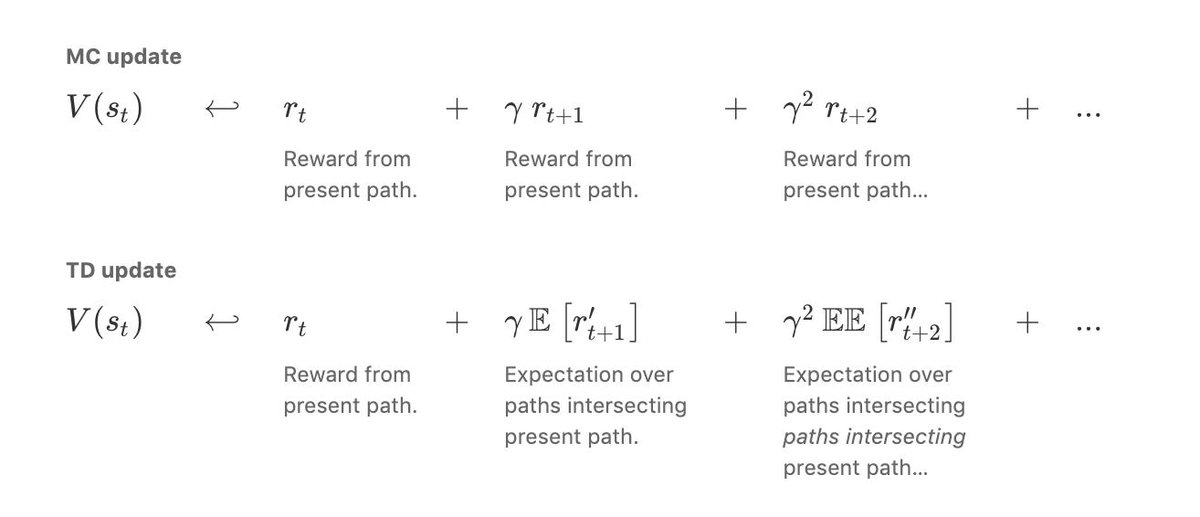

And so neural networks take all these things that previously couldn't be systematized and begin to make them computationally accessible.

Can we enable more understanding, flexible, and humane governments that don't try to fit the world into simple boxes?

But that's just scratching the surface. The really exciting thing is the representations they form, full of rich abstractions.

Deep Learning & Human Beings: colah.github.io/posts/2015-01-…

Artificial Intelligence Augmentation: distill.pub/2017/aia/