(1/n)

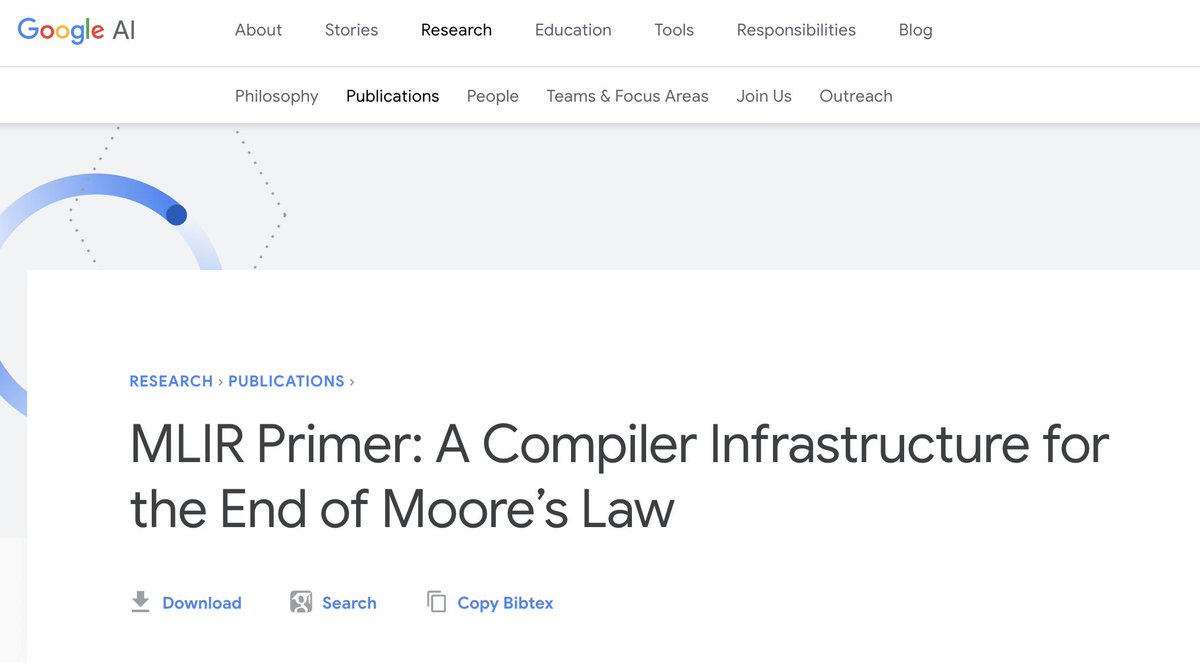

For more technical details, see: ai.google/research/pubs/…

@GoogleAI's TPUs are an example of this, sure; but you also have @Apple's specialized chips for iPhones, @BaiduResearch's Kunlun, and literally *thousands* of others.

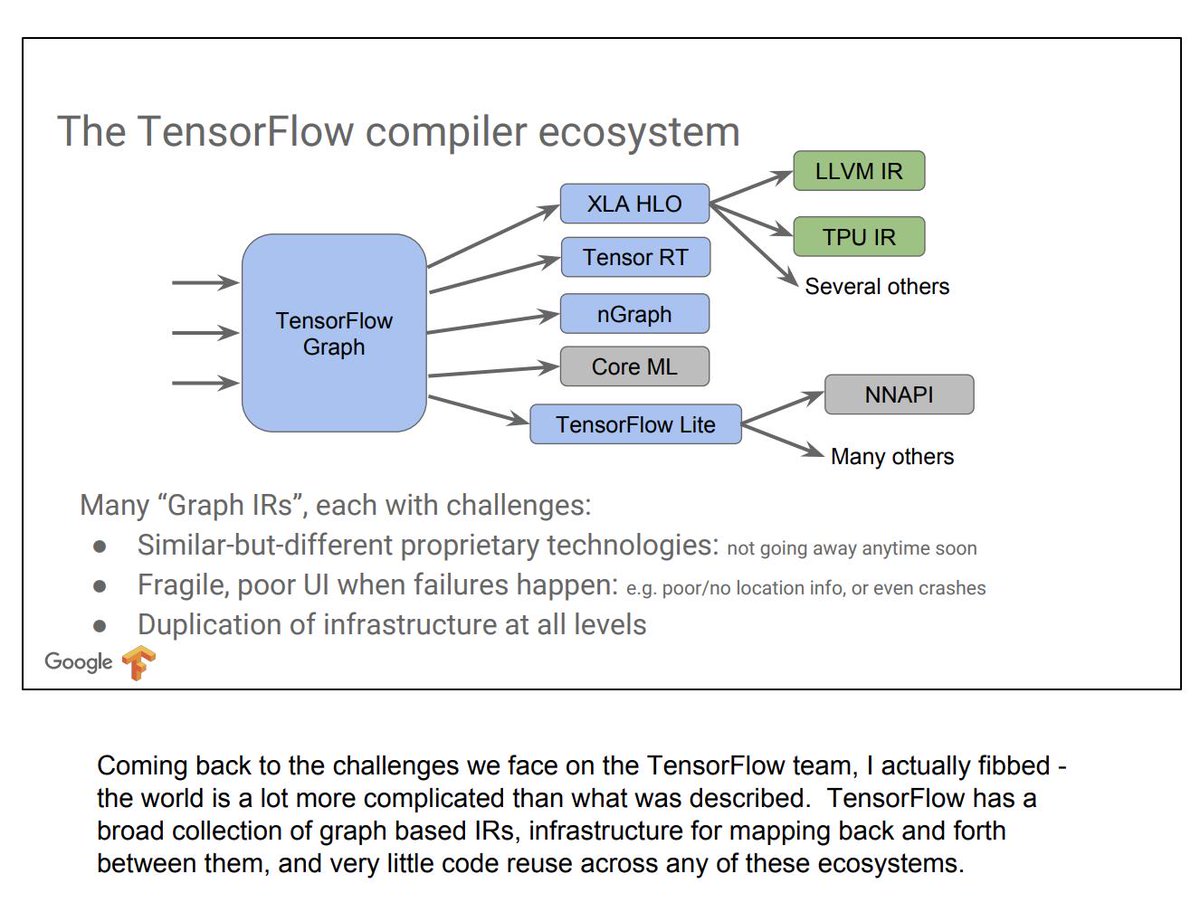

The way that you program for

(2/n)

Developer-facing tooling is essentially non-existent (or quite bad, even for TPUs); many models are built, and then found to be impossible to deploy to edge hardware; etc.

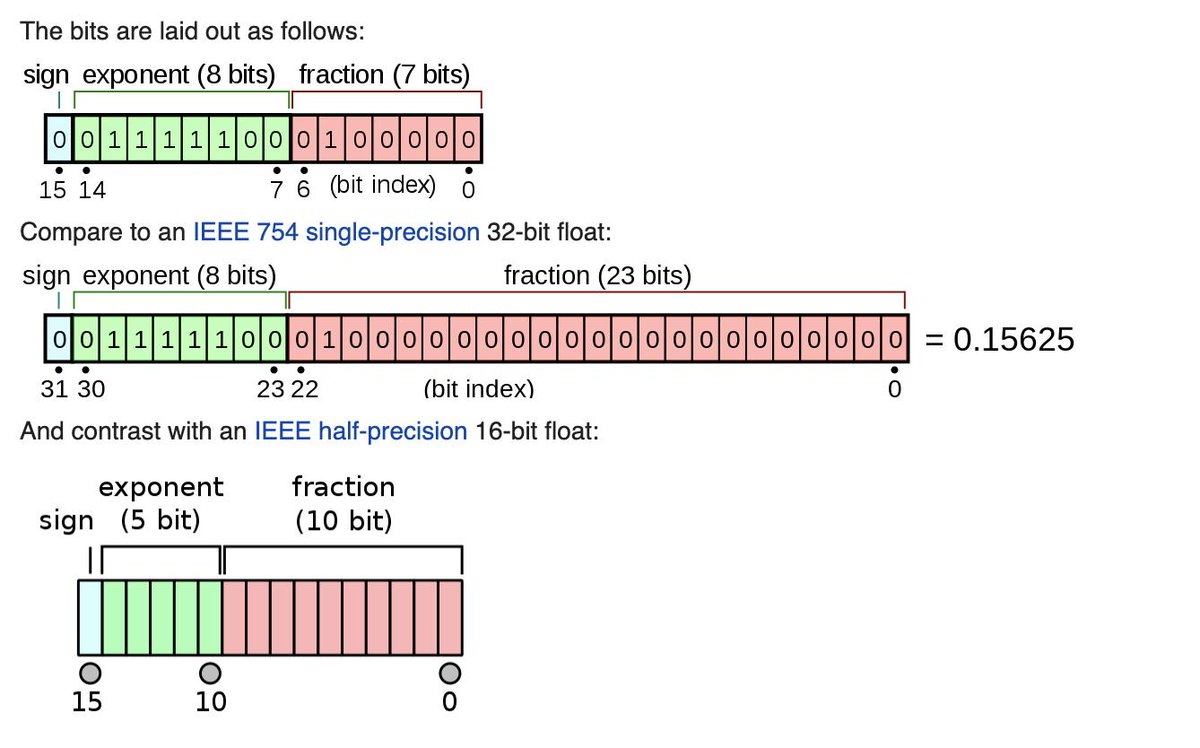

You also have very rad, but specialized types (e.g., bfloat16).
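If bfloat16 is new to you: it keeps float32's sign bit and all 8 exponent bits, but only 7 mantissa bits, so it is effectively the top half of a float32. Here's a rough sketch of that idea in plain Python. The helper names are made up for illustration, and it truncates instead of rounding, which real hardware and libraries may not do.

```python
import struct


def float32_bits(x: float) -> int:
    """Return the IEEE 754 float32 bit pattern of x as an unsigned 32-bit int."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def to_bfloat16_bits(x: float) -> int:
    """Keep only the top 16 bits of the float32 pattern.

    Assumption: plain truncation, no rounding. That is the simplest
    conversion, not necessarily what a given chip or library does.
    """
    return float32_bits(x) >> 16


def from_bfloat16_bits(bits: int) -> float:
    """Expand a 16-bit bfloat16 pattern back to a float by zero-filling the low bits."""
    return struct.unpack(">f", struct.pack(">I", (bits & 0xFFFF) << 16))[0]


def roundtrip(x: float) -> float:
    """Convert to bfloat16 and back, to show how much precision is lost."""
    return from_bfloat16_bits(to_bfloat16_bits(x))


if __name__ == "__main__":
    for value in (1.0, 3.14159, 1e30):
        print(value, "->", roundtrip(value))
```

The range stays the same as float32 (same exponent width), so a value like 1e30 survives the trip; what you give up is precision, roughly two to three decimal digits.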

(3/n)

For @Google, it's saved us from increasing our number of data centers by a considerable amount ($$). It's also unlocked research for @DeepMindAI that would have been impossible.

(4/n)

Imagine trying to deploy a machine learning-enabled app to @Android phones. You'd have to know which ops could be accelerated *for each device*; which to run on GPU, and which to kick back to CPU.

It's crazypants.

(5/n)

As programmers, none of us should have to be concerned about this nonsense. We should be able to

(6/n)

Anything not runnable on GPU/TPU could be punted back to the CPU.
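To make the "punt back to CPU" idea concrete, here's a toy sketch of op placement with a CPU fallback. Everything in it is hypothetical (the capability table, the op names, `place_ops`); it is not how MLIR or TensorFlow actually assign ops, just the shape of the decision.

```python
from typing import Dict, List, Set

# Hypothetical capability table: which ops each accelerator can run.
# Real devices expose far richer information than a set of names.
SUPPORTED_OPS: Dict[str, Set[str]] = {
    "tpu": {"matmul", "conv2d"},
    "gpu": {"matmul", "conv2d", "relu"},
}


def place_ops(ops: List[str], preferred: List[str]) -> Dict[str, str]:
    """Assign each op to the first preferred device that supports it.

    Any op that no listed accelerator supports falls back to "cpu".
    """
    placement: Dict[str, str] = {}
    for op in ops:
        placement[op] = next(
            (dev for dev in preferred if op in SUPPORTED_OPS.get(dev, set())),
            "cpu",  # fallback when no accelerator can run this op
        )
    return placement


if __name__ == "__main__":
    model_ops = ["matmul", "relu", "string_split"]
    print(place_ops(model_ops, preferred=["tpu", "gpu"]))
```

The point is that this table lookup is something a compiler stack can do for you per device, so nobody has to hand-maintain it for every phone or chip.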

(7/n)

You can think of MLIR as a layer on top of LLVM that lets you focus on writing high-level code, instead of worrying about how to optimize ops or where to deploy them.

(8/n)

If you're interested in these things (I hope you are!), join in our open design meetings / mailing lists:

MLIR: groups.google.com/a/tensorflow.o…

#S4TF: groups.google.com/a/tensorflow.o…

Mehdi's LLVM tutorial on MLIR (April 2019): llvm.org/devmtg/2019-04…

Chris Lattner and Tatiana's keynote: llvm.org/devmtg/2019-04…

Chris' podcast with @lexfridman:

Rasmus + Tatiana's @Stanford course: web.stanford.edu/class/cs245/sl…

(fin)