It'll be in this thread :)

We'd like to make everything between the {% %} transparent; that's the goal.

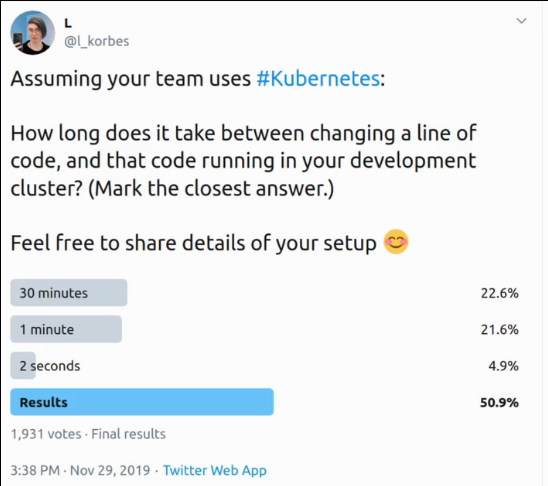

... Unfortunately, as their poll below shows, for almost half of the people who answered the question, that's close to 30 minutes. We need to do better!

(To clarify: while developing, we shouldn't rely on a "save, wait for CI, check results" loop, because that's just too damn slow.)

CI solves a different class of problems.

💯

This is the repo with the sample code for the app that they'll use:

github.com/windmilleng/en…

Build time was about 20s, so we get a 20s loop when working locally.

However, when working with a remote cluster, it takes almost 1 minute (because of push/pull time).
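(Quick back-of-the-envelope on what those loop times mean, as a sketch: the function name is made up for illustration, and "almost 1 minute" is rounded up to 60s here.)

```python
def iterations_per_hour(loop_seconds: float) -> float:
    """How many edit/build/check loops fit in one hour at a given loop time."""
    return 3600 / loop_seconds

# 20s is the local loop quoted above; 60s is an assumed rounding of "almost 1 minute".
for label, seconds in [("local cluster", 20), ("remote cluster", 60)]:
    print(f"{label}: ~{iterations_per_hour(seconds):.0f} iterations/hour")
```

So the push/pull overhead alone cuts the number of possible iterations to roughly a third.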

(Shameless plug for my series of blog posts about optimizing image size: ardanlabs.com/blog/2020/02/d…)

But can we see the compiler cache ... without having to copy it around?

We move to a whole new class of techniques involving "hot reload", where instead of creating new containers, we keep the same container.

(Garden calls this "hot reload"; Skaffold calls this "file sync"; there are also tools entirely dedicated to this operation, like ksync!)

- the build tooling in the container

- the compute resources to actually do the compilation

So ... can we work around this?

(Yes)

If you're using a local cluster (minikube, kind, Docker Desktop, microk8s...):

-> compile locally and push the binary to the local cluster

If you're using a remote cluster:

-> if you have extra resources, sync the code and build remotely

-> else, push a compressed binary
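
(The decision above can be sketched as a tiny function. This is only an illustration: the function name, the parameter names and the returned strings are invented here and don't come from any of the tools mentioned.)

```python
def pick_strategy(cluster_is_local: bool, has_extra_resources: bool = False) -> str:
    """Return the workflow suggested above for a given setup.

    Both parameters are hypothetical flags: whether the cluster is local
    (minikube, kind, ...) and whether extra resources are available for
    building remotely.
    """
    if cluster_is_local:
        return "compile locally, push binary to local cluster"
    if has_extra_resources:
        return "sync code, build remotely"
    return "push compressed binary"


print(pick_strategy(cluster_is_local=True))
print(pick_strategy(cluster_is_local=False, has_extra_resources=True))
print(pick_strategy(cluster_is_local=False))
```

(The local-cluster check comes first because in that case the extra-resources question doesn't matter.)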