blog.cardiogr.am/detecting-atri…

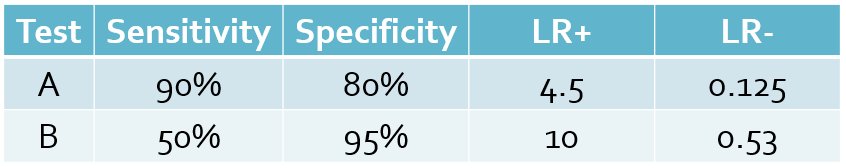

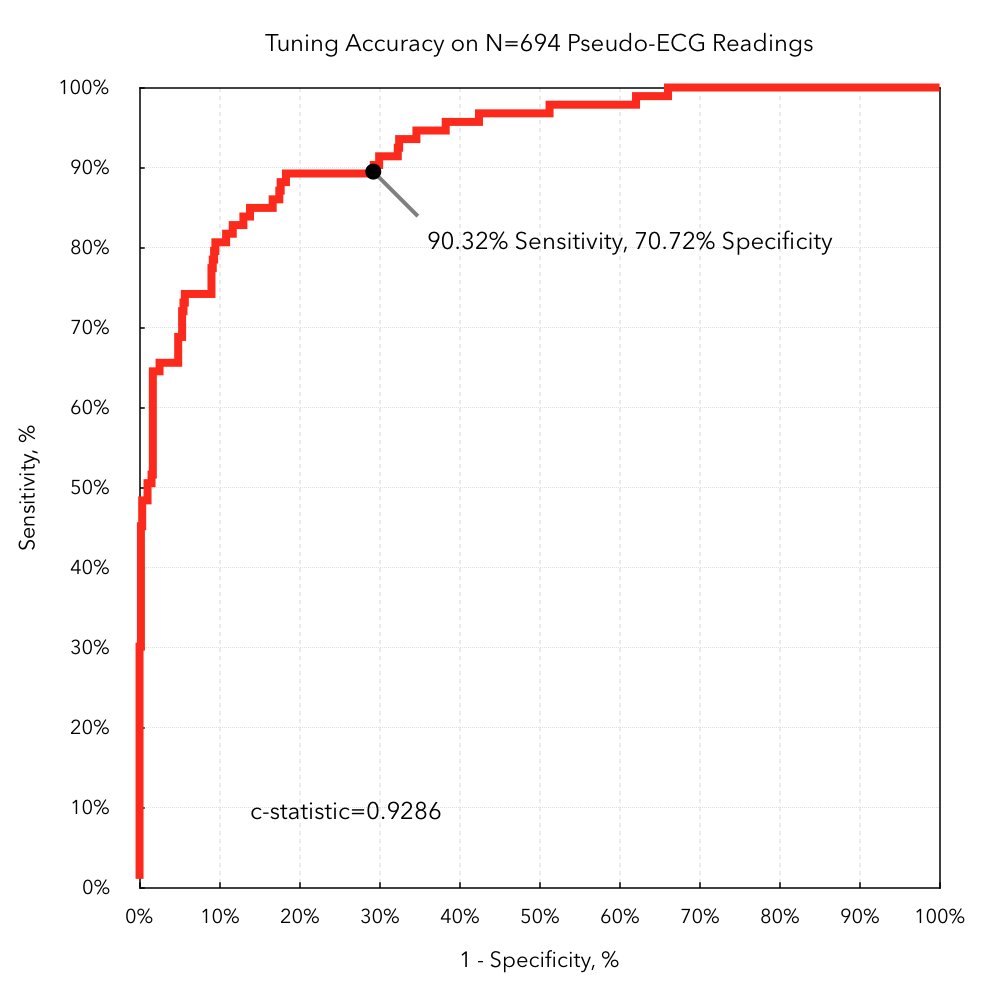

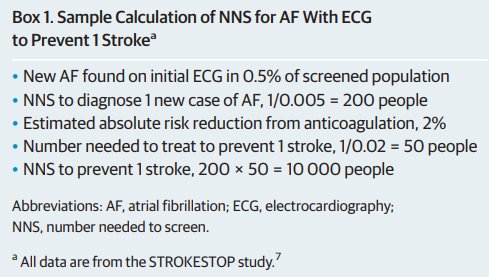

- 90% sensitivity

- 80% specificity

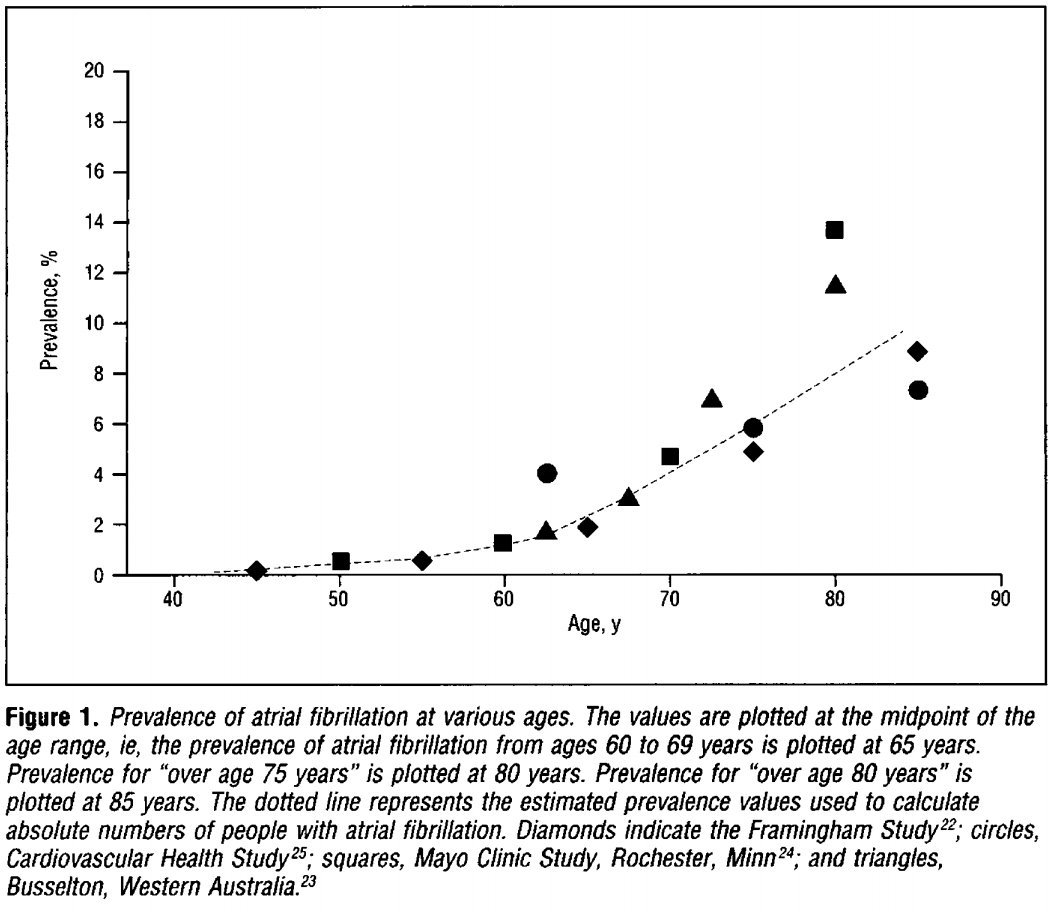

- 0.5% age-based prevalence

Sensitivity = P(Test+ | Disease+)

Specificity = P(Test- | Disease-)

Prevalence = P(Disease+)

Positive Predictive Value = P(Disease+ | Test+)

Negative Predictive Value = P(Disease- | Test-)
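A minimal Python sketch of these definitions. It computes PPV and NPV directly from Bayes' theorem, using the deck's numbers (90% sensitivity, 80% specificity, 0.5% prevalence). The function name `predictive_values` is hypothetical and chosen only for illustration.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) computed directly with Bayes' theorem (hypothetical helper)."""
    # P(Test+) = P(Test+|Disease+)P(Disease+) + P(Test+|Disease-)P(Disease-)
    p_test_pos = sensitivity * prevalence + (1 - specificity) * (1 - prevalence)
    p_test_neg = 1 - p_test_pos
    # PPV = P(Disease+|Test+) = P(Test+|Disease+)P(Disease+) / P(Test+)
    ppv = sensitivity * prevalence / p_test_pos
    # NPV = P(Disease-|Test-) = P(Test-|Disease-)P(Disease-) / P(Test-)
    npv = specificity * (1 - prevalence) / p_test_neg
    return ppv, npv


ppv, npv = predictive_values(0.90, 0.80, 0.005)
print(f"PPV = {ppv:.4f}, NPV = {npv:.4f}")
```

With these inputs the PPV comes out near 2.2% and the NPV near 99.9%. Because the disease is rare, most positive results are false positives.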

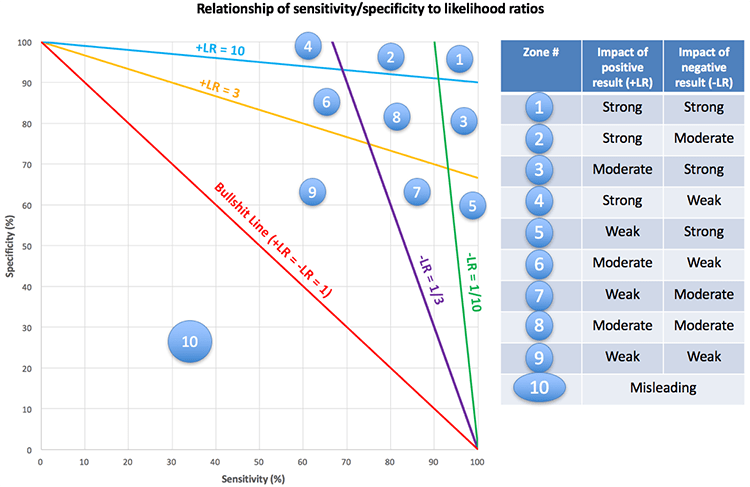

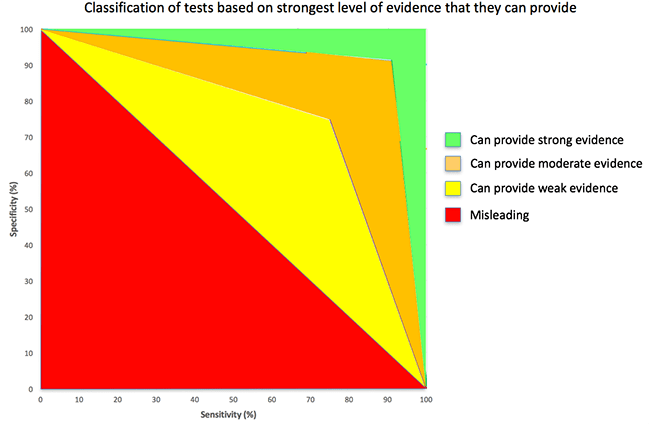

Positive Likelihood Ratio (LR+) = Sensitivity / (1-Specificity)

Negative Likelihood Ratio (LR-) = (1-Sensitivity) / Specificity

Diagnostic Odds Ratio = LR+ / LR-

Post-test Odds = Pre-test Odds x Likelihood Ratio

(Use LR+ or LR- depending on test result)

(Multiple LRs can be chained by multiplying them together, assuming the tests are conditionally independent given disease status)

Post-test Prob = Post-test Odds / (1 + Post-test Odds)
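The same formulas can be written as small Python helpers and applied to the 90% / 80% test. This is a sketch only: the helper names are hypothetical and do not come from any library.

```python
# Hypothetical helper names; formulas follow the definitions above.

def positive_lr(sensitivity: float, specificity: float) -> float:
    """LR+ = Sensitivity / (1 - Specificity)."""
    return sensitivity / (1 - specificity)


def negative_lr(sensitivity: float, specificity: float) -> float:
    """LR- = (1 - Sensitivity) / Specificity."""
    return (1 - sensitivity) / specificity


def prob_to_odds(p: float) -> float:
    """Odds = p / (1 - p)."""
    return p / (1 - p)


def odds_to_prob(odds: float) -> float:
    """Prob = Odds / (1 + Odds)."""
    return odds / (1 + odds)


lr_pos = positive_lr(0.90, 0.80)   # ~4.5
lr_neg = negative_lr(0.90, 0.80)   # ~0.125
dor = lr_pos / lr_neg              # diagnostic odds ratio, ~36
print(f"LR+ = {lr_pos:.3f}, LR- = {lr_neg:.3f}, DOR = {dor:.1f}")

pre_odds = prob_to_odds(0.005)     # 0.5% prevalence expressed as odds (5:995)
print(f"pre-test odds = {pre_odds:.5f}, back to prob = {odds_to_prob(pre_odds):.3%}")
```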

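$$
\text{Pre-test Odds} = \frac{P(\text{Disease+})}{P(\text{Disease-})}
$$

Since 1 - Specificity = P(Test+|Disease-), LR+ = P(Test+|Disease+) / P(Test+|Disease-). Therefore:

$$
\begin{aligned}
\text{Pre-test Odds} \times \text{LR+}
&= \frac{P(\text{Disease+}) \times P(\text{Test+} \mid \text{Disease+})}{P(\text{Disease-}) \times P(\text{Test+} \mid \text{Disease-})} \\
&= \frac{P(\text{Disease+} \mid \text{Test+}) \times P(\text{Test+})}{P(\text{Disease-} \mid \text{Test+}) \times P(\text{Test+})} \\
&= \frac{P(\text{Disease+} \mid \text{Test+})}{P(\text{Disease-} \mid \text{Test+})} = \text{Post-test Odds}
\end{aligned}
$$

The middle step applies Bayes' theorem, P(Disease) x P(Test|Disease) = P(Disease|Test) x P(Test) (en.wikipedia.org/wiki/Bayes%27_…). After that, the common factor P(Test+) cancels.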

P(Disease+|Test+) = Post-Test Odds / (Post-Test Odds + 1)
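The derivation can be sanity-checked numerically. This sketch compares two routes to P(Disease+|Test+). The first is the odds route: pre-test odds x LR+, converted back to a probability. The second applies Bayes' theorem directly. The check runs over a small grid of sensitivity, specificity and prevalence values. Both the grid and the function names are arbitrary choices made for illustration.

```python
import itertools


def ppv_direct(sens: float, spec: float, prev: float) -> float:
    # P(Disease+|Test+) straight from Bayes' theorem
    return sens * prev / (sens * prev + (1 - spec) * (1 - prev))


def ppv_via_odds(sens: float, spec: float, prev: float) -> float:
    pre_odds = prev / (1 - prev)                # P(Disease+) / P(Disease-)
    post_odds = pre_odds * sens / (1 - spec)    # multiply by LR+
    return post_odds / (post_odds + 1)          # odds back to probability


grid = [0.05, 0.3, 0.5, 0.8, 0.95]
for sens, spec, prev in itertools.product(grid, repeat=3):
    assert abs(ppv_direct(sens, spec, prev) - ppv_via_odds(sens, spec, prev)) < 1e-12
print("odds form agrees with Bayes' theorem on every grid point")
```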

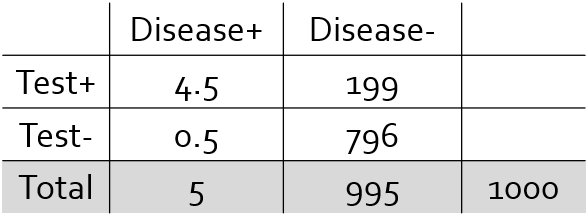

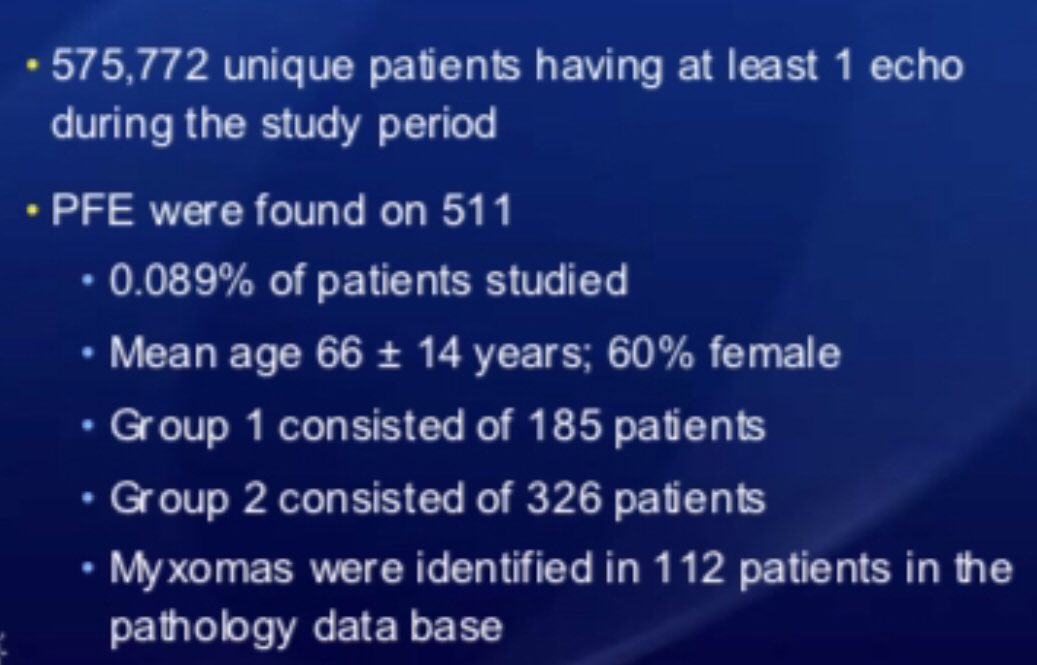

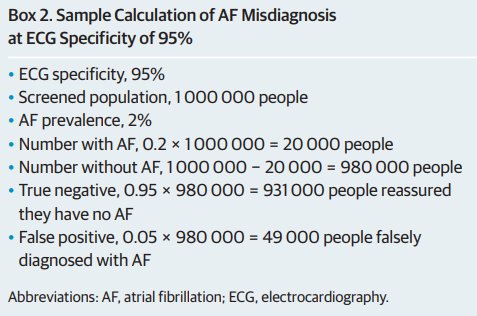

Pre-Test Probability = 0.5%

Pre-Test Odds = 5:995 ~ 0.005

Positive Likelihood Ratio = 0.90/(1-0.80) = 4.5

Post-Test Odds = 0.005 * 4.5 = 0.0225

Post-Test Probability = Positive Predictive Value = 0.0225 / (0.0225+1) ~ 2.2%
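Here is the worked example above reproduced in Python. It also shows that rounding the pre-test odds from 5/995 to 0.005 barely changes the answer: both versions land at about 2.2%.

```python
prevalence = 0.005                  # pre-test probability, 0.5%
sensitivity, specificity = 0.90, 0.80

lr_pos = sensitivity / (1 - specificity)   # ~4.5

pre_test_odds = {
    "exact (5/995)": prevalence / (1 - prevalence),
    "rounded (0.005)": 0.005,
}

ppv = {}
for label, odds in pre_test_odds.items():
    post_odds = odds * lr_pos                    # post-test odds
    ppv[label] = post_odds / (post_odds + 1)     # post-test probability = PPV
    print(f"{label}: post-test odds = {post_odds:.4f}, PPV = {ppv[label]:.2%}")
```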

Pre-Test Odds ~ 0.005

Positive Likelihood Ratio (Test A) = 0.90/(1-0.80) = 4.5

Negative Likelihood Ratio (Test B) = (1-0.50)/0.95 = 0.53

Post-Test Odds = 0.005 x 4.5 x 0.53 = 0.012

Post-Test Prob = 0.012 / (0.012+1) ~ 1.2%

emcrit.org/pulmcrit/mythb…
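The sketch below chains LRs across several results, using the example of Test A positive and Test B negative. Test B's sensitivity (50%) and specificity (95%) are read off the LR- formula above, and the class and function names are hypothetical. Multiplying LRs assumes the tests are conditionally independent given disease status. If the tests are correlated, simple multiplication would overstate the combined evidence.

```python
from dataclasses import dataclass


@dataclass
class DiagnosticResult:
    """One test outcome; names and fields are hypothetical, for illustration."""
    name: str
    sensitivity: float
    specificity: float
    positive: bool

    def likelihood_ratio(self) -> float:
        if self.positive:
            return self.sensitivity / (1 - self.specificity)  # LR+
        return (1 - self.sensitivity) / self.specificity      # LR-


def chain_post_test_prob(pre_test_prob: float, results: list[DiagnosticResult]) -> float:
    # Assumes the tests are conditionally independent given disease status,
    # so their LRs can simply be multiplied onto the pre-test odds.
    odds = pre_test_prob / (1 - pre_test_prob)
    for result in results:
        odds *= result.likelihood_ratio()
    return odds / (1 + odds)


results = [
    DiagnosticResult("Test A", 0.90, 0.80, positive=True),   # positive, LR+ ~4.5
    DiagnosticResult("Test B", 0.50, 0.95, positive=False),  # negative, LR- ~0.53
]
p = chain_post_test_prob(0.005, results)
print(f"Post-test probability = {p:.2%}")
```

With the unrounded pre-test odds (5/995), this gives about 1.18%, which matches the ~1.2% above. Because multiplication is commutative, the order in which the results are applied does not matter.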