I know lots of experts have written and presented great stuff on feedback. But if #medtwitter has taught me anything, it's that we can always add new ways to look at things.

Right?

So with that, here’s my practical approach to feedback on the wards and in clinic. Ready?

Okay, so 1st, let’s quickly revisit how feedback was defined in Ende’s classic paper:

“an informed, nonevaluative, objective appraisal of performance intended to improve clinical skills”

(Ende J. Feedback in clinical medical education. JAMA. 1983;250:777–781)

Cool? Cool.

Before you pass go: acknowledge #bias of all kinds, especially your own. This includes, but is not limited to:

Race

Gender

Gender expression

Sexual orientation

Faith

Age

Weight

Skin shade (yep, that)

Language/how you talk (yes, that)

Because all of that can affect how we see our learners. Right?

Next: set clear expectations. It’s not rocket science. I mean, how can any learner be expected to meet a standard that hasn’t been made clear to them? To be fair, we need to think about what’s required PLUS our own expectations for learners at each level.

But how?

Stay with me. I’m going somewhere.

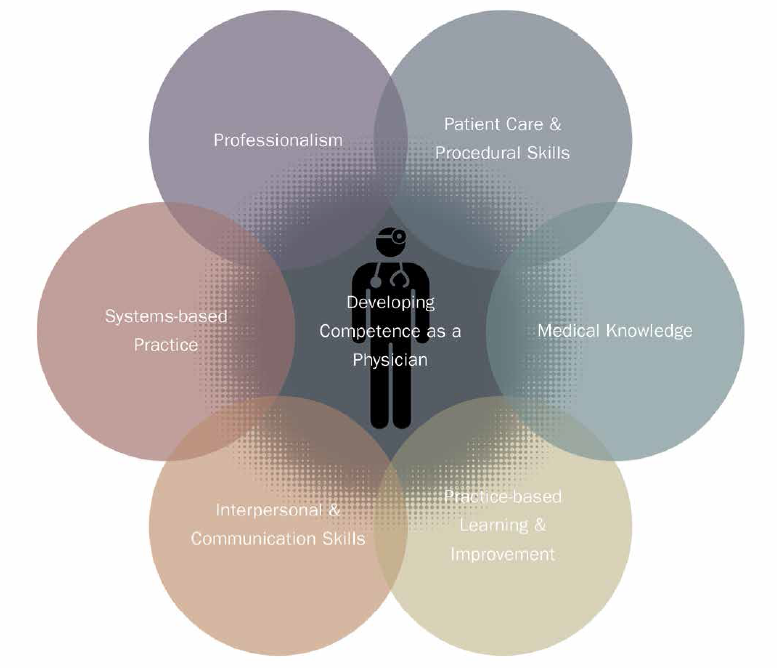

So, I literally write out the competencies on the board or a handout. Then I go one competency at a time with my team.

“Professionalism is defined as… Our program expects… On this team, I expect…”

I am explicit.

And I explain expectations by level of training.

Yup.

After I share expectations for each competency, this comes next (and it includes timing):

Me: “You will also receive feedback this way—by competency. On the fly, at midpoint, and at the end of our time together.”

Then I tell them concretely how that will look.

Yup.

On-the-fly:

Me: “I want to give you some feedback. This falls under ‘Medical Knowledge.’ On rounds, you checked Mr. Jones’s feet and backside for petechiae, noting that they can be gravity-dependent. You integrated your DIC differential with your exam. Great job.”

Make sense?

I give on-the-fly feedback often enough to reinforce strengths and correct areas for improvement. I keep track of it so that I have examples for my evaluation.

But mostly? I keep track so that I can see how the learner responded and we can build on it.

For midpoint and end-of-experience, we return to the 6 competencies. Then, I give explicit feedback just the way we started.

Why I love this:

You can be a rockstar in 2 competencies but need serious remediation in another. This approach allows honesty. No “feedback sandwiches” needed.

Nope.

Another awesome thing is that it helps keep you objective when it comes to:

High achieving learners

Learners whom you like a lot

Issues of professionalism

Your own biases

Your own biases

With this framework, learners know what to expect.

And YOU have a guide.

It's win-win, man.

Best of all? You started by setting expectations in the same format, so your learners knew their marching orders from day one.

By the end of the rotation, I've racked up examples under each competency. In my mind, I organize them this way:

What I saw

What we discussed

What you did after

Yup.

And yes, we’ve since added the Milestones. But the ACGME STILL says this about the competencies:

“The Competencies provide a conceptual framework describing the required domains for a trusted physician to enter autonomous practice.”

They also say this…

“These Competencies are core to the practice of all physicians, although the specifics are further defined by each specialty. The developmental trajectories in each of the Competencies are articulated through the Milestones for each specialty.”

Yup. They're still relevant.

And how can I end this without sharing a great piece of advice from a Grady elder? She said:

“Start the game the way you want to finish it.”

Which, now that I think of it, sums up this entire #tweetorial in one sentence. 😊👊🏾

#remembertheendgame #thepatients #learnercentered