Model developers have been taught to think carefully through development, validation, and calibration. This talk is not about that. It’s about what comes after...

A. It’s possible to have good discrimination but poor calibration.

B. It’s possible to have good calibration but poor discrimination.
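To make A and B concrete, here is a minimal sketch on synthetic data (nothing here comes from the talk's slides). The helper names `auc` and `mean_calibration_gap`, the beta-distributed risks, and the fourth-root "inflation" are all assumptions chosen for illustration. Scenario A keeps the ranking of patients intact but inflates every predicted risk; scenario B gives everyone the overall event rate.

```python
import random

def auc(y_true, y_score):
    """Chance that a random positive case outscores a random negative case (ties count half)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def mean_calibration_gap(y_true, y_score):
    """Mean predicted risk minus observed event rate (0 means calibrated on average)."""
    return sum(y_score) / len(y_score) - sum(y_true) / len(y_true)

# Synthetic cohort (assumption): true risks average about 10%, outcomes drawn from them.
rng = random.Random(0)
true_risk = [rng.betavariate(0.3, 2.7) for _ in range(2000)]
y = [1 if rng.random() < r else 0 for r in true_risk]

# A: same ordering as the true risk, but every prediction is pushed upward.
inflated = [r ** 0.25 for r in true_risk]
# B: every patient gets the overall event rate.
constant = [sum(y) / len(y)] * len(y)

for name, scores in [("A (inflated)", inflated), ("B (constant)", constant)]:
    print(name,
          "AUC:", round(auc(y, scores), 3),
          "mean gap:", round(mean_calibration_gap(y, scores), 3))
```

A ranks patients as well as the true risk does, yet its average prediction sits far above the observed event rate. B matches the event rate exactly but cannot separate cases from non-cases, so its AUC is 0.5.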

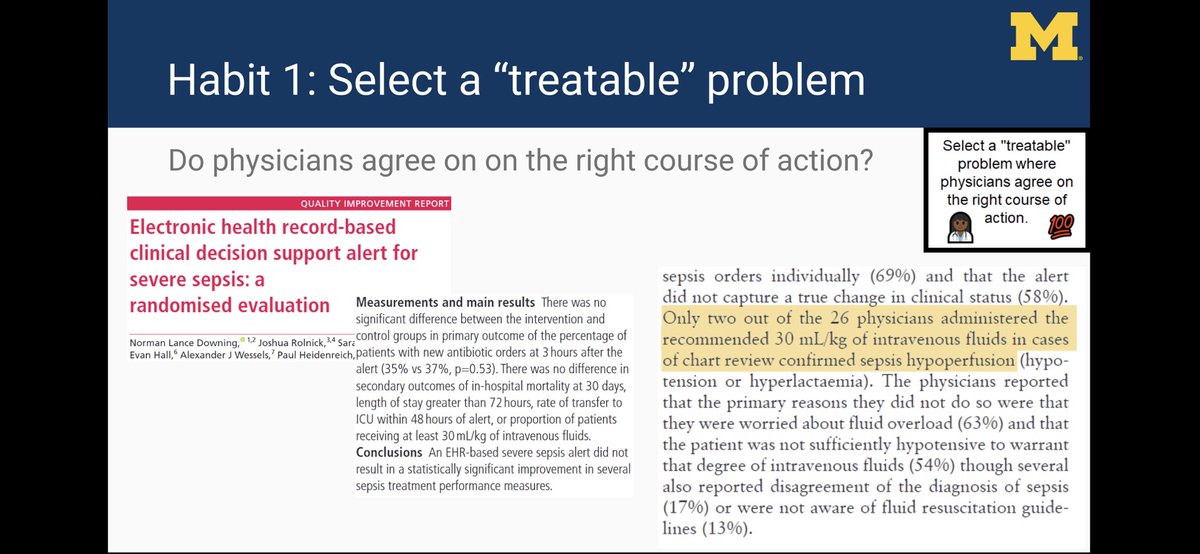

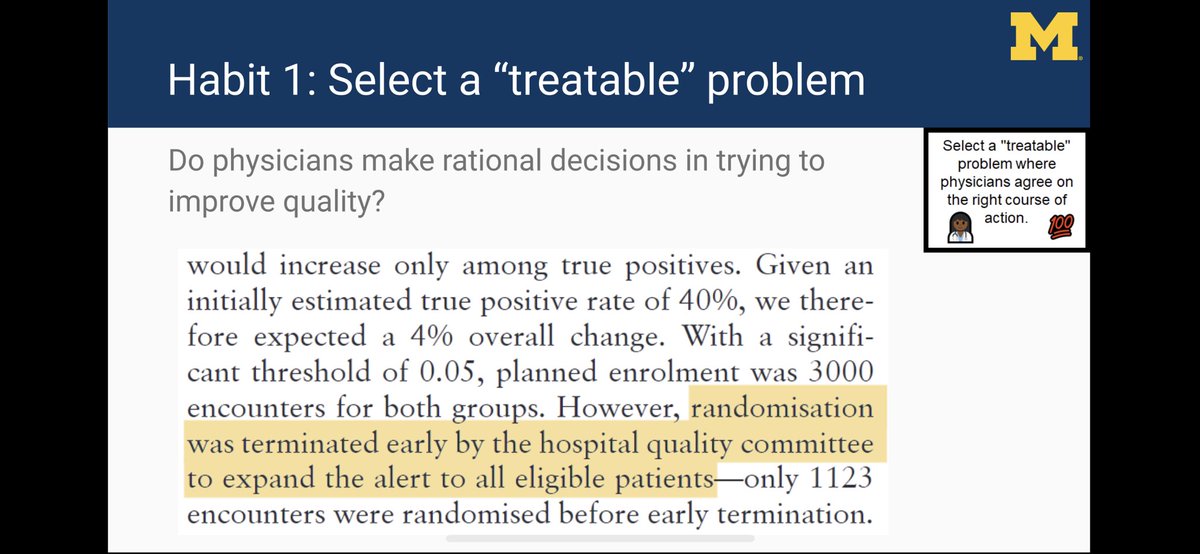

Even if we could all agree on a sepsis definition, would we all agree on treatment?

One of the outcomes was administration of 30 mL/kg of IV fluids for sepsis-induced hypoperfusion. Why didn’t alerts work? Many MDs disagreed with guidelines...

A bold move... and one that we are copying at @umichmedicine (based on other evidence). So what else is needed?

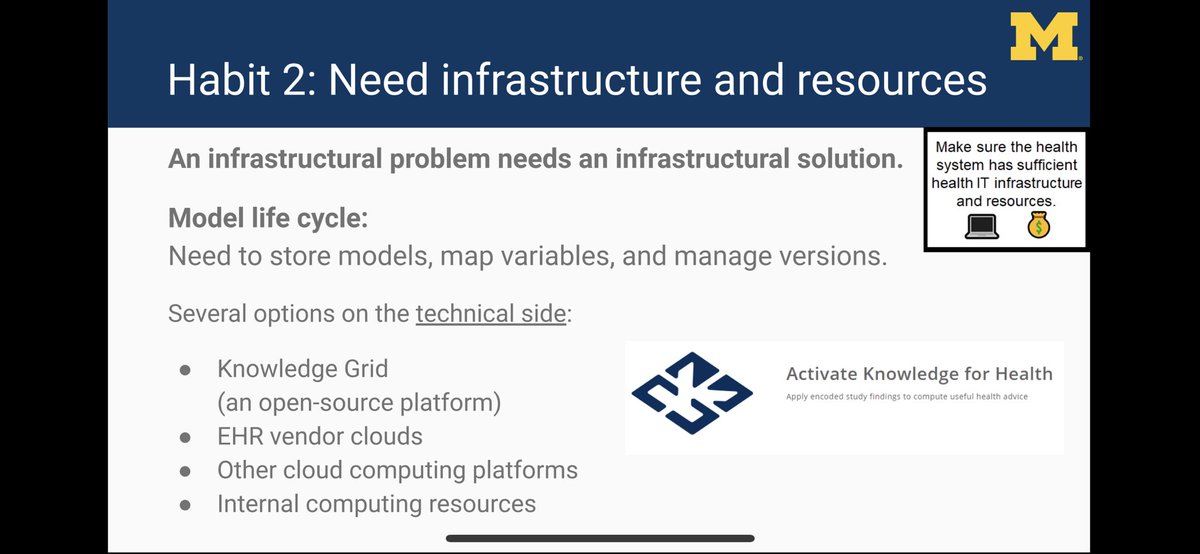

There are several options on the technical side for running all models in one place, including open-source solutions such as KGrid (developed at @umichDLHS), EHR vendor-based clouds, and other internal/external clouds.

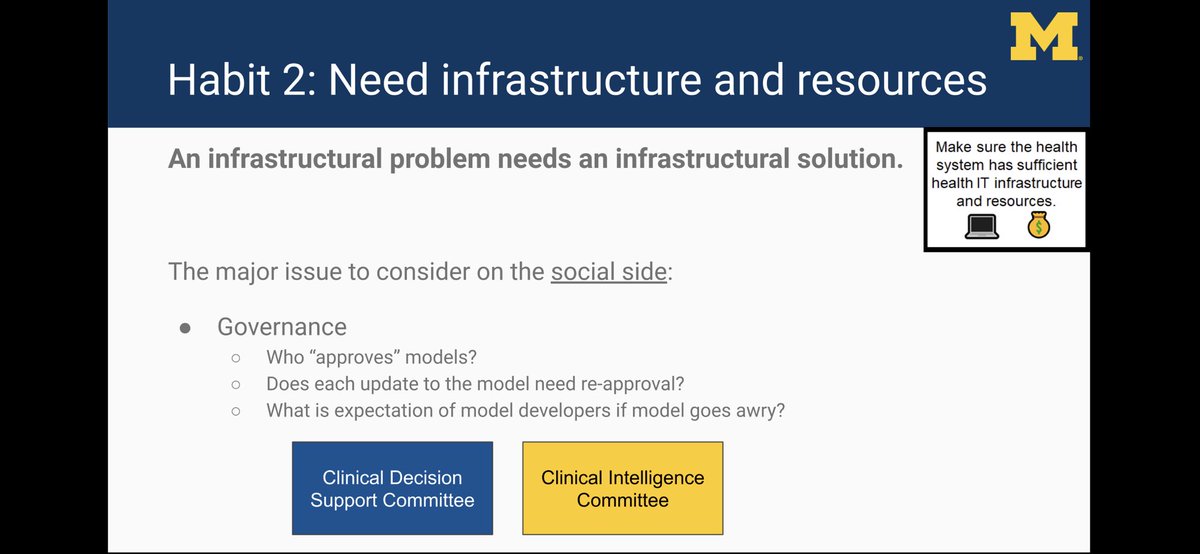

At @umichmedicine, we have a multidisciplinary committee (Clinical Intelligence Committee) that oversees model implementation. It is a “sister” committee to our CDS committee.

1. If the model developer is a researcher, do they need to support the model during normal business hours if problems occur?

2. Outside of business hours, how to respond to models gone rogue?

3. Prior to model updates, who needs to sign off?

Most of these methods can be readily implemented to adjust an existing model’s output to be better calibrated for local data.
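As one illustration of adjusting an existing model's output for local data, here is a minimal sketch of logistic recalibration: refit only an intercept `a` and slope `b` on the logit of the original prediction. This is one commonly used approach and an assumption on my part, not necessarily one of the methods shown in the talk. The function names and the synthetic "local" data (a model that over-predicts by a fixed shift on the logit scale) are hypothetical.

```python
import math
import random

def logit(p, eps=1e-6):
    p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
    return math.log(p / (1 - p))

def sigmoid(z):
    # Numerically stable for large negative or positive z.
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)

def fit_logistic_recalibration(p_old, y, iters=25):
    """Fit y ~ sigmoid(a + b * logit(p_old)) by Newton's method; returns (a, b)."""
    x = [logit(p) for p in p_old]
    a, b = 0.0, 1.0  # start from "no change"
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            mu = sigmoid(a + b * xi)
            w = mu * (1 - mu)
            g0 += yi - mu            # gradient w.r.t. intercept
            g1 += (yi - mu) * xi     # gradient w.r.t. slope
            h00 += w
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        a += (h11 * g0 - h01 * g1) / det
        b += (h00 * g1 - h01 * g0) / det
    return a, b

# Synthetic local data (assumption): the existing model over-predicts everywhere.
rng = random.Random(1)
true_risk = [rng.uniform(0.02, 0.5) for _ in range(5000)]
y = [1 if rng.random() < r else 0 for r in true_risk]
p_old = [sigmoid(logit(r) + 1.0) for r in true_risk]

a, b = fit_logistic_recalibration(p_old, y)
p_new = [sigmoid(a + b * logit(p)) for p in p_old]
print("intercept:", round(a, 2), "slope:", round(b, 2))
print("mean old:", round(sum(p_old) / len(p_old), 3),
      "mean new:", round(sum(p_new) / len(p_new), 3),
      "event rate:", round(sum(y) / len(y), 3))
```

In this sketch the fit and the check use the same sample for brevity. With real local data you would fit `a` and `b` on one sample and check calibration on a separate one.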

We use:

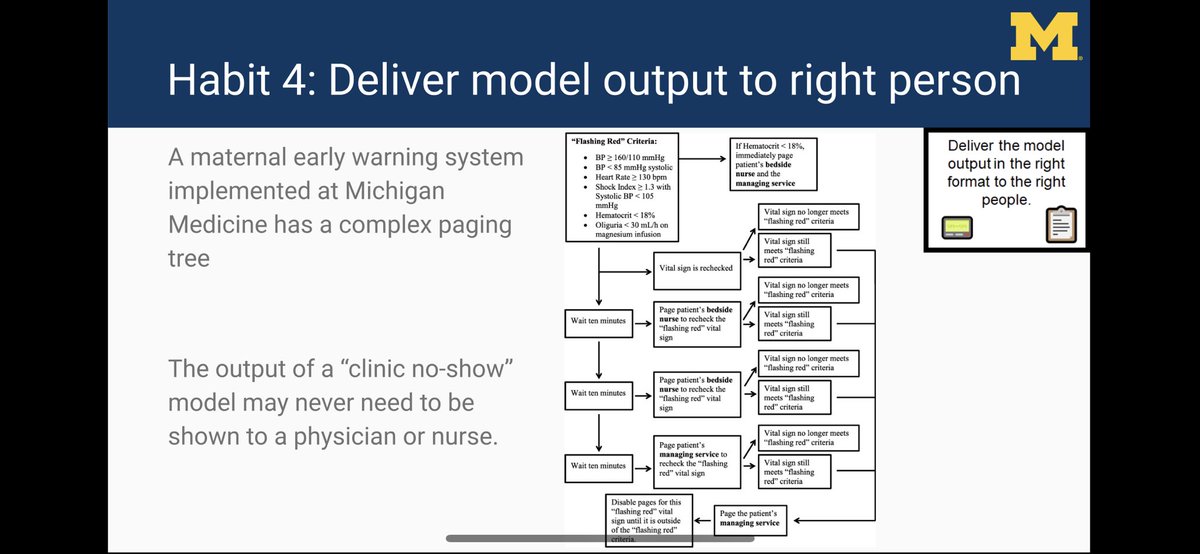

1. Pages (for sepsis alerts)

2. Case management of high-risk patients in the SIM project (with @CHRTumich)

3. Patient lists sorted by acuity (in the ER)

and others...

On the other hand, output from a clinic no-show model might be shown primarily to an administrator (we have not implemented one).

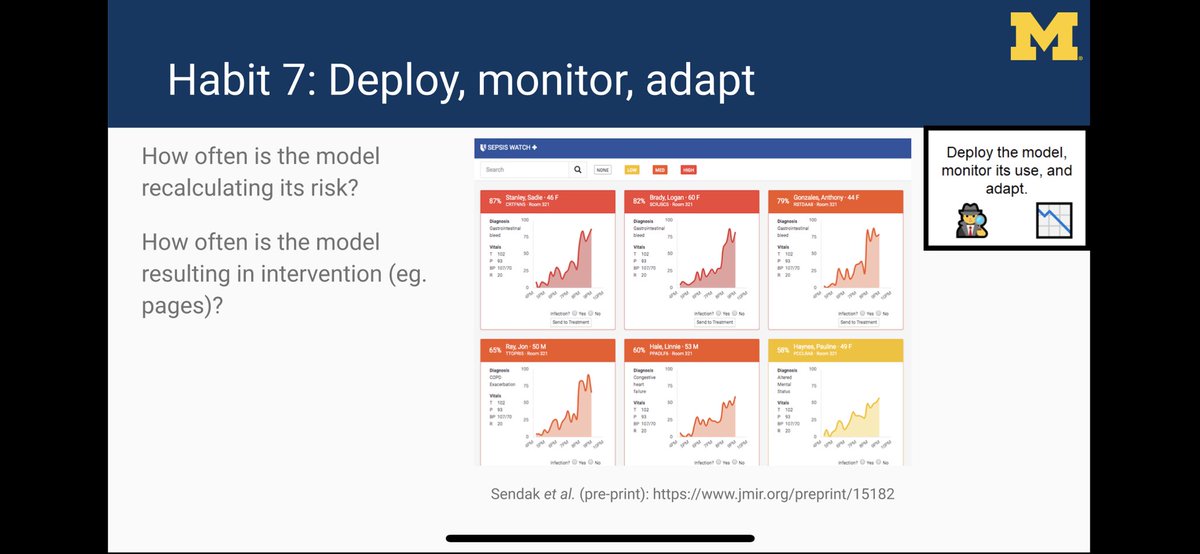

Do you know how often your model is running/alerting? An EHR update can break models silently by messing up variable mappings.

Need a dashboard to monitor models.
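The simplest version of that monitoring is a daily alert count with a check against a recent baseline. Below is a minimal sketch, assuming you can export one date per alert from your alert log. The function names, the 7-day window, the thresholds, and the dates are hypothetical and not taken from any dashboard mentioned in this thread.

```python
from collections import Counter
from datetime import date, timedelta
from statistics import median

def daily_counts(event_dates, start, end):
    """Alerts per calendar day, including days with zero alerts."""
    counts = Counter(event_dates)
    days = [start + timedelta(days=i) for i in range((end - start).days + 1)]
    return [(d, counts.get(d, 0)) for d in days]

def flag_anomalies(series, window=7, low=0.5, high=2.0):
    """Flag days whose count is far below or above the trailing-window median."""
    flags = []
    for i in range(window, len(series)):
        day, count = series[i]
        baseline = median(c for _, c in series[i - window:i])
        if baseline > 0 and (count < low * baseline or count > high * baseline):
            flags.append((day, count, baseline))
    return flags

# Hypothetical alert log: 20 alerts a day for 10 days, then silence
# (for example, after an EHR update breaks a variable mapping).
start = date(2024, 1, 1)
alert_dates = [start + timedelta(days=d) for d in range(10) for _ in range(20)]

series = daily_counts(alert_dates, start, date(2024, 1, 13))
flags = flag_anomalies(series)
for day, count, baseline in flags:
    print(day.isoformat(), "alerts:", count, "trailing median:", baseline)
```

A trailing median is used here so that a single odd day does not shift the baseline much. The same check could be run on the number of patients scored per day, which catches a model that has stopped running rather than just stopped alerting.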

👇 dashboard by @MarkSendak @JFutoma @kat_heller @DukeInnovate

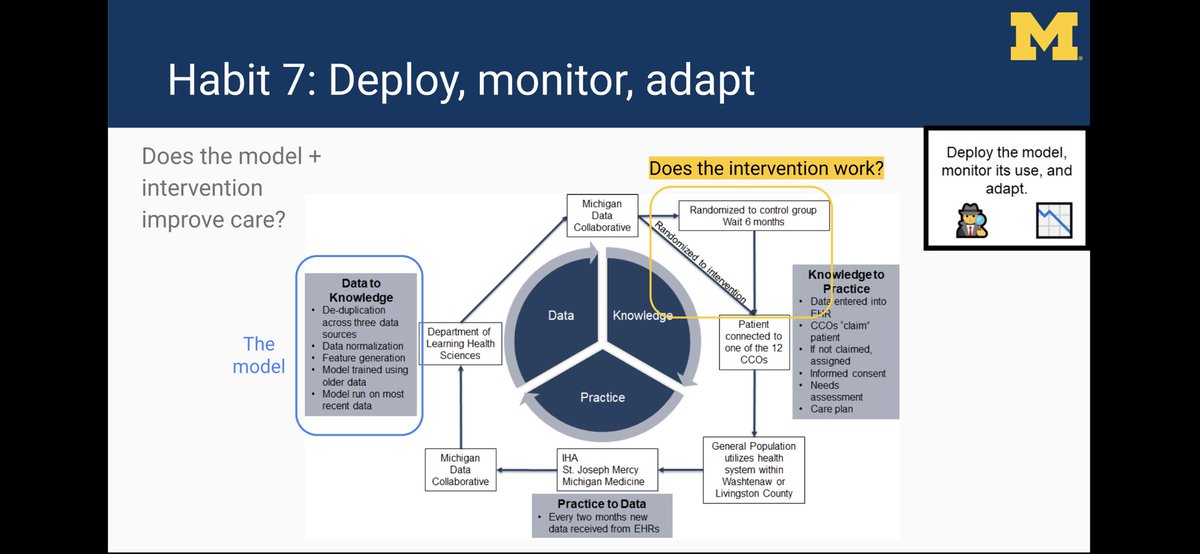

We embedded an ER utilization model within an RCT (working with @CHRTumich @Andy_Ryan_dydx) to figure out if the intervention works.

Here’s a link to our abstract: static1.squarespace.com/static/59d5ac1…

/fin