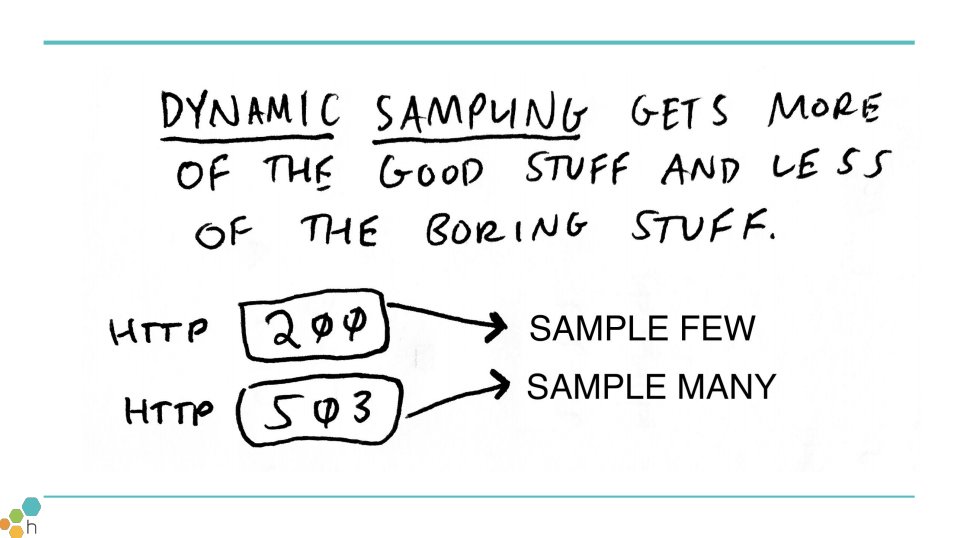

unfortunately, most monitoring tools have been built in ways that prevent you from doing that.

(see more on this: qconsf.com/system/files/p…)

not only that, but pre-aggregated values like the 90th percentile and avg got written out to disk too. you can't recompute those percentiles at query time.
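
a rough sketch of why stored percentiles can't be recombined later (python, stdlib only). the two hosts, their latencies, and the `percentile` helper are all made up for illustration; this isn't any particular tool's storage format. the point is just that once each host has written its own p90 to disk, averaging those numbers doesn't give you the p90 of the actual traffic.

```python
def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = (p * len(ordered) + 99) // 100  # integer ceil(p * n / 100)
    return ordered[max(rank - 1, 0)]


# hypothetical raw request latencies in ms, per host
host_a = [10] * 90 + [20] * 10   # busy host, mostly fast
host_b = [500] * 10              # quiet host, all slow

# what a metrics pipeline writes to disk: one p90 per host
stored_p90s = [percentile(host_a, 90), percentile(host_b, 90)]

# what you can do at query time if the raw events are gone
averaged_p90 = sum(stored_p90s) / len(stored_p90s)

# what you could do if you had kept the raw events
true_p90 = percentile(host_a + host_b, 90)

print("stored per-host p90s:", stored_p90s)
print("average of stored p90s:", averaged_p90)
print("p90 over raw events:", true_p90)
```

with these made-up numbers the averaged value lands more than 10x away from the real p90, because the quiet slow host gets the same weight as the busy fast one. keeping raw events means you can compute whatever percentile you want, over whatever slice you want, when you ask the question.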

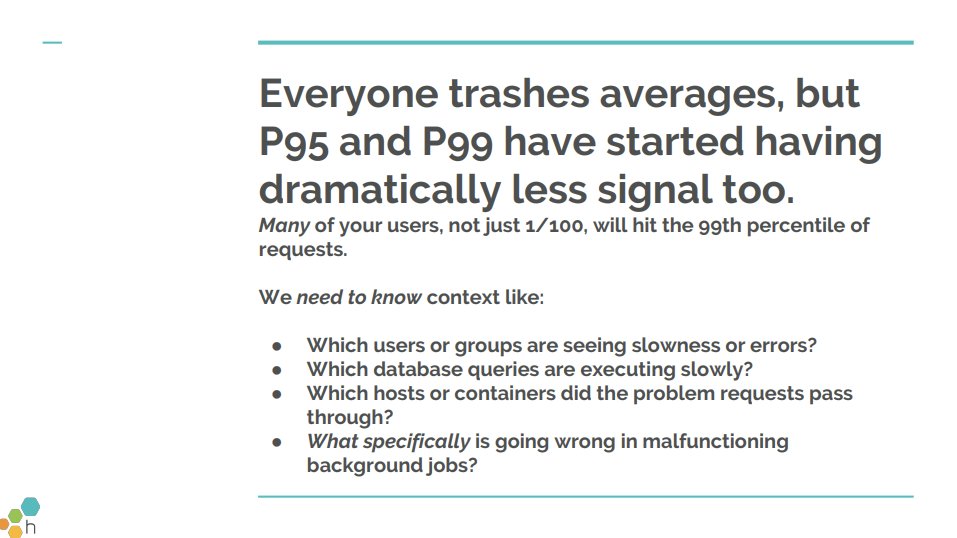

metrics are not useless. they're a very cheap and efficient way of storing aggregate information about the system (counters, etc). but that's not observability. and worst of all...

and then you need to access your data in a way that isn't just fancy grep. (me: screaming)

if your tool can't do that, it isn't observability.

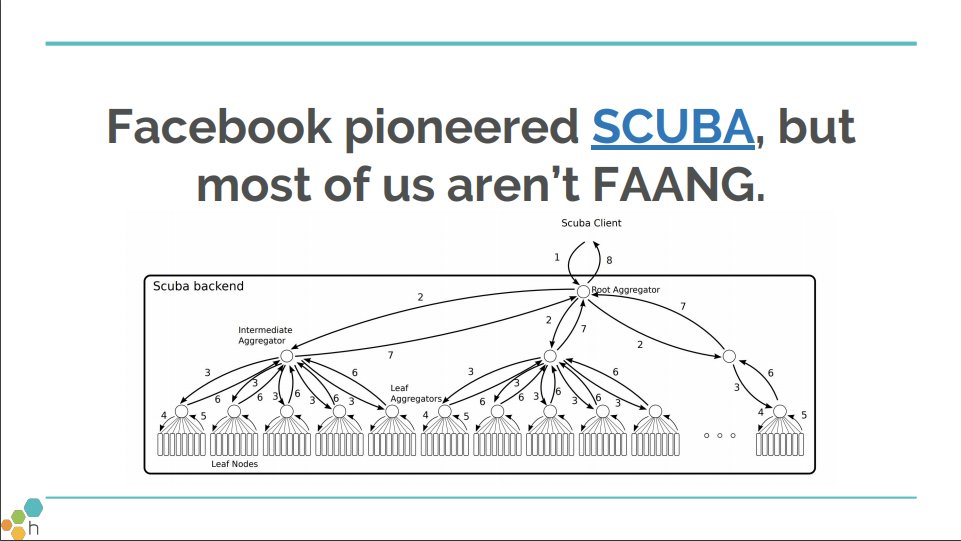

honeycomb grew out of our experiences using scuba at facebook. (whitepaper for scuba: research.fb.com/wp-content/upl…) FB doesn't pay a multiple of their infra costs for observability, so how does FB solve this problem?

4 - grasping observability will separate the engineers who can build and maintain complex systems from those who can't

cheers!🐝