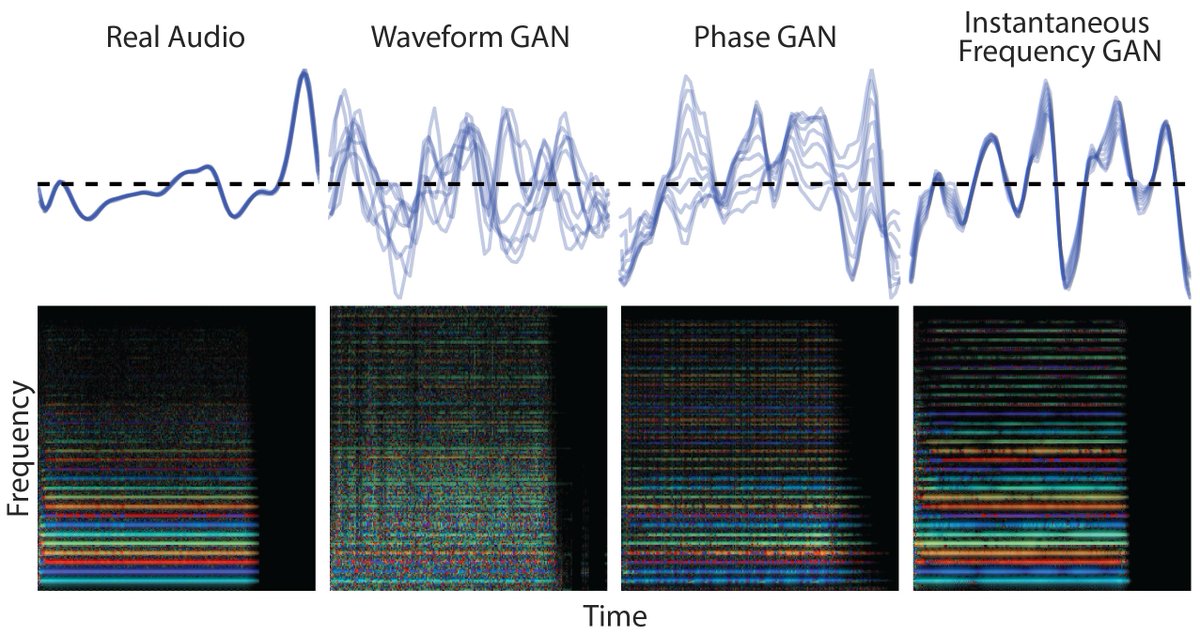

GANSynth is a new method for fast generation of high-fidelity audio.

🎵 Examples: goo.gl/magenta/gansyn…

⏯ Colab: goo.gl/magenta/gansyn…

📝 Paper: goo.gl/magenta/gansyn…

💻 Code: goo.gl/magenta/gansyn…

⌨️ Blog: magenta.tensorflow.org/gansynth

1/

I'm excited to finally release this code. Have fun making your own sounds with the Colab notebook!