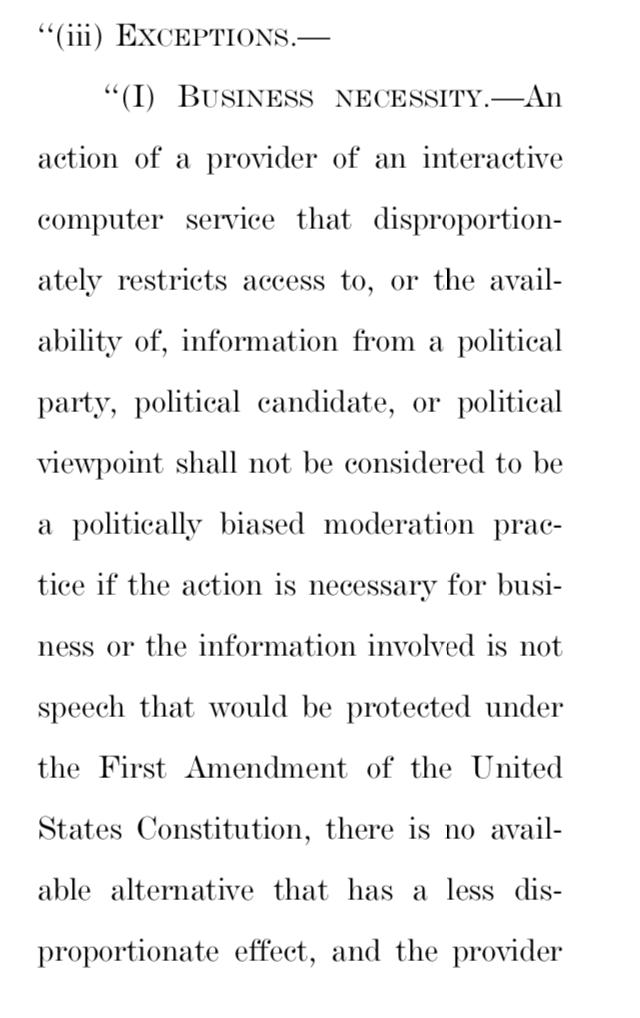

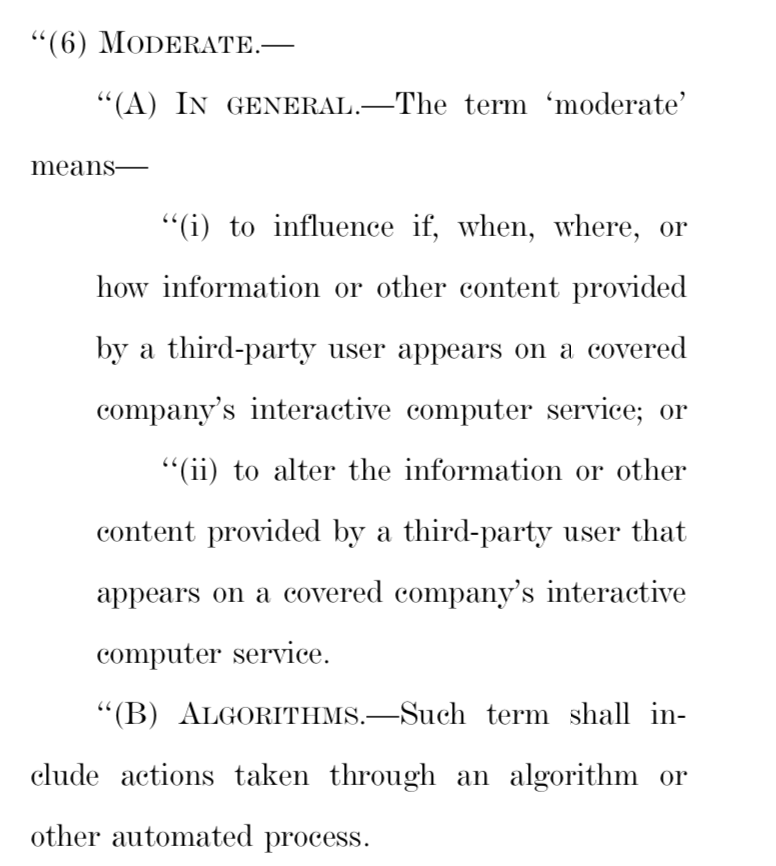

Here's the key - it makes BOTH of Section 230's liability shields contingent on a "covered company" getting an "immunity certification" from the FTC.

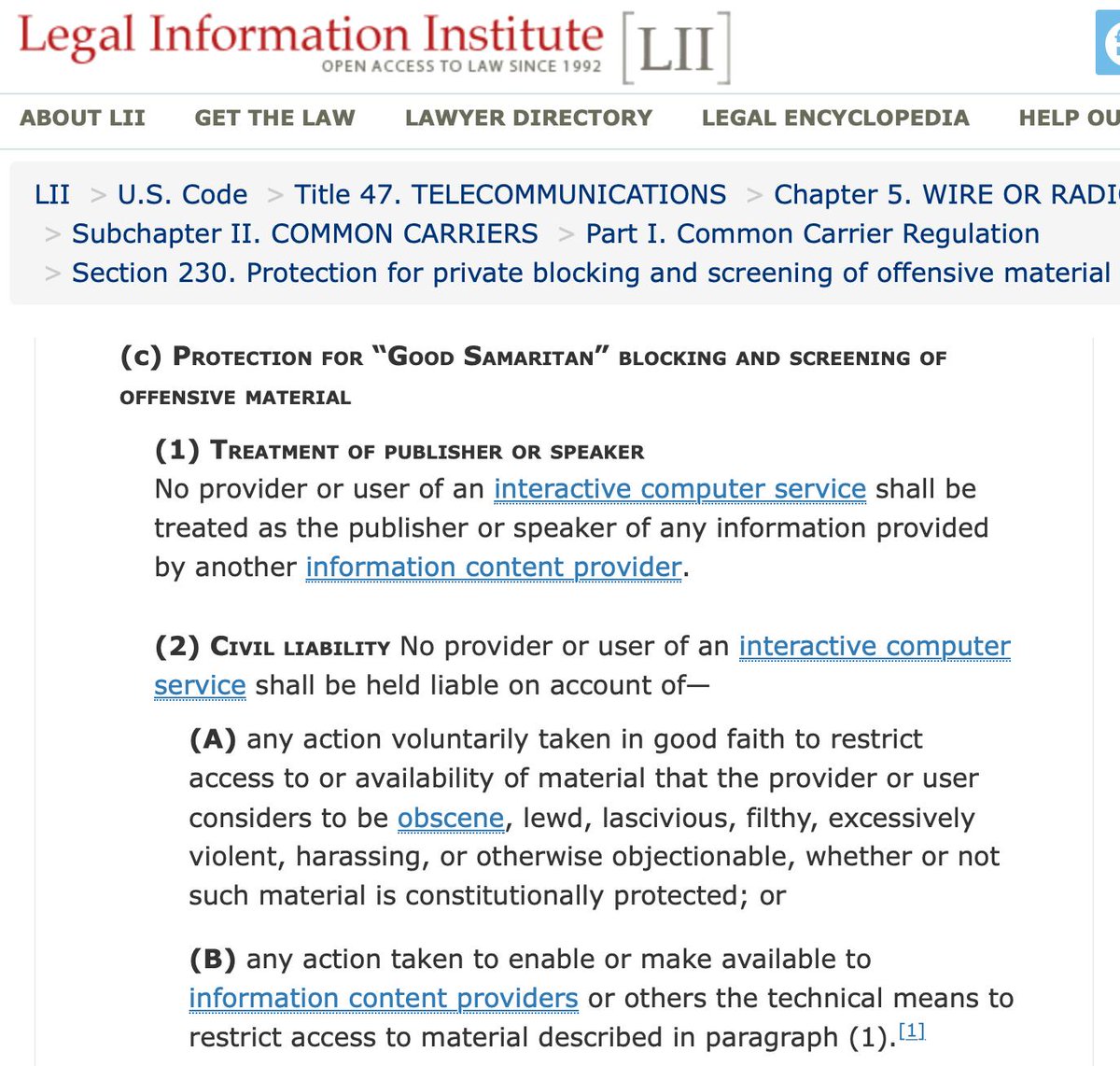

See where it says "paragraphs (1) and (2) shall not apply"?

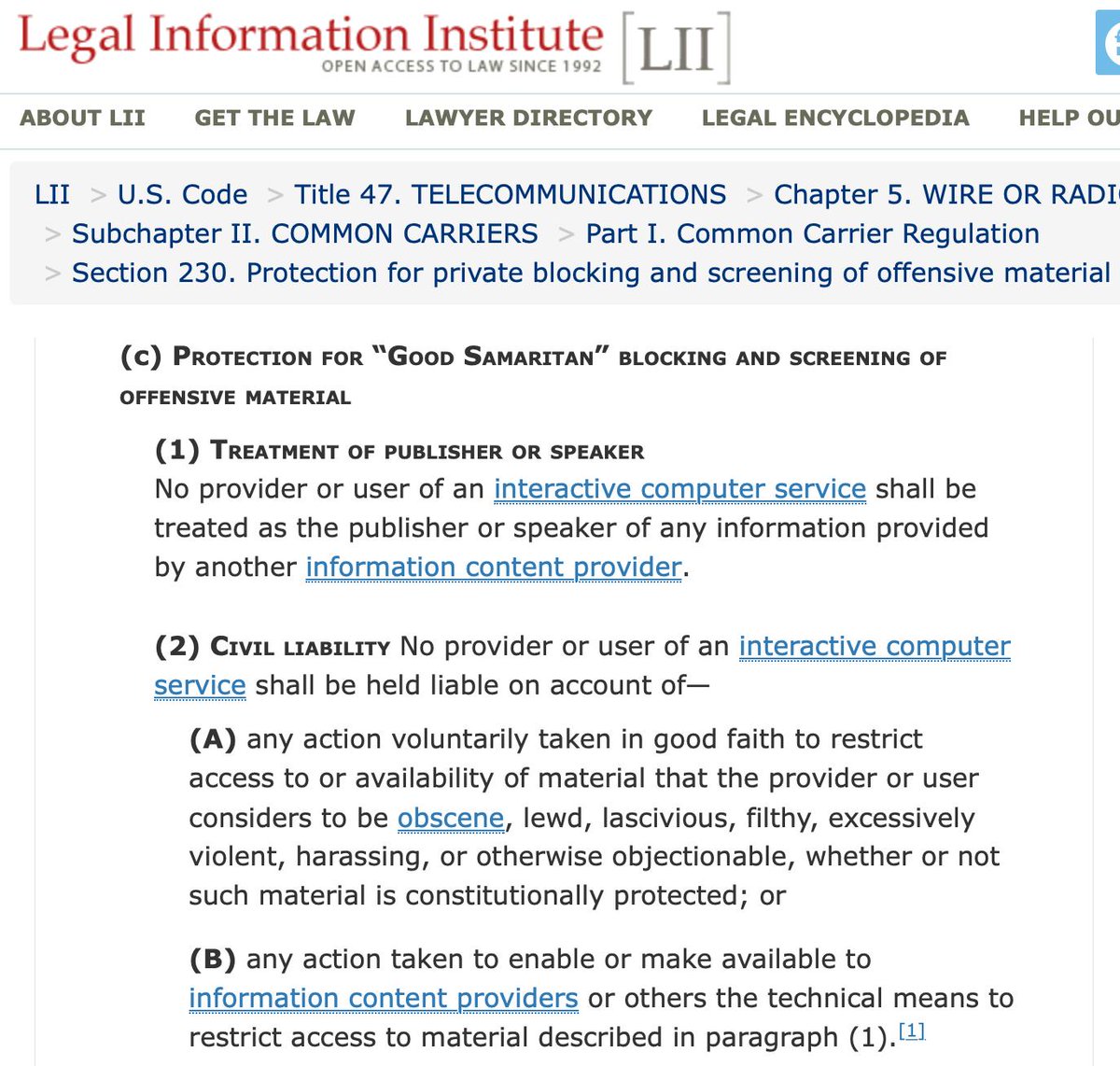

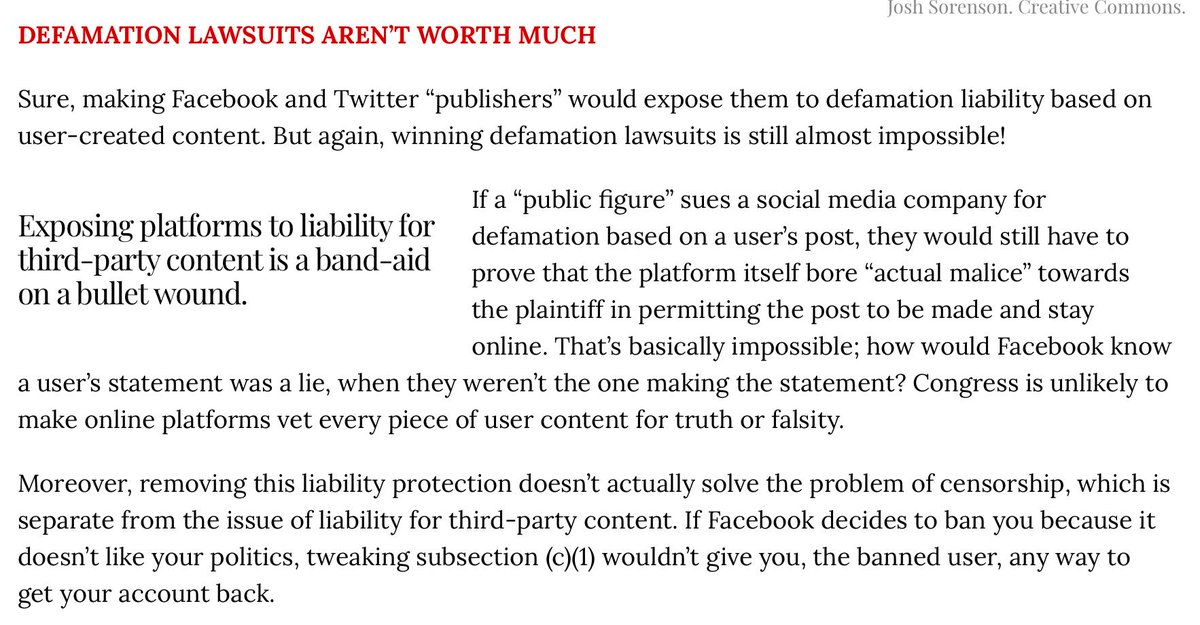

In "The Section 230 Illusion," @RonColeman and I argued that tweaking paragraph (1) wasn't enough, because defamation lawsuits are nearly impossible to win.

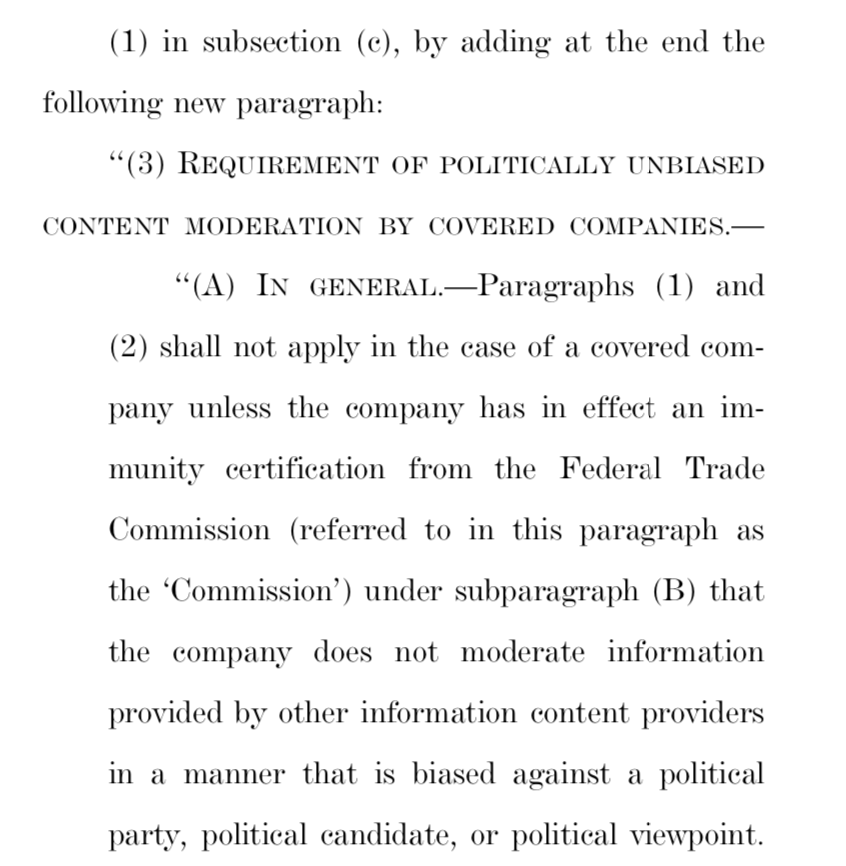

That's paragraph (2), the one that protects the platforms' ability to remove "obscene" and "objectionable" content in good faith.

They need that one, folks. Otherwise moderation is a legal nightmare.

They'll have to worry about liability for content removal CONSTANTLY if they lose the protection of paragraph (2).

That's the crown jewel - not the "publisher/platform" distinction. Which is precisely what @RonColeman and I argued.

And @HawleyMO's bill makes sure that's within the FTC's purview

Their crown jewel - the liability shield under § 230(c)(2) protecting good-faith moderation decisions - is fully in play

Will it pass? Who knows

But it's one hell of a shot across the bow

FIN