(today is also going to contain MANY Céline Dion gifs. So be ready)

jyu.fi/hytk/fi/laitok…

Paper here: dafx.labri.fr/main/papers/p2…

sonicvisualiser.org

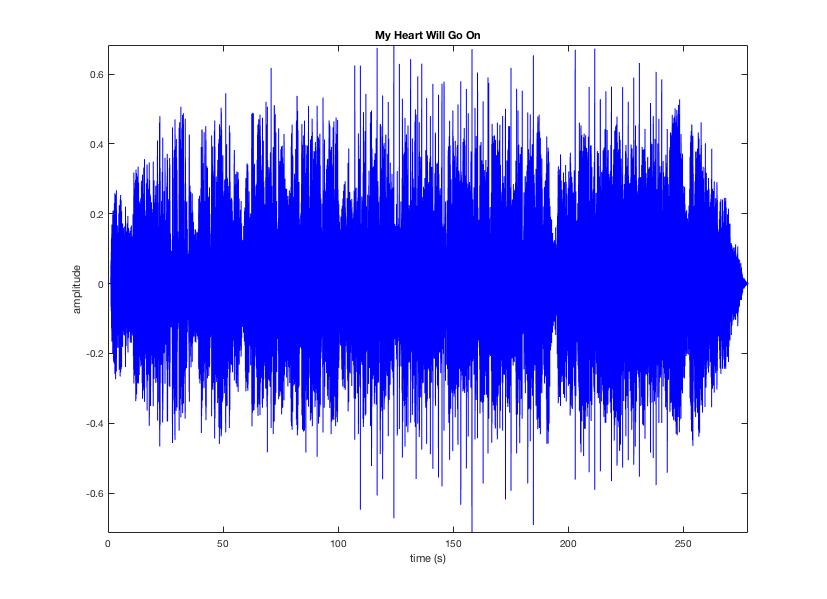

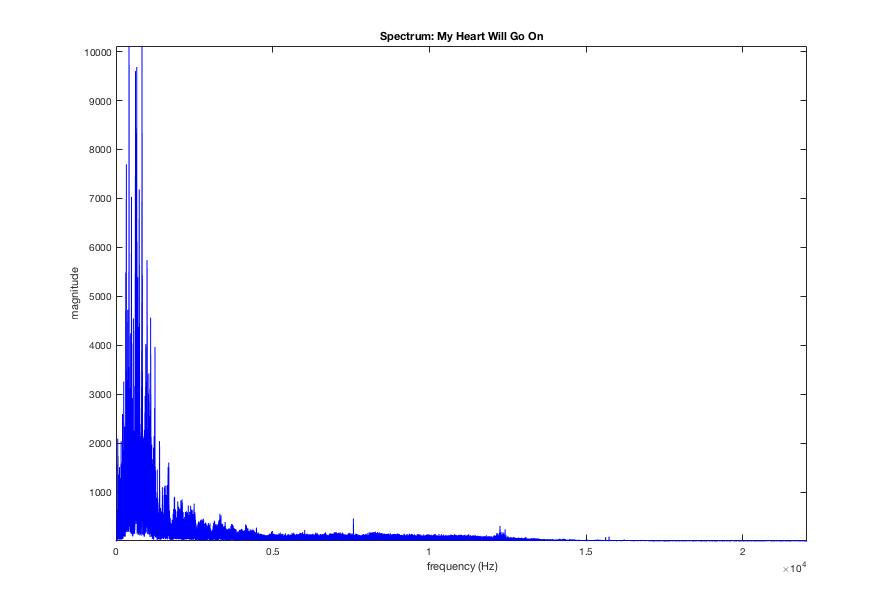

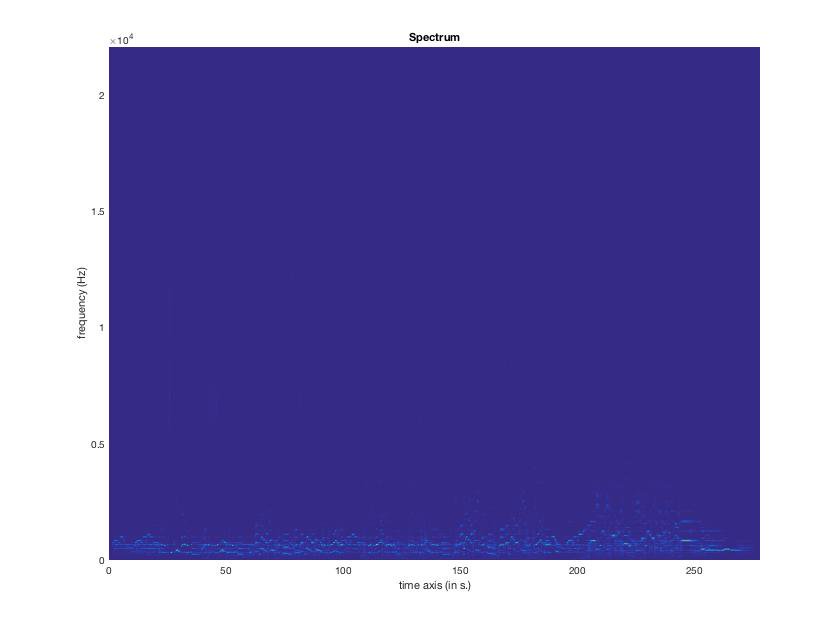

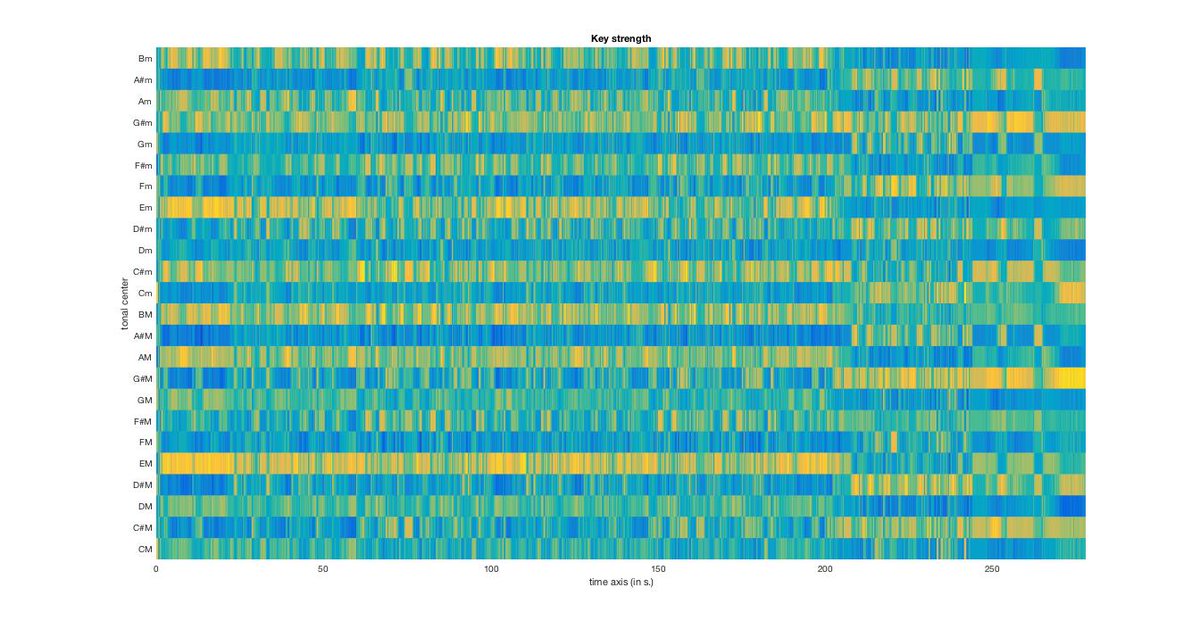

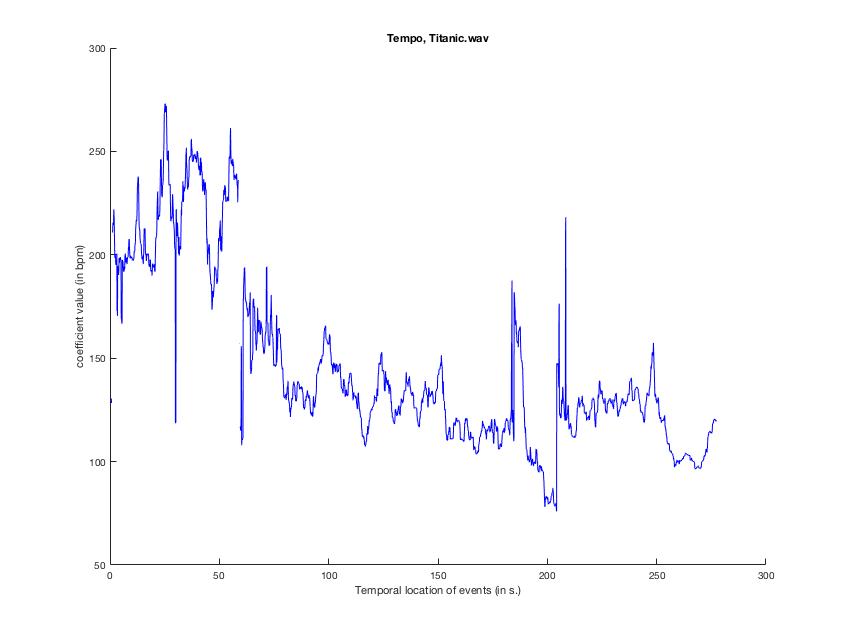

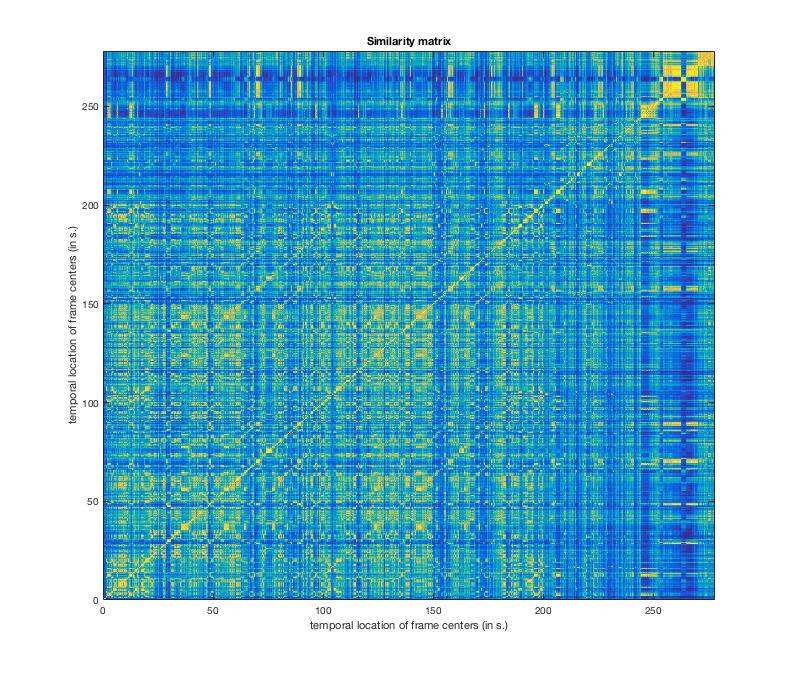

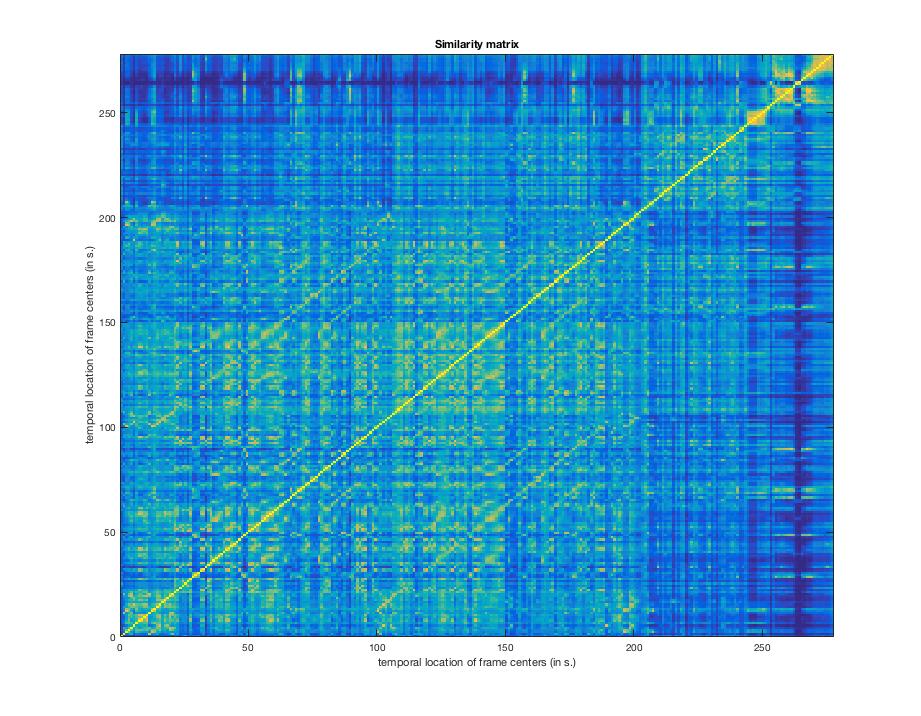

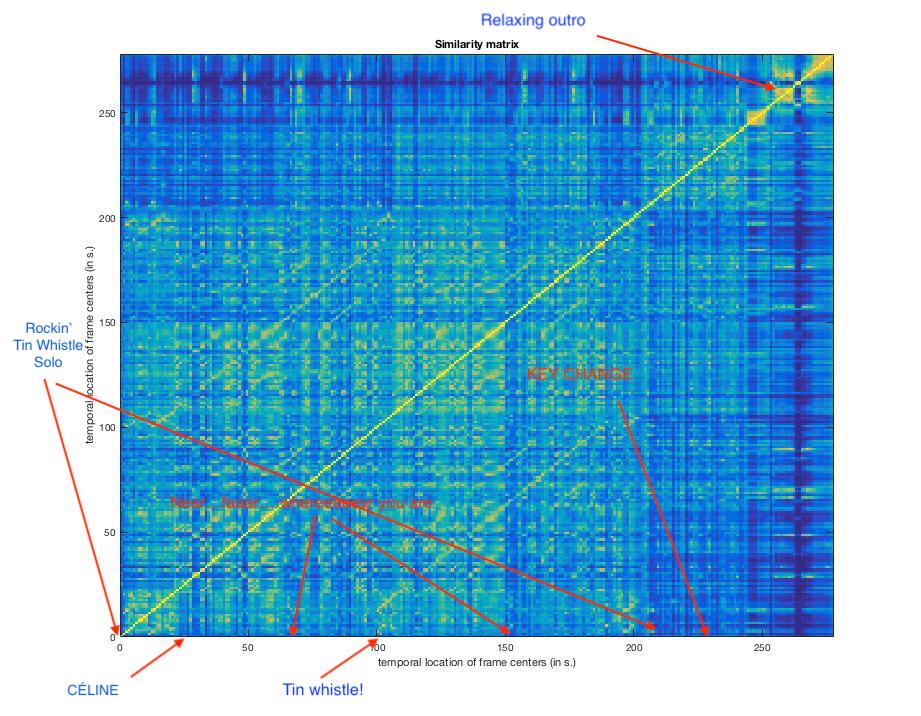

A piece of music is a multi-laaaaaayered thing (again, CAKE.) that combines lots of information into a single package.

(Rubato = playing with the tempo to make things DRAMATIC)

tylervigen.com/spurious-corre…

drive.google.com/open?id=1h6uly…