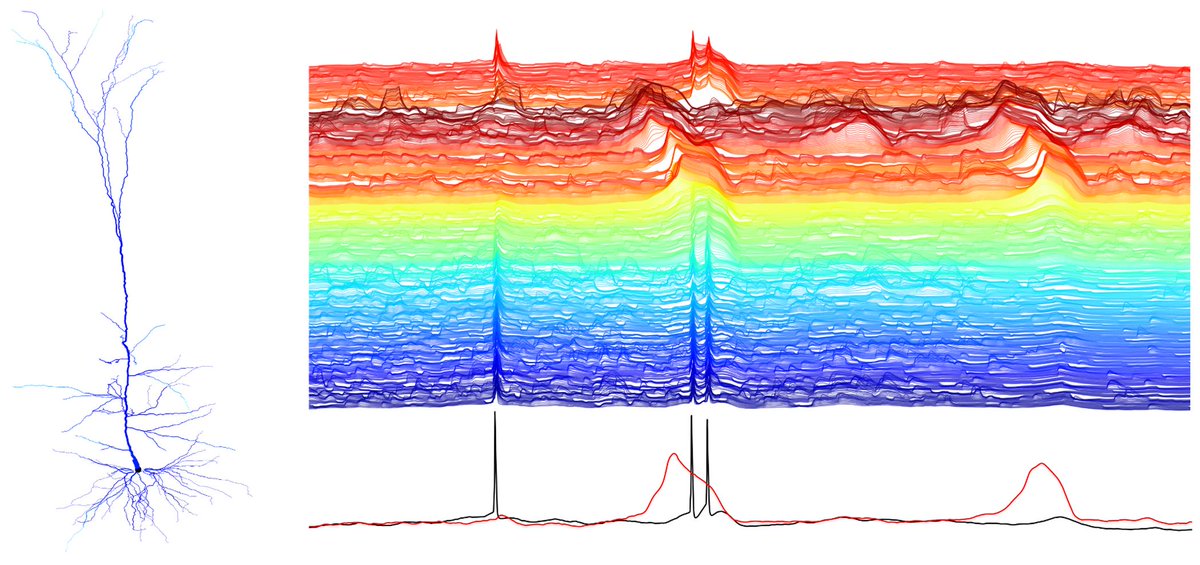

1) Neurons in the brain are bombarded with massive synaptic input distributed across a large tree-like structure: their dendritic tree.

During this bombardment, the tree goes wild.

preprint: biorxiv.org/content/10.110…

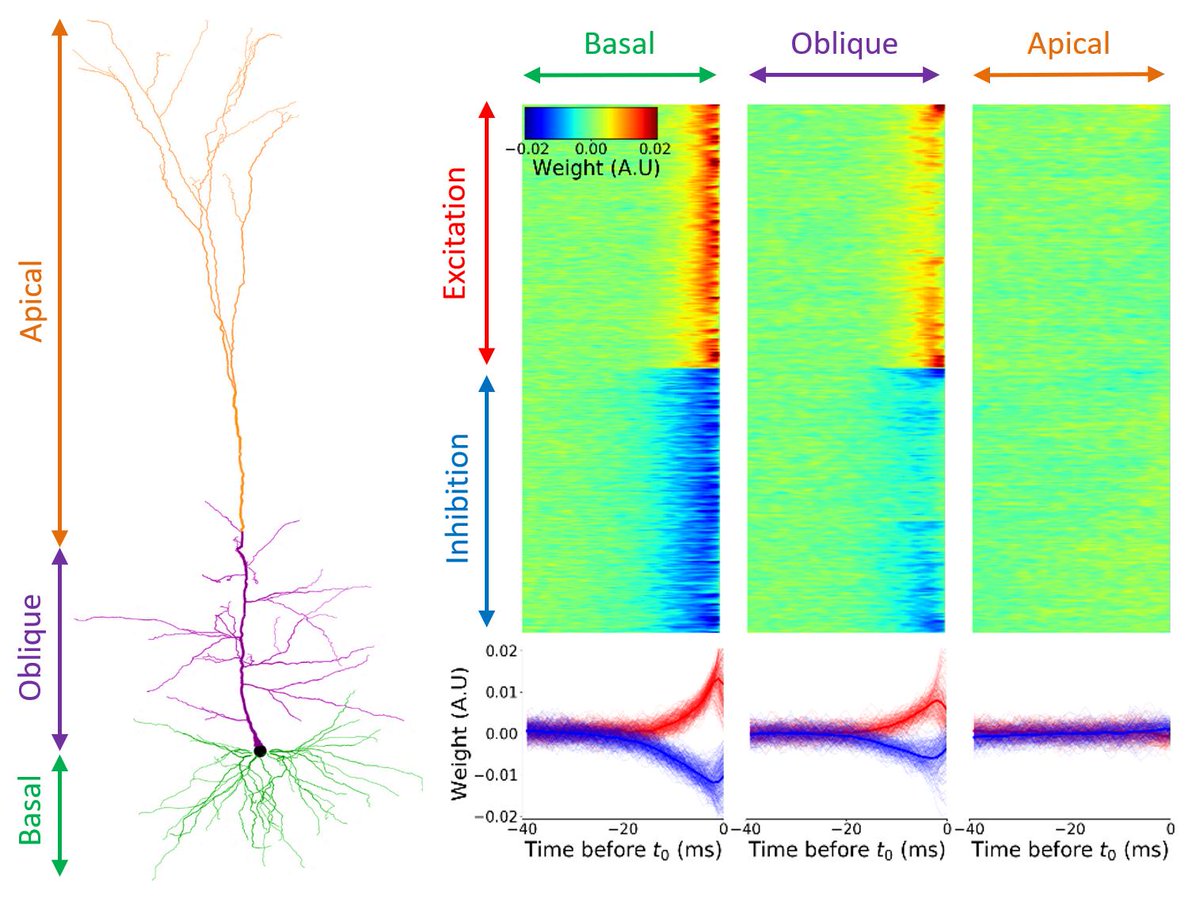

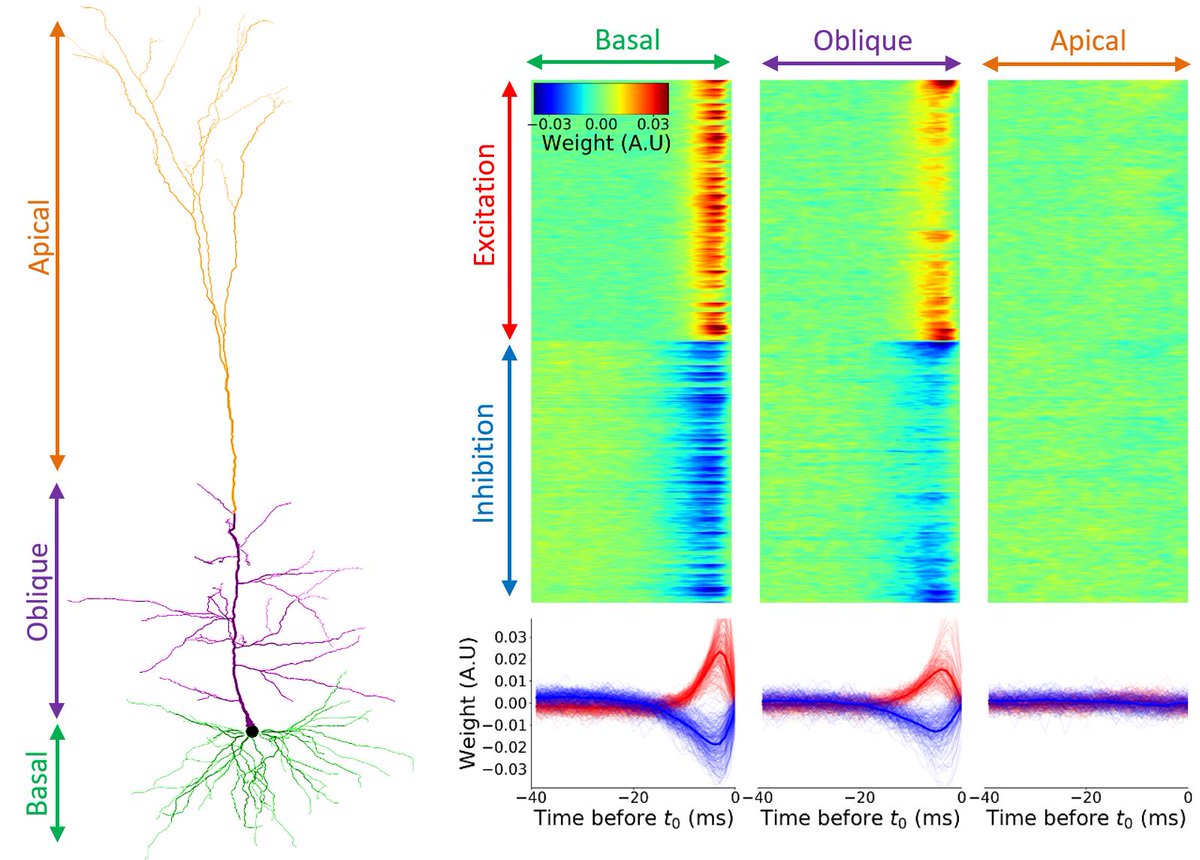

Take away one of those things, and the neuron turns into a simple device.

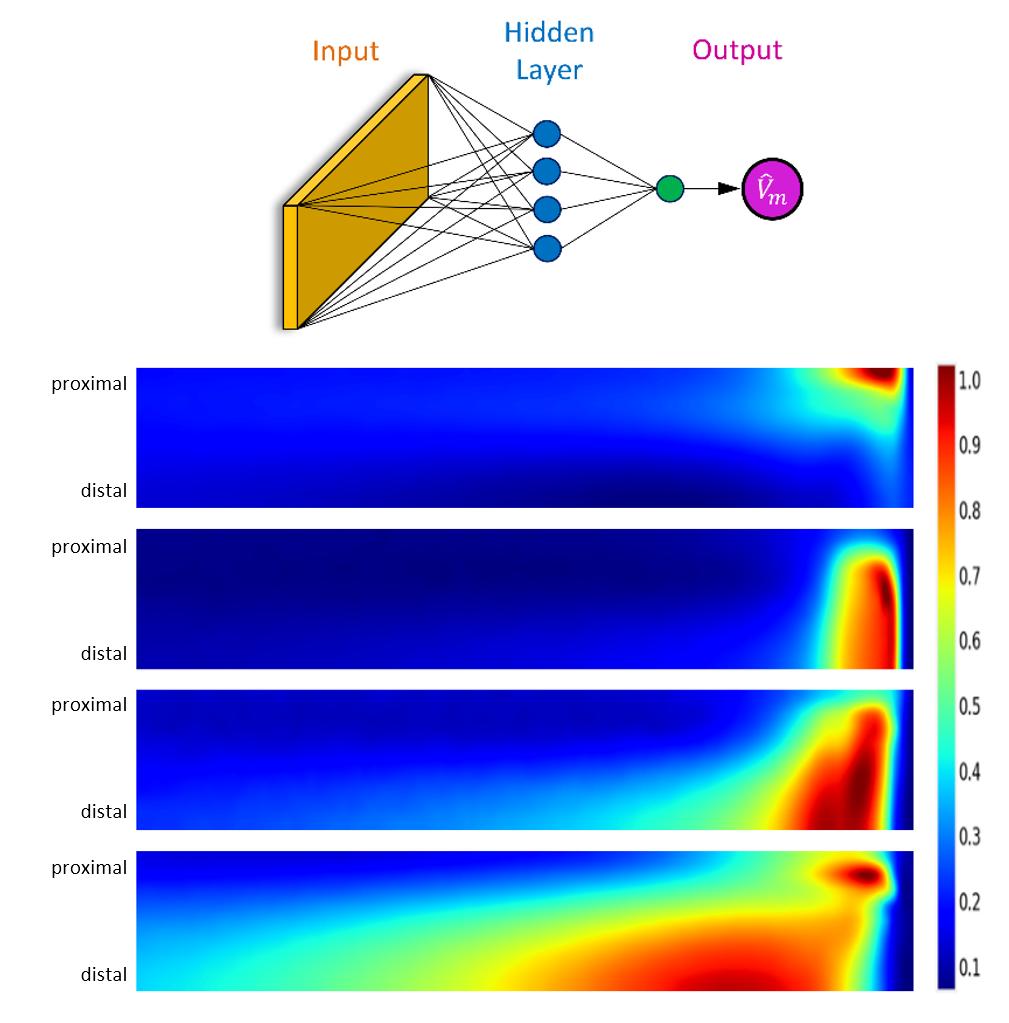

The simplest method is to look at the first-layer weights of the neural network:
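As a rough illustration of what "looking at the first-layer weights" can mean, here is a minimal sketch. It assumes the first layer is a temporal convolution over synaptic input channels, with weights stored as an array of shape `(n_filters, n_synapses, kernel_len)`, and that the first `n_exc` channels are excitatory synapses and the rest inhibitory. That layout and the helper name `summarize_first_layer` are hypothetical, not taken from the preprint. For each filter, it averages the weights over each synapse group at every time lag, giving one excitatory and one inhibitory temporal profile per filter.

```python
import numpy as np

def summarize_first_layer(weights, n_exc):
    """Split first-layer weights into excitatory / inhibitory temporal profiles.

    weights: array of shape (n_filters, n_synapses, kernel_len)
    n_exc:   number of excitatory synapses, ASSUMED to occupy the first n_exc
             input channels (hypothetical layout, not from the preprint).
    Returns (exc_profile, inh_profile), each of shape (n_filters, kernel_len):
    the mean weight at each time lag over the excitatory / inhibitory inputs.
    """
    w = np.asarray(weights, dtype=float)
    if w.ndim != 3:
        raise ValueError("expected shape (n_filters, n_synapses, kernel_len)")
    if not 0 < n_exc < w.shape[1]:
        raise ValueError("n_exc must leave at least one synapse in each group")
    exc_profile = w[:, :n_exc, :].mean(axis=1)  # average over excitatory channels
    inh_profile = w[:, n_exc:, :].mean(axis=1)  # average over inhibitory channels
    return exc_profile, inh_profile

# Made-up sizes for illustration: 4 filters, 10 synapses, 5 time lags.
rng = np.random.default_rng(0)
w = rng.normal(size=(4, 10, 5))
exc, inh = summarize_first_layer(w, n_exc=6)
print(exc.shape, inh.shape)  # (4, 5) (4, 5)
```

With a real trained model, the weights would be read out of its first layer and possibly transposed to this layout first; plotting each row of `exc` and `inh` against time lag then shows how each filter weighs recent versus older input from each synapse group.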

@bioRxiv @biorxiv_neursci