Let me convince you otherwise.

In places like India, YouTube has replaced Google as the place people turn to ask questions & learn about the world.

wsj.com/articles/india…

70% of that -- 700,000,000 hours -- is driven by the recommendation algorithm. But no one really knows how it works.

Which is great when what you want is, say, endless @bonappetit cooking videos.

Not so great when it delivers videos of children to pedophiles. nytimes.com/2019/06/03/wor…

@gchaslot, a former YouTube engineer who worked on the recommendation algorithm, shared an example in this thread:

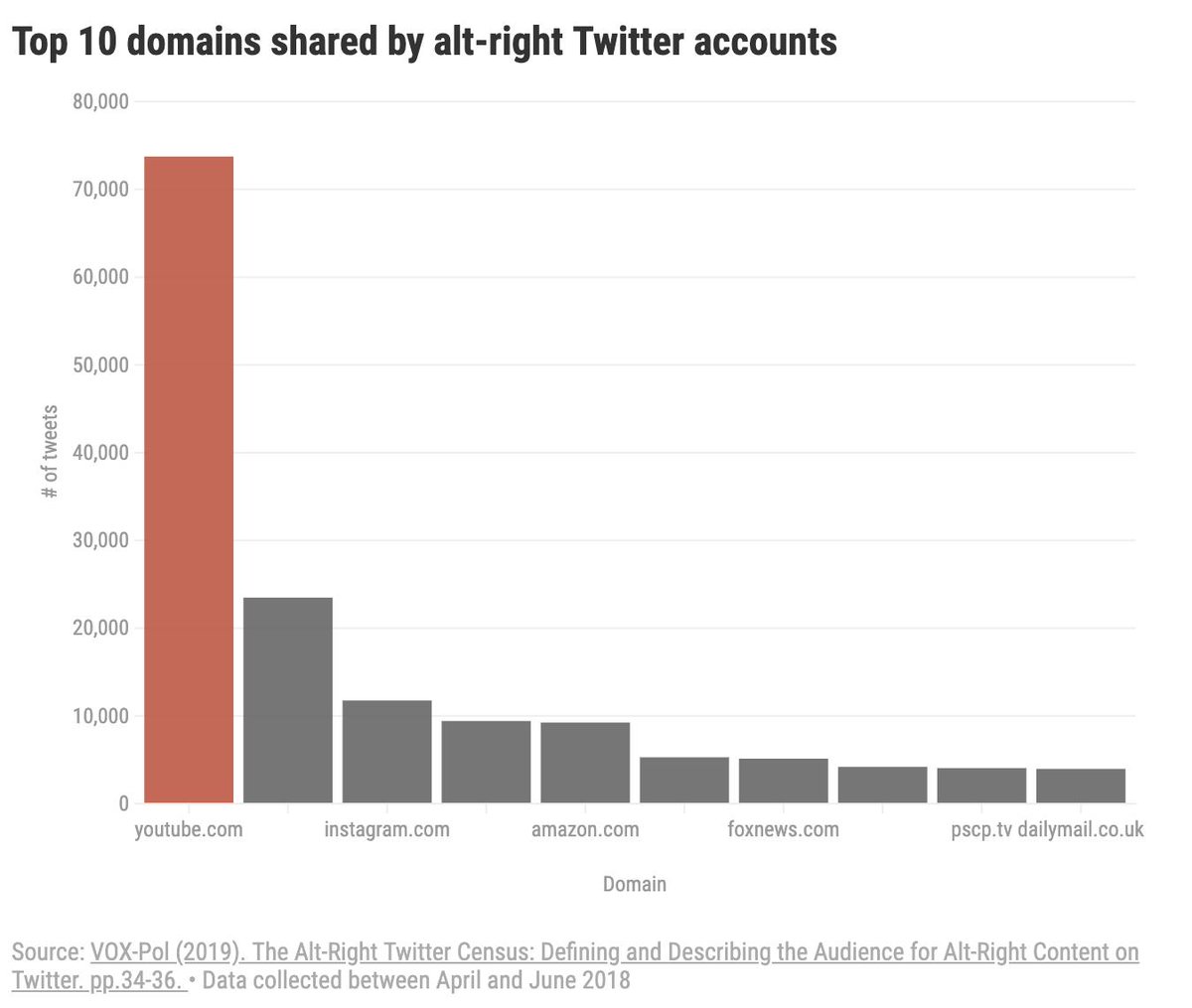

Parents searching for info about Zika are being served anti-vax conspiracies. Young conservative provocateurs are gaining followings on YT, getting elected to federal office, and using their platform to attack rivals.

nytimes.com/2019/08/11/wor…

Last year, she published a @datasociety report mapping how the "Alternative Influence Network" uses the platform to spread extremist political ideology.

datasociety.net/output/alterna…

nytimes.com/interactive/20…

Because these recommendation algorithms are proprietary, we don't have access to their inputs or design. And because they're driven by machine learning, even their engineers don't fully know how they work.

Some governments are pushing for greater accountability, but regulation of a multinational private tech company is an incredibly complicated matter.

This should concern you!

I hope to publish parts of my thesis soon. Let me know if you're interested in reading it.

YouTube is experimenting with ways to make its algorithm even more addictive: technologyreview.com/s/614432/youtu…