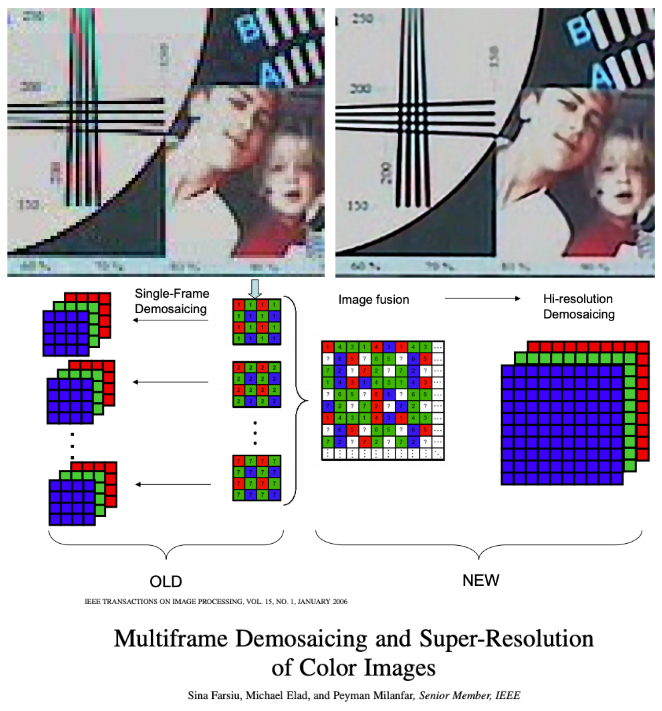

bme.duke.edu/faculty/sina-f…

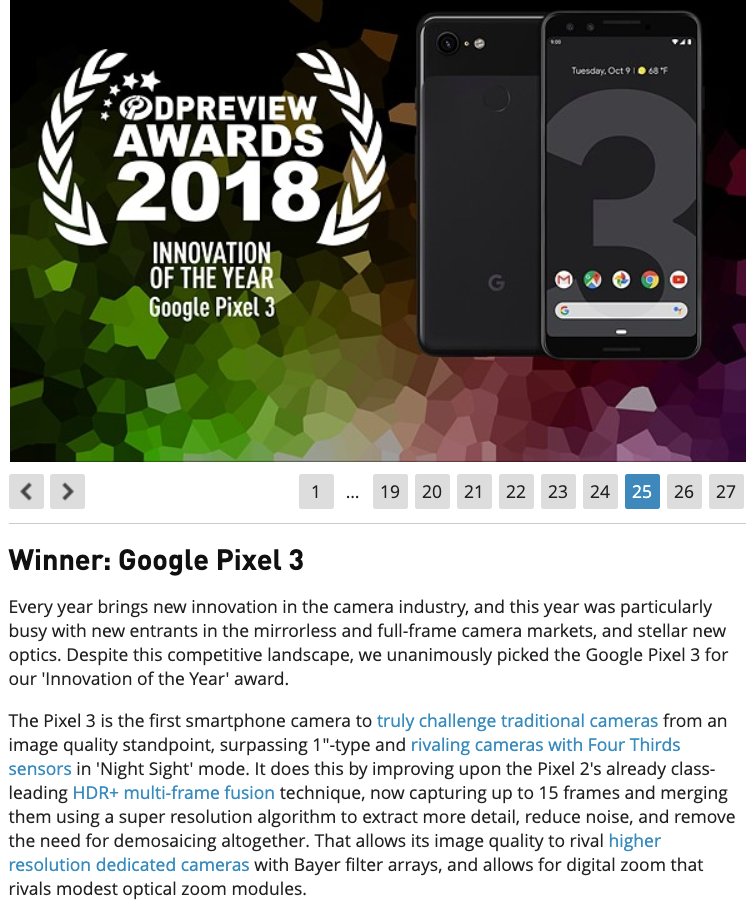

bit.ly/34jJwbZ

* Making a thing practical is harder than you think

* You’ll hear “it’ll never work” 1000 times. It’s OK

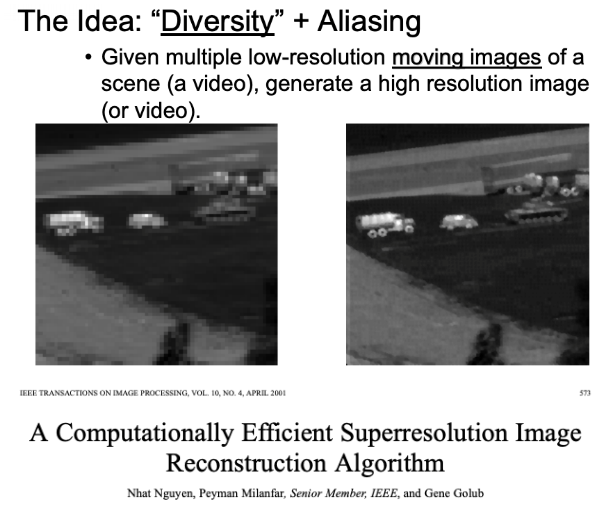

* Don’t dismiss good ideas because they’re not yet practical

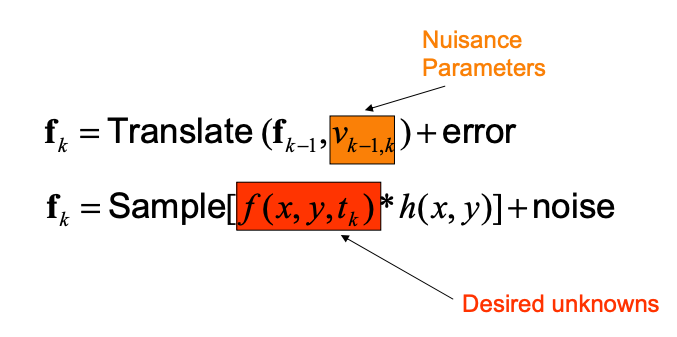

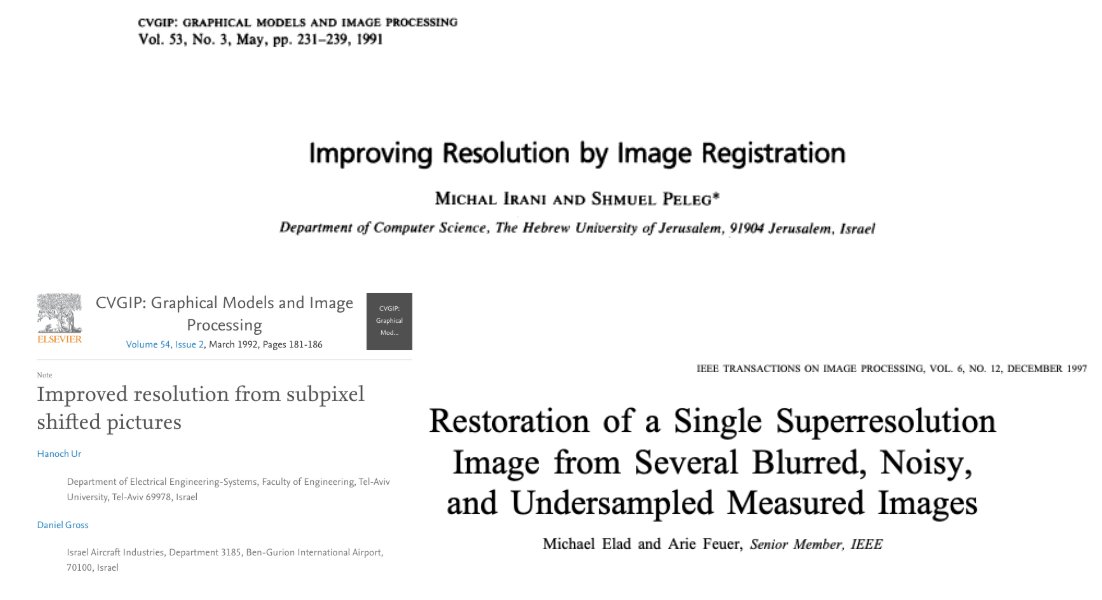

* There’s never a “main” inventor; it’s always a team sport

* It pays to be lucky